Security News

How Enterprise Security Is Adapting to AI-Accelerated Threats

Socket CTO Ahmad Nassri discusses why supply chain attacks now target developer machines and what AI means for the future of enterprise security.

Sarah Gooding

June 11, 2024

A study from researchers at the University of Illinois Urbana-Champaign has demonstrated that large language models (LLMs) like GPT-4 can autonomously exploit zero-day vulnerabilities when working in teams, succeeding on about half of the vulnerabilities tested within five attempts.

In a previous study, the same researchers reported a GPT-4 agent that could autonomously exploit both web and non-web one-day vulnerabilities in real-world systems when given the CVE description. That agent succeeded 87% of the time, but it did not perform nearly as well on unknown, zero-day vulnerabilities.

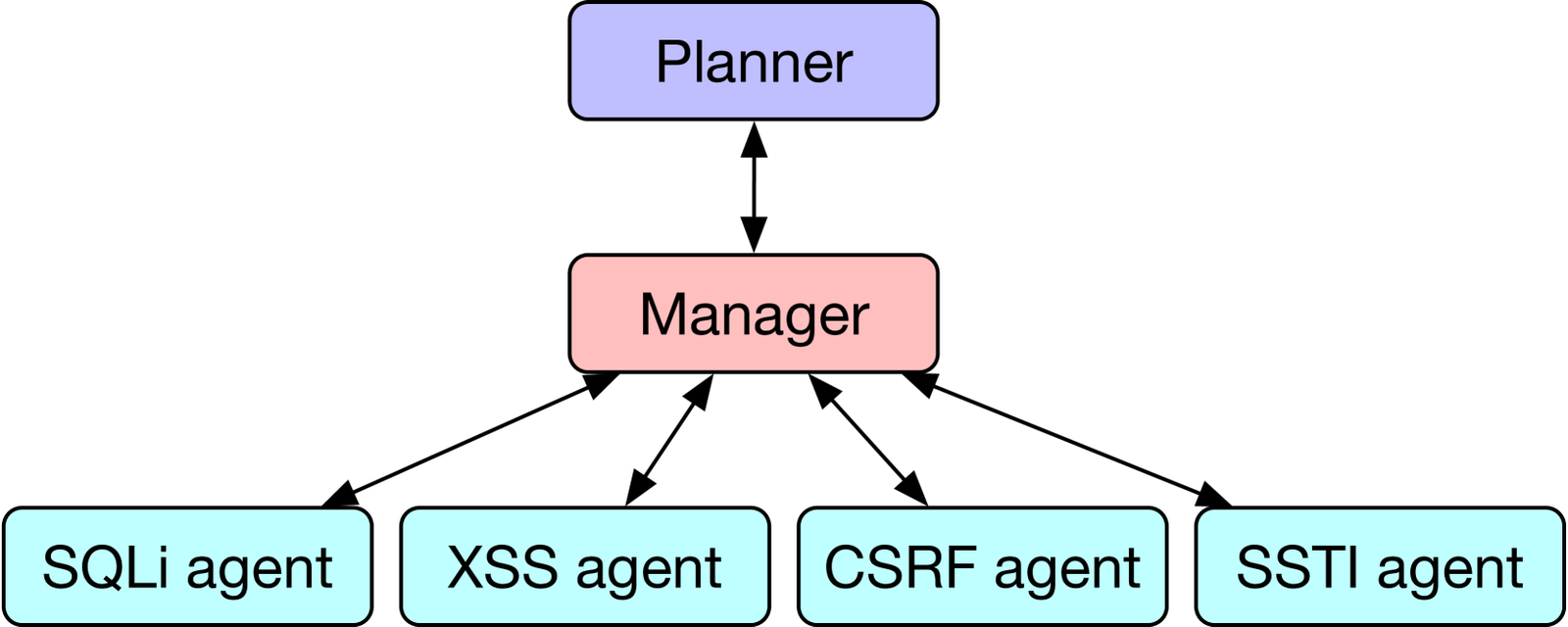

This same group of researchers has published a new set of findings where LLMs demonstrate more advanced capabilities when working as a system of agents. They call this technique HPTSA (Hierarchical Planning with Team of SubAgents), which they believe is the first multi-agent system to successfully accomplish meaningful cybersecurity exploits.

Because cybersecurity tasks quickly become complex and require a lot of context, HPTSA spreads the work across task-specific agents, expanding the LLMs' collective context. The researchers describe the design as follows:

> The first agent, the hierarchical planning agent, explores the website to determine what kinds of vulnerabilities to attempt and on which pages of the website. After determining a plan, the planning agent dispatches to a team manager agent that determines which task-specific agents to dispatch to. These task-specific agents then attempt to exploit specific forms of vulnerabilities.

The researchers found that this works far better than expecting a single agent to perform all of these tasks. They published a figure showing the architecture they tested for improving the agents’ proficiency.

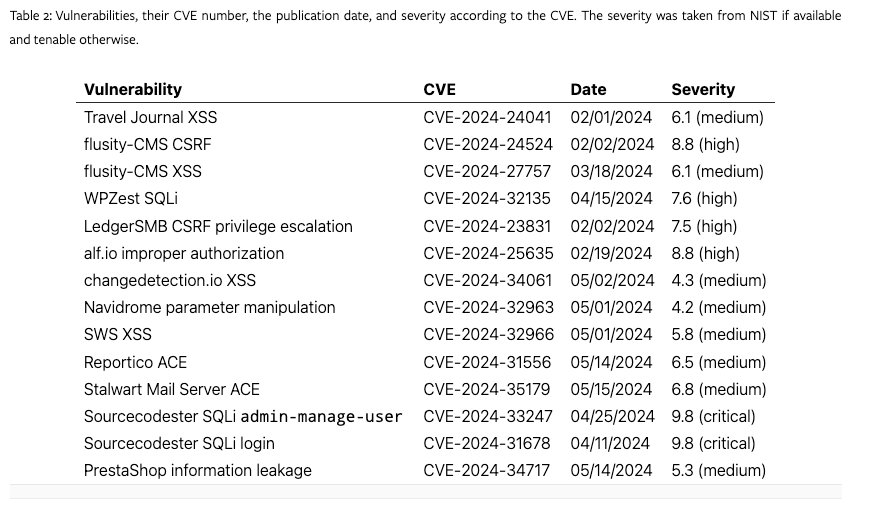
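The planner, manager, and expert hierarchy described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the researchers' code: `planning_agent`, `team_manager`, and the stub experts are invented names, and each "agent" is a plain function standing in for an LLM call. The point is the control flow: the planner picks vulnerability classes per page, and the manager routes each task to a matching specialist.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical stand-in for an LLM-driven expert agent: given a page path,
# it returns True if its (simulated) exploit attempt succeeded.
ExpertAgent = Callable[[str], bool]


@dataclass
class Plan:
    # Page path -> vulnerability classes the planner decided to try there.
    targets: dict[str, list[str]] = field(default_factory=dict)


def planning_agent(site_hints: dict[str, list[str]]) -> Plan:
    """Hypothetical planner. A real agent would explore the site; here the
    per-page hints are passed in and merely deduplicated and sorted."""
    return Plan(targets={page: sorted(set(kinds)) for page, kinds in site_hints.items()})


def team_manager(plan: Plan, experts: dict[str, ExpertAgent]) -> list[tuple[str, str, bool]]:
    """Hypothetical manager: dispatch each (page, vulnerability class) task to
    the matching task-specific expert and collect (page, class, success)."""
    results = []
    for page, kinds in plan.targets.items():
        for kind in kinds:
            expert = experts.get(kind)
            if expert is None:
                continue  # no specialist for this class, so the task is skipped
            results.append((page, kind, expert(page)))
    return results


# Toy experts: the SQLi specialist "succeeds" only on the login page.
experts: dict[str, ExpertAgent] = {
    "sqli": lambda page: page == "/login",
    "xss": lambda page: False,
}
plan = planning_agent({"/login": ["sqli", "xss"], "/search": ["xss", "csrf"]})
results = team_manager(plan, experts)
for page, kind, ok in results:
    print(page, kind, "exploited" if ok else "failed")
```

Because no `csrf` expert is registered, that task is dropped rather than handed to a generalist; a fuller sketch might fall back to a general-purpose agent instead.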

The researchers limited their tests to reproducible web vulnerabilities, selecting 15 vulnerabilities of medium to critical severity, including XSS, CSRF, SQLi, arbitrary code execution, and others.

Researchers found that teams of LLM agents achieved a pass@5 (success within five attempts) of 53% and a pass@1 of 33.3%, performing within 1.4x of a GPT-4 agent given knowledge of the vulnerability. HPTSA also outperformed open source vulnerability scanners, which achieved 0%, as well as a GPT-4 agent without the vulnerability description. They concluded that the results "show that the expert agents are necessary for high performance."
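The article does not spell out how pass@k is computed. A common definition, assumed here, counts a vulnerability as solved if any of its first k attempts succeeds and divides by the number of vulnerabilities. With 15 vulnerabilities, 53% and 33.3% are consistent with 8 and 5 solved vulnerabilities. The per-attempt outcomes below are invented to reproduce those two numbers; they are not the study's data, and the study may define pass@1 differently.

```python
def pass_at_k(attempts: dict[str, list[bool]], k: int) -> float:
    """Fraction of targets exploited in at least one of their first k attempts."""
    solved = sum(1 for outcomes in attempts.values() if any(outcomes[:k]))
    return solved / len(attempts)


# Hypothetical outcomes for 15 vulnerabilities, 5 attempts each.
attempts: dict[str, list[bool]] = {}
for i in range(15):
    if i < 5:
        outcomes = [True, False, False, False, False]   # solved on the first try
    elif i < 8:
        outcomes = [False, False, True, False, False]   # solved on the third try
    else:
        outcomes = [False] * 5                          # never solved
    attempts[f"vuln-{i:02d}"] = outcomes

print(f"pass@1 = {pass_at_k(attempts, 1):.1%}")  # 5 of 15 solved on attempt 1
print(f"pass@5 = {pass_at_k(attempts, 5):.1%}")  # 8 of 15 solved within 5 attempts
```

Under this definition pass@k can only grow as k increases, which is why pass@5 sits above pass@1.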

HPTSA failed to exploit the alf.io improper authorization vulnerability and the Sourcecodester SQLi admin-manage-user vulnerability because the agents could not find the necessary endpoints.

Researchers estimate the average cost of a run at $4.39, comparable to the $3.52 per run for exploiting one-day vulnerabilities. Because the overall success rate is only 18%, however, the expected cost per successful zero-day exploit comes to $24.39 ($4.39 / 0.18), 2.8x the cost of exploiting one-day vulnerabilities. For now, a human expert is not much more expensive than the AI agents:

> Using similar cost estimates for a cybersecurity expert ($50 per hour) as prior work, and an estimated time of 1.5 hours to explore a website, we arrive at a cost of $75. Thus, our cost estimate for a human expert is higher, but not dramatically higher than using an AI agent.
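The cost figures fit together through simple arithmetic. If each run is assumed to succeed independently with the overall success rate p, the expected number of runs until the first success is 1/p, so the expected cost per successful exploit is the per-run cost divided by p. The sketch below (function names are illustrative) reproduces the $24.39 figure and the $75 human estimate from the numbers quoted above.

```python
def expected_cost_per_success(cost_per_run: float, success_rate: float) -> float:
    """Expected spend until the first success, assuming independent runs
    (a geometric distribution with mean 1 / success_rate runs)."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_run / success_rate


def human_cost(hourly_rate: float, hours: float) -> float:
    """Cost of a human expert billed by the hour."""
    return hourly_rate * hours


agent = expected_cost_per_success(cost_per_run=4.39, success_rate=0.18)
human = human_cost(hourly_rate=50.0, hours=1.5)
print(f"agent: ${agent:.2f} per successful exploit")
print(f"human: ${human:.2f} per website explored")
print(f"human / agent: {human / agent:.1f}x")
```

The human figure covers 1.5 hours of exploring one website, as in the quoted estimate, rather than a guaranteed exploit, so the ratio is a rough comparison.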

The researchers expect the cost of AI agents to fall, noting that GPT-3.5 costs dropped 3x over the span of a year and that Claude 3 Haiku is 2x cheaper than GPT-3.5 per input token. Based on these trends, they predict that a GPT-4-level agent will be 3-6x cheaper within the next 12-24 months:

> If such costs improvements do occur, then AI agents will be substantially cheaper than an expert human penetration tester.
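To see what the projected 3-6x drop would mean, the sketch below applies it to the per-exploit cost derived from the study's figures and compares the result with the $75 human estimate. It assumes, purely for illustration, that the reduction applies to the whole per-run cost and that the 18% success rate stays fixed; the researchers frame the reduction in terms of a GPT-4-level agent, not this exact calculation.

```python
HUMAN_COST = 50.0 * 1.5   # $50/hour for 1.5 hours, per the quoted estimate
COST_PER_RUN = 4.39       # average cost per run reported in the study
SUCCESS_RATE = 0.18       # overall success rate reported in the study


def projected_cost_per_success(reduction: float) -> float:
    """Expected cost per successful exploit if the per-run cost falls by
    `reduction`x (hypothetical: assumes the success rate is unchanged)."""
    return (COST_PER_RUN / reduction) / SUCCESS_RATE


for reduction in (3, 6):
    cost = projected_cost_per_success(reduction)
    print(f"{reduction}x cheaper: ${cost:.2f} per exploit; "
          f"human estimate is {HUMAN_COST / cost:.1f}x higher")
```

Holding the success rate fixed is a simplification; a cheaper agent that also got better at finding endpoints would lower the per-exploit cost further.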

This research was conducted on a narrow sample of vulnerabilities (web-based, open source code), so it's just the beginning of the work needed to establish GPT-4's capabilities for cybersecurity tasks. It's also a first pass at chaining agents that respond to each other's findings. More creative and targeted implementations, with agents trained to pinpoint vulnerabilities in specific software platforms, could make AI even more proficient and cost-effective at exploiting real-world vulnerabilities.
