@directus-labs/ai-image-moderation-operation


@directus-labs/ai-image-moderation-operation - npm Package Compare versions

Comparing version

@@ -1,43 +0,1 @@

```js
import { request, log } from 'directus:api';

var api = {
  id: 'directus-labs-image-moderation-operation',
  handler: async ({ apiKey, url, threshold = 80 }) => {
    try {
      const response = await request('https://api.clarifai.com/v2/users/clarifai/apps/main/models/moderation-recognition/outputs', {
        method: 'POST',
        headers: {
          Authorization: `Key ${apiKey}`,
          'Content-Type': 'application/json',
        },
        body: JSON.stringify({
          inputs: [{
            data: {
              image: { url }
            }
          }]
        })
      });
      if(response.status != 200) throw new Error('An error occurred when accessing Clarifai')
      const concepts = response.data.outputs[0].data.concepts
        .filter(c => c.name != 'safe')
        .map(c => ({
          name: c.name,
          value: (Number(c.value) * 100).toFixed(2)
        }));
      const flags = concepts
        .filter(c => c.value >= threshold / 100)
        .map(c => c.name);
      return { concepts, flags }
    } catch(error) {
      log(JSON.stringify(error));
      throw new Error(error.message)
    }
  },
};

export { api as default };
```
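In the handler above, each concept's `value` is already converted to a percentage string (for example `"85.00"`), but the flag filter compares it against `threshold / 100` (0.8 for the default of 80), so almost any non-zero score ends up flagged. The minified build carries the same expression (`a.value>=i/100`). A minimal sketch of the post-processing with both sides expressed in percent, assuming Clarifai returns concept values between 0 and 1 as the handler expects; `scoreConcepts` is a hypothetical name, not part of the package:

```javascript
// Hypothetical helper mirroring the handler's post-processing of Clarifai concepts.
// Assumes each concept has a `name` and a numeric `value` between 0 and 1.
function scoreConcepts(rawConcepts, threshold = 80) {
  const concepts = rawConcepts
    .filter((c) => c.name !== 'safe')
    .map((c) => ({
      name: c.name,
      // Percentage string with two decimals, e.g. "85.00", as in the original handler.
      value: (Number(c.value) * 100).toFixed(2),
    }));

  // Compare percent against percent: `value` is already scaled by 100,
  // so the threshold (1-99) is used as-is instead of being divided by 100.
  const flags = concepts
    .filter((c) => Number(c.value) >= threshold)
    .map((c) => c.name);

  return { concepts, flags };
}

const sample = [
  { name: 'safe', value: 0.9 },
  { name: 'suggestive', value: 0.85 },
  { name: 'drug', value: 0.05 },
];
console.log(scoreConcepts(sample).flags); // [ 'suggestive' ]
```

With the original `threshold / 100` comparison, `drug` at 5% would also be flagged, since `5 >= 0.8`.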

```js
import{request as a,log as e}from"directus:api";var t={id:"directus-labs-image-moderation-operation",handler:async({apiKey:t,url:r,threshold:i=80})=>{try{const e=await a("https://api.clarifai.com/v2/users/clarifai/apps/main/models/moderation-recognition/outputs",{method:"POST",headers:{Authorization:`Key ${t}`,"Content-Type":"application/json"},body:JSON.stringify({inputs:[{data:{image:{url:r}}}]})});if(200!=e.status)throw new Error("An error occurred when accessing Clarifai");const o=e.data.outputs[0].data.concepts.filter((a=>"safe"!=a.name)).map((a=>({name:a.name,value:(100*Number(a.value)).toFixed(2)}))),n=o.filter((a=>a.value>=i/100)).map((a=>a.name));return{concepts:o,flags:n}}catch(a){throw e(JSON.stringify(a)),new Error(a.message)}}};export{t as default};
```
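The Clarifai call can be kept separate from the sandboxed `request` function, which only exists inside Directus through `directus:api`. The sketch below returns the URL and options the handler passes to `request`; the endpoint, headers, and body shape are copied from the source, while `buildModerationRequest` and `CLARIFAI_MODERATION_URL` are hypothetical names:

```javascript
// Hypothetical constant: the endpoint hard-coded in the handler.
const CLARIFAI_MODERATION_URL =
  'https://api.clarifai.com/v2/users/clarifai/apps/main/models/moderation-recognition/outputs';

// Hypothetical helper: builds the same request the handler sends.
function buildModerationRequest(apiKey, imageUrl) {
  return {
    url: CLARIFAI_MODERATION_URL,
    options: {
      method: 'POST',
      headers: {
        Authorization: `Key ${apiKey}`,
        'Content-Type': 'application/json',
      },
      // One input per image; the image is referenced by URL rather than uploaded.
      body: JSON.stringify({ inputs: [{ data: { image: { url: imageUrl } } }] }),
    },
  };
}
```

Inside the handler this could be used as `const { url: endpoint, options } = buildModerationRequest(apiKey, url); await request(endpoint, options);`, which leaves the request construction testable outside Directus.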

@@ -1,60 +0,1 @@ | ||

| var app = { | ||

| id: 'directus-labs-image-moderation-operation', | ||

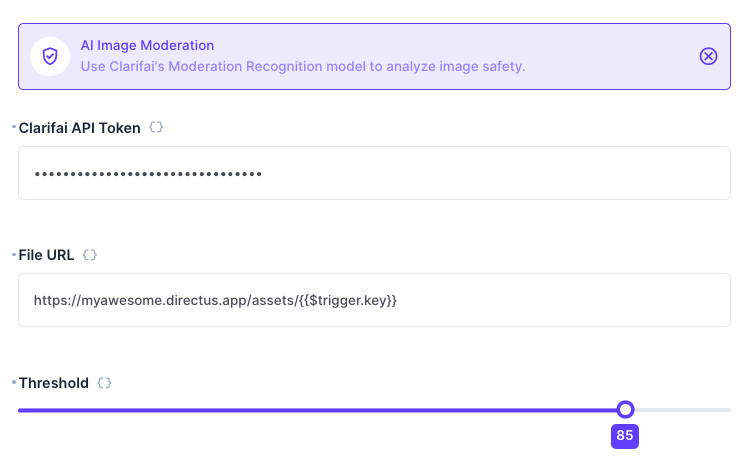

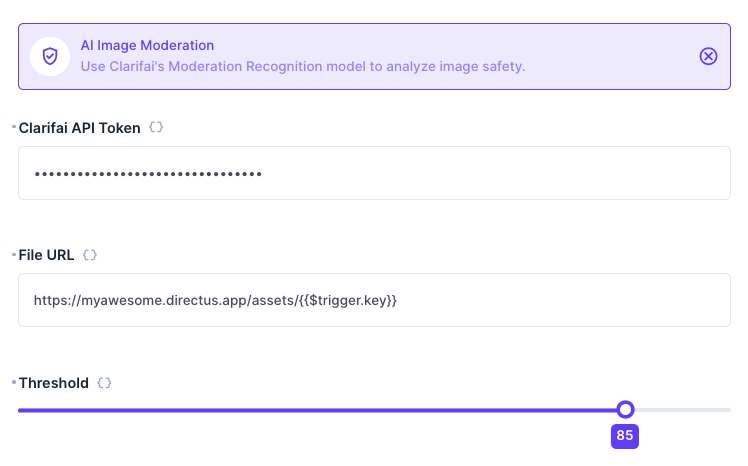

| name: 'AI Image Moderation', | ||

| icon: 'verified_user', | ||

| description: 'Use Clarifai\'s Moderation Recognition model to analyze image safety.', | ||

| overview: ({ url, threshold }) => [ | ||

| { | ||

| label: 'URL', | ||

| text: url, | ||

| }, | ||

| { | ||

| label: 'Threshold', | ||

| text: threshold || 80 + '%', | ||

| }, | ||

| ], | ||

| options: [ | ||

| { | ||

| field: 'apiKey', | ||

| name: 'Clarifai API Token', | ||

| type: 'string', | ||

| required: true, | ||

| meta: { | ||

| width: 'full', | ||

| interface: 'input', | ||

| options: { | ||

| masked: true, | ||

| }, | ||

| }, | ||

| }, | ||

| { | ||

| field: 'url', | ||

| name: 'File URL', | ||

| type: 'string', | ||

| required: true, | ||

| meta: { | ||

| width: 'full', | ||

| interface: 'input', | ||

| }, | ||

| }, | ||

| { | ||

| field: 'threshold', | ||

| name: 'Threshold', | ||

| type: 'integer', | ||

| schema: { | ||

| default_value: 80 | ||

| }, | ||

| meta: { | ||

| width: 'full', | ||

| interface: 'slider', | ||

| options: { | ||

| max: 99, | ||

| min: 1, | ||

| step: 1 | ||

| } | ||

| }, | ||

| } | ||

| ], | ||

| }; | ||

| export { app as default }; | ||

```js
var e={id:"directus-labs-image-moderation-operation",name:"AI Image Moderation",icon:"verified_user",description:"Use Clarifai's Moderation Recognition model to analyze image safety.",overview:({url:e,threshold:i})=>[{label:"URL",text:e},{label:"Threshold",text:i||"80%"}],options:[{field:"apiKey",name:"Clarifai API Token",type:"string",required:!0,meta:{width:"full",interface:"input",options:{masked:!0}}},{field:"url",name:"File URL",type:"string",required:!0,meta:{width:"full",interface:"input"}},{field:"threshold",name:"Threshold",type:"integer",schema:{default_value:80},meta:{width:"full",interface:"slider",options:{max:99,min:1,step:1}}}]};export{e as default};
```
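The `overview` entry `threshold || 80 + '%'` parses as `threshold || (80 + '%')`, because `+` binds more tightly than `||`; the minified build above confirms this reading (`i||"80%"`). As a result, the percent sign only appears when no threshold is set. A minimal sketch contrasting the two readings; both function names are hypothetical:

```javascript
// Hypothetical: reproduces the label exactly as the source computes it.
// `80 + '%'` is evaluated first, so a set threshold is returned as a bare number.
function thresholdLabelOriginal(threshold) {
  return threshold || 80 + '%';
}

// Hypothetical: apply the default first, then append the percent sign.
function thresholdLabel(threshold) {
  return (threshold || 80) + '%';
}

console.log(thresholdLabelOriginal(50)); // 50
console.log(thresholdLabel(50)); // 50%
```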

```json
{
  "name": "@directus-labs/ai-image-moderation-operation",
  "description": "Use Clarifai's Moderation Recognition Model to analyze image safety.",
  "icon": "extension",
  "version": "1.0.0",
  "keywords": [
    "directus",
    "directus-extension",
    "directus-extension-operation"
  ],
  "type": "module",
  "license": "MIT",
  "files": [
    "dist"
  ],
  "directus:extension": {
    "type": "operation",
    "path": {
      "app": "dist/app.js",
      "api": "dist/api.js"
    },
    "source": {
      "app": "src/app.js",
      "api": "src/api.js"
    },
    "host": "^10.10.0",
    "sandbox": {
      "enabled": true,
      "requestedScopes": {
        "log": {},
        "request": {
          "methods": ["POST"],
          "urls": [
            "https://api.clarifai.com/v2/**"
          ]
        }
      }
    }
  },
  "scripts": {
    "build": "directus-extension build",
    "dev": "directus-extension build -w --no-minify",
    "link": "directus-extension link"
  },
  "devDependencies": {
    "@directus/extensions-sdk": "11.0.1",
    "vue": "^3.4.21"
  }
}
```

```json
{
  "name": "@directus-labs/ai-image-moderation-operation",
  "description": "Use Clarifai's Moderation Recognition Model to analyze image safety.",
  "icon": "extension",
  "version": "1.0.1",
  "keywords": [
    "directus",
    "directus-extension",
    "directus-extension-operation"
  ],
  "type": "module",
  "license": "MIT",
  "files": [
    "dist"
  ],
  "directus:extension": {
    "type": "operation",
    "path": {
      "app": "dist/app.js",
      "api": "dist/api.js"
    },
    "source": {
      "app": "src/app.js",
      "api": "src/api.js"
    },
    "host": "^10.10.0",
    "sandbox": {
      "enabled": true,
      "requestedScopes": {
        "log": {},
        "request": {
          "methods": [
            "POST"
          ],
          "urls": [
            "https://api.clarifai.com/v2/**"
          ]
        }
      }
    }
  },
  "scripts": {
    "build": "directus-extension build",
    "dev": "directus-extension build -w --no-minify",
    "link": "directus-extension link"
  },
  "devDependencies": {
    "@directus/extensions-sdk": "11.0.1",
    "vue": "^3.4.21"
  }
}
```
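The `sandbox.requestedScopes.request` entry declares that the extension needs to send `POST` requests to URLs matching `https://api.clarifai.com/v2/**`, a pattern that covers the moderation endpoint the handler calls. The sketch below illustrates such a check under an assumption about the glob syntax (`**` matches across `/`, `*` matches within one path segment); it is not Directus's own matcher, and `globToRegExp` and `isRequestAllowed` are hypothetical names:

```javascript
// Hypothetical glob-to-RegExp conversion. Assumption: "**" matches any characters
// including "/", while a single "*" matches within one path segment.
function globToRegExp(pattern) {
  let source = '';
  for (let i = 0; i < pattern.length; i++) {
    const ch = pattern[i];
    if (ch === '*' && pattern[i + 1] === '*') {
      source += '.*';
      i++; // consume the second "*"
    } else if (ch === '*') {
      source += '[^/]*';
    } else {
      // Escape characters that are special in regular expressions (e.g. ".").
      source += ch.replace(/[.+?^${}()|[\]\\]/g, '\\$&');
    }
  }
  // Anchor both ends so the whole URL must match, not just a substring.
  return new RegExp(`^${source}$`);
}

// Scope copied from package.json.
const scope = { methods: ['POST'], urls: ['https://api.clarifai.com/v2/**'] };

// Hypothetical: a request is allowed only if both method and URL are in scope.
function isRequestAllowed(method, url, { methods, urls }) {
  return methods.includes(method) && urls.some((p) => globToRegExp(p).test(url));
}
```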

@@ -5,7 +5,7 @@ # AI Image Moderation Operation

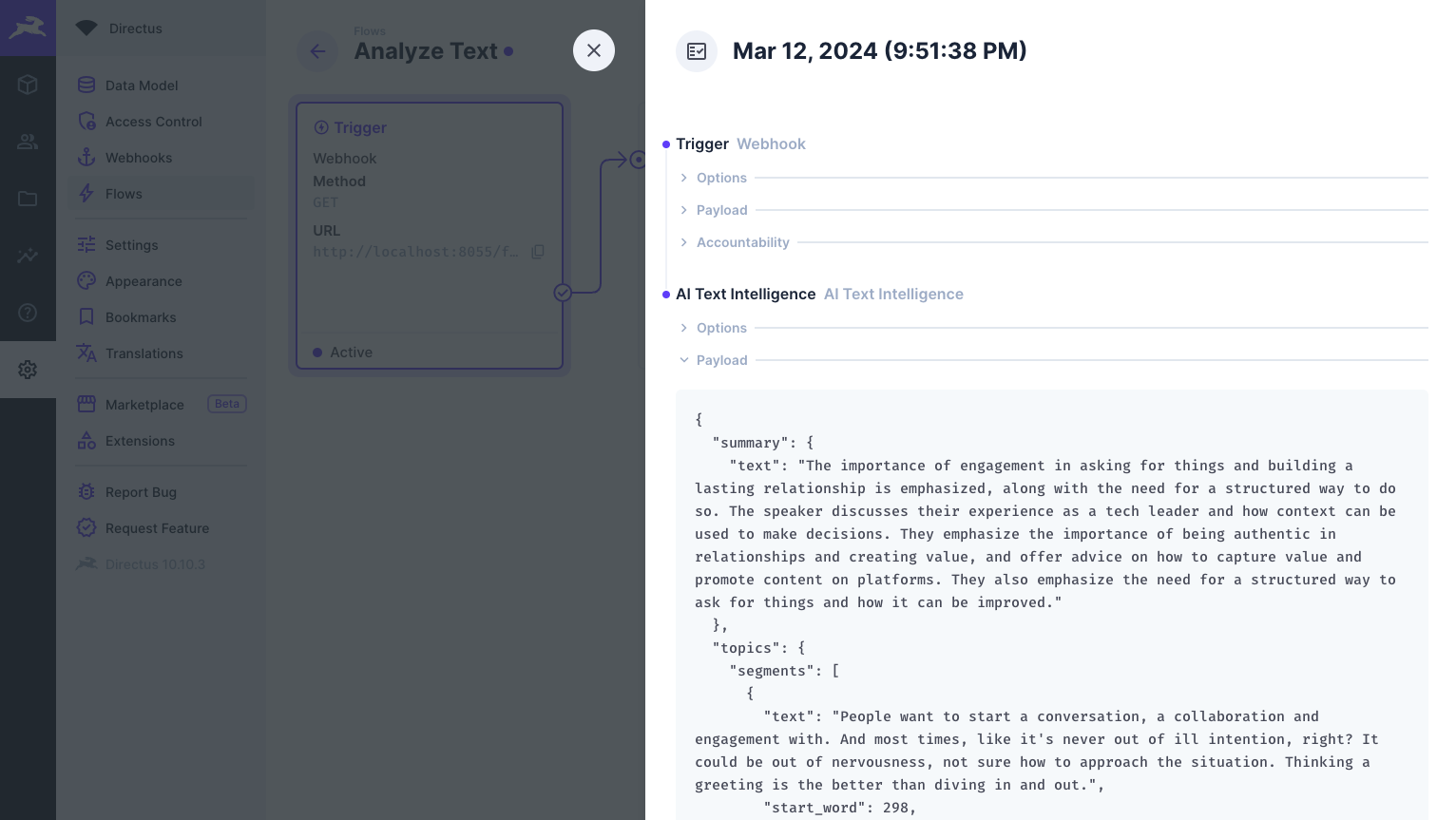

This operation contains three configuration options: a [Clarifai API Key](https://clarifai.com/settings/security), a link to a file, and a threshold percentage at which concepts are 'flagged'. It returns a JSON object containing a score for each concept and an array of the concepts that meet or exceed the threshold.
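Per the README and the handler source, the result has a `concepts` array (one percentage score per non-`safe` concept) and a `flags` array of concept names. A later step in a flow might act on it roughly as follows; the concept names and scores in `exampleResult` are illustrative only, and `summarizeModeration` is a hypothetical helper, not part of the extension:

```javascript
// Illustrative result shape: scores are percentage strings, flags are concept names.
const exampleResult = {
  concepts: [
    { name: 'suggestive', value: '91.20' },
    { name: 'gore', value: '0.40' },
  ],
  flags: ['suggestive'],
};

// Hypothetical downstream check: any flagged concept means rejection; also report
// the highest-scoring concept (null when no concept scores above zero).
function summarizeModeration(result) {
  const top = result.concepts.reduce(
    (best, c) => (Number(c.value) > Number(best.value) ? c : best),
    { name: null, value: '0' },
  );
  return { rejected: result.flags.length > 0, topConcept: top.name };
}

console.log(summarizeModeration(exampleResult)); // { rejected: true, topConcept: 'suggestive' }
```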

@@ -12,0 +12,0 @@ ## Output

New alerts

New author

Supply chain risk: A new npm collaborator published a version of the package for the first time. New collaborators are usually benign additions to a project, but they do indicate a change to the package's security surface area.

Found 1 instance in 1 package

Trivial Package

Supply chain risk: Packages with fewer than 10 lines of code are easily copied into your own project and may not warrant the additional supply chain risk of an external dependency.

Found 1 instance in 1 package

Minified code

Quality: This package contains minified code. This may be harmless where minified code is included in packaged libraries; however, packages on npm should not minify code.

Found 1 instance in 1 package

Worsened metrics

| Metric | Value | Change |
|---|---|---|
| Total package byte size | 4703 | -8.5% |
| Lines of code | 6 | -93.75% |
| Number of low quality alerts | 2 | 100% |
| Number of medium supply chain risk alerts | 2 | Infinity% |
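Assuming each percentage is the relative change (new - old) / old, a metric that rises from zero has no finite relative change, and JavaScript evaluates `2 / 0` to `Infinity`, which is consistent with the `Infinity%` shown for the supply chain alerts. The exact formula used by the tool is not stated on this page; `percentChange` is a hypothetical name:

```javascript
// Hypothetical relative change in percent, assuming (new - old) / old.
// A zero baseline with a positive new value yields Infinity; 0 -> 0 yields NaN.
function percentChange(previous, next) {
  return ((next - previous) / previous) * 100;
}

console.log(percentChange(1, 2)); // 100
console.log(percentChange(0, 2)); // Infinity
```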