
@gram-ai/sdk


A developer-friendly TypeScript SDK for interacting with Gram toolsets. Gram lets you use your agentic tools across a variety of frameworks and protocols. Gram tools work with almost any model that supports function calling via a Chat Completions-style or Responses-style API.
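Chat Completions-style and Responses-style APIs describe the same function tool with slightly different wrappers. The sketch below shows both wrappers. It assumes a tool is described by a `name`, a `description` and a JSON Schema `parameters` object, which are the fields the function-calling adapter is described as exposing later in this README. The `ToolSpec` type, the two converter functions and the `get_weather` tool are hypothetical and are not part of `@gram-ai/sdk`.

```typescript
// Hypothetical minimal tool description: name, human-readable description,
// and a JSON Schema object describing the arguments.
interface ToolSpec {
  name: string;
  description: string;
  parameters: Record<string, unknown>;
}

// Chat Completions style: the function fields are nested under `function`.
interface ChatCompletionsTool {
  type: "function";
  function: ToolSpec;
}

// Responses style: the function fields sit at the top level.
interface ResponsesTool extends ToolSpec {
  type: "function";
}

function toChatCompletionsTool(spec: ToolSpec): ChatCompletionsTool {
  return {
    type: "function",
    function: {
      name: spec.name,
      description: spec.description,
      parameters: spec.parameters,
    },
  };
}

function toResponsesTool(spec: ToolSpec): ResponsesTool {
  return {
    type: "function",
    name: spec.name,
    description: spec.description,
    parameters: spec.parameters,
  };
}

// Example: a hypothetical weather lookup tool.
const getWeather: ToolSpec = {
  name: "get_weather",
  description: "Look up the current weather for a city",
  parameters: {
    type: "object",
    properties: { city: { type: "string" } },
    required: ["city"],
  },
};

const chatTool = toChatCompletionsTool(getWeather);
const responsesTool = toResponsesTool(getWeather);
console.log(JSON.stringify(chatTool));
console.log(JSON.stringify(responsesTool));
```

Only the envelope differs between the two styles, so the same schema can serve either style of API.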

The SDK can be installed with npm, pnpm, bun, or yarn:

```bash
# npm
npm add @gram-ai/sdk

# pnpm
pnpm add @gram-ai/sdk

# bun
bun add @gram-ai/sdk

# yarn: Yarn does not install peer dependencies automatically,
# so zod must be installed explicitly.
yarn add @gram-ai/sdk zod
```

> [!NOTE]
> This package is published as an ES Module (ESM) only. For applications using CommonJS, use `await import("@gram-ai/sdk")` to import and use this package.

```typescript
// Vercel AI SDK
import { generateText } from "ai";
import { VercelAdapter } from "@gram-ai/sdk/vercel";
import { createOpenAI } from "@ai-sdk/openai";

const key = process.env.GRAM_API_KEY;

const vercelAdapter = new VercelAdapter({ apiKey: key });

const openai = createOpenAI({
  apiKey: process.env.OPENAI_API_KEY,
});

// Fetch the Gram toolset as Vercel AI SDK tools.
const tools = await vercelAdapter.tools({
  project: "default",
  toolset: "default",
  environment: "default",
});

const result = await generateText({
  model: openai("gpt-4"),
  tools,
  // Let the model call tools and then respond, for up to 5 steps.
  // (AI SDK 5 replaces maxSteps with stopWhen: stepCountIs(5).)
  maxSteps: 5,
  prompt: "Can you tell me what tools you have available?",
});

console.log(result.text);
```

```typescript
// LangChain
import { LangchainAdapter } from "@gram-ai/sdk/langchain";
import { ChatOpenAI } from "@langchain/openai";
import { createOpenAIFunctionsAgent, AgentExecutor } from "langchain/agents";
import { pull } from "langchain/hub";
import { ChatPromptTemplate } from "@langchain/core/prompts";

const key = process.env.GRAM_API_KEY;

const langchainAdapter = new LangchainAdapter({ apiKey: key });

const llm = new ChatOpenAI({
  modelName: "gpt-4",
  temperature: 0,
  openAIApiKey: process.env.OPENAI_API_KEY,
});

const tools = await langchainAdapter.tools({
  project: "default",
  toolset: "default",
  environment: "default",
});

// Pull a prompt template for an OpenAI functions agent from the LangChain Hub.
const prompt = await pull<ChatPromptTemplate>(
  "hwchase17/openai-functions-agent"
);

const agent = await createOpenAIFunctionsAgent({
  llm,
  tools,
  prompt,
});

const executor = new AgentExecutor({
  agent,
  tools,
  verbose: false,
});

const result = await executor.invoke({
  input: "Can you tell me what tools you have available?",
});

console.log(result.output);
```

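```typescript
// OpenAI SDK (Chat Completions)
import { OpenAI } from "openai";
import { OpenAIAdapter } from "@gram-ai/sdk/openai";

const key = process.env.GRAM_API_KEY;

const openaiAdapter = new OpenAIAdapter({ apiKey: key });

const openai = new OpenAI({
  apiKey: process.env.OPENAI_API_KEY,
});

const tools = await openaiAdapter.tools({
  project: "default",
  toolset: "default",
  environment: "default",
});

// Chat Completions takes a model name string and a messages array.
const result = await openai.chat.completions.create({
  model: "gpt-4",
  tools,
  messages: [
    { role: "user", content: "Can you tell me what tools you have available?" },
  ],
});

// This call does not run tools itself: if the model decides to call one,
// the request appears in result.choices[0].message.tool_calls.
console.log(result.choices[0].message.content);
```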
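Unlike `generateText` with `maxSteps`, a single `chat.completions.create` call does not execute tools. When the model wants a tool, the reply carries `tool_calls`. Your code then has to run each call and send the result back as a `role: "tool"` message keyed by the call's `id`. The loop below is a sketch of that round trip. It uses trimmed-down local versions of the Chat Completions message shapes. `complete` and `runTool` are hypothetical injected functions, for example a wrapper around `openai.chat.completions.create` and a dispatcher over Gram tools. This keeps the sketch runnable without network access.

```typescript
// Trimmed-down versions of the Chat Completions message shapes.
interface ToolCall {
  id: string;
  type: "function";
  function: { name: string; arguments: string }; // arguments is a JSON string
}

type AssistantMessage = {
  role: "assistant";
  content: string | null;
  tool_calls?: ToolCall[];
};

type Message =
  | { role: "system" | "user"; content: string }
  | AssistantMessage
  | { role: "tool"; tool_call_id: string; content: string };

// Hypothetical injected dependencies.
type Complete = (messages: Message[]) => Promise<AssistantMessage>;
type RunTool = (name: string, args: unknown) => Promise<unknown>;

async function runWithTools(
  prompt: string,
  complete: Complete,
  runTool: RunTool,
  maxSteps = 5,
): Promise<string | null> {
  const messages: Message[] = [{ role: "user", content: prompt }];
  for (let step = 0; step < maxSteps; step++) {
    const reply = await complete(messages);
    messages.push(reply);
    // No tool calls means the model produced its final answer.
    if (!reply.tool_calls || reply.tool_calls.length === 0) {
      return reply.content;
    }
    // Run each requested tool and feed the result back, keyed by call id.
    for (const call of reply.tool_calls) {
      const result = await runTool(call.function.name, JSON.parse(call.function.arguments));
      messages.push({
        role: "tool",
        tool_call_id: call.id,
        content: JSON.stringify(result ?? null),
      });
    }
  }
  throw new Error(`No final answer after ${maxSteps} steps`);
}
```

Injecting `complete` and `runTool` keeps the loop independent of any particular client version. It also makes the loop easy to exercise with fakes.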

```typescript
// Plain function calling
import { FunctionCallingAdapter } from "@gram-ai/sdk/functioncalling";

const key = process.env.GRAM_API_KEY ?? "";

// Vanilla client that matches the function-calling interface, for direct use
// with model provider APIs.
const functionCallingAdapter = new FunctionCallingAdapter({ apiKey: key });

const tools = await functionCallingAdapter.tools({
  project: "default",
  toolset: "default",
  environment: "default",
});

// Each tool exposes a name, description, parameters, and an execute and
// aexecute (async) function.
console.log(tools[0].name);
console.log(tools[0].description);
console.log(tools[0].parameters);
console.log(tools[0].execute);
```
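When a model returns a tool call, the tool can be looked up by name and run. The sketch below assumes each tool has a `name` and an async `execute` that accepts the parsed arguments object. That `execute` signature is an assumption, so check the SDK's type definitions before relying on it. `FunctionTool`, `buildDispatcher` and the `echo` tool are hypothetical stand-ins.

```typescript
// Hypothetical shape of a function-calling tool; the real execute signature
// in @gram-ai/sdk may differ.
interface FunctionTool {
  name: string;
  description: string;
  parameters: Record<string, unknown>;
  execute: (args: Record<string, unknown>) => Promise<unknown>;
}

// Index tools by name once, then route each model tool call to its tool.
function buildDispatcher(tools: FunctionTool[]) {
  const byName = new Map<string, FunctionTool>();
  for (const tool of tools) {
    if (byName.has(tool.name)) {
      throw new Error(`Duplicate tool name: ${tool.name}`);
    }
    byName.set(tool.name, tool);
  }

  // rawArguments is the JSON string a model returns for a tool call.
  return async (name: string, rawArguments: string): Promise<unknown> => {
    const tool = byName.get(name);
    if (!tool) {
      throw new Error(`Model requested unknown tool: ${name}`);
    }
    const args = rawArguments.trim() === "" ? {} : JSON.parse(rawArguments);
    return tool.execute(args);
  };
}

// Example with a hypothetical local tool standing in for a Gram tool.
const echo: FunctionTool = {
  name: "echo",
  description: "Return the input text unchanged",
  parameters: { type: "object", properties: { text: { type: "string" } } },
  execute: async (args) => args["text"],
};

const dispatch = buildDispatcher([echo]);
```

Indexing by name up front catches duplicate tool names early, before any model traffic. It also turns each lookup into a single map access.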

If you prefer, you can pass your own environment variables into tool calls instead of using hosted Gram environments:

```typescript
import { generateText } from "ai";
import { VercelAdapter } from "@gram-ai/sdk/vercel";
import { createOpenAI } from "@ai-sdk/openai";

const key = process.env.GRAM_API_KEY;

// Supply your own environment variables to the tools.
const vercelAdapter = new VercelAdapter({
  apiKey: key,
  environmentVariables: {
    MY_TOOL_TOKEN: "VALUE",
  },
});

const openai = createOpenAI({
  apiKey: process.env.OPENAI_API_KEY,
});

const tools = await vercelAdapter.tools({
  project: "default",
  toolset: "default",
  environment: "default",
});

const result = await generateText({
  model: openai("gpt-4"),
  tools,
  maxSteps: 5,
  prompt: "Can you tell me what tools you have available?",
});

console.log(result.text);
```
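Hard-coding a value such as `"VALUE"` is fine for a demo. In practice you will likely want to read tool credentials from your own configuration. The helper below is hypothetical and not part of the SDK. It copies a fixed list of variable names out of an environment map and fails fast if any of them are missing. You would call it with `process.env` and pass the result as `environmentVariables`.

```typescript
// Copy the named variables out of an environment map (e.g. process.env),
// throwing if any are missing or empty so misconfiguration surfaces early.
function pickEnv(
  names: readonly string[],
  env: Record<string, string | undefined>,
): Record<string, string> {
  const picked: Record<string, string> = {};
  const missing: string[] = [];
  for (const name of names) {
    const value = env[name];
    if (value === undefined || value === "") {
      missing.push(name);
    } else {
      picked[name] = value;
    }
  }
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return picked;
}

// Usage sketch (MY_TOOL_TOKEN is the example name from the snippet above):
// const vercelAdapter = new VercelAdapter({
//   apiKey: process.env.GRAM_API_KEY,
//   environmentVariables: pickEnv(["MY_TOOL_TOKEN"], process.env),
// });
```

Only the listed names are forwarded, so unrelated secrets in the process environment are not passed to the tools.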

Gram can also expose any toolset as a hosted MCP server that you can use right away from an MCP client configuration:

```json
{
  "mcpServers": {
    "GramTest": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "https://app.getgram.ai/mcp/default/default/default",
        "--allow-http",
        "--header",
        "Authorization:${GRAM_KEY}"
      ],
      "env": {
        "GRAM_KEY": "Bearer <your-key-here>"
      }
    }
  }
}
```

You can also give a server a unique slug and make it publicly available, so that callers pass their own credentials in a header:

```json
{
  "mcpServers": {
    "GramSlack": {
      "command": "npx",
      "args": [
        "mcp-remote",
        "https://app.getgram.ai/mcp/speakeasy-team-default",
        "--allow-http",
        "--header",
        "MCP-SPEAKEASY_YOUR_TOOLSET_CRED:${VALUE}"
      ]
    }
  }
}
```
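If you generate client configurations for several toolsets, a small builder keeps the entries consistent. The sketch below reproduces the two `mcp-remote` entries above from a URL, a header name and an environment variable. `buildMcpRemoteServer` and its option names are hypothetical. The builder writes the header as `Name:${VAR}` with no space, mirroring the examples. As far as we know, the `mcp-remote` README recommends this form because some MCP clients mishandle spaces inside `args`. Any value containing spaces, such as `Bearer <key>`, therefore goes in the `env` block.

```typescript
interface McpServerEntry {
  command: string;
  args: string[];
  env?: Record<string, string>;
}

interface McpRemoteOptions {
  url: string;
  headerName: string; // e.g. "Authorization"
  envVar: string; // variable the client substitutes into the header
  envValue?: string; // optional value to place in the entry's env block
}

function buildMcpRemoteServer(opts: McpRemoteOptions): McpServerEntry {
  const entry: McpServerEntry = {
    command: "npx",
    args: [
      "mcp-remote",
      opts.url,
      "--allow-http",
      "--header",
      // Produces e.g. "Authorization:${GRAM_KEY}": no space around ":".
      `${opts.headerName}:\${${opts.envVar}}`,
    ],
  };
  if (opts.envValue !== undefined) {
    entry.env = { [opts.envVar]: opts.envValue };
  }
  return entry;
}

const config = {
  mcpServers: {
    GramTest: buildMcpRemoteServer({
      url: "https://app.getgram.ai/mcp/default/default/default",
      headerName: "Authorization",
      envVar: "GRAM_KEY",
      envValue: "Bearer <your-key-here>",
    }),
    GramSlack: buildMcpRemoteServer({
      url: "https://app.getgram.ai/mcp/speakeasy-team-default",
      headerName: "MCP-SPEAKEASY_YOUR_TOOLSET_CRED",
      envVar: "VALUE",
    }),
  },
};

console.log(JSON.stringify(config, null, 2));
```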

FAQs

Developer-friendly Typescript SDK to interact with Gram toolsets. Gram allows you to use your agentic tools in a variety of different frameworks and protocols. Gram tools can be used with pretty much any model that supports function calling via a chat c

We found that @gram-ai/sdk demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 4 open source maintainers collaborating on the project.

Did you know?

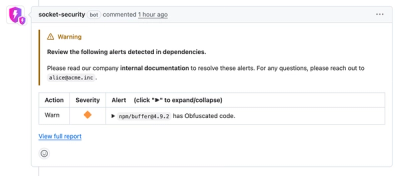

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.