@hashicorp/broken-links-checker

Advanced tools

A smart standalone package that checks for broken links in HTML files that reside in a given path. This package is compatible with Netlify servers, as it honors any redirects listed in the _redirects file. Additionally, to save bandwidth and time, the checker caches URL results for the life of the process and resolves local URLs to local files.

To run, use the CLI as follows:

> node index.js --path ~/dev/Hashicorp/middleman/build

Or, in the working directory:

> npx broken-links-checker --path ./build

| Required? | Var Name | Description | Default |
|---|---|---|---|
| Yes | path | Path of HTML files | |
| No | baseUrl | Base URL to apply to relative links | https://www.hashicorp.com/ |
| No | verbose | Enable verbose output | false |
| No | configPath | Path to a linksrc JSON config file | |

The checker can be fine-tuned using a .linksrc.json file. By default, the checker attempts to read a config file from the supplied path parameter, falling back to the current working directory, and finally to the user's home folder. Here are all the parameters (and their default values) you may specify in the config file:

{
  // Feeling chatty?
  "verbose": false,

  // Base URL for which URLs are resolved to local HTML files
  // from the given --path parameter
  "baseUrl": "https://www.hashicorp.com/",

  // Max number of outstanding external HTTP requests
  "maxConcurrentUrlRequests": 50,

  // Max number of HTML files to scan at once, in parallel
  "maxConcurrentFiles": 10,

  // Tags from which to read URLs, and their respective source attribute
  "allowedTags": {
    "a": "href",
    "script": "src",
    "style": "href",
    "iframe": "src",
    "img": "src",
    "embed": "src",
    "audio": "src",
    "video": "src"
  },

  // URL protocols supported
  "allowedProtocols": ["http:", "https:"],

  // RegExp patterns to ignore completely
  "excludePatterns": [
    "^https?://.*linkedin.com",
    "^https?://.*example.com",
    "^https?://.*localhost"
  ],

  // Exclude URLs that look like IPs, but are not in a public IPv4 range
  "excludePrivateIPs": true,

  // Check same-page anchor links?
  "checkAnchorLinks": true,

  // Timeout for external HTTP requests
  "timeout": 60000,

  // Should we retry when we get a 405 error for a HEAD request?
  "retry405Head": true,

  // Should we retry on an ENOTFOUND network error?
  "retryENOTFOUND": true,

  // Overall max number of retries for any given URL
  "maxRetries": 3,

  // Toggle local in-memory cache (to avoid querying the same URL multiple times)
  "useCache": true,

  // RegExp patterns for URLs that don't support HEAD requests
  "avoidHeadForPatterns": [
    "^https?://.*linkedin.com",
    "^https?://.*youtube.com",
    "^https?://.*meetup.com"
  ]
}
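The config lookup order described earlier (the supplied path, then the current working directory, then the user's home folder) can be sketched roughly as follows. This is only an illustration: `configCandidates` and `findConfig` are hypothetical names, not functions exported by this package, and the sketch assumes the file is always named `.linksrc.json`.

```javascript
const fs = require('node:fs');
const os = require('node:os');
const path = require('node:path');

// Hypothetical helper: list the candidate config locations in lookup order.
function configCandidates(htmlPath, cwd = process.cwd(), home = os.homedir()) {
  return [htmlPath, cwd, home].map((dir) => path.join(dir, '.linksrc.json'));
}

// Hypothetical helper: return the first candidate that exists, or null.
// The `exists` parameter is injectable so the lookup can be tried without touching disk.
function findConfig(htmlPath, exists = fs.existsSync) {
  return configCandidates(htmlPath).find((file) => exists(file)) ?? null;
}
```

For example, `findConfig('./build')` would return the path to `./build/.linksrc.json` if that file exists, and otherwise fall through to the next two locations.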

Links are divided into two categories: internal links and external ones. To determine whether a link is internal, we parse its hostname and compare it to the configured baseUrl hostname. While external links are simply checked using an HTTP HEAD request, internal ones are a bit more nuanced, since we're relying on Middleman: we construct a local path for the given link by replacing the public host with our local path (i.e. the path parameter). We then try to match an HTML file to the resulting path, in this order: ${path}, ${path}.html, and finally ${path}/index.html.
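As a rough sketch of the classification and lookup order described above, assuming the standard WHATWG `URL` class is used for parsing (the package's actual code may differ), the logic could look like this. `isInternal` and `localCandidates` are hypothetical names introduced for illustration.

```javascript
const path = require('node:path');

// Hypothetical helper: a link is internal when its hostname matches the baseUrl hostname.
// Relative links are resolved against baseUrl first, so they count as internal.
function isInternal(link, baseUrl) {
  return new URL(link, baseUrl).hostname === new URL(baseUrl).hostname;
}

// Hypothetical helper: map an internal link to the local files to try, in order.
function localCandidates(link, baseUrl, rootPath) {
  const { pathname } = new URL(link, baseUrl);
  // Drop trailing slashes so "/docs/" and "/docs" yield the same candidates.
  const local = path.join(rootPath, pathname.replace(/\/+$/, ''));
  return [local, `${local}.html`, path.join(local, 'index.html')];
}
```

A caller would then check each candidate on disk in order and report the link as broken only if none of them exists.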

Each checked URL is stored in a local in-memory cache to avoid rechecking the same URL. Although not recommended, this behavior can be disabled by setting useCache to false.

External URL fetching is a bit more complex than a simple HEAD request. Some hosts apply explicit rate limits, and some apply implicit ones (e.g. LinkedIn). When we fetch an external URL, we first check our local cache. In case of a cache miss, we make a HEAD request to the given URL, up to maxRetries times (defaults to 3). HEAD requests require less bandwidth and are less obtrusive than GET requests. Some hosts are set up not to respond to HEAD requests and will answer with an HTTP 405 Method Not Allowed, in which case we retry with a GET request.
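The fetch flow above (cache lookup, HEAD request, fallback to GET on a 405, bounded retries) might be sketched like this. It is a simplified illustration rather than the package's implementation: `checkUrl` and the `fetchImpl` option are hypothetical, the global `fetch` of Node 18+ is assumed as the default HTTP client, and the sketch assumes that the switch from HEAD to GET counts against maxRetries.

```javascript
// In-memory cache that lives for the duration of the process.
const cache = new Map();

// Hypothetical helper: check one external URL and remember the outcome.
async function checkUrl(url, { maxRetries = 3, retry405Head = true, fetchImpl = fetch } = {}) {
  if (cache.has(url)) return cache.get(url); // cache hit: no network traffic

  let method = 'HEAD';
  let result = { ok: false, status: null };

  for (let attempt = 0; attempt < maxRetries; attempt++) {
    try {
      const res = await fetchImpl(url, { method });
      result = { ok: res.ok, status: res.status };
      if (res.status === 405 && method === 'HEAD' && retry405Head) {
        method = 'GET'; // host rejects HEAD: try again with GET
        continue;
      }
      if (res.ok) break; // success: stop retrying
    } catch (err) {
      // Network-level failure (DNS, timeout, ...): record it and retry.
      result = { ok: false, status: null, error: String(err) };
    }
  }

  cache.set(url, result);
  return result;
}
```

Injecting `fetchImpl` keeps the sketch easy to exercise with a stub instead of real network calls.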

Netlify redirects in the form of a _redirects file are honored and processed using the NetlifyDevServer class. Redirects files are detected automatically in the root of the given path.
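To give a feel for what honoring a _redirects file involves, here is a deliberately minimal sketch. It is not the NetlifyDevServer class: `parseRedirects` and `applyRedirect` are hypothetical helpers that assume the basic `from to [status]` line format with `#` comments and a default status of 301, and they ignore splats, placeholders, and conditions.

```javascript
// Hypothetical helper: parse the basic "from to [status]" lines of a _redirects file.
function parseRedirects(text) {
  return text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line && !line.startsWith('#')) // skip blanks and comments
    .map((line) => {
      const [from, to, status = '301'] = line.split(/\s+/);
      return { from, to, status: parseInt(status, 10) };
    })
    .filter((rule) => rule.to !== undefined); // drop malformed lines
}

// Hypothetical helper: return the redirect target for an exact path match,
// or the original path when no rule applies.
function applyRedirect(pathname, rules) {
  const rule = rules.find((r) => r.from === pathname);
  return rule ? rule.to : pathname;
}
```

With rules like these, a link checker would resolve a link to its redirect target first and only then look for the matching local file.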


Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Bringing supply chain security to the next generation of JavaScript package managers

Product

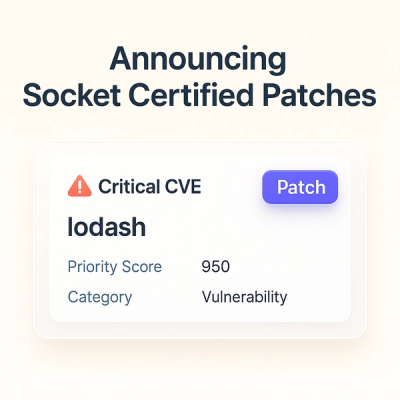

A safer, faster way to eliminate vulnerabilities without updating dependencies

Product

Reachability analysis for Ruby is now in beta, helping teams identify which vulnerabilities are truly exploitable in their applications.