@kwj-team/comfyui-client

JavaScript API client for ComfyUI that supports both Node.js and browser environments. It provides full support for all RESTful and WebSocket APIs.

This client provides comprehensive support for all available RESTful and WebSocket APIs, with built-in TypeScript typings for enhanced development experience. Additionally, it introduces a programmable workflow interface, making it easy to create and manage workflows in a human-readable format.

documentations:

examples:

By incorporating these features, @stable-canvas/comfyui-client provides a robust and versatile solution for integrating ComfyUI capabilities into your projects effortlessly.

Use npm, yarn, or pnpm to install the @stable-canvas/comfyui-client package.

pnpm add @stable-canvas/comfyui-client

First, import the ComfyUIApiClient class from the package.

import { ComfyUIApiClient } from "@stable-canvas/comfyui-client";

Client instance, in Browser

const client = new ComfyUIApiClient({

api_host: "127.0.0.1:8188",

})

// connect ws client

client.connect();

Client instance, in Node.js

import WebSocket from "ws";

import fetch from "node-fetch";

const client = new ComfyUIApiClient({

//...

WebSocket,

fetch,

});

// connect ws client

client.connect();

In addition to the standard API interfaces provided by ComfyUI, this library wraps them to provide higher-level calls:

const result = await client.enqueue(

{ /* workflow prompt */ },

{

progress: ({ max, value }) => console.log(`progress: ${value}/${max}`),

}

);

This call covers the entire life cycle of a prompt request: it submits the workflow, waits for it to finish, and returns all results together once the request completes.

In some cases you might not want to use WebSocket. You can then use enqueue_polling, which behaves similarly to enqueue but polls the task status over REST (HTTP) instead.
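The polling idea can be sketched independently of the library. The following is a minimal illustration, not the implementation behind enqueue_polling: the `JobStatus` shape, the `pollUntilDone` helper, and its `intervalMs` / `maxAttempts` parameters are all hypothetical names introduced for this example.

```typescript
// Hypothetical status shape; ComfyUI's real REST responses are not modeled here.
type JobStatus<T> = { done: false } | { done: true; result: T };

const sleep = (ms: number) =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

/**
 * Calls `check` repeatedly until it reports completion,
 * or throws once the attempt budget is used up.
 */
async function pollUntilDone<T>(
  check: () => Promise<JobStatus<T>>,
  intervalMs = 1000,
  maxAttempts = 60,
): Promise<T> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const status = await check();
    if (status.done) return status.result;
    await sleep(intervalMs);
  }
  throw new Error(`job did not finish after ${maxAttempts} attempts`);
}

// Usage with a simulated job that finishes on the third check.
let checks = 0;
pollUntilDone(async (): Promise<JobStatus<string>> => {
  checks += 1;
  return checks < 3 ? { done: false } : { done: true, result: "image.png" };
}, 10).then((result) => console.log("finished:", result));
```

Compared with a WebSocket connection, polling trades some latency and extra requests for not needing a persistent connection, which can help behind proxies that block WebSocket upgrades.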

Sometimes you may need to inspect the configuration of a ComfyUI deployment, for example to check whether it provides a required model or LoRA. The following methods are useful for that:

getSamplers getSchedulers getSDModels getCNetModels getUpscaleModels getHyperNetworks getLoRAs getVAEs
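As a rough sketch of such a check, the helper below compares a list of required names against a list of available ones. The return shape of methods such as getSDModels or getLoRAs is not shown in this README, so the example assumes the names have already been extracted into plain string arrays; `findMissing` and both sample lists are hypothetical.

```typescript
/**
 * Returns the names in `required` that do not appear in `available`.
 * The comparison is exact and case-sensitive.
 */
function findMissing(required: string[], available: string[]): string[] {
  const known = new Set(available);
  return required.filter((name) => !known.has(name));
}

// Hypothetical data: in practice `availableCheckpoints` would be derived
// from the response of a call such as getSDModels().
const availableCheckpoints = ["lofi_v5.baked.fp16.safetensors"];
const requiredCheckpoints = [
  "lofi_v5.baked.fp16.safetensors",
  "case-h-beta.baked.fp16.safetensors",
];

const missing = findMissing(requiredCheckpoints, availableCheckpoints);
if (missing.length > 0) {
  console.warn(`missing checkpoints: ${missing.join(", ")}`);
}
```

Running a check like this before enqueueing a workflow lets a missing model surface as a clear message rather than as a failed job.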

If you need to manage the life cycle of a request yourself, the InvokedWorkflow class can be very convenient.

instance

// You can instantiate manually

const invoked = new InvokedWorkflow({ /* workflow */ }, client);

// or use the workflow api to instantiate

const invoked = your_workflow.instance();

running

// job enqueue

invoked.enqueue();

// job result promise

const job_promise = invoked.wait();

// interrupt the job if needed

invoked.interrupt();

// query job status

invoked.query();

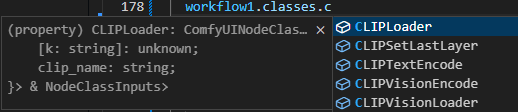

Inspired by this issue and this library, this library provides a programmable workflow interface.

It has the following use cases:

Here is a minimal example demonstrating how to create and execute a simple workflow using this library.

const workflow = new ComfyUIWorkflow();

const cls = workflow.classes;

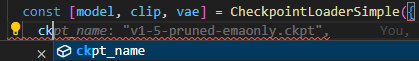

const [model, clip, vae] = cls.CheckpointLoaderSimple({

ckpt_name: "lofi_v5.baked.fp16.safetensors",

});

const enc = (text: string) => cls.CLIPTextEncode({ text, clip })[0];

const [samples] = cls.KSampler({

seed: Math.floor(Math.random() * 2 ** 32),

steps: 35,

cfg: 4,

sampler_name: "dpmpp_2m_sde_gpu",

scheduler: "karras",

denoise: 1,

model,

positive: enc("best quality, 1girl"),

negative: enc(

"worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T"

),

latent_image: cls.EmptyLatentImage({

width: 512,

height: 512,

batch_size: 1,

})[0],

});

cls.SaveImage({

filename_prefix: "from-sc-comfy-ui-client",

images: cls.VAEDecode({ samples, vae })[0],

});

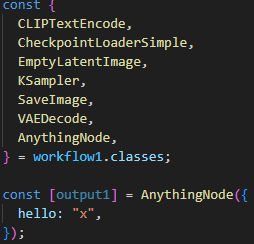

Both implementation and usage are extremely simple and human-readable. Below is a simple example of creating a workflow:

const createWorkflow = () => {

const workflow = new ComfyUIWorkflow();

const {

KSampler,

CheckpointLoaderSimple,

EmptyLatentImage,

CLIPTextEncode,

VAEDecode,

SaveImage,

NODE1,

} = workflow.classes;

const seed = Math.floor(Math.random() * 2 ** 32);

const pos = "best quality, 1girl";

const neg = "worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T";

const model1_name = "lofi_v5.baked.fp16.safetensors";

const model2_name = "case-h-beta.baked.fp16.safetensors";

const sampler_settings = {

seed,

steps: 35,

cfg: 4,

sampler_name: "dpmpp_2m_sde_gpu",

scheduler: "karras",

denoise: 1,

};

const [model1, clip1, vae1] = CheckpointLoaderSimple({

ckpt_name: model1_name,

});

const [model2, clip2, vae2] = CheckpointLoaderSimple({

ckpt_name: model2_name,

});

const dress_case = [

"white yoga",

"black office",

"pink sportswear",

"cosplay",

];

const generate_pipeline = (model, clip, vae, pos, neg) => {

const [latent_image] = EmptyLatentImage({

width: 640,

height: 960,

batch_size: 1,

});

const [positive] = CLIPTextEncode({ text: pos, clip });

const [negative] = CLIPTextEncode({ text: neg, clip });

const [samples] = KSampler({

...sampler_settings,

model,

positive,

negative,

latent_image,

});

const [image] = VAEDecode({ samples, vae });

return image;

};

for (const cloth of dress_case) {

const input_pos = `${pos}, ${cloth} dress`;

const image = generate_pipeline(model1, clip1, vae1, input_pos, neg);

SaveImage({

images: image,

filename_prefix: `${cloth}-lofi-v5`,

});

const input_pos2 = `${pos}, ${cloth} dress`;

const image2 = generate_pipeline(model2, clip2, vae2, input_pos2, neg);

SaveImage({

images: image2,

filename_prefix: `${cloth}-case-h-beta`,

});

}

return workflow;

};

const wf1 = createWorkflow();

// { prompt: {...}, workflow: {...} }

const wf1 = createWorkflow();

const result = await wf1.invoke(client);

npm install @stable-canvas/comfyui-client-cli

This tool converts the input workflow into executable code that uses this library.

Usage: nodejs-comfy-ui-client-code-gen [options]

Use this tool to generate the corresponding calling code using workflow

Options:

-V, --version output the version number

-t, --template [template] Specify the template for generating code, builtin tpl: [esm,cjs,web,none] (default: "esm")

-o, --out [output] Specify the output file for the generated code

-i, --in <input> Specify the input file, support .json file

-h, --help display help for command

example

cuc-w2c -i workflow.json -o out.js -t esm

{

"prompt": {

"1": {

"class_type": "CheckpointLoaderSimple",

"inputs": {

"ckpt_name": "lofi_v5.baked.fp16.safetensors"

}

},

"2": {

"class_type": "CLIPTextEncode",

"inputs": {

"text": "best quality, 1girl",

"clip": [

"1",

1

]

}

},

"3": {

"class_type": "CLIPTextEncode",

"inputs": {

"text": "worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T",

"clip": [

"1",

1

]

}

},

"4": {

"class_type": "EmptyLatentImage",

"inputs": {

"width": 512,

"height": 512,

"batch_size": 1

}

},

"5": {

"class_type": "KSampler",

"inputs": {

"seed": 2765233096,

"steps": 35,

"cfg": 4,

"sampler_name": "dpmpp_2m_sde_gpu",

"scheduler": "karras",

"denoise": 1,

"model": [

"1",

0

],

"positive": [

"2",

0

],

"negative": [

"3",

0

],

"latent_image": [

"4",

0

]

}

},

"6": {

"class_type": "VAEDecode",

"inputs": {

"samples": [

"5",

0

],

"vae": [

"1",

2

]

}

},

"7": {

"class_type": "SaveImage",

"inputs": {

"filename_prefix": "from-sc-comfy-ui-client",

"images": [

"6",

0

]

}

}

}

}
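In the JSON above, an input that comes from another node is written as a `[nodeId, outputIndex]` pair, for example `"clip": ["1", 1]`. The sketch below reads those links to list each node's dependencies and to flag links that point at a node id absent from the prompt. The type names and both helper functions are hypothetical and are not part of the library or the CLI.

```typescript
// A link to another node's output: [nodeId, outputIndex].
type NodeLink = [string, number];
type InputValue = string | number | boolean | NodeLink;

interface PromptNode {
  class_type: string;
  inputs: Record<string, InputValue>;
}

type Prompt = Record<string, PromptNode>;

const isNodeLink = (value: InputValue): value is NodeLink =>
  Array.isArray(value) &&
  value.length === 2 &&
  typeof value[0] === "string" &&
  typeof value[1] === "number";

/** Maps each node id to the ids of the nodes it reads from. */
function dependenciesOf(prompt: Prompt): Record<string, string[]> {
  const deps: Record<string, string[]> = {};
  for (const [id, node] of Object.entries(prompt)) {
    const sources = Object.values(node.inputs)
      .filter(isNodeLink)
      .map(([sourceId]) => sourceId);
    deps[id] = Array.from(new Set(sources)); // drop repeated source ids
  }
  return deps;
}

/** Lists links ("consumer -> source") whose source id is not in the prompt. */
function danglingLinks(prompt: Prompt): string[] {
  const problems: string[] = [];
  for (const [id, sources] of Object.entries(dependenciesOf(prompt))) {
    for (const sourceId of sources) {
      if (!(sourceId in prompt)) problems.push(`${id} -> ${sourceId}`);
    }
  }
  return problems;
}

// The first three nodes of the JSON above.
const sample: Prompt = {
  "1": {
    class_type: "CheckpointLoaderSimple",
    inputs: { ckpt_name: "lofi_v5.baked.fp16.safetensors" },
  },
  "2": {
    class_type: "CLIPTextEncode",
    inputs: { text: "best quality, 1girl", clip: ["1", 1] },
  },
  "3": {
    class_type: "CLIPTextEncode",
    inputs: {
      text: "worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T",
      clip: ["1", 1],
    },
  },
};

console.log(dependenciesOf(sample)); // { "1": [], "2": ["1"], "3": ["1"] }
console.log(danglingLinks(sample)); // []
```

These links are the same edges that appear as destructured variables (such as `CLIP_1`) passed between node calls in the workflow code.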

import {

ComfyUIApiClient,

ComfyUIWorkflow,

} from "@stable-canvas/comfyui-client";

async function main(envs = {}) {

const env = (k) => envs[k];

const client = new ComfyUIApiClient({

api_host: env("COMFYUI_CLIENT_API_HOST"),

api_base: env("COMFYUI_CLIENT_API_BASE"),

clientId: env("COMFYUI_CLIENT_CLIENT_ID"),

});

const createWorkflow = () => {

const workflow = new ComfyUIWorkflow();

const cls = workflow.classes;

const [MODEL_1, CLIP_1, VAE_1] = cls.CheckpointLoaderSimple({

ckpt_name: "lofi_v5.baked.fp16.safetensors",

});

const [CONDITIONING_1] = cls.CLIPTextEncode({

text: "best quality, 1girl",

clip: CLIP_1,

});

const [CONDITIONING_2] = cls.CLIPTextEncode({

text: "worst quality, bad anatomy, embedding:NG_DeepNegative_V1_75T",

clip: CLIP_1,

});

const [LATENT_1] = cls.EmptyLatentImage({

width: 512,

height: 512,

batch_size: 1,

});

const [LATENT_2] = cls.KSampler({

seed: 2765233096,

steps: 35,

cfg: 4,

sampler_name: "dpmpp_2m_sde_gpu",

scheduler: "karras",

denoise: 1,

model: MODEL_1,

positive: CONDITIONING_1,

negative: CONDITIONING_2,

latent_image: LATENT_1,

});

const [IMAGE_1] = cls.VAEDecode({

samples: LATENT_2,

vae: VAE_1,

});

const [] = cls.SaveImage({

filename_prefix: "from-sc-comfy-ui-client",

images: IMAGE_1,

});

return workflow;

};

const workflow = createWorkflow();

console.time("enqueue workflow");

try {

return await workflow.invoke(client);

} catch (error) {

throw error;

} finally {

console.timeEnd("enqueue workflow");

}

}

main("process" in globalThis ? globalThis.process.env : globalThis)

.then(() => {

console.log("DONE");

})

.catch((err) => {

console.error("ERR", err);

});

Contributions are welcome! Please feel free to submit a pull request.

MIT

FAQs

The npm package @kwj-team/comfyui-client receives a total of 7 weekly downloads. As such, its popularity is classified as not popular.

We found that @kwj-team/comfyui-client has an unhealthy version release cadence and level of project activity because its last version was released a year ago. It has 1 open source maintainer collaborating on the project.
