
@llamafarm/llamafarm


🌾 Plant and harvest AI models, agents, and databases into single deployable binaries

Deploy AI models, agents, and databases into single deployable binaries - no cloud required.

npm install -g @llamafarm/llamafarm

# Deploy a model

llamafarm plant llama3-8b

# Deploy with optimization

llamafarm plant llama3-8b --optimize

# Deploy to specific target

llamafarm plant mistral-7b --target raspberry-pi

# Development/Testing (no model download)

llamafarm plant llama3-8b --mock

# 1. Plant - Configure your AI deployment

llamafarm plant llama3-8b \
  --device mac-arm \
  --agent chat-assistant \
  --rag \
  --database vector

# 2. Bale - Compile to single binary

llamafarm bale ./.llamafarm/llama3-8b \
  --device mac-arm \
  --optimize

# 3. Harvest - Deploy anywhere

llamafarm harvest llama3-8b-mac-arm-v1.0.0.bin --run

# Or just copy and run directly (no dependencies needed!)

./llama3-8b-mac-arm-v1.0.0.bin
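The three steps above can be chained in one script. The following is a minimal sketch rather than an official script: it uses only the commands and flags shown above, while the `run` helper, the `DRY_RUN` variable, and the assumption that the binary name matches the example (`llama3-8b-mac-arm-v1.0.0.bin`) are illustrative. By default it only prints the commands.

```shell
#!/bin/sh
# Sketch: chain plant -> bale -> harvest.
# run() and DRY_RUN are hypothetical helpers, not part of llamafarm.
set -eu

MODEL="llama3-8b"
DEVICE="mac-arm"
# Assumption: file name copied from the example above; the real name may differ.
BINARY="${MODEL}-${DEVICE}-v1.0.0.bin"
DRY_RUN="${DRY_RUN:-1}"   # default: print the commands without executing them

run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

pipeline() {
  run llamafarm plant "$MODEL" --device "$DEVICE" --agent chat-assistant --rag --database vector
  run llamafarm bale "./.llamafarm/$MODEL" --device "$DEVICE" --optimize
  run llamafarm harvest "$BINARY" --run
}

pipeline
```

Setting `DRY_RUN=0` in the environment makes the same script execute each command after printing it.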

plant

Deploy a model to create a standalone binary.

llamafarm plant <model> [options]

Options:

--target <platform> Target platform (mac, linux, windows, raspberry-pi)

--optimize Enable size optimization

--agent <name> Include an agent

--rag Enable RAG pipeline

--database <type> Include database (vector, sqlite)

# Basic deployment

llamafarm plant llama3-8b

# Deploy with RAG and vector database

llamafarm plant mixtral-8x7b --rag --database vector

# Deploy optimized for Raspberry Pi

llamafarm plant llama3-8b --target raspberry-pi --optimize

# Deploy with custom agent

llamafarm plant llama3-8b --agent customer-service

bale

🎯 The Baler - Compile your deployment into a single executable binary.

llamafarm bale <project-dir> [options]

Options:

--device <platform> Target platform (mac, linux, windows, raspberry-pi)

--output <path> Output binary path

--optimize <level> Optimization level (none, standard, max)

--sign Sign the binary for distribution

--compress Extra compression (slower but smaller)

The Baler packages everything into a single binary:

Supported Platforms:

mac / mac-arm / mac-intel - macOS with Metal acceleration

linux / linux-arm - Linux with CUDA support

windows - Windows with DirectML/CUDA

raspberry-pi - Optimized for ARM devices

jetson - NVIDIA Jetson edge devices

Typical Binary Sizes:

# Standard compilation

llamafarm bale ./.llamafarm/llama3-8b --device mac-arm

# Optimized for size

llamafarm bale ./.llamafarm/llama3-8b --device raspberry-pi --optimize max --compress

# Enterprise deployment with signing

llamafarm bale ./.llamafarm/mixtral --device linux --sign --output production.bin

harvest

Deploy and run a compiled binary.

llamafarm harvest <binary-or-url> [options]

Options:

--run Run immediately after deployment

--daemon Run as background service

--port <number> Override default port

--verify Verify binary integrity
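Unlike plant and bale, the harvest options come without usage examples. The sketch below shows two plausible invocations built only from the flags listed above. Combining flags this way, the port number 8080, and the `harvest_cmd` helper are assumptions for illustration; the helper prints each command instead of running it.

```shell
#!/bin/sh
# Sketch: example harvest invocations, printed by a hypothetical dry-run helper.
harvest_cmd() {
  # Prints the command; drop the leading `echo` to execute it.
  echo llamafarm harvest "$@"
}

# Verify binary integrity, then run immediately
harvest_cmd llama3-8b-mac-arm-v1.0.0.bin --verify --run

# Run as a background service on a custom port (8080 is an arbitrary example)
harvest_cmd llama3-8b-mac-arm-v1.0.0.bin --daemon --port 8080
```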

Create a llamafarm.yaml file for advanced configurations:

name: my-assistant
base_model: llama3-8b
plugins:
  - vector_search
  - voice_recognition
data:
  - path: ./company-docs
    type: knowledge
optimization:
  quantization: int8
  target_size: 2GB

Then build:

llamafarm build
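Before building, it can help to confirm that the configuration file exists and contains the top-level keys used in the example. This is a rough sketch: treating `name` and `base_model` as required is an assumption, not documented behavior, and `check_config` is a hypothetical helper.

```shell
#!/bin/sh
# Sketch: check a config file for the top-level keys used in the example above.
# Assumption: `name` and `base_model` are treated as required; this is not documented.
check_config() {
  file="$1"
  [ -f "$file" ] || { echo "missing: $file"; return 1; }
  for key in name base_model; do
    grep -q "^$key:" "$file" || { echo "missing key: $key"; return 1; }
  done
  echo "ok: $file"
}
```

A possible use is `check_config llamafarm.yaml && llamafarm build`, so the build starts only when the check passes.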

For full documentation, visit https://docs.llamafarm.ai

Q: Can I run the binary on a different OS than where I compiled it?

A: No, you need to compile for each target platform. Use --device to specify the target.

Q: How much disk space do I need?

A: During compilation, you need ~3x the final binary size. The final binary is typically 4-8GB for 7B models.

Q: Can I update the model without recompiling?

A: No, the model is embedded in the binary. This ensures zero dependencies but means updates require recompilation.

Q: Does the binary need internet access?

A: No! Everything runs completely offline once deployed.
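The first two answers (one compile per target platform, ~3x disk headroom) can be combined into a small pre-flight script. This is a sketch under stated assumptions: the helper names, the `build-<device>.bin` output naming, and the 8GB size estimate are illustrative, and the bale commands are printed rather than executed.

```shell
#!/bin/sh
# Sketch: check disk headroom, then list one bale command per target device.

required_gb() {
  # $1 = expected final binary size in whole GB; compilation needs about 3x that.
  echo $(( $1 * 3 ))
}

available_gb() {
  # Free space in whole GB on the filesystem holding directory $1
  # (df -Pk reports sizes in 1K blocks; field 4 is the available space).
  df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

bale_all() {
  # $1 = project directory, remaining arguments = target devices.
  project="$1"; shift
  for device in "$@"; do
    # Printed rather than executed; the output file name is a hypothetical convention.
    echo llamafarm bale "$project" --device "$device" --output "build-$device.bin"
  done
}

need=$(required_gb 8)   # 8GB is the upper end of the 4-8GB range quoted above
have=$(available_gb .)
if [ "$have" -ge "$need" ]; then
  bale_all ./.llamafarm/llama3-8b mac-arm linux raspberry-pi
else
  echo "Only ${have}GB free; about ${need}GB needed per build" >&2
fi
```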

MIT © LLaMA Farm Team

FAQs


The npm package @llamafarm/llamafarm receives a total of 0 weekly downloads. As such, @llamafarm/llamafarm popularity was classified as not popular.

We found that @llamafarm/llamafarm demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

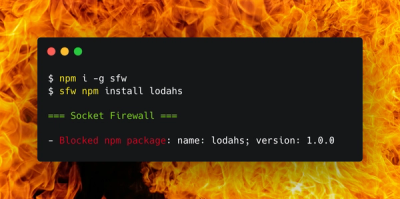

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.