Product

Announcing Bun and vlt Support in Socket

Bringing supply chain security to the next generation of JavaScript package managers

@mastra/evals

Advanced tools

A comprehensive evaluation framework for assessing AI model outputs across multiple dimensions.

A comprehensive evaluation framework for assessing AI model outputs across multiple dimensions.

npm install @mastra/evals

@mastra/evals provides a suite of evaluation metrics for assessing AI model outputs. The package includes both LLM-based and NLP-based metrics, enabling both automated and model-assisted evaluation of AI responses.

Answer Relevancy

Bias Detection

Context Precision & Relevancy

Faithfulness

Prompt Alignment

Toxicity

Completeness

Content Similarity

Keyword Coverage

import { ContentSimilarityMetric, ToxicityMetric } from '@mastra/evals';

// Initialize metrics

const similarityMetric = new ContentSimilarityMetric({

ignoreCase: true,

ignoreWhitespace: true,

});

const toxicityMetric = new ToxicityMetric({

model: openai('gpt-4'),

scale: 1, // Optional: adjust scoring scale

});

// Evaluate outputs

const input = 'What is the capital of France?';

const output = 'Paris is the capital of France.';

const similarityResult = await similarityMetric.measure(input, output);

const toxicityResult = await toxicityMetric.measure(input, output);

console.log('Similarity Score:', similarityResult.score);

console.log('Toxicity Score:', toxicityResult.score);

import { FaithfulnessMetric } from '@mastra/evals';

// Initialize with context

const faithfulnessMetric = new FaithfulnessMetric({

model: openai('gpt-4'),

context: ['Paris is the capital of France', 'Paris has a population of 2.2 million'],

scale: 1,

});

// Evaluate response against context

const result = await faithfulnessMetric.measure(

'Tell me about Paris',

'Paris is the capital of France with 2.2 million residents',

);

console.log('Faithfulness Score:', result.score);

console.log('Reasoning:', result.reason);

Each metric returns a standardized result object containing:

score: Normalized score (typically 0-1)info: Detailed information about the evaluationSome metrics also provide:

reason: Detailed explanation of the scoreverdicts: Individual judgments that contributed to the final scoreThe package includes built-in telemetry and logging capabilities:

import { attachListeners } from '@mastra/evals';

// Enable basic evaluation tracking

await attachListeners();

// Store evals in Mastra Storage (if storage is enabled)

await attachListeners(mastra);

// Note: When using in-memory storage, evaluations are isolated to the test process.

// When using file storage, evaluations are persisted and can be queried later.

Required for LLM-based metrics:

OPENAI_API_KEY: For OpenAI model access// Main package exports

import { evaluate } from '@mastra/evals';

// NLP-specific metrics

import { ContentSimilarityMetric } from '@mastra/evals/nlp';

@mastra/core: Core framework functionality@mastra/engine: LLM execution engine@mastra/mcp: Model Context Protocol integrationFAQs

A comprehensive evaluation framework for assessing AI model outputs across multiple dimensions.

The npm package @mastra/evals receives a total of 27,497 weekly downloads. As such, @mastra/evals popularity was classified as popular.

We found that @mastra/evals demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 11 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Bringing supply chain security to the next generation of JavaScript package managers

Product

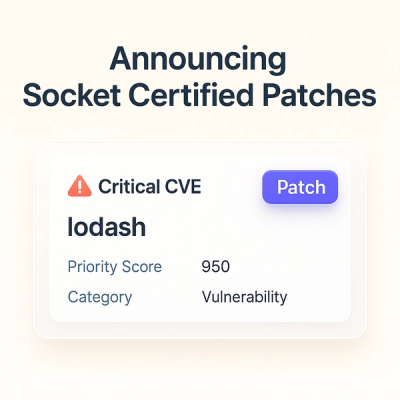

A safer, faster way to eliminate vulnerabilities without updating dependencies

Product

Reachability analysis for Ruby is now in beta, helping teams identify which vulnerabilities are truly exploitable in their applications.