@stellartech/table-migration

Advanced tools

Migrate selected user tables from the current source Directus instance to another target Directus instance.

Migrate selected database tables from one Directus instance to another with ease, using this streamlined extension.

Open the module at /admin/table-migration or use the navigation menu

Destination Setup

Sync Schemas

Table Selection

Migration Options

Execute Migration

The extension follows this sequence:

This extension requires admin privileges on both instances to ensure:

The extension creates timestamped migration files in the source instance:

schema.json: Complete database schema

items_full_data.json: Selected table data

items_singleton.json: Singleton collection data

files.json: File metadata for referenced files

Error: s3:PutObject permission denied

Solution: Set MIGRATION_STORAGE_LOCATION=local in your environment variables

Error: s3:DeleteObject permission denied

Solution: The extension now uses timestamped filenames to avoid delete operations.

Update to version 1.0.6+ and use local storage.

Error: Service "files" is unavailable

Cause: S3 permissions blocking file operations

Solution: Add MIGRATION_STORAGE_LOCATION=local to bypass S3 entirely

Files not appearing in expected S3 bucket

Check: Extension is using local storage by default

Location: Files saved to local storage directory instead of S3

Access: Via Directus Admin → Files → Migration folders

Install Dependencies

npm install

Build the Extension

npm run build

This creates the dist/ folder with:

api.js - Server-side endpoint logic

app.js - Client-side module interface

Install in Directus

Option A: Copy to Extensions Directory

# Copy the entire extension folder to your Directus extensions directory

cp -r . /path/to/directus/extensions/table-migration

# Or copy just the built files if you have the package structure

mkdir -p /path/to/directus/extensions/table-migration

cp -r dist/* /path/to/directus/extensions/table-migration/

cp package.json /path/to/directus/extensions/table-migration/

Option B: Use the Link Command (Development)

# Link the extension for development

npm run link

Restart Directus

# Restart your Directus instance

pm2 restart directus

# or

systemctl restart directus

# or restart via your deployment method

Enable the Extension

npm run dev - Build with watch mode and no minification

npm run build - Production build

npm run validate - Validate extension structure

npm run add - Add extension to Directus (interactive)

Use the included script to build and deploy the extension to your local Directus instances defined in tmp/directus/docker-compose.yaml.

cms/extensions/table-migration/deploy-local.sh

What it does:

Builds the extension (npm run build)

Copies dist/* and package.json to tmp/directus/v1/extensions/table-migration and tmp/directus/v2/extensions/table-migration

Restarts directus-v1 and directus-v2 via Docker Compose

Environment overrides (optional):

COMPOSE_FILE: path to the Docker Compose file (default: tmp/directus/docker-compose.yaml)

EXTENSION_NAME: extension folder name (default: table-migration)

TARGET_V1_DIR and TARGET_V2_DIR: target extension paths

After running, test:

GET http://localhost:8055/migration/test

GET http://localhost:8056/migration/test

After installation, your Directus extensions directory should contain:

extensions/
└── table-migration/
    ├── dist/
    │   ├── api.js        # Server-side endpoints
    │   └── app.js        # Client-side module
    ├── package.json      # Extension metadata
    └── node_modules/     # Dependencies (if copied)

The extension provides these endpoints:

GET /migration/test - Test endpoint for verifying the extension is working

POST /migration/tables - Get available tables for migration

POST /migration/check - Validate destination compatibility

POST /migration/schema-sync - Compare and synchronize schema only (supports dry-run)

POST /migration/dry-run - Test data migration without changes (requires schema to match)

POST /migration/run - Execute data and files migration (requires schema to match)

GET /migration/test

Simple test endpoint to verify the extension is installed and working.

Response:

{

"message": "Test endpoint working!",

"timestamp": "2024-01-01T00:00:00.000Z"

}
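A minimal smoke test for this endpoint, as a sketch: it assumes the source instance is reachable at a base URL you supply (for example http://localhost:8055), and the function names are illustrative rather than part of the extension. It only checks the two fields shown in the response above.

```python
import json
import urllib.request
from datetime import datetime


def is_valid_test_response(payload):
    """Return True if payload has the shape documented for GET /migration/test."""
    message = payload.get("message")
    timestamp = payload.get("timestamp")
    if not isinstance(message, str) or not isinstance(timestamp, str):
        return False
    try:
        # The documented timestamp is ISO 8601 with a trailing "Z".
        datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    except ValueError:
        return False
    return True


def check_extension(base_url, timeout=10.0):
    """Call GET {base_url}/migration/test and validate the JSON body."""
    url = base_url.rstrip("/") + "/migration/test"
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return is_valid_test_response(json.load(response))
```

Calling check_extension("http://localhost:8055") performs the request; is_valid_test_response can be used on its own against a saved response.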

POST /migration/tables

Returns all available user-created tables that can be migrated.

Request Body:

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"scope": {}

}

Response:

[

{

"name": "articles",

"type": "table",

"count": 150,

"schema": {...}

},

{

"name": "settings",

"type": "singleton",

"count": 1,

"schema": {...}

}

]
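As a small illustration, the response above can be split into regular tables and singletons before choosing what to migrate. This sketch assumes the name and type fields shown in the example; the helper name is hypothetical.

```python
def split_by_type(tables):
    """Split a /migration/tables response into (regular, singleton) name lists."""
    regular, singletons = [], []
    for entry in tables:
        # Entries whose type is "singleton" go to the second list; all others to the first.
        target = singletons if entry.get("type") == "singleton" else regular
        target.append(entry["name"])
    return regular, singletons


sample = [
    {"name": "articles", "type": "table", "count": 150},
    {"name": "settings", "type": "singleton", "count": 1},
]
regular, singletons = split_by_type(sample)
```

With the sample data, regular holds the name "articles" and singletons holds "settings".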

POST /migration/check

Validates whether the destination instance is compatible for migration.

Request Body:

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"scope": {}

}

Response:

{

"status": "success",

"icon": "check",

"message": "This instance is compatible for migration."

}

POST /migration/schema-sync

Compares the source schema to the destination and applies the differences. Use it to align schemas before migrating any data or files.

Request Body:

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"dryRun": true,

"scope": { "force": false }

}

When dryRun is true, the endpoint only compares and validates without applying.

When dryRun is false (or omitted), it applies the schema changes. Use scope.force to force apply when needed.

Response: Streaming text with comparison and apply results.
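Because an omitted dryRun applies changes, a client may prefer to set it explicitly on every call. The helper below is a sketch of that idea; the function name and its defaults are assumptions of this example, not part of the extension.

```python
def schema_sync_body(base_url, token, dry_run=True, force=False):
    """Build a request body for POST /migration/schema-sync.

    dry_run defaults to True here (a choice of this sketch), so that
    schema changes are only applied when the caller asks for it.
    """
    return {
        "baseURL": base_url,
        "token": token,
        "dryRun": dry_run,
        "scope": {"force": force},
    }


preview = schema_sync_body("https://destination.directus.app", "your-admin-token")
apply_changes = schema_sync_body(
    "https://destination.directus.app", "your-admin-token", dry_run=False
)
```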

POST /migration/dry-run

Tests the migration process without making any changes to the destination.

Request Body:

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"scope": {

"selectedTables": ["articles", "categories"],

"updatedAfter": "2025-07-01T00:00:00.000Z",

"force": false

}

}

Alternatively, you can provide a blacklist of tables using excludedTables to migrate everything else (including Directus system tables). When excludedTables is provided, selectedTables is ignored:

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"scope": {

"excludedTables": ["temporary_data", "logs"],

"force": false

}

}

Response: Streaming text response with migration progress.
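The precedence between selectedTables and excludedTables can be made explicit on the client side. The sketch below builds a scope object that contains only one of the two lists; build_scope is a hypothetical helper, and dropping selectedTables when excludedTables is given simply mirrors the rule described above.

```python
def build_scope(selected=None, excluded=None, updated_after=None, force=False):
    """Build the scope object for /migration/dry-run or /migration/run."""
    scope = {"force": force}
    if excluded:
        # excludedTables wins: selectedTables would be ignored by the endpoint anyway.
        scope["excludedTables"] = list(excluded)
    elif selected:
        scope["selectedTables"] = list(selected)
    else:
        raise ValueError("provide selected or excluded table names")
    if updated_after is not None:
        scope["updatedAfter"] = updated_after
    return scope


scope = build_scope(selected=["articles"], excluded=["temporary_data", "logs"])
```

Here scope contains excludedTables and force, and no selectedTables key.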

POST /migration/run

Executes the actual migration process.

If the source and destination schemas differ, run /migration/schema-sync first.

Request Body:

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"scope": {

"selectedTables": ["articles", "categories"],

"force": false

},

"callbackUrl": "https://your-callback-endpoint.com/webhook" // Optional

}

Or with excludedTables (blacklist mode):

{

"baseURL": "https://destination.directus.app",

"token": "your-admin-token",

"scope": {

"excludedTables": ["drafts", "logs"],

"updatedAfter": "2025-07-01T00:00:00.000Z",

"force": false

},

"callbackUrl": "https://your-callback-endpoint.com/webhook"

}

Parameters:

baseURL (required): Destination Directus instance URL

token (required): Admin token for the destination instance

scope (required): Migration configuration object

scope.selectedTables (required unless excludedTables is provided): Array of table names to migrate

scope.excludedTables (optional): Array of table names to exclude; when provided, all existing tables (including Directus system tables) will be migrated except those listed, and selectedTables will be ignored

scope.updatedAfter (optional): ISO datetime string. Only records with date_updated >= updatedAfter are migrated. If date_updated is null or not present, date_created >= updatedAfter is used. If neither field exists, the record is included.

scope.force (optional): Override compatibility warnings

callbackUrl (optional): URL to call when migration completes

Response Options:

1. Synchronous Response (no callbackUrl): Streaming text response with migration progress and results.

2. Asynchronous Response (with callbackUrl):

{

"status": "started",

"processId": "migration_1672531200000_abc123def456",

"message": "Migration started in background",

"timestamp": "2024-01-01T00:00:00.000Z"

}

Callback Payload: When migration completes, the callback URL receives:

{

"processId": "migration_1672531200000_abc123def456",

"status": "completed", // or "failed"

"message": "Migration completed successfully",

"timestamp": "2024-01-01T00:00:00.000Z",

"details": {

"selectedTables": ["articles", "categories"],

"tablesMigrated": 2,

"filesMigrated": 15,

"folderId": "folder-uuid",

"isDryRun": false

}

}

Error Callback Payload:

{

"processId": "migration_1672531200000_abc123def456",

"status": "failed",

"message": "Schema migration failed: Connection timeout",

"timestamp": "2024-01-01T00:00:00.000Z",

"details": {

"selectedTables": ["articles", "categories"],

"isDryRun": false,

"error": "Error: Schema migration failed: Connection timeout"

}

}

Example 409 response:

{ "status": "danger", "icon": "error", "message": "Schema differences detected. Please run /migration/schema-sync first." }
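The updatedAfter rule described under Parameters can be read as the following filter. This is only an illustration of the documented semantics; the extension applies the rule on the server and its actual implementation is not shown here. The function names are hypothetical, and treating a null date_created the same as a missing one is an assumption of this sketch.

```python
from datetime import datetime


def parse_iso(value):
    """Parse an ISO 8601 string such as 2025-07-01T00:00:00.000Z."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def is_included(record, updated_after):
    """Return True if the record would pass the updatedAfter filter."""
    threshold = parse_iso(updated_after)
    # date_updated is checked first; date_created is the fallback.
    for field in ("date_updated", "date_created"):
        value = record.get(field)
        if value is not None:
            return parse_iso(value) >= threshold
    # Neither timestamp is available: the record is included.
    return True
```

A record updated exactly at the threshold is included, because the comparison is greater than or equal.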

The migration extension can be integrated with Directus flows for automated migration processes. However, there are important limitations to consider when using the endpoints in flows.

Purpose: Verify the migration extension is working

Name: Test Migration Extension

Method: GET

URL: {{$env.PUBLIC_URL}}/migration/test

Response:

{

"message": "Test endpoint working!",

"timestamp": "2025-07-16T15:21:21.460Z"

}

Purpose: Check if destination instance is compatible

Name: Check Destination Compatibility

Method: POST

URL: {{$env.PUBLIC_URL}}/migration/check

Headers:

Authorization: Bearer {{$env.ADMIN_TOKEN}}

Content-Type: application/json

Body:

{

"baseURL": "https://your-destination.directus.app",

"token": "destination-admin-token-here",

"scope": {}

}

Success:

{

"status": "success",

"icon": "check",

"message": "This instance is compatible for migration."

}

Error:

{

"status": "danger",

"icon": "error",

"message": "Version mismatch or connection failed"

}

Purpose: Only proceed if destination is compatible

Name: Check Compatibility Status

Rule:

{

"check_destination_compatibility": {

"status": {

"_eq": 200

}

}

}

Purpose: Retrieve a list of tables that can be migrated

Name: Get Available Tables

Method: POST

URL: {{$env.PUBLIC_URL}}/migration/tables

Headers:

Authorization: Bearer {{$env.ADMIN_TOKEN}}

Content-Type: application/json

Body:

{

"baseURL": "https://your-destination.directus.app",

"token": "destination-admin-token-here",

"scope": {}

}

Response:

[

{

"name": "FirstTable",

"collection": "FirstTable",

"icon": "folder",

"itemCount": 10,

"selected": false,

"singleton": false

},

{

"name": "SecondTable",

"collection": "SecondTable",

"icon": "table",

"itemCount": 20,

"selected": false,

"singleton": true

}

]

Before running the migration, make sure the schemas match by calling POST /migration/schema-sync.

Purpose: Run the actual migration

IMPORTANT LIMITATIONS: The /migration/run endpoint has significant limitations when used in flows:

Name: Execute Migration

Method: POST

URL: {{$env.PUBLIC_URL}}/migration/run

Headers:

Authorization: Bearer {{$env.ADMIN_TOKEN}}

Content-Type: application/json

Body:

{

"baseURL": "https://your-destination.directus.app",

"token": "destination-admin-token-here",

"scope": {

"selectedTables": ["articles", "categories", "products"],

"force": false

}

}

Streaming Text Response:

Content-Type: text/plain with Transfer-Encoding: chunked

Long Execution Time:

Limited Error Handling:

To overcome these limitations, use the callbackUrl parameter:

Benefits:

Implementation Example:

{

"baseURL": "https://destination.directus.app",

"token": "destination-admin-token",

"scope": {

"selectedTables": ["articles", "categories"],

"force": false

},

"callbackUrl": "https://your-directus.app/flows/webhook/migration-complete"

}

Flow gets immediate response:

{

"status": "started",

"processId": "migration_1672531200000_abc123def456",

"message": "Migration started in background"

}

Callback webhook receives completion status:

{

"processId": "migration_1672531200000_abc123def456",

"status": "completed",

"details": {

"tablesMigrated": 2,

"filesMigrated": 15

}

}
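On the receiving side, the callback payload can be turned into a short status line for a notification. The sketch below relies only on the fields shown in the callback examples in this document; summarize_callback is a hypothetical helper, not something the extension provides.

```python
def summarize_callback(payload):
    """Turn a migration callback payload into a one-line summary."""
    process_id = payload.get("processId", "unknown")
    status = payload.get("status")
    details = payload.get("details") or {}
    if status == "completed":
        tables = details.get("tablesMigrated", 0)
        files = details.get("filesMigrated", 0)
        return f"Migration {process_id} completed: {tables} tables, {files} files migrated."
    if status == "failed":
        # Prefer the detailed error, then the top-level message.
        reason = details.get("error") or payload.get("message") or "no error message provided"
        return f"Migration {process_id} failed: {reason}"
    return f"Migration {process_id} reported unexpected status {status!r}."
```

Handling an unexpected status explicitly keeps a malformed callback from being reported as a success.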

1. Test Extension →

2. Check Destination →

3. Condition Check →

4. Get Tables →

5. Start Migration (with callback) →

6. Send "Started" Notification

[Separate Flow for Callback]

7. Webhook Trigger →

8. Process Migration Results →

9. Send Completion Notification

1. Test Extension →

2. Check Destination →

3. Condition Check →

4. Get Tables →

5. Trigger Migration (HTTP-only) →

6. Send Notification

Asynchronous Migration (Recommended):

{

"name": "Start Migration with Callback",

"method": "POST",

"url": "{{$env.PUBLIC_URL}}/migration/run",

"headers": {

"Authorization": "Bearer {{$env.ADMIN_TOKEN}}",

"Content-Type": "application/json"

},

"body": {

"baseURL": "https://destination.directus.app",

"token": "dest-token",

"scope": {

"selectedTables": ["articles", "categories"],

"force": false

},

"callbackUrl": "{{$env.PUBLIC_URL}}/flows/webhook/migration-complete"

}

}

Callback Flow Setup:

migration-complete

{

"name": "Process Migration Results",

"condition": {

"webhook": {

"status": {

"_eq": "completed"

}

}

},

"operations": [

{

"type": "notification",

"recipient": "admin@example.com",

"subject": "Migration Completed",

"message": "Migration {{webhook.processId}} completed successfully. Tables migrated: {{webhook.details.tablesMigrated}}"

}

]

}

Synchronous Migration (Legacy):

{

"name": "Trigger Migration Only",

"method": "POST",

"url": "{{$env.PUBLIC_URL}}/migration/run",

"headers": {

"Authorization": "Bearer {{$env.ADMIN_TOKEN}}",

"Content-Type": "application/json"

},

"body": {

"baseURL": "https://destination.directus.app",

"token": "dest-token",

"scope": {

"selectedTables": ["{{selected_tables}}"],

"force": false

}

},

"timeout": 30000

}

Add these to your .env file:

# Source instance

PUBLIC_URL=https://your-source.directus.app

ADMIN_TOKEN=your-admin-token-here

# Destination instance

DEST_URL=https://your-destination.directus.app

DEST_TOKEN=destination-admin-token

# Storage configuration (optional)

MIGRATION_STORAGE_LOCATION=local

The extension supports configurable storage locations for migration files to work around S3 permission restrictions.

By default, the extension uses local storage for all migration files to avoid S3 permission issues:

Option 1: Local Storage (Default)

# Uses local file storage (bypasses S3 permissions)

MIGRATION_STORAGE_LOCATION=local

Option 2: Alternative S3 Storage

# Uses a different S3 storage location with proper permissions

MIGRATION_STORAGE_LOCATION=s3-backup

Option 3: Auto-detect Alternative Storage

# Remove or comment out to use the first non-default storage location

# MIGRATION_STORAGE_LOCATION=

If using S3 storage, your IAM role needs these permissions:

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:PutObject",

"s3:DeleteObject",

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::your-bucket-name/*",

"arn:aws:s3:::your-bucket-name"

]

}

]

}

All migration files use timestamped filenames to prevent conflicts and avoid delete operations:

schema_2024-01-15T10-30-45-123Z.json

items_full_data_2024-01-15T10-30-45-124Z.json

users_2024-01-15T10-30-45-125Z.json

This ensures no existing files are overwritten and eliminates the need for S3 delete permissions.
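The example names look like an ISO 8601 UTC timestamp with ":" and "." replaced by "-". That pattern is inferred from the names above rather than taken from the extension source. A sketch that produces names of the same shape, with a hypothetical helper name:

```python
from datetime import datetime, timezone


def timestamped_name(prefix, moment=None):
    """Return a name like schema_2024-01-15T10-30-45-123Z.json."""
    if moment is None:
        moment = datetime.now(timezone.utc)
    # Normalize to UTC; a naive datetime is interpreted as local time.
    moment = moment.astimezone(timezone.utc)
    millis = moment.microsecond // 1000
    stamp = moment.strftime("%Y-%m-%dT%H-%M-%S") + f"-{millis:03d}Z"
    return f"{prefix}_{stamp}.json"


example = timestamped_name(
    "schema", datetime(2024, 1, 15, 10, 30, 45, 123000, tzinfo=timezone.utc)
)
```

Here example equals schema_2024-01-15T10-30-45-123Z.json, matching the first file name listed above.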

Always test with /migration/dry-run before actual migration:

{

"baseURL": "https://destination.directus.app",

"token": "token",

"scope": {

"selectedTables": ["test_table"],

"force": false

}

}
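One way to make the dry run routine is to script the whole rehearsal in a fixed order: compatibility check, schema comparison, dry run, then the real run. The sketch below only assembles the requests and offers a small POST helper; the ordering, the function names, and the decision to stop on the first problem are assumptions of this example. If the schema comparison reports differences, apply them with dryRun set to false before continuing.

```python
import json
import urllib.request


def migration_plan(dest_url, dest_token, tables):
    """Return the ordered (path, body) pairs for a rehearsed migration."""
    base = {"baseURL": dest_url, "token": dest_token}
    scope = {"selectedTables": list(tables), "force": False}
    return [
        ("/migration/check", {**base, "scope": {}}),
        ("/migration/schema-sync", {**base, "dryRun": True, "scope": {"force": False}}),
        ("/migration/dry-run", {**base, "scope": scope}),
        ("/migration/run", {**base, "scope": scope}),
    ]


def post_json(source_url, admin_token, path, body, timeout=30.0):
    """POST body as JSON to the source instance; return (status, text)."""
    request = urllib.request.Request(
        source_url.rstrip("/") + path,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {admin_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read().decode("utf-8")


plan = migration_plan("https://destination.directus.app", "token", ["test_table"])
```

Each step can then be sent with post_json in order, reviewing the streamed text of one step before moving to the next.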

Include error notification operations:

Name: Migration Error Alert

Recipient: Admin User

Subject: Migration Flow Failed

Message: Error in migration process: {{$last.error}}

For better reliability, consider setting up webhooks:

This avoids timeout and streaming response issues while providing proper completion notifications.

After migration completion, check the file library for migration artifacts:

schema.json

items_full_data.json

items_singleton.json

files.json

Migrate selected user tables from the current source Directus instance to another target Directus instance.

We found that @stellartech/table-migration demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 3 open source maintainers collaborating on the project.

Did you know?

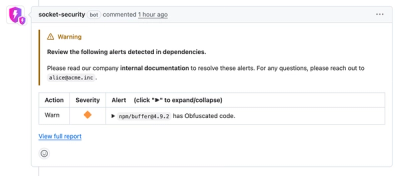

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.