anthropic-proxy-nextgen

A proxy service that allows Anthropic/Claude API requests to be routed through an OpenAI compatible API

A TypeScript-based proxy service that allows Anthropic/Claude API requests to be routed through an OpenAI compatible API to access alternative models.

Anthropic/Claude Proxy provides a compatibility layer between Anthropic/Claude clients and alternative models available through OpenRouter or any other OpenAI-compatible API URL. It selects the target model from the requested Claude model name, mapping Opus and Sonnet to a configured "big model" and Haiku to a "small model".
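The mapping described above can be pictured with a minimal sketch. The helper name `selectTargetModel`, the `ModelMapping` type, the substring matching, and the fallback for unrecognised names are all assumptions made for illustration; the package's actual selection logic may differ.

```typescript
// Hypothetical helper: illustrates the Opus/Sonnet -> big, Haiku -> small rule.
// Not the package's real implementation.
type ModelMapping = { bigModelName: string; smallModelName: string };

function selectTargetModel(requestedModel: string, mapping: ModelMapping): string {
  const name = requestedModel.toLowerCase();
  // Haiku requests are routed to the configured small model.
  if (name.indexOf("haiku") !== -1) {
    return mapping.smallModelName;
  }
  // Opus and Sonnet requests are routed to the configured big model.
  if (name.indexOf("opus") !== -1 || name.indexOf("sonnet") !== -1) {
    return mapping.bigModelName;
  }
  // Assumption: unrecognised names fall back to the big model.
  return mapping.bigModelName;
}

const mapping: ModelMapping = {
  bigModelName: "github-copilot-claude-sonnet-4",
  smallModelName: "github-copilot-claude-3.5-sonnet",
};

console.log(selectTargetModel("claude-3-5-haiku-20241022", mapping));
console.log(selectTargetModel("claude-sonnet-4-20250514", mapping));
```

Matching on a substring keeps the sketch independent of the date suffixes that Claude model identifiers carry.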

Key features:

Model: deepseek/deepseek-chat-v3-0324 on OpenRouter

Model: claude-sonnet-4 on Github Copilot

$ npm install -g anthropic-proxy-nextgen

$ npm install anthropic-proxy-nextgen

Start the proxy server using the CLI:

$ npx anthropic-proxy-nextgen start \
    --port 8080 \
    --base-url=http://localhost:4000 \
    --big-model-name=github-copilot-claude-sonnet-4 \
    --small-model-name=github-copilot-claude-3.5-sonnet \
    --openai-api-key=sk-your-api-key \
    --log-level=DEBUG

or run with node:

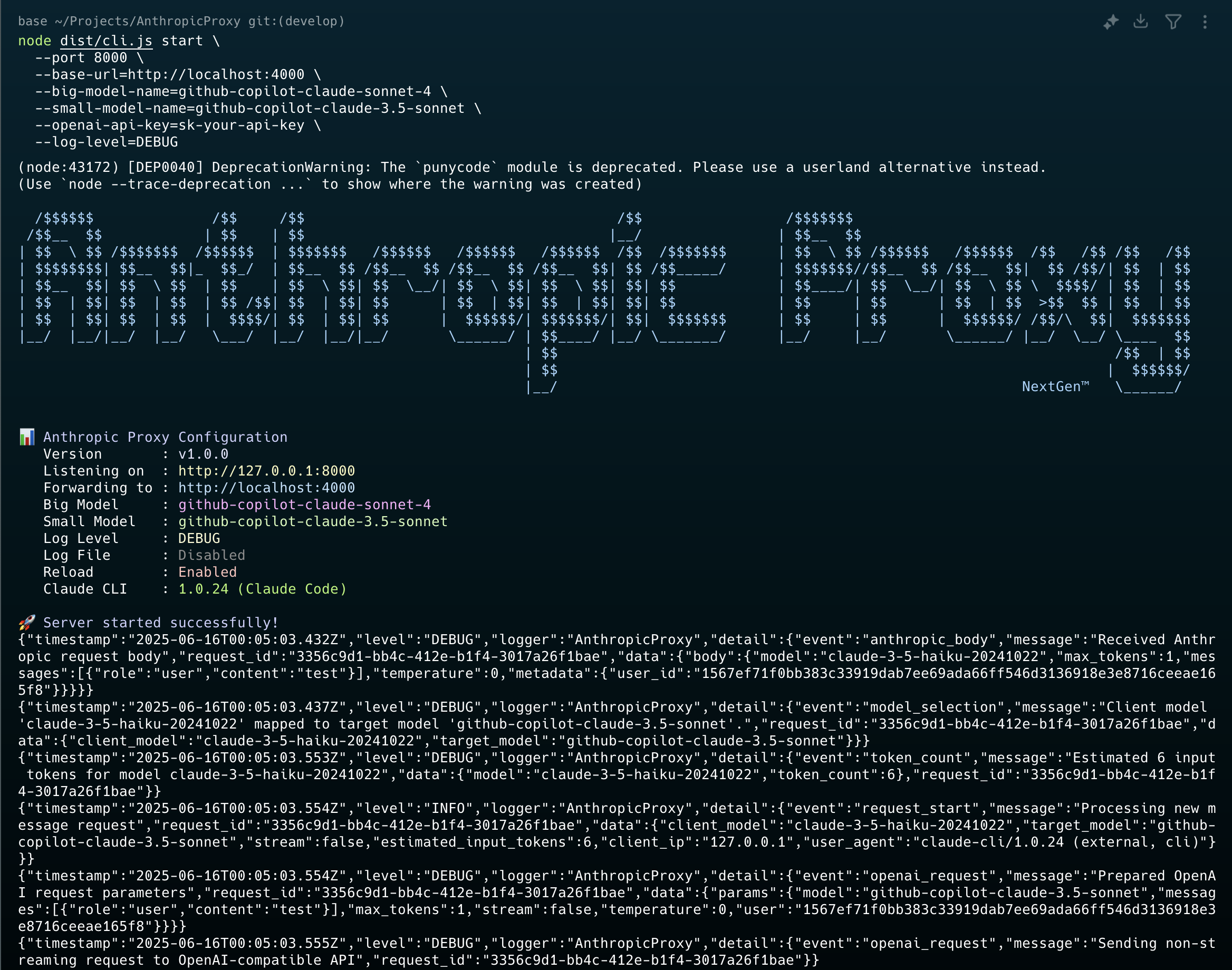

$ node dist/cli.js start \
    --port 8080 \
    --base-url=http://localhost:4000 \
    --big-model-name=github-copilot-claude-sonnet-4 \
    --small-model-name=github-copilot-claude-3.5-sonnet \
    --openai-api-key=sk-your-api-key \
    --log-level=DEBUG

--port, -p <port>: Port to listen on (default: 8080)

--host, -h <host>: Host to bind to (default: 127.0.0.1)

--base-url <url>: Base URL for the OpenAI-compatible API (required)

--openai-api-key <key>: API key for the OpenAI-compatible service (required)

--big-model-name <name>: Model name for Opus/Sonnet requests (default: github-copilot-claude-sonnet-4)

--small-model-name <name>: Model name for Haiku requests (default: github-copilot-claude-3.5-sonnet)

--referrer-url <url>: Referrer URL for requests (auto-generated if not provided)

--log-level <level>: Log level, one of DEBUG, INFO, WARN, ERROR (default: INFO)

--log-file <path>: Log file path for JSON logs

--no-reload: Disable auto-reload in development

You can also use a .env file for configuration:

HOST=127.0.0.1

PORT=8080

REFERRER_URL=http://localhost:8080/AnthropicProxy

BASE_URL=http://localhost:4000

OPENAI_API_KEY=sk-your-api-key

BIG_MODEL_NAME=github-copilot-claude-sonnet-4

SMALL_MODEL_NAME=github-copilot-claude-3.5-sonnet

LOG_LEVEL=DEBUG

LOG_FILE_PATH=./logs/anthropic-proxy-nextgen.jsonl
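As a rough illustration of how the environment variables above line up with the documented CLI defaults, the sketch below turns an environment map into a typed settings object. The `EnvConfig` interface and the `configFromEnv` function are hypothetical names introduced here, and treating BASE_URL and OPENAI_API_KEY as mandatory mirrors the "required" CLI options rather than any confirmed behaviour of the package's .env loader.

```typescript
// Hypothetical sketch: not the package's own loader.
const LOG_LEVELS = ["DEBUG", "INFO", "WARN", "ERROR"] as const;
type LogLevel = (typeof LOG_LEVELS)[number];

interface EnvConfig {
  host: string;
  port: number;
  baseUrl: string;
  openaiApiKey: string;
  bigModelName: string;
  smallModelName: string;
  logLevel: LogLevel;
}

type Env = Record<string, string | undefined>;

function isLogLevel(value: string): value is LogLevel {
  return (LOG_LEVELS as readonly string[]).indexOf(value) !== -1;
}

function configFromEnv(env: Env): EnvConfig {
  const baseUrl = env["BASE_URL"];
  const openaiApiKey = env["OPENAI_API_KEY"];
  // Assumption: these two are mandatory, as they are on the command line.
  if (!baseUrl || !openaiApiKey) {
    throw new Error("BASE_URL and OPENAI_API_KEY are required");
  }
  const port = Number(env["PORT"] ?? "8080");
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error("Invalid PORT: " + String(env["PORT"]));
  }
  const logLevel = (env["LOG_LEVEL"] ?? "INFO").toUpperCase();
  if (!isLogLevel(logLevel)) {
    throw new Error("Invalid LOG_LEVEL: " + logLevel);
  }
  return {
    host: env["HOST"] ?? "127.0.0.1",
    port,
    baseUrl,
    openaiApiKey,
    // Defaults below are the ones listed for the CLI options.
    bigModelName: env["BIG_MODEL_NAME"] ?? "github-copilot-claude-sonnet-4",
    smallModelName: env["SMALL_MODEL_NAME"] ?? "github-copilot-claude-3.5-sonnet",
    logLevel,
  };
}

const example = configFromEnv({
  BASE_URL: "http://localhost:4000",
  OPENAI_API_KEY: "sk-your-api-key",
  LOG_LEVEL: "DEBUG",
});
console.log(example.host, example.port, example.logLevel);
```

Passing the environment in as a plain object, rather than reading `process.env` directly, keeps the function easy to test.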

import { startServer, createLogger, Config } from 'anthropic-proxy-nextgen';

const config: Config = {
  host: '127.0.0.1',
  port: 8080,
  baseUrl: 'http://localhost:4000',
  openaiApiKey: 'sk-your-api-key',
  bigModelName: 'github-copilot-claude-sonnet-4',
  smallModelName: 'github-copilot-claude-3.5-sonnet',
  referrerUrl: 'http://localhost:8080/AnthropicProxy',
  logLevel: 'INFO',
  reload: false,
  appName: 'AnthropicProxy',
  appVersion: '1.0.0',
};

const logger = createLogger(config);

await startServer(config, logger);

# Clone the repository

$ git clone <repository-url>

$ cd AnthropicProxy

# Install dependencies

$ npm install

# Build the project

$ npm run build

# Run in development mode

$ npm run dev

# Run tests

$ npm test

# Lint and type check

$ npm run lint

$ npm run type-check

npm run build: Compile TypeScript to JavaScript

npm run dev: Run in development mode with auto-reload

npm start: Start the compiled server

npm test: Run tests

npm run lint: Run ESLint

npm run type-check: Run TypeScript type checking

The proxy server exposes the following endpoints:

POST /v1/messages: Create a message (main endpoint)

POST /v1/messages/count_tokens: Count tokens for a request

GET /: Health check endpoint

# Set the base URL to point to your proxy

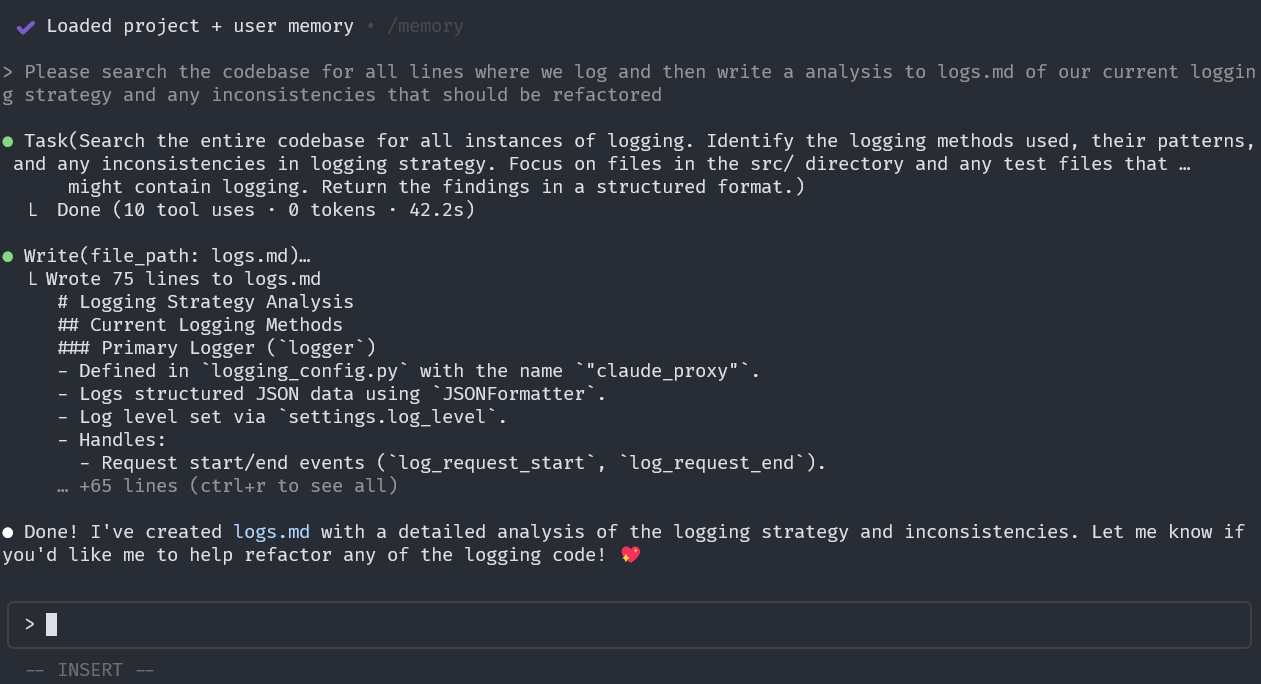

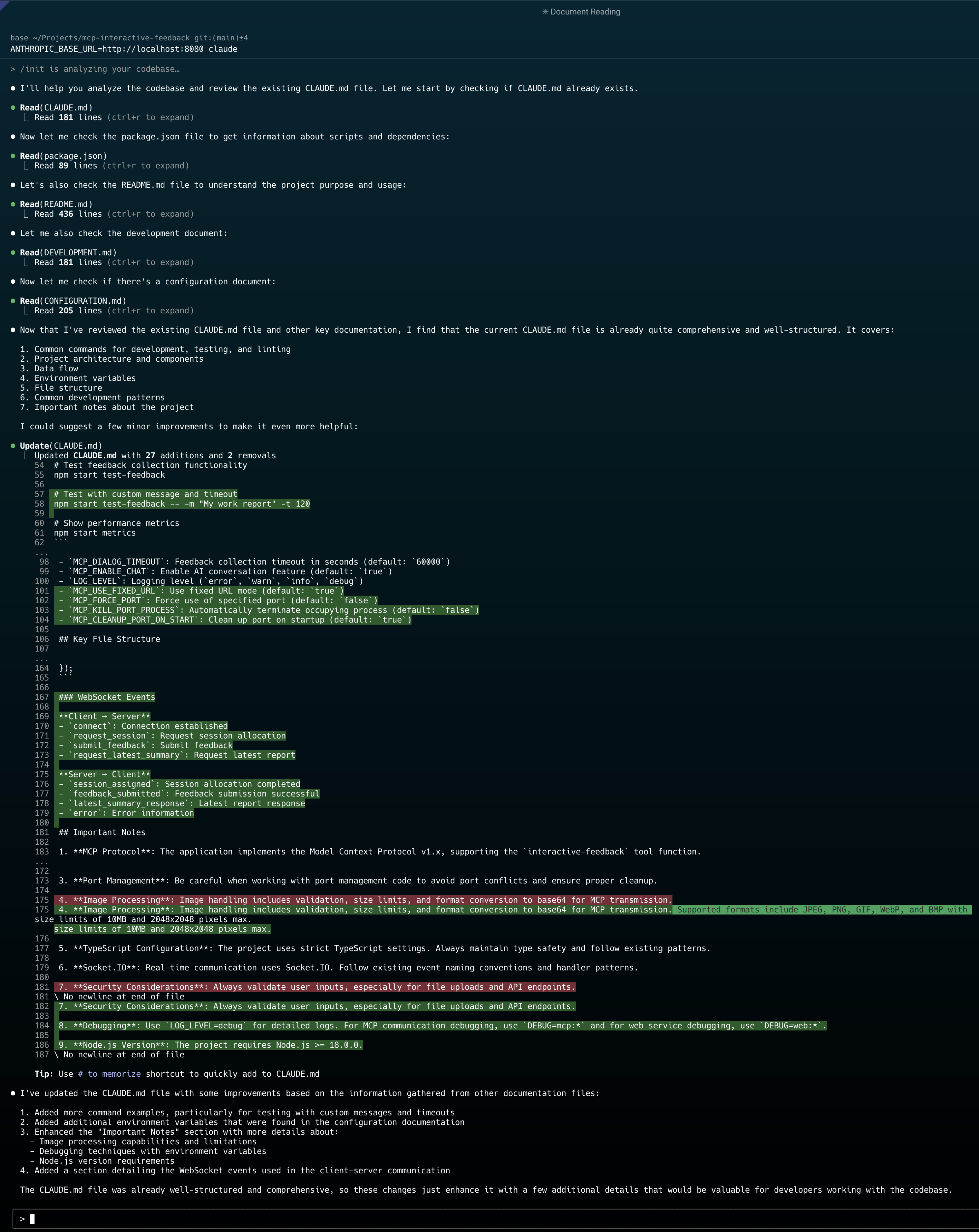

ANTHROPIC_BASE_URL=http://localhost:8080 claude
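For clients other than the `claude` CLI, a request to the main endpoint can be assembled by hand. The sketch below only builds the request and does not send it. The helper name `buildMessagesRequest` is hypothetical, and it assumes the proxy accepts a standard Anthropic Messages API body (`model`, `max_tokens`, `messages`) with the `anthropic-version` header; whether the proxy also expects an `x-api-key` header is not stated in the source, so none is set here.

```typescript
// Hypothetical helper: builds, but does not send, a POST /v1/messages request.
interface BuiltRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildMessagesRequest(proxyBaseUrl: string, model: string, prompt: string): BuiltRequest {
  // Trim trailing slashes so the path is joined exactly once.
  const url = proxyBaseUrl.replace(/\/+$/, "") + "/v1/messages";
  return {
    url,
    init: {
      method: "POST",
      headers: {
        "content-type": "application/json",
        // Assumption: the proxy tolerates the standard Anthropic version header.
        "anthropic-version": "2023-06-01",
      },
      body: JSON.stringify({
        model,
        max_tokens: 256,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

const request = buildMessagesRequest("http://localhost:8080/", "claude-sonnet-4-20250514", "Hello");
console.log(request.url);
// With the proxy running, the request could be sent with:
//   const response = await fetch(request.url, request.init);
```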

$ npx anthropic-proxy-nextgen start \
    --base-url=https://openrouter.ai/api/v1 \
    --openai-api-key=sk-or-v1-your-openrouter-key \
    --big-model-name=anthropic/claude-3-opus \
    --small-model-name=anthropic/claude-3-haiku

$ npx anthropic-proxy-nextgen start \
    --base-url=http://localhost:4000 \
    --openai-api-key=sk-your-github-copilot-key \
    --big-model-name=github-copilot-claude-sonnet-4 \
    --small-model-name=github-copilot-claude-3.5-sonnet

$ npx anthropic-proxy-nextgen start \
    --base-url=http://localhost:1234/v1 \
    --openai-api-key=not-needed \
    --big-model-name=local-large-model \
    --small-model-name=local-small-model

$ claude mcp add Context7 -- npx -y @upstash/context7-mcp

Added stdio MCP server Context7 with command: npx -y @upstash/context7-mcp to local config

$ claude mcp add atlassian -- npx -y mcp-remote https://mcp.atlassian.com/v1/sse

Added stdio MCP server atlassian with command: npx -y mcp-remote https://mcp.atlassian.com/v1/sse to local config

$ claude mcp add sequential-thinking -- npx -y @modelcontextprotocol/server-sequential-thinking

Added stdio MCP server sequential-thinking with command: npx -y @modelcontextprotocol/server-sequential-thinking to local config

$ claude mcp add sequential-thinking-tools -- npx -y mcp-sequentialthinking-tools

Added stdio MCP server sequential-thinking-tools with command: npx -y mcp-sequentialthinking-tools to local config

$ claude mcp add --transport http github https://api.githubcopilot.com/mcp/

Added HTTP MCP server github with URL: https://api.githubcopilot.com/mcp/ to local config

$ claude mcp list

Context7: npx -y @upstash/context7-mcp

sequential-thinking: npx -y @modelcontextprotocol/server-sequential-thinking

mcp-sequentialthinking-tools: npx -y mcp-sequentialthinking-tools

atlassian: npx -y mcp-remote https://mcp.atlassian.com/v1/sse

github: https://api.githubcopilot.com/mcp/ (HTTP)

"mcpServers": {
  "Context7": {
    "type": "stdio",
    "command": "npx",
    "args": [
      "-y",
      "@upstash/context7-mcp"
    ],
    "env": {}
  },
  "sequential-thinking": {
    "type": "stdio",
    "command": "npx",
    "args": [
      "-y",
      "@modelcontextprotocol/server-sequential-thinking"
    ],
    "env": {}
  },
  "mcp-sequentialthinking-tools": {
    "type": "stdio",
    "command": "npx",
    "args": [
      "-y",
      "mcp-sequentialthinking-tools"
    ]
  },
  "atlassian": {
    "type": "stdio",
    "command": "npx",
    "args": [
      "-y",
      "mcp-remote",
      "https://mcp.atlassian.com/v1/sse"
    ],
    "env": {}
  },
  "github": {
    "type": "http",
    "url": "https://api.githubcopilot.com/mcp/"
  }
}
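The two entry shapes in the configuration above (stdio and http) can be modelled as a discriminated union. The type names and the `describeServer` helper below are hypothetical and are inferred only from the fields visible in this fragment; the real configuration schema may allow more fields. The helper formats each entry the way the `claude mcp list` output shown earlier does.

```typescript
// Hypothetical types inferred from the fragment above, not an official schema.
type StdioServer = {
  type: "stdio";
  command: string;
  args: string[];
  env?: Record<string, string>; // optional: one entry above omits it
};
type HttpServer = { type: "http"; url: string };
type McpServer = StdioServer | HttpServer;

function describeServer(name: string, server: McpServer): string {
  switch (server.type) {
    case "stdio":
      // Command followed by its arguments, space separated.
      return name + ": " + [server.command].concat(server.args).join(" ");
    case "http":
      return name + ": " + server.url + " (HTTP)";
  }
}

const servers: Record<string, McpServer> = {
  Context7: { type: "stdio", command: "npx", args: ["-y", "@upstash/context7-mcp"], env: {} },
  github: { type: "http", url: "https://api.githubcopilot.com/mcp/" },
};

for (const name of Object.keys(servers)) {
  console.log(describeServer(name, servers[name]));
}
```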

This TypeScript implementation maintains the same core functionality as the Python version:

The TypeScript version provides the same API and functionality as the Python FastAPI version. Key differences:

FAQs

The npm package anthropic-proxy-nextgen receives a total of 2 weekly downloads. As such, anthropic-proxy-nextgen popularity was classified as not popular.

We found that anthropic-proxy-nextgen demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.
