In-Memory Cache with O(1) Operations and LRU Purging Strategy

Cache-LRU is a very small JavaScript library that provides an in-memory, key-indexed object cache with a Least-Recently-Used (LRU) purging strategy. All of its main operations, including the internal LRU purging, have strict O(1) time complexity, i.e., constant time that is independent of the number of cached objects.

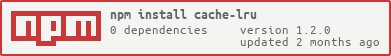

$ npm install cache-lru

new CacheLRU(): CacheLRU: [O(1)]

Create a new cache instance, initially with no cached objects and an infinite caching limit.

CacheLRU#limit(max?: Number): Number: [O(1)]

Set a new or get the current maximum number of objects in the cache. On setting a new maximum, if more than max objects are already in the cache, the length() - max LRU objects are automatically deleted.
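The purge count described above can be illustrated with a small stand-alone sketch. It does not use Cache-LRU itself: `shrinkTo` is a hypothetical helper, and a plain `Map` whose insertion order stands in for recency (first entry is the LRU object) is an assumption made only for this illustration.

```javascript
// Hypothetical helper, not part of Cache-LRU: models what lowering the
// limit does. The Map's insertion order stands in for recency, so the
// first entry is treated as the LRU object.
function shrinkTo (entries, max) {
    const purged = []
    let excess = entries.size - max      // corresponds to length() - max
    for (const key of entries.keys()) {
        if (excess-- <= 0) break         // stop once the excess is gone
        entries.delete(key)              // drop the current LRU entry
        purged.push(key)
    }
    return purged
}

const entries = new Map([["a", 1], ["b", 2], ["c", 3], ["d", 4], ["e", 5]])
const purged = shrinkTo(entries, 3)
console.log(purged)               // [ 'a', 'b' ]
console.log([...entries.keys()])  // [ 'c', 'd', 'e' ]
```

With five objects and a new maximum of three, exactly two objects are removed, and they are the two least recently used ones.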

CacheLRU#length(): Number: [O(1)]

Get number of objects in the cache.

CacheLRU#dispose(callback: (key, val, op) => Void): CacheLRU: [O(1)]

Set a callback which is called once for every object just before it is disposed of from the cache, either because it is replaced by a set() call or deleted by a del() call. The parameters to the callback are the key under which the object was stored, the value of the object which was stored, and the operation (either set or del) which caused the object disposal. This is usually used for explicit resource deallocation.
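The callback contract described above can be sketched as follows. `DisposableStore` is a hypothetical stand-in written only for this example, not Cache-LRU's implementation; it mirrors the documented behavior that the callback receives (key, val, op) just before an object is replaced by set() or removed by del().

```javascript
// Hypothetical stand-in for a cache with a dispose hook (assumption:
// only the documented set/del disposal paths are modeled here).
class DisposableStore {
    constructor () {
        this.items = new Map()
        this.onDispose = null
    }
    dispose (callback) {
        this.onDispose = callback
        return this
    }
    set (key, val) {
        // an existing object is about to be replaced: report it first
        if (this.items.has(key) && this.onDispose)
            this.onDispose(key, this.items.get(key), "set")
        this.items.set(key, val)
        return this
    }
    del (key) {
        if (!this.items.has(key))
            throw new Error(`no object under key "${key}"`)
        if (this.onDispose)
            this.onDispose(key, this.items.get(key), "del")
        this.items.delete(key)
        return this
    }
}

const log = []
const store = new DisposableStore()
store.dispose((key, val, op) => { log.push(`${op}:${key}=${val}`) })
store.set("conn", "socket-1")   // new object, nothing is disposed
store.set("conn", "socket-2")   // replaces socket-1, callback sees op "set"
store.del("conn")               // removes socket-2, callback sees op "del"
console.log(log)                // [ 'set:conn=socket-1', 'del:conn=socket-2' ]
```

In a real application the callback would typically close a handle or free a buffer instead of appending to a log.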

CacheLRU#has(key: String): Boolean: [O(1)]

Check whether an object exists under key, without promoting the corresponding object to be the new MRU object.

CacheLRU#peek(key: String): Object: [O(1)]

Get the value of the object under key, without promoting the corresponding object to be the new MRU object. If no object exists under key, the value undefined is returned.

CacheLRU#touch(key: String): CacheLRU: [O(1)]

Touch the object under key and this way explicitly promote it to be the new MRU object. If no object exists under key, an exception is thrown.

CacheLRU#get(key: String): Object: [O(1)]

Get the value of the object under key and promote the object to be the new MRU object. If no object exists under key, the value undefined is returned.
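The difference between a non-promoting read (peek) and a promoting read (get) can be shown with a small model. This sketch does not call Cache-LRU; it assumes a plain `Map` whose insertion order tracks recency (last entry is the MRU object), and `peekModel`, `getModel` and `mruToLru` are hypothetical names.

```javascript
// Model only: the last Map entry is the MRU object, the first is the LRU.
const recency = new Map([["a", 1], ["b", 2], ["c", 3]])

// Non-promoting read: the order is left untouched.
const peekModel = (key) => recency.get(key)

// Promoting read: re-inserting the key moves it to the MRU position.
const getModel = (key) => {
    if (!recency.has(key)) return undefined
    const val = recency.get(key)
    recency.delete(key)
    recency.set(key, val)
    return val
}

// List the keys in MRU to LRU order.
const mruToLru = () => [...recency.keys()].reverse()

peekModel("a")
const afterPeek = mruToLru()
console.log(afterPeek)          // [ 'c', 'b', 'a' ]

getModel("a")
const afterGet = mruToLru()
console.log(afterGet)           // [ 'a', 'c', 'b' ]
```

After the peek, "a" is still the next purge candidate; after the get, "b" is.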

CacheLRU#set(key: String, val: Object, expires?: Number): CacheLRU: [O(1)]

Set the value of the object under key. If there is already an object stored under key, replace it. Otherwise, insert it as a new object into the cache. In both cases, promote the affected object to be the new MRU object. If the optional expires parameter is given, it should be the maximum duration in milliseconds the object may stay in the cache (it can still be disposed earlier because of LRU purging of the cache). By default this is Infinity.
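One way to picture the expires parameter is a deadline stored next to each value. The sketch below is an assumption about how such a deadline could be honored (a lazy check on read), not a description of Cache-LRU's internals; `ExpiringStore` and the injectable `now` clock are hypothetical and exist only to make the example deterministic.

```javascript
// Hypothetical model of per-object expiry with a lazy check on read.
class ExpiringStore {
    constructor (now = () => Date.now()) {
        this.now = now                   // injectable clock, in milliseconds
        this.items = new Map()
    }
    set (key, val, expires = Infinity) {
        // deadline is Infinity when no expires value is given
        this.items.set(key, { val, deadline: this.now() + expires })
        return this
    }
    get (key) {
        const item = this.items.get(key)
        if (item === undefined) return undefined
        if (this.now() > item.deadline) {
            this.items.delete(key)       // expired: drop it and report a miss
            return undefined
        }
        return item.val
    }
}

let clock = 0
const ttl = new ExpiringStore(() => clock)
ttl.set("token", "abc", 1000)            // may live for at most 1000 ms
ttl.set("config", "xyz")                 // no expiry

clock = 500
const early = ttl.get("token")           // still within its lifetime
clock = 1500
const late = ttl.get("token")            // past its deadline
const forever = ttl.get("config")
console.log(early, late, forever)        // abc undefined xyz
```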

CacheLRU#del(key: String): CacheLRU: [O(1)]

Delete the object under key. If no object exists under key, an exception is thrown.

CacheLRU#clear(): CacheLRU: [O(n)]

Delete all objects in the cache.

CacheLRU#keys(): String[]: [O(n)]

Get the list of keys of all objects in the cache, in MRU to LRU order.

CacheLRU#values(): Object[]: [O(n)]

Get the list of values of all objects in the cache, in MRU to LRU order.

CacheLRU#each(callback: (val: Object, key: String, order: Number) => Void, ctx: Object): Object: [O(n)]

Iterate over all objects in the cache, in MRU to LRU order, and call the callback function for each object. The function receives the object value, the object key and the iteration order (starting from zero and steadily increasing).
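The callback argument order (value, key, order) can be sketched with a stand-alone helper. `eachEntry` is hypothetical and operates on a plain array of [key, value] pairs already sorted from MRU to LRU; treating ctx as the `this` value of the callback is an assumption of this sketch, not something the description above states.

```javascript
// Hypothetical helper: walks [key, value] pairs that are already in
// MRU to LRU order and passes (val, key, order) to the callback.
// Assumption: ctx is used as the callback's `this` value.
function eachEntry (pairs, callback, ctx) {
    let order = 0
    for (const [key, val] of pairs)
        callback.call(ctx, val, key, order++)
}

const lines = []
eachEntry([["c", 3], ["a", 1]], function (val, key, order) {
    this.push(`${order}: ${key}=${val}`)
}, lines)
console.log(lines)              // [ '0: c=3', '1: a=1' ]
```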

Although Cache-LRU is written in ECMAScript 6, it is transpiled to ECMAScript 5 and therefore runs in all current (as of 2016) JavaScript environments.

Internally, Cache-LRU manages all objects in a two-headed doubly linked list of buckets. This is how it achieves O(1) time complexity in all its main operations, including the automatic LRU purging.
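The general technique can be sketched as a key index combined with a doubly linked list that has a sentinel node at each end. The code below is a minimal illustration under stated assumptions, not Cache-LRU's source: `SketchLRU` is a hypothetical name, a `Map` serves as the key index, and only get, set and keys are modeled.

```javascript
// Minimal LRU sketch: a Map gives O(1) lookup, and a doubly linked list
// with head (MRU side) and tail (LRU side) sentinels gives O(1)
// reordering and purging. Not Cache-LRU's actual implementation.
class SketchLRU {
    constructor (limit = Infinity) {
        this.limit = limit
        this.index = new Map()
        this.head = { key: null, val: null, prev: null, next: null }
        this.tail = { key: null, val: null, prev: null, next: null }
        this.head.next = this.tail
        this.tail.prev = this.head
    }
    _unlink (node) {                     // O(1): detach node from the list
        node.prev.next = node.next
        node.next.prev = node.prev
    }
    _pushFront (node) {                  // O(1): make node the MRU object
        node.next = this.head.next
        node.prev = this.head
        this.head.next.prev = node
        this.head.next = node
    }
    get (key) {
        const node = this.index.get(key)
        if (node === undefined) return undefined
        this._unlink(node)
        this._pushFront(node)
        return node.val
    }
    set (key, val) {
        let node = this.index.get(key)
        if (node !== undefined) {
            node.val = val
            this._unlink(node)
        } else {
            node = { key, val, prev: null, next: null }
            this.index.set(key, node)
        }
        this._pushFront(node)
        if (this.index.size > this.limit) {
            const lru = this.tail.prev   // O(1): the LRU object sits at the tail
            this._unlink(lru)
            this.index.delete(lru.key)
        }
        return this
    }
    keys () {                            // O(n): MRU to LRU order
        const out = []
        for (let n = this.head.next; n !== this.tail; n = n.next)
            out.push(n.key)
        return out
    }
}

const sketch = new SketchLRU(2)
sketch.set("a", 1).set("b", 2)
sketch.get("a")                 // "a" becomes MRU, "b" is now LRU
sketch.set("c", 3)              // exceeds the limit of 2, so "b" is purged
console.log(sketch.keys())      // [ 'c', 'a' ]
```

Because the LRU object is always the node next to the tail sentinel, purging never needs to scan the list, which is what keeps the insert path constant-time.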

Copyright (c) 2015-2024 Dr. Ralf S. Engelschall (http://engelschall.com/)

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.
