
Amazon S3 adapter for catbox.

- bucket - the S3 bucket. You need to have write access to it.
- accessKeyId - the Amazon access key. (If you don't specify a key, it will attempt to use local credentials.)
- secretAccessKey - the Amazon secret access key. (If you don't specify a secret, it will attempt to use local credentials.)
- region - the Amazon S3 region. (If you don't specify a region, the bucket will be created in US Standard.)
- endpoint - the S3 endpoint URL. (If you don't specify an endpoint, the bucket will be created at Amazon S3 using the provided region, if any.)
- setACL - defaults to true; if set to false, no ACL is set for the objects.
- ACL - the ACL to set if setACL is not false; defaults to public-read.
- signatureVersion - specify the signature version when using an S3 bucket that has Server Side Encryption enabled (set to either v2 or v4).
- s3ForcePathStyle - force path style URLs for S3 objects (default: false), example:
  - virtual host style: https://bucket.s3.example.com
  - path style: https://s3.example.com/bucket

At the moment, Hapi doesn't support caching of non-JSONifiable responses (like Streams or Buffers, see #1948). If you want to use catbox-s3 for binary data, you have to handle it manually in your request handler:

var Catbox = require('catbox');

// On hapi server initialization:
// 1) Create a new catbox client instance
var cache = new Catbox.Client(require('catbox-s3'), {
    accessKeyId     : /* ... */,
    secretAccessKey : /* ... */,
    region          : /* ... (optional) */,
    bucket          : /* ... */
});

// 2) Initialize the caching
cache.start().catch((err) => {
    if (err) { console.error(err); }
    /* ... */
});

// Your route's request handler
var handler = async function (request, h) {
    var cacheKey = {
        id      : /* cache item id */,
        segment : /* cache segment name */
    };

    // Serve from the cache on a hit
    const result = await cache.get(cacheKey);
    if (result) {
        return h.response(result.item).type(/* response content type */);
    }

    // On a miss, compute the data and store it before responding
    const data = await yourBusinessLogic();
    await cache.set(cacheKey, data, /* expiration in ms */);
    return h.response(data).type(/* response content type */);
};
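
The get-then-set flow in the handler above can be factored into a small helper. This is a minimal sketch, not part of catbox or catbox-s3: `getOrSet` and `produce` are hypothetical names, and the helper assumes only the `get(key)` and `set(key, value, ttl)` methods of a started catbox client as they are used above.

```javascript
// Hypothetical helper (not provided by catbox or catbox-s3): look the key up
// first, and only run the producer function on a cache miss.
async function getOrSet(cache, key, ttl, produce) {
    // A miss resolves to a falsy value; a hit resolves to an envelope
    // whose `item` property holds the cached value.
    const cached = await cache.get(key);
    if (cached) {
        return cached.item;
    }

    // Compute the value, store it with the given expiration (ms), return it.
    const value = await produce();
    await cache.set(key, value, ttl);
    return value;
}
```

Inside a route handler this would likely be called as `const data = await getOrSet(cache, cacheKey, 60 * 1000, yourBusinessLogic);`, followed by the same `h.response(data).type(...)` call as above. The 60-second expiration is only an illustrative value.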

In order to run the tests, set the aforementioned options as environment variables:

S3_ACCESS_KEY=<YOURKEY> S3_SECRET_KEY=<YOURSECRET> S3_REGION=<REGION> S3_BUCKET=<YOURBUCKET> npm test
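
The same environment variables can also be used to build the adapter options outside the test suite. The sketch below is an illustration under assumptions: `optionsFromEnv` is a hypothetical helper, and the mapping from variable names to option names is inferred from the option list rather than taken from the package's own test setup.

```javascript
// Hypothetical helper: map the environment variables from the test command
// onto the adapter options. The variable-to-option mapping is an assumption.
function optionsFromEnv(env) {
    const options = {
        accessKeyId: env.S3_ACCESS_KEY,
        secretAccessKey: env.S3_SECRET_KEY,
        region: env.S3_REGION,
        bucket: env.S3_BUCKET
    };

    // The bucket is the one option documented without a fallback.
    if (!options.bucket) {
        throw new Error('S3_BUCKET must be set');
    }

    // Drop unset values so the documented fallbacks (local credentials,
    // default region) can apply.
    for (const name of Object.keys(options)) {
        if (options[name] === undefined || options[name] === '') {
            delete options[name];
        }
    }
    return options;
}
```

The result could then be passed as the second argument to the client constructor, for example `new Catbox.Client(require('catbox-s3'), optionsFromEnv(process.env))`.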



Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Automatically fix and test dependency updates with socket fix—a new CLI tool that turns CVE alerts into safe, automated upgrades.

Security News

CISA denies CVE funding issues amid backlash over a new CVE foundation formed by board members, raising concerns about transparency and program governance.

Product

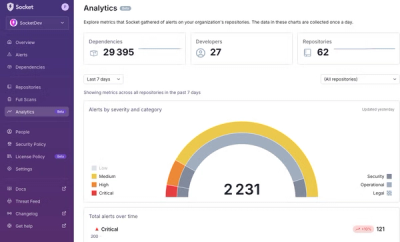

We’re excited to announce a powerful new capability in Socket: historical data and enhanced analytics.