
A mature CSV toolset with a simple API, full of options and tested against large datasets.

The csv npm package is a comprehensive library for parsing and handling CSV data in Node.js. It provides a range of tools for reading, writing, transforming, and streaming CSV data, making it a versatile choice for developers working with CSV files in JavaScript.

Parsing CSV

This feature allows you to parse CSV data into arrays or objects. The code sample demonstrates how to parse a simple CSV string.

```javascript
"use strict";

// csv-parse v5+ exports parse as a named member
const { parse } = require('csv-parse');
const assert = require('assert');

const input = 'a,b,c\nd,e,f';

parse(input, function(err, output){
  if (err) throw err;
  assert.deepStrictEqual(
    output,
    [['a', 'b', 'c'], ['d', 'e', 'f']]
  );
});
```

Stringifying CSV

This feature allows you to convert arrays or objects into CSV strings. The code sample shows how to stringify an array of arrays into a CSV string.

```javascript
"use strict";

// csv-stringify v6+ exports stringify as a named member
const { stringify } = require('csv-stringify');
const assert = require('assert');

const input = [['a', 'b', 'c'], ['d', 'e', 'f']];

stringify(input, function(err, output){
  if (err) throw err;
  assert.strictEqual(
    output,
    'a,b,c\nd,e,f\n'
  );
});
```

Transforming Data

This feature allows you to apply a transformation to the CSV data. The code sample demonstrates how to convert all the values in the CSV to uppercase.

```javascript
"use strict";

// stream-transform v3+ exports transform as a named member
const { transform } = require('stream-transform');
const assert = require('assert');

const input = [['a', 'b', 'c'], ['d', 'e', 'f']];
const output = [];

const transformer = transform(function(record, callback){
  callback(null, record.map(value => value.toUpperCase()));
});

// Register listeners before writing any data
transformer.on('readable', function(){
  let row;
  while ((row = transformer.read()) !== null) {
    output.push(row);
  }
});
transformer.on('error', function(err){
  console.error(err.message);
});
transformer.on('end', function(){
  assert.deepStrictEqual(
    output,
    [['A', 'B', 'C'], ['D', 'E', 'F']]
  );
});

transformer.write(input[0]);
transformer.write(input[1]);
transformer.end();
```

Streaming API

This feature provides a streaming API for working with large CSV files without loading the entire file into memory. The code sample demonstrates how to read a CSV file as a stream and parse it.

```javascript
"use strict";

const fs = require('fs');
// csv-parse v5+ exports parse as a named member
const { parse } = require('csv-parse');

const parser = parse({columns: true});
const input = fs.createReadStream('/path/to/input.csv');

input.pipe(parser);

parser.on('readable', function(){
  let record;
  while ((record = parser.read()) !== null) {
    // Work with each record
  }
});
parser.on('error', function(err){
  console.error(err.message);
});
parser.on('end', function(){
  // Handle end of parsing
});
```

PapaParse is a robust and powerful CSV parser for JavaScript with a similar feature set to csv. It supports browser and server-side parsing, auto-detection of delimiters, and streaming large files. Compared to csv, PapaParse is known for its ease of use and strong browser-side capabilities.

fast-csv is another popular CSV parsing and formatting library for Node.js. It offers a simple API, flexible parsing options, and support for streams. While csv provides a comprehensive set of tools for various CSV operations, fast-csv focuses on performance and ease of use for common tasks.

The csv project provides CSV generation, parsing, transformation and serialization for Node.js.

It has been tested and used by a large community over the years and should be considered reliable. It provides every option you would expect from an advanced CSV parser and stringifier.

This package exposes 4 packages:

- csv-generate, a flexible generator of CSV strings and JavaScript objects.
- csv-parse, a parser converting CSV text into arrays or objects.
- csv-stringify, a stringifier converting records into CSV text.
- stream-transform, a transformation framework.

The full documentation for the current version is available here.

The installation command is `npm install csv`.

Each package is fully compatible with the Node.js stream 2 and 3 specifications. Also, a simple callback-based API is always provided for convenience.

This example uses the Stream API to create a processing pipeline.

```javascript
// Import the package
import * as csv from "csv";

// Run the pipeline
csv
  // Generate 20 records
  .generate({
    delimiter: "|",
    length: 20,
  })
  // Transform CSV data into records
  .pipe(
    csv.parse({
      delimiter: "|",
    }),
  )
  // Transform each value into uppercase
  .pipe(
    csv.transform((record) => {
      return record.map((value) => {
        return value.toUpperCase();
      });
    }),
  )
  // Convert records back into CSV text
  .pipe(
    csv.stringify({
      quoted: true,
    }),
  )
  // Print the CSV stream to stdout
  .pipe(process.stdout);
```

This parent project doesn't have tests itself but instead delegates the tests to its child projects.

Read the documentation of the child projects for additional information.

The project is sponsored by Adaltas, a Big Data consulting firm based in Paris, France.

FAQs


The npm package csv receives a total of 1,253,287 weekly downloads. As such, its popularity is classified as popular.

We found that csv demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

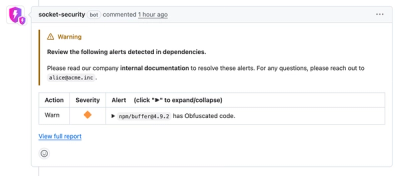

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.