Product

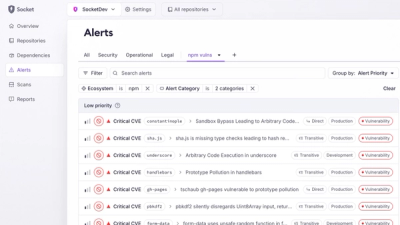

Introducing Custom Tabs for Org Alerts

Create and share saved alert views with custom tabs on the org alerts page, making it easier for teams to return to consistent, named filter sets.

A simple wrapper for OpenAI's Chat API that supports custom base URLs.

A lightweight and easy-to-use wrapper for OpenAI's Chat API. DecaChat provides a simple interface for creating chat-based applications with OpenAI's GPT models.

npm install deca-chat

import { DecaChat } from 'deca-chat';

// Initialize the chat client
const chat = new DecaChat({
  apiKey: 'your-openai-api-key'
});

// Send a message and get a response
async function example() {
  const response = await chat.chat('Hello, how are you?');
  console.log(response);
}

The DecaChat constructor accepts a configuration object with the following options:

interface DecaChatConfig {
  apiKey: string;         // Required: Your OpenAI API key
  model?: string;         // Optional: Default 'gpt-4o-mini'
  baseUrl?: string;       // Optional: Default 'https://api.openai.com/v1'
  maxTokens?: number;     // Optional: Default 1000
  temperature?: number;   // Optional: Default 0.7
  intro?: string;         // Optional: Custom introduction message
  systemMessage?: string; // Optional: Initial system message
  useBrowser?: boolean;   // Optional: Enable browser usage (Ensure API keys are secured!)
}

const chat = new DecaChat(config); // config: DecaChatConfig
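To make the defaults listed above concrete, here is a minimal sketch of how a config object might be resolved against them. The `resolveConfig` helper and the `DEFAULTS` constant are hypothetical names used only for illustration and are not part of deca-chat; the option names and default values are the ones documented above, and the library's real merging logic may differ.

```typescript
// Hypothetical illustration; not part of the deca-chat API.
interface DecaChatConfig {
  apiKey: string;
  model?: string;
  baseUrl?: string;
  maxTokens?: number;
  temperature?: number;
  intro?: string;
  systemMessage?: string;
  useBrowser?: boolean;
}

// Default values as documented in the options list.
const DEFAULTS = {
  model: 'gpt-4o-mini',
  baseUrl: 'https://api.openai.com/v1',
  maxTokens: 1000,
  temperature: 0.7,
};

// Merge user-supplied options over the documented defaults.
// Note: an option explicitly set to undefined would override its default here.
function resolveConfig(config: DecaChatConfig) {
  if (!config.apiKey) {
    throw new Error('apiKey is required');
  }
  return { ...DEFAULTS, ...config };
}

const resolved = resolveConfig({ apiKey: 'your-openai-api-key', temperature: 0.2 });
console.log(resolved.model, resolved.temperature); // prints: gpt-4o-mini 0.2
```

Only `temperature` is overridden in this call, so the other three options fall back to their documented defaults.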

setSystemMessage(message: string): void

Sets the system message for the conversation. This resets the conversation history and starts with the new system message.

chat.setSystemMessage('You are a helpful assistant specialized in JavaScript.');

setIntro(message: string): void

Sets a custom introduction message that will be sent to the user when starting a new conversation.

chat.setIntro("Hi! I'm your AI assistant. How can I help you today?");

async chat(message: string): Promise<string>

Sends a message and returns the assistant's response. The message and response are automatically added to the conversation history.

const response = await chat.chat('What is a closure in JavaScript?');

clearConversation(): void

Clears the entire conversation history, including the system message.

chat.clearConversation();

getConversation(): ChatMessage[]

Returns the current conversation history, including system messages, user messages, and assistant responses.

const history = chat.getConversation();
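The history semantics described for these methods can be modeled with a small in-memory class. This is a sketch of the documented behavior only, not deca-chat's actual source: the `ConversationHistory` class and its `addMessage` method are hypothetical, and the `ChatMessage` shape (a `role` plus `content`) is inferred from the sample output shown in this README.

```typescript
// Hypothetical model of the documented behavior; not deca-chat's implementation.
type Role = 'system' | 'user' | 'assistant';

interface ChatMessage {
  role: Role;
  content: string;
}

class ConversationHistory {
  private messages: ChatMessage[] = [];

  // Resets the history so it starts with the new system message.
  setSystemMessage(message: string): void {
    this.messages = [{ role: 'system', content: message }];
  }

  // Appends a user or assistant turn (hypothetical helper).
  addMessage(role: Role, content: string): void {
    this.messages.push({ role, content });
  }

  // Removes everything, including the system message.
  clearConversation(): void {
    this.messages = [];
  }

  // Returns a shallow copy so callers cannot mutate the internal array
  // (a design choice of this sketch, not a documented guarantee).
  getConversation(): ChatMessage[] {
    return [...this.messages];
  }
}

const convo = new ConversationHistory();
convo.setSystemMessage('You are a helpful assistant.');
convo.addMessage('user', 'What is a closure in JavaScript?');
convo.addMessage('assistant', '...');

// Setting a new system message discards the earlier turns.
convo.setSystemMessage('You are now a Python expert.');
console.log(convo.getConversation().length); // prints: 1
```

In practice this means a mid-conversation call to `setSystemMessage` starts a fresh conversation, so set the system message before the first `chat` call if earlier turns need to be kept.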

import { DecaChat } from 'deca-chat';

async function example() {
  // Initialize with custom configuration including system message
  const chat = new DecaChat({
    apiKey: 'your-openai-api-key',
    model: 'gpt-4',
    maxTokens: 2000,
    temperature: 0.8,
    intro: "Hello! I'm your coding assistant. Ask me anything about programming!",
    systemMessage: 'You are a helpful coding assistant specialized in JavaScript.'
  });

  // The system message can also be set after initialization
  chat.setSystemMessage('You are now a Python expert.');

  // The intro message will be automatically sent when starting a conversation
  const response = await chat.chat('How do I create a React component?');
  console.log('Assistant:', response);
}

const chat = new DecaChat({
  apiKey: 'your-api-key',
  baseUrl: 'https://your-custom-endpoint.com/v1',
  model: 'gpt-4o-mini'
});
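A custom `baseUrl` is typically used to point the client at an OpenAI-compatible server or proxy. As a rough sketch of what that implies, the helper below derives a chat completions endpoint from a base URL. It assumes the target exposes the OpenAI-style `/chat/completions` path under the base URL; the `chatCompletionsUrl` function is hypothetical, and how deca-chat builds its requests internally is not stated in this README.

```typescript
// Hypothetical helper; deca-chat's internal request building is not documented here.
function chatCompletionsUrl(baseUrl: string): string {
  // Reject obviously malformed values early.
  if (!/^https?:\/\//.test(baseUrl)) {
    throw new Error(`baseUrl must start with http:// or https://, got: ${baseUrl}`);
  }
  // Strip trailing slashes so the joined path has exactly one separator.
  const trimmed = baseUrl.replace(/\/+$/, '');
  return `${trimmed}/chat/completions`;
}

console.log(chatCompletionsUrl('https://api.openai.com/v1'));
// prints: https://api.openai.com/v1/chat/completions
console.log(chatCompletionsUrl('https://your-custom-endpoint.com/v1/'));
// prints: https://your-custom-endpoint.com/v1/chat/completions
```

Under this assumption the base URL should include the version segment (such as `/v1`), as both the default and the custom example above do.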

// Start with a system message
chat.setSystemMessage('You are a helpful assistant.');

// Have a conversation
await chat.chat('What is TypeScript?');
await chat.chat('How does it differ from JavaScript?');

// Get the conversation history
const history = chat.getConversation();
console.log(history);

/* Output:
[
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'What is TypeScript?' },
  { role: 'assistant', content: '...' },
  { role: 'user', content: 'How does it differ from JavaScript?' },
  { role: 'assistant', content: '...' }
]
*/

// Clear the conversation and start fresh
chat.clearConversation();
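Because the history is a plain array of role/content objects, it is easy to post-process. The sketch below renders it as a plain-text transcript and leaves out system messages. The `formatTranscript` helper is hypothetical and not part of deca-chat, and the `ChatMessage` shape is inferred from the output shown above.

```typescript
// Message shape inferred from the sample output above (an assumption).
interface ChatMessage {
  role: 'system' | 'user' | 'assistant';
  content: string;
}

// Hypothetical helper: renders user and assistant turns, omitting system messages.
function formatTranscript(messages: ChatMessage[]): string {
  return messages
    .filter((m) => m.role !== 'system')
    .map((m) => `${m.role === 'user' ? 'User' : 'Assistant'}: ${m.content}`)
    .join('\n');
}

const sample: ChatMessage[] = [
  { role: 'system', content: 'You are a helpful assistant.' },
  { role: 'user', content: 'What is TypeScript?' },
  { role: 'assistant', content: '...' },
];

console.log(formatTranscript(sample));
// prints:
// User: What is TypeScript?
// Assistant: ...
```

Call a helper like this before `clearConversation()` if the transcript needs to be saved, since clearing discards the history.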

The chat method can throw errors. Always wrap API calls in try-catch blocks for proper error handling:

try {
  const response = await chat.chat('Hello');
  console.log(response);
} catch (error) {
  console.error('Chat error:', error);
}
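In TypeScript with strict settings, the value caught in a `catch` block is typed `unknown`, so it helps to narrow it before reading a message from it. The sketch below shows one way to do that and to wrap a chat call so callers can branch on a result object instead of writing their own try-catch. Both `toErrorMessage` and `safeChat` are hypothetical helpers, not part of deca-chat, and the `send` parameter stands in for a call such as `(m) => chat.chat(m)`.

```typescript
// Hypothetical helper: turns any thrown value into a readable message.
function toErrorMessage(error: unknown): string {
  if (error instanceof Error) {
    return error.message;
  }
  return typeof error === 'string' ? error : 'Unknown error';
}

// Result type so callers can branch without their own try-catch.
type ChatResult =
  | { ok: true; response: string }
  | { ok: false; error: string };

// `send` stands in for a chat call; it is injected so this sketch
// stays independent of any particular client.
async function safeChat(
  send: (message: string) => Promise<string>,
  message: string,
): Promise<ChatResult> {
  try {
    return { ok: true, response: await send(message) };
  } catch (error) {
    return { ok: false, error: toErrorMessage(error) };
  }
}
```

With a client in scope, usage would look like `const result = await safeChat((m) => chat.chat(m), 'Hello');`, followed by a check of `result.ok` before reading `result.response` or `result.error`.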

Contributions are welcome! Please feel free to submit a Pull Request. For major changes, please open an issue first to discuss what you would like to change.

MIT License - see the LICENSE file for details.

