doc_chat_ai

Backend SDK for ChatAI - Simple and efficient communication with ChatAI API

A simple and efficient backend SDK for communicating with the ChatAI API. This SDK provides easy-to-use methods for both streaming and non-streaming chat interactions.

npm install doc_chat_ai

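const { ChatAIClient } = require("doc_chat_ai");

const client = new ChatAIClient({
  apiKey: "your-api-key-here",
});

// Simple chat. CommonJS modules do not support top-level await,
// so the call is wrapped in an async function.
async function main() {
  const response = await client.chat("What is machine learning?");
  console.log(response.response);
}

main();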

const { ChatAIClient } = require("doc_chat_ai");

const client = new ChatAIClient({
  apiKey: "your-api-key",
});

async function basicChat() {
  try {
    const response = await client.chat("What is artificial intelligence?");
    console.log("AI Response:", response.response);
    console.log("Session ID:", response.sessionId);
  } catch (error) {
    console.error("Error:", error.message);
  }
}

basicChat();

const { ChatAIClient } = require("doc_chat_ai");

const client = new ChatAIClient({
  apiKey: "your-api-key",
});

async function streamingChat() {
  try {
    const stream = await client.chatStream("Explain deep learning in detail");

    for await (const chunk of stream) {
      if (chunk.type === "content") {
        process.stdout.write(chunk.content); // Print streaming content
      }
      if (chunk.type === "done") {
        console.log("\n[Stream complete]");
      }
    }
  } catch (error) {
    console.error("Streaming error:", error.message);
  }
}

streamingChat();

const client = new ChatAIClient({
  apiKey: "your-api-key",
});

async function conversation() {
  const sessionId = "user-session-123";

  // First message
  const response1 = await client.chat("Hello, my name is John", {
    sessionId: sessionId,
  });
  console.log("AI:", response1.response);

  // Follow-up message (AI will remember the conversation)
  const response2 = await client.chat("What is my name?", {
    sessionId: sessionId,
  });
  console.log("AI:", response2.response);
}
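
The conversation example above hard-codes the session ID. Because each response also carries a sessionId field, a thin wrapper can remember it between calls. The following is a minimal sketch under stated assumptions, not part of the SDK: createConversation is a hypothetical helper, and it assumes only that client.chat(query, options) resolves to an object with response and sessionId fields, and that the reply includes a session ID even when none was supplied.

```javascript
// Hypothetical helper (not part of doc_chat_ai): keeps one session ID
// across calls so follow-up messages share conversation history.
function createConversation(client, initialSessionId) {
  let sessionId = initialSessionId; // may be undefined until the first reply

  return {
    // Sends one message, reusing the stored session ID when there is one.
    async send(query, options = {}) {
      const merged = sessionId ? { ...options, sessionId } : { ...options };
      const result = await client.chat(query, merged);
      // Assumption: the reply echoes (or assigns) the session ID.
      if (result.sessionId) {
        sessionId = result.sessionId;
      }
      return result.response;
    },

    // Returns the session ID currently in use, if any.
    getSessionId() {
      return sessionId;
    },
  };
}
```

With a real client, usage would look like `const convo = createConversation(client);` followed by `await convo.send("Hello, my name is John")` and `await convo.send("What is my name?")`.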

const client = new ChatAIClient({
  apiKey: "your-api-key",
  origin: "https://my-frontend.com",
  headers: {
    "X-Request-ID": "abc123",
    "X-Client-Version": "1.0.0",
  },
});

// Chat with advanced parameters (run this inside an async function)
const response = await client.chat("Explain quantum computing", {
  sessionId: "user-session-123",
  model: "gpt-4",
  temperature: 0.7,
  maxTokens: 1000,
  context: "User is a beginner in physics",
  metadata: {
    userId: "user-123",
    source: "web-app",
    timestamp: new Date().toISOString(),
  },
});

try {
  const response = await client.chat("Tell me about AI");
  console.log(response.response);
} catch (error) {
  if (error.status) {
    console.error(`API Error ${error.status}:`, error.message);
  } else {
    console.error("Network Error:", error.message);
  }
}
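
The error-handling snippet above separates API errors (which carry error.status) from network errors. One way to build on that distinction is to retry only the failures that are likely to be transient. The sketch below is illustrative and not part of the SDK: chatWithRetry and isRetryable are hypothetical names, it assumes error.status is the HTTP status code, and the choice to retry network errors, 429, and 5xx responses is an assumption rather than documented SDK behavior.

```javascript
// Hypothetical helpers (not part of doc_chat_ai): retry transient failures
// with exponential backoff.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Assumption: error.status, when present, is the HTTP status code.
function isRetryable(error) {
  if (!error.status) return true; // no status: treated as a network error
  return error.status === 429 || error.status >= 500;
}

async function chatWithRetry(
  client,
  query,
  options = {},
  { retries = 3, baseDelayMs = 500 } = {}
) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await client.chat(query, options);
    } catch (error) {
      // Give up once the retry budget is spent or the error is permanent.
      if (attempt >= retries || !isRetryable(error)) throw error;
      // Wait baseDelayMs, then 2x, then 4x, and so on.
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

A call such as `await chatWithRetry(client, "Tell me about AI")` would then replace the direct client.chat call inside the try block.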

new ChatAIClient(config);

Config Options:

- apiKey (required): Your ChatAI API key

- timeout (optional): Request timeout in milliseconds (default: 30000)

- origin (optional): Origin header for CORS (default: *)

- headers (optional): Additional custom headers

chat(query, options)

Send a chat message and get a complete response.

Parameters:

- query (string): The message to send

- options (object, optional):

  - sessionId (string, optional): Session ID for conversation continuity

  - stream (boolean, optional): Force non-streaming mode (default: false)

Returns: Promise<ChatResponse>

chatStream(query, options)

Send a chat message and get a streaming response.

Parameters:

- query (string): The message to send

- options (object, optional):

  - sessionId (string, optional): Session ID for conversation continuity

Returns: AsyncGenerator<ChatChunk>

interface ChatResponse {
  error: boolean;
  sessionId: string;
  response: string;
  outOfContext?: boolean;
}

interface ChatChunk {
  type: "session" | "content" | "done" | "error";
  sessionId?: string;
  content?: string;
  timestamp?: string;
  timing?: {
    total: number;
  };
  outOfContext?: boolean;
  message?: string;
}
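
The ChatChunk type above lists four chunk kinds. As an illustration of handling all of them, the sketch below folds a stream into a single result object. collectStream is a hypothetical helper, not part of the SDK. It assumes that "session" chunks carry sessionId, "content" chunks carry content, and "error" chunks describe the failure in message; the interface permits these fields but does not state which chunk type carries which, so treat the mapping as an assumption.

```javascript
// Hypothetical helper (not part of doc_chat_ai): folds a stream of
// ChatChunk-shaped objects into one result object.
async function collectStream(stream) {
  const result = { sessionId: undefined, text: "", done: false };

  for await (const chunk of stream) {
    switch (chunk.type) {
      case "session":
        // Assumption: the session chunk carries the session ID.
        result.sessionId = chunk.sessionId;
        break;
      case "content":
        result.text += chunk.content || "";
        break;
      case "done":
        result.done = true;
        break;
      case "error":
        // Assumption: error chunks describe the failure in `message`.
        throw new Error(chunk.message || "stream error");
    }
  }

  return result;
}
```

Because the function accepts any async iterable, it can be given the value returned by client.chatStream, for example `const { text } = await collectStream(await client.chatStream("Explain deep learning"));`.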

This SDK communicates with the ChatAI backend endpoint:

POST /api/v1/chat
Content-Type: application/json
Authorization: Bearer {API_KEY}

{
  "query": "User's question/query",
  "stream": true,
  "sessionId": "optional-session-id",
  "model": "optional-model-name",
  "temperature": 0.7,
  "maxTokens": 1000,
  "context": "optional-context",
  "metadata": { "custom": "data" }
}

Parameters:

- query (required): User's question/query

- stream (required): true for streaming response, false for complete response

- sessionId (optional): Session ID for conversation continuity

- model (optional): AI model to use

- temperature (optional): Response creativity (0.0-1.0)

- maxTokens (optional): Maximum response length

- context (optional): Additional context for the query

- metadata (optional): Custom metadata object
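
To make the request format above concrete, the following sketch assembles the URL, headers, and JSON body for one call without sending it. buildChatRequest is a hypothetical helper and not part of the SDK. The base URL is an assumption that the caller must supply, since only the path /api/v1/chat is documented here, and rejecting temperatures outside 0.0-1.0 is a design choice based on the range listed above, not confirmed server behavior.

```javascript
// Hypothetical helper (not part of doc_chat_ai): builds the pieces of the
// POST /api/v1/chat request described above.
function buildChatRequest(baseUrl, apiKey, query, options = {}) {
  if (typeof query !== "string" || query.length === 0) {
    throw new TypeError("query is required");
  }

  // `stream` is listed as required, so default it to false (complete response).
  const { stream = false, ...rest } = options;

  // Assumption: values outside the documented 0.0-1.0 range are invalid.
  const { temperature } = rest;
  if (temperature !== undefined && (temperature < 0 || temperature > 1)) {
    throw new RangeError("temperature must be between 0.0 and 1.0");
  }

  return {
    // baseUrl is supplied by the caller; only the path comes from the docs.
    url: new URL("/api/v1/chat", baseUrl).toString(),
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      // Optional fields that are undefined are dropped by JSON.stringify.
      body: JSON.stringify({ query, stream, ...rest }),
    },
  };
}
```

The returned pair can be passed straight to fetch, as in `const { url, init } = buildChatRequest(baseUrl, apiKey, "Hello"); const res = await fetch(url, init);`, on runtimes that provide a global fetch.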

MIT


Did you know?

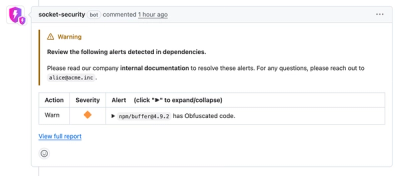

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.