
The JGPTMem class is designed to handle chat interactions utilizing the OpenAI GPT-3.5 Turbo model, with a specific focus on managing a short-term memory. This memory is intended to continuously condense, update, and store interactions between the user an

The JGPTMem class is designed to facilitate conversations with OpenAI's GPT-3.5-turbo model, while managing a short-term memory to provide a more context-aware interaction.

Requires the openai package.

new JGPTMem(apiKey, orgId)

Create a new JGPTMem instance.

apiKey (String): The OpenAI API key.
orgId (String): The organization ID.

async talk(userInput)

Receives user input, constructs a prompt with short-term memory instructions, and returns the response from OpenAI's GPT-3.5-turbo model.

userInput (String): The user's question or command.

Response format: {"condensedMemory": condensedSummary, "response": "your answer"}.

const jgptMem = new JGPTMem(process.env.OPENAI_API_KEY, process.env.OPENAI_ORG_ID);

const userInput = "Tell me about the weather today";

jgptMem.talk(userInput).then(result => console.log(result));

This code snippet creates an instance of JGPTMem, provides a user question, and prints the response.
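The short-term memory loop described above (build a prompt that carries the condensed memory, then read back a reply containing condensedMemory and response) can be sketched roughly as follows. This is not the jgptmem source: buildPrompt, parseReply, MemoryChat, and the prompt wording are all hypothetical names chosen for illustration, and callModel stands in for whatever function actually contacts the model.

```javascript
// Hypothetical sketch only: NOT the jgptmem implementation.

// Build a prompt that carries the condensed memory plus the new user input.
function buildPrompt(condensedMemory, userInput) {
  return [
    'Condensed memory of the conversation so far: ' + (condensedMemory || '(empty)'),
    'User: ' + userInput,
    'Reply only with JSON of the form {"condensedMemory": "...", "response": "..."}.',
  ].join('\n');
}

// Parse the model's reply; fall back to the raw text if it is not usable JSON.
function parseReply(rawText, previousMemory) {
  try {
    const parsed = JSON.parse(rawText);
    if (typeof parsed.response === 'string') {
      return {
        condensedMemory:
          typeof parsed.condensedMemory === 'string' ? parsed.condensedMemory : previousMemory,
        response: parsed.response,
      };
    }
  } catch (err) {
    // Not valid JSON (or not an object): fall through to the fallback below.
  }
  return { condensedMemory: previousMemory, response: rawText };
}

// Minimal chat wrapper. `callModel` is any async function (prompt) => string.
class MemoryChat {
  constructor(callModel) {
    this.callModel = callModel;
    this.memory = '';
  }

  async talk(userInput) {
    const raw = await this.callModel(buildPrompt(this.memory, userInput));
    const result = parseReply(raw, this.memory);
    this.memory = result.condensedMemory; // carry the summary into the next turn
    return result;
  }
}
```

Passing the model call in as a function keeps the sketch runnable offline with a stub, and the fallback in parseReply keeps the previous memory when the model does not return the requested JSON.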

Set the environment variables (OPENAI_API_KEY, OPENAI_ORG_ID) in your .env file.
The talk method returns a Promise. Handle it appropriately in your code.

JGPTMem uses OpenAI's GPT-3.5-turbo model for interactive conversations, taking previous interactions into account and maintaining a condensed short-term memory for more contextual responses.
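One way to act on the two notes above (set the environment variables, and handle the Promise returned by talk) is sketched below. The helpers missingEnv and askOnce are hypothetical and not part of jgptmem. The sketch assumes only that the client object exposes an async talk(userInput) method, as described earlier.

```javascript
// Hypothetical helpers; not part of jgptmem.

// Return the names of required environment variables that are unset or empty.
function missingEnv(names, env = process.env) {
  return names.filter((name) => !env[name]);
}

// Await talk() and turn a rejected Promise into a logged error and a null result.
async function askOnce(client, userInput) {
  try {
    return await client.talk(userInput);
  } catch (err) {
    console.error('talk() failed:', err);
    return null;
  }
}

// Possible usage, assuming a jgptMem instance created as in the example above:
//
//   const missing = missingEnv(['OPENAI_API_KEY', 'OPENAI_ORG_ID']);
//   if (missing.length > 0) throw new Error('Missing: ' + missing.join(', '));
//   askOnce(jgptMem, 'Tell me about the weather today').then((result) => {
//     if (result !== null) console.log(result);
//   });
```

Checking the variables before constructing the client tends to give a clearer error than a failed API call, and returning null from askOnce lets the caller decide whether to retry or stop.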

FAQs


We found that jgptmem demonstrated an unhealthy version release cadence and project activity because the last version was released a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

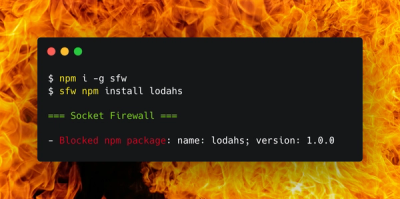

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.