Product

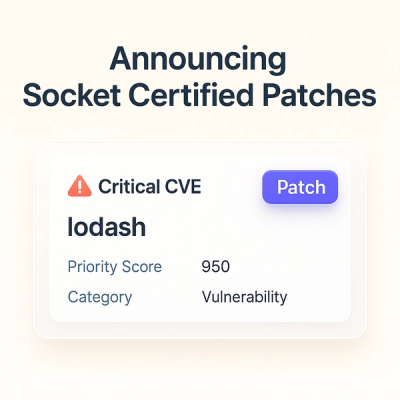

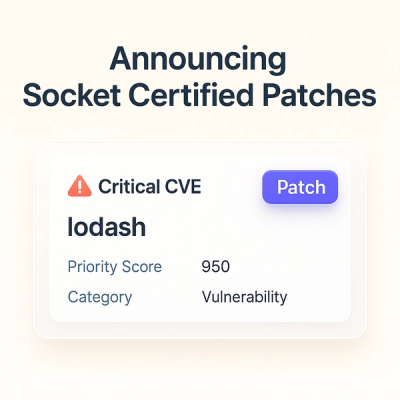

Announcing Socket Certified Patches: One-Click Fixes for Vulnerable Dependencies

A safer, faster way to eliminate vulnerabilities without updating dependencies

naver-finance-crawl-mcp


Naver Finance web crawler with MCP server support - crawl Korean stock market data

MCP (Model Context Protocol) server for crawling Korean stock market data from Naver Finance.

A Node.js-based web crawler built with TypeScript, Axios, and Cheerio, with MCP server support for AI assistants.

src/

├── crawlers/ # Crawler implementations

│ ├── baseCrawler.ts # Base class for all crawlers

│ ├── exampleCrawler.ts # Example crawler implementation

│ └── index.ts

├── utils/ # Utility functions

│ ├── request.ts # HTTP client with retry logic

│ ├── parser.ts # HTML parsing helper

│ └── index.ts

├── types/ # TypeScript type definitions

│ └── index.ts

└── index.ts # Main entry point

tests/

├── unit/ # Unit tests

├── integration/ # Integration tests

└── e2e/ # E2E tests

npm install -g naver-finance-crawl-mcp

To install Naver Finance Crawl MCP Server for any client automatically via Smithery:

npx -y @smithery/cli@latest install naver-finance-crawl-mcp --client <CLIENT_NAME>

Available clients: cursor, claude, vscode, windsurf, cline, zed, etc.

Example for Cursor:

npx -y @smithery/cli@latest install naver-finance-crawl-mcp --client cursor

This will automatically configure the MCP server in your chosen client.

pnpm install

Naver Finance Crawl MCP can be integrated with various AI coding assistants and IDEs that support the Model Context Protocol (MCP).

Go to: Settings -> Cursor Settings -> MCP -> Add new global MCP server

Add the following configuration to your ~/.cursor/mcp.json file:

{

"mcpServers": {

"naver-finance": {

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

With HTTP transport:

{

"mcpServers": {

"naver-finance": {

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp", "--transport", "http", "--port", "5000"]

}

}

}
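The stdio and HTTP entries above differ only in the extra arguments passed to npx. A minimal sketch of generating either entry programmatically follows; `buildServerEntry` and its parameters are hypothetical names used for illustration and are not part of the package.

```typescript
// Hypothetical helper: builds the "naver-finance" entry shown in the configs above.
type Transport = "stdio" | "http";

interface McpServerEntry {
  command: string;
  args: string[];
}

function buildServerEntry(transport: Transport = "stdio", port = 5000): McpServerEntry {
  const args = ["-y", "naver-finance-crawl-mcp"];
  if (transport === "http") {
    // Same flags as the HTTP example: --transport http --port <port>
    args.push("--transport", "http", "--port", String(port));
  }
  return { command: "npx", args };
}

// Print a complete mcpServers block for the HTTP transport.
const config = { mcpServers: { "naver-finance": buildServerEntry("http", 5000) } };
console.log(JSON.stringify(config, null, 2));
```

Generating the entry this way keeps the stdio and HTTP variants from drifting apart when the same server is configured in several clients.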

Run this command:

claude mcp add naver-finance -- npx -y naver-finance-crawl-mcp

Or with HTTP transport:

claude mcp add naver-finance -- npx -y naver-finance-crawl-mcp --transport http --port 5000

Add this to your VS Code MCP config file. See VS Code MCP docs for more info.

"mcp": {

"servers": {

"naver-finance": {

"type": "stdio",

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

Add this to your Windsurf MCP config file:

{

"mcpServers": {

"naver-finance": {

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

{

"mcpServers": {

"naver-finance": {

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

Open Claude Desktop developer settings and edit your claude_desktop_config.json file:

{

"mcpServers": {

"naver-finance": {

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

Add this to your Zed settings.json:

{

"context_servers": {

"naver-finance": {

"source": "custom",

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

Add this to your Roo Code MCP configuration file:

{

"mcpServers": {

"naver-finance": {

"command": "npx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

{

"mcpServers": {

"naver-finance": {

"command": "bunx",

"args": ["-y", "naver-finance-crawl-mcp"]

}

}

}

# Type check

pnpm typecheck

# Lint code

pnpm lint

pnpm lint:fix

# Run tests

pnpm test

pnpm test:ui # UI mode

pnpm test:coverage # With coverage report

# Build project

pnpm build

# Watch mode

pnpm dev

# Start MCP server (STDIO transport)

pnpm start

# Start MCP server (HTTP transport)

pnpm start --transport http --port 5000

# Start HTTP REST API server

pnpm start:http

STDIO Transport (default):

naver-finance-crawl-mcp

HTTP Transport:

naver-finance-crawl-mcp --transport http --port 5000
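How the server reads these flags is not shown here. As a rough sketch of the behavior described (STDIO by default, HTTP when `--transport http` is passed), a hypothetical parser could look like the following. `parseServerArgs` is an illustrative name, and the default port of 5000 is an assumption based on the examples, not a documented default.

```typescript
type Transport = "stdio" | "http";

interface ServerOptions {
  transport: Transport;
  port: number;
}

// Hypothetical parser; not the package's actual implementation.
function parseServerArgs(argv: string[]): ServerOptions {
  const options: ServerOptions = { transport: "stdio", port: 5000 };
  for (let i = 0; i < argv.length; i++) {
    const flag = argv[i];
    const value = argv[i + 1];
    if (flag === "--transport" && (value === "stdio" || value === "http")) {
      options.transport = value;
      i++; // skip the consumed value
    } else if (flag === "--port" && value !== undefined) {
      const port = Number(value);
      if (!Number.isInteger(port) || port < 1 || port > 65535) {
        throw new Error(`Invalid port: ${value}`);
      }
      options.port = port;
      i++; // skip the consumed value
    }
  }
  return options;
}

console.log(parseServerArgs(["--transport", "http", "--port", "5000"]));
```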

The server provides two MCP tools that can be used by LLMs:

crawl_top_stocks: Fetches the most searched stocks from Naver Finance

crawl_stock_detail: Fetches detailed information for a specific stock by its 6-digit code

Naver Finance Crawl MCP provides the following tools that can be used by LLMs:

crawl_top_stocks: Crawls the top searched stocks from Naver Finance. Returns a list of the most searched stocks with their codes, names, current prices, and change rates.

Parameters: None

Example Response:

{

"success": true,

"count": 10,

"data": [

{

"code": "005930",

"name": "삼성전자",

"currentPrice": "71,000",

"changeRate": "+2.50%"

}

],

"timestamp": "2025-01-29T12:00:00.000Z"

}
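A client consuming this response may want to check its shape before using it. The sketch below infers the types from the example response above; the real payload may carry additional fields, and `isTopStocksResponse` is a hypothetical helper rather than something the package exports.

```typescript
// Shapes inferred from the example response; treat them as assumptions.
interface TopStock {
  code: string;
  name: string;
  currentPrice: string;
  changeRate: string;
}

interface TopStocksResponse {
  success: boolean;
  count: number;
  data: TopStock[];
  timestamp: string;
}

function isTopStock(value: unknown): value is TopStock {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v["code"] === "string" &&
    typeof v["name"] === "string" &&
    typeof v["currentPrice"] === "string" &&
    typeof v["changeRate"] === "string"
  );
}

function isTopStocksResponse(value: unknown): value is TopStocksResponse {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  const data = v["data"];
  return (
    typeof v["success"] === "boolean" &&
    typeof v["count"] === "number" &&
    typeof v["timestamp"] === "string" &&
    Array.isArray(data) &&
    data.every(isTopStock)
  );
}

const raw =
  '{"success":true,"count":10,"data":[{"code":"005930","name":"삼성전자",' +
  '"currentPrice":"71,000","changeRate":"+2.50%"}],"timestamp":"2025-01-29T12:00:00.000Z"}';
const parsed: unknown = JSON.parse(raw);
console.log(isTopStocksResponse(parsed));
```

Prices and change rates arrive as formatted strings ("71,000", "+2.50%"), so they need to be converted before any arithmetic.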

crawl_stock_detail: Crawls detailed information for a specific stock by its 6-digit code. Returns comprehensive data including company info, stock prices, trading volume, and financial metrics.

Parameters:

stockCode (string, required): 6-digit stock code (e.g., "005930" for Samsung Electronics)

Example Request:

{

"stockCode": "005930"

}

Example Response:

{

"success": true,

"stockCode": "005930",

"data": {

"companyName": "삼성전자",

"currentPrice": "71,000",

"changeRate": "+2.50%",

"tradingVolume": "1,234,567",

"marketCap": "423조원"

},

"metadata": {

"url": "https://finance.naver.com/item/main.naver?code=005930",

"statusCode": 200,

"timestamp": "2025-01-29T12:00:00.000Z"

}

}
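Because `stockCode` must be a 6-digit string, it can be worth validating it before calling the tool. The sketch below is illustrative only: `isValidStockCode` and `buildStockUrl` are hypothetical helpers, and the URL pattern is taken from the `metadata.url` field in the example response rather than from the crawler's source.

```typescript
// Exactly six ASCII digits, as described for the stockCode parameter.
const STOCK_CODE_PATTERN = /^\d{6}$/;

function isValidStockCode(code: string): boolean {
  return STOCK_CODE_PATTERN.test(code);
}

// URL pattern assumed from the example response's metadata.url field.
function buildStockUrl(code: string): string {
  if (!isValidStockCode(code)) {
    throw new Error(`Expected a 6-digit stock code, got "${code}"`);
  }
  return `https://finance.naver.com/item/main.naver?code=${code}`;
}

console.log(buildStockUrl("005930"));
```

Keeping the code as a string matters: converting "005930" to a number would drop the leading zeros.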

In Cursor/Claude Code:

Get the top searched stocks from Naver Finance

The tool will return:

In Cursor/Claude Code:

Get detailed information for Samsung Electronics (stock code: 005930)

The tool will return:

In Cursor/Claude Code:

First, show me the top searched stocks.

Then, fetch detailed information for the top 3 stocks.

Analyze which stocks show the most significant price changes.
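In this workflow the assistant does the analysis itself, but the last step amounts to ranking stocks by the size of their change rate. A minimal sketch of that ranking, assuming change rates formatted like the example responses ("+2.50%"), is shown below. The helper names are hypothetical, and all sample rows except Samsung Electronics are made-up placeholders.

```typescript
interface StockQuote {
  code: string;
  name: string;
  changeRate: string; // e.g. "+2.50%"
}

// Converts "+2.50%" or "-1.20%" to a number; returns NaN for unparseable input.
function parseChangeRate(rate: string): number {
  return Number.parseFloat(rate.replace(/[%,\s]/g, ""));
}

// Returns the `limit` stocks with the largest absolute change, without mutating the input.
function rankByAbsoluteChange(stocks: StockQuote[], limit = 3): StockQuote[] {
  return stocks
    .filter((s) => !Number.isNaN(parseChangeRate(s.changeRate)))
    .sort(
      (a, b) =>
        Math.abs(parseChangeRate(b.changeRate)) - Math.abs(parseChangeRate(a.changeRate)),
    )
    .slice(0, limit);
}

const sample: StockQuote[] = [
  { code: "005930", name: "삼성전자", changeRate: "+2.50%" }, // from the example response
  { code: "000001", name: "Sample A", changeRate: "-4.10%" }, // made-up placeholder
  { code: "000002", name: "Sample B", changeRate: "+0.30%" }, // made-up placeholder
];

// Ranks "000001" first (largest move), then "005930".
console.log(rankByAbsoluteChange(sample, 2).map((s) => s.code));
```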

You can also use the crawlers directly in your Node.js applications:

import { ExampleCrawler } from './src/crawlers/exampleCrawler.js';

const crawler = new ExampleCrawler({

timeout: 10000,

retries: 3,

});

const result = await crawler.crawl('https://example.com');

console.log(result);
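The `retries` option suggests that failed requests are attempted again, but the retry logic in `utils/request.ts` is not shown here. The following is a generic sketch of retrying with exponential backoff, not the package's implementation. `withRetry`, `baseDelayMs`, and the reading of `retries` as "additional attempts after the first failure" are all assumptions.

```typescript
interface RetryOptions {
  retries: number; // additional attempts after the first failure (assumed meaning)
  baseDelayMs: number; // delay before the first retry; doubled on each further retry
}

async function withRetry<T>(task: () => Promise<T>, options: RetryOptions): Promise<T> {
  let lastError: unknown;
  for (let attempt = 0; attempt <= options.retries; attempt++) {
    try {
      return await task();
    } catch (error) {
      lastError = error;
      if (attempt < options.retries) {
        // Exponential backoff: baseDelayMs, 2x, 4x, ...
        const delay = options.baseDelayMs * 2 ** attempt;
        await new Promise<void>((resolve) => setTimeout(resolve, delay));
      }
    }
  }
  throw lastError;
}

// Demo: a task that fails twice before succeeding.
let attempts = 0;
withRetry(
  async () => {
    attempts++;
    if (attempts < 3) throw new Error(`attempt ${attempts} failed`);
    return `succeeded after ${attempts} attempts`;
  },
  { retries: 3, baseDelayMs: 100 },
).then(console.log);
```

In a real crawler the `task` would wrap the HTTP request, and it is usually worth retrying only on transient failures such as timeouts or 5xx responses.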

import { BaseCrawler } from './src/crawlers/baseCrawler.js';

import { CrawlResult } from './src/types/index.js';

class MyCrawler extends BaseCrawler {

async crawl(url: string): Promise<CrawlResult> {

const html = await this.fetchHtml(url);

const parser = this.parseHtml(html);

const data = parser.parseStructure('div.item', {

title: 'h2',

price: 'span.price',

});

return {

url,

data,

timestamp: new Date(),

statusCode: 200,

};

}

}

import { HtmlParser } from './src/utils/parser.js';

const html = '<h1>Hello</h1><p>World</p>';

const parser = new HtmlParser(html);

// Get text from elements

const title = parser.getFirstText('h1');

console.log(title); // "Hello"

// Get attributes

const links = parser.getAttributes('a[href]', 'href');

// Parse structured data

const items = parser.parseStructure('div.item', {

name: 'h2',

description: 'p',

});

axios: HTTP client library

cheerio: jQuery-like HTML parsing

typescript: TypeScript compiler

vitest: Unit testing framework

@typescript-eslint/*: TypeScript linting

eslint: Code linting

prettier: Code formatting

tsx: TypeScript executor

The project includes comprehensive tests at the unit, integration, and E2E levels.

Run tests with:

pnpm test # Run all tests

pnpm test:ui # Interactive UI

pnpm test:coverage # With coverage report

docker build -t naver-finance-crawl-mcp .

With STDIO transport:

docker run -i --rm naver-finance-crawl-mcp

With HTTP transport:

docker run -d -p 5000:5000 \

--name naver-finance \

naver-finance-crawl-mcp \

node dist/mcp-server.js --transport http --port 5000

Create a docker-compose.yml:

version: '3.8'
services:
  naver-finance-crawl-mcp:
    build: .
    ports:
      - "5000:5000"
    environment:
      - PORT=5000
      - NODE_ENV=production
    command: ["node", "dist/mcp-server.js", "--transport", "http", "--port", "5000"]
    restart: unless-stopped
    healthcheck:
      test: ["CMD", "node", "-e", "require('http').get('http://localhost:5000/mcp', (r) => {process.exit(r.statusCode === 200 ? 0 : 1)})"]
      interval: 30s
      timeout: 3s
      retries: 3
      start_period: 5s

Run with Docker Compose:

docker-compose up -d

Configure your MCP client to use the Docker container:

{

"mcpServers": {

"naver-finance": {

"command": "docker",

"args": [

"run",

"-i",

"--rm",

"naver-finance-crawl-mcp"

]

}

}

}

The project follows a modular architecture:

baseCrawler.ts: Base class for all crawlers

naverFinanceCrawler.ts: Naver Finance stock detail crawler

TopStocksCrawler.ts: Top searched stocks crawler

exampleCrawler.ts: Example crawler implementation

crawl-top-stocks.ts: MCP tool for top stocks

crawl-stock-detail.ts: MCP tool for stock details

request.ts: HTTP client with retry logic

parser.ts: HTML parsing helpers

Contributions are welcome! Please feel free to submit a Pull Request.

Author: greatsumini

License: MIT
