whisper-stream-js

CLI tool for real-time audio transcription using OpenAI's Whisper API

This repository is a Node.js port of the original Whisper Stream Speech-to-Text Transcriber by Yoichiro Hasebe.

This is a Node.js script that utilizes the OpenAI Whisper API to transcribe continuous voice input into text. It uses SoX for audio recording and includes a built-in feature that detects silence between speech segments.

The script is designed to convert voice audio into text each time the system identifies a specified duration of silence. This enables the Whisper API to function as if it were capable of real-time speech-to-text conversion. It is also possible to specify the audio file to be converted by Whisper.
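The silence-triggered segmentation described above maps naturally onto the `silence` effect in SoX. The following is a minimal sketch of how such a recording command could be assembled; it is an assumption for illustration, not the script's actual code. The helper name `buildRecArgs`, its parameter names, and the output file name are hypothetical.

```javascript
// Hypothetical helper (not part of whisper-stream): builds an argument list
// for SoX's `rec` command so that recording starts once the input rises above
// the volume threshold and stops after a given length of silence.
function buildRecArgs({ volume = '1%', silence = 1.5, duration = 0, outFile = 'segment.wav' } = {}) {
  // -q: quiet, -c 1: record a single (mono) channel into outFile
  const args = ['-q', '-c', '1', outFile];

  // A duration of 0 is treated as "continuous", so `trim` is only added
  // when an explicit limit in seconds is requested.
  if (duration > 0) {
    args.push('trim', '0', String(duration));
  }

  // silence <above-periods> <duration> <threshold> <below-periods> <duration> <threshold>
  // Start after 0.1 s above the threshold; stop after `silence` seconds below it.
  args.push('silence', '1', '0.1', volume, '1', String(silence), volume);
  return args;
}

console.log(['rec', ...buildRecArgs({ volume: '2%', silence: 2 })].join(' '));
```

Each time `rec` exits, the resulting segment could be sent for transcription and a new recording started, which is what makes the batch API feel close to real time.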

After transcription, the text is automatically copied to your system's clipboard for immediate use. It can also be saved in a specified directory as a text file.
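As a rough illustration of the clipboard step, the sketch below picks a platform-specific clipboard command and writes text to its standard input. The function names are hypothetical; `xclip` appears in the dependency list for Linux, while the use of `pbcopy` on macOS is an assumption rather than something this README states.

```javascript
const { spawnSync } = require('node:child_process');

// Hypothetical helper: choose a clipboard command for the given platform.
// `pbcopy` on macOS is an assumption; `xclip` is the documented Linux dependency.
function clipboardCommand(platform = process.platform) {
  if (platform === 'darwin') return { cmd: 'pbcopy', args: [] };
  if (platform === 'linux') return { cmd: 'xclip', args: ['-selection', 'clipboard'] };
  return null; // other platforms are not covered by this sketch
}

// Writes `text` to the clipboard command's stdin; returns true on success.
function copyToClipboard(text, platform = process.platform) {
  const target = clipboardCommand(platform);
  if (!target) return false;
  const result = spawnSync(target.cmd, target.args, { input: text });
  return result.status === 0;
}
```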

Make sure you have Node.js and npm installed on your system. You also need the following dependencies:

sox - For audio recording and processing
xclip and optionally alsa-utils

On macOS:

brew install sox

On Debian-based Linux distributions:

sudo apt-get install sox xclip alsa-utils

You can install the CLI tool globally using npm:

npm install -g whisper-stream

This will make the whisper-stream command available globally on your system.

If you prefer to install from source:

npm install

npm link

The CLI tool depends on the following packages:

If you installed the package globally, you can use the CLI command:

whisper-stream [options]

If you installed from source, you can start the script with:

node whisper-stream.js [options]

Or if you've set up the script to be executable:

./whisper-stream.js [options]

The available options are:

-v, --volume <value>: Set the minimum volume threshold (default: 1%)
-s, --silence <value>: Set the minimum silence length (default: 1.5)
-o, --oneshot: Enable one-shot mode
-d, --duration <value>: Set the recording duration in seconds (default: 0, continuous)
-t, --token <value>: Set the OpenAI API token
-p, --path <value>: Set the output directory path to create the transcription file
-g, --granularities <value>: Set the timestamp granularities (segment or word)
-r, --prompt <value>: Set the prompt for the API call
-l, --language <value>: Set the input language in ISO-639-1 format
-f, --file <value>: Set the audio file to be transcribed
-tr, --translate: Translate the transcribed text to English
-p2, --pipe-to <cmd>: Pipe the transcribed text to the specified command (e.g., 'wc -w')
-q, --quiet: Suppress the banner and settings
-V, --version: Show the version number
-h, --help: Display the help message

Here are some usage examples with a brief comment on each. If you installed from source, replace whisper-stream with node whisper-stream.js in these examples.

> whisper-stream

This will start the tool with the default settings, recording audio continuously and transcribing it into text using the default volume threshold and silence length. If the OpenAI API token is not provided as an argument, it will automatically use the value of the OPENAI_API_KEY environment variable if it is set.
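The token fallback described above can be sketched as follows. The function name `resolveToken` is hypothetical, and throwing an error when no token is available is an assumption about reasonable behavior, not a description of what the tool actually does.

```javascript
// Hypothetical helper: prefer the token passed with -t, otherwise fall back
// to the OPENAI_API_KEY environment variable.
function resolveToken(cliToken, env = process.env) {
  const token = cliToken || env.OPENAI_API_KEY;
  if (!token) {
    // Assumption: failing early is preferable to sending an unauthenticated request.
    throw new Error('No OpenAI API token: pass -t <token> or set OPENAI_API_KEY');
  }
  return token;
}
```

Passing `env` as a parameter keeps the function easy to exercise without touching the real environment.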

> whisper-stream -l ja

This will start the tool with the input language specified as Japanese; see the Wikipedia page for ISO-639-1 language codes.

> whisper-stream -tr

This transcribes spoken audio in any supported language and outputs the text translated into English. Currently, English is the only supported target language for translation.

> whisper-stream -v 2% -s 2 -o -d 60 -t your_openai_api_token

This example sets the minimum volume threshold to 2%, the minimum silence length to 2 seconds, enables one-shot mode, sets the recording duration to 60 seconds, and specifies the OpenAI API token.

> whisper-stream -f ~/Desktop/interview.mp3 -p ~/Desktop/transcripts -l en

This will transcribe the audio file located at ~/Desktop/interview.mp3. The input language is specified as English. The output directory is set to ~/Desktop/transcripts, where a transcription text file will be created.

> whisper-stream -p2 'wc -w'

This will start the tool with the default settings for recording audio and transcribing it. After transcription, the transcribed text will be piped to the wc -w command, which counts the number of words in the text. The result, indicating the total word count, will be printed below the original transcribed output.
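To illustrate the piping behavior, here is a minimal sketch that feeds a string to a shell command through its standard input and returns whatever the command prints. The helper name `pipeTo` is hypothetical, and running the command through `sh -c` is an assumption; the tool itself may do this differently.

```javascript
const { spawnSync } = require('node:child_process');

// Hypothetical helper: run `command` in a shell, write `text` to its stdin,
// and return its stdout as a string.
function pipeTo(text, command) {
  const result = spawnSync('sh', ['-c', command], { input: text, encoding: 'utf8' });
  if (result.error) throw result.error; // e.g. the shell could not be started
  return result.stdout;
}

// Counts the words of a sample transcript, as `-p2 'wc -w'` would.
console.log(pipeTo('hello from whisper stream', 'wc -w').trim());
```

Because the command string is handed to a shell, it should only ever come from the user running the tool, never from untrusted input.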

> whisper-stream -g segment -p ~/Desktop

The -g option sets the timestamp granularity. The available modes are segment and word; specifying either displays detailed transcript data in JSON format. When used together with the -p option to specify a directory, the results are saved as a JSON file. For more information, see the timestamp_granularities[] section in the OpenAI Whisper API reference.
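As a sketch of how the -g, -l, and -r options could translate into an API request, the code below builds the multipart form fields for the OpenAI transcription endpoint. The function name `buildTranscriptionForm`, the file name, and the choice of the `whisper-1` model are assumptions for illustration; this is not the tool's actual request code. It relies on the global FormData and Blob available in Node.js 18 and later.

```javascript
// Hypothetical helper: assemble the form fields for a transcription request.
function buildTranscriptionForm(audio, { granularity, language, prompt } = {}) {
  const form = new FormData();
  form.append('file', audio, 'audio.wav');
  form.append('model', 'whisper-1'); // assumed model name
  if (language) form.append('language', language); // ISO-639-1 code, e.g. 'ja'
  if (prompt) form.append('prompt', prompt);
  if (granularity) {
    // The API only returns timestamp granularities with the verbose_json format.
    form.append('response_format', 'verbose_json');
    form.append('timestamp_granularities[]', granularity); // 'segment' or 'word'
  }
  return form;
}

const form = buildTranscriptionForm(new Blob(['placeholder audio bytes']), {
  granularity: 'word',
  language: 'en',
});
console.log(form.get('response_format'), form.getAll('timestamp_granularities[]'));
```

The resulting form could then be sent with `fetch` as the body of a POST request to the transcription endpoint, with the API token in an `Authorization: Bearer` header.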

Restrictions such as the supported languages, the accepted audio file types, and the maximum amount of data that can be converted at one time are determined by the Whisper API. Please refer to the Whisper API FAQ.

This software is distributed under the MIT License.

Did you know?

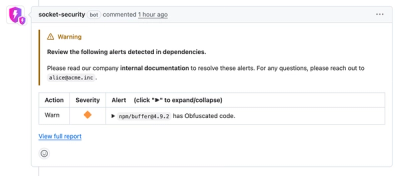

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.