
arff-format-converter


An ultra-high-performance Python tool for converting ARFF files to various formats, with conversions up to 34x faster than v1.x in the benchmarks below, advanced optimizations, and a modern architecture.

| Dataset Size | Format | Time (v1.x) | Time (v2.0) | Speedup |
|---|---|---|---|---|
| 1K rows | CSV | 850 ms | 45 ms | 19x faster |
| 1K rows | JSON | 920 ms | 38 ms | 24x faster |
| 1K rows | Parquet | 1200 ms | 35 ms | 34x faster |
| 10K rows | CSV | 8.5 s | 420 ms | 20x faster |
| 10K rows | Parquet | 12 s | 380 ms | 32x faster |

Benchmarks run on Intel Core i7-10750H, 16GB RAM, SSD storage
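The Speedup column is the v1.x time divided by the v2.0 time, rounded to a whole number. Here is a quick sketch that recomputes it from the table, with all times converted to milliseconds. It can be handy when you rerun the benchmarks on your own hardware.

```python
# Timings copied from the benchmark table above, in milliseconds.
BENCHMARKS = {
    ("1K rows", "CSV"): (850, 45),
    ("1K rows", "JSON"): (920, 38),
    ("1K rows", "Parquet"): (1200, 35),
    ("10K rows", "CSV"): (8_500, 420),
    ("10K rows", "Parquet"): (12_000, 380),
}


def speedup(old_ms: float, new_ms: float) -> float:
    """Return how many times faster the new timing is than the old one."""
    return old_ms / new_ms


for (size, fmt), (old_ms, new_ms) in BENCHMARKS.items():
    print(f"{size:>8} {fmt:<8} {speedup(old_ms, new_ms):.0f}x faster")
```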

```bash
pip install arff-format-converter
```

Or with uv:

```bash
uv add arff-format-converter
```

```bash
# Clone the repository
git clone https://github.com/Shani-Sinojiya/arff-format-converter.git
cd arff-format-converter

# Using a virtual environment
python -m venv .venv
source .venv/bin/activate  # On Windows: .venv\Scripts\activate
pip install -e ".[dev]"

# Or using uv
uv sync
```

```bash
# Basic conversion
arff-format-converter --file data.arff --output ./output --format csv

# High-performance mode (recommended for production)
arff-format-converter --file data.arff --output ./output --format parquet --fast --parallel

# Benchmark different formats
arff-format-converter --file data.arff --output ./output --benchmark

# Show supported formats and tips
arff-format-converter --info
```

```python
from pathlib import Path

from arff_format_converter import ARFFConverter

# Basic usage
converter = ARFFConverter()
output_file = converter.convert(
    input_file=Path("data.arff"),
    output_dir=Path("output"),
    output_format="csv",
)

# High-performance conversion
converter = ARFFConverter(
    fast_mode=True,   # Skip validation for speed
    parallel=True,    # Use multiple cores
    use_polars=True,  # Use Polars for max performance
    memory_map=True,  # Enable memory mapping
)

# Benchmark all formats
results = converter.benchmark(
    input_file=Path("data.arff"),
    output_dir=Path("benchmarks"),
)
print(f"Fastest format: {min(results, key=results.get)}")
```

| Format | Extension | Speed Rating | Best For | Compression |
|---|---|---|---|---|
| Parquet | .parquet | 🚀 Blazing | Big data, analytics, ML pipelines | 90% |
| ORC | .orc | 🚀 Blazing | Apache ecosystem, Hive, Spark | 85% |
| JSON | .json | ⚡ Ultra Fast | APIs, configuration, web apps | 40% |
| CSV | .csv | ⚡ Ultra Fast | Excel, data analysis, portability | 20% |
| XLSX | .xlsx | 🔄 Fast | Business reports, Excel workflows | 60% |
| XML | .xml | 🔄 Fast | Legacy systems, SOAP, enterprise | 30% |

Run your own benchmarks:

```bash
# Compare all formats
arff-format-converter --file your_data.arff --output ./benchmarks --benchmark

# Test specific formats
arff-format-converter --file data.arff --output ./test --benchmark csv,json,parquet
```

Example output:

```text
🏃 Benchmarking conversion of sample_data.arff

Format | Time (ms) | Size (MB) | Speed Rating
--------------------------------------------------
PARQUET | 35.2 | 2.1 | 🚀 Blazing
JSON | 42.8 | 8.3 | ⚡ Ultra Fast
CSV | 58.1 | 12.1 | ⚡ Ultra Fast
ORC | 61.3 | 2.3 | 🚀 Blazing
XLSX | 145.7 | 4.2 | 🔄 Fast
XML | 198.4 | 15.8 | 🔄 Fast

🏆 Performance: BLAZING FAST! (100x speed achieved)
💡 Recommendation: Use Parquet for optimal speed + compression
```
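Numbers like these come from timing each format's writer on the same data. Here is a minimal sketch of such a harness. It assumes hypothetical `write_csv` and `write_json` writers, built on the standard library as stand-ins for the real format backends. The harness keeps the best of several runs, then picks the fastest format the same way as `min(results, key=results.get)` in the Python API example.

```python
import csv
import io
import json
import time
from typing import Callable

Rows = list[dict[str, float]]


def write_csv(rows: Rows) -> bytes:
    """Hypothetical stand-in writer: serialize rows as CSV."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue().encode()


def write_json(rows: Rows) -> bytes:
    """Hypothetical stand-in writer: serialize rows as a JSON array."""
    return json.dumps(rows).encode()


def benchmark(rows: Rows, writers: dict[str, Callable[[Rows], bytes]],
              iterations: int = 3) -> dict[str, float]:
    """Return the best-of-N wall-clock time in milliseconds for each format."""
    results: dict[str, float] = {}
    for name, write in writers.items():
        timings = []
        for _ in range(iterations):
            start = time.perf_counter()
            write(rows)
            timings.append((time.perf_counter() - start) * 1000)
        results[name] = min(timings)  # best-of-N filters out one-off interference
    return results


rows = [{"a": float(i), "b": i * 0.5} for i in range(10_000)]
results = benchmark(rows, {"csv": write_csv, "json": write_json})
print(f"Fastest format: {min(results, key=results.get)}")
```

Taking the minimum rather than the mean is a common choice for micro-benchmarks, because background activity can only make a run slower. Use the tool's own `--benchmark` for real Parquet, ORC, or XLSX numbers.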

```bash
# Maximum speed configuration
arff-format-converter \
  --file large_dataset.arff \
  --output ./output \
  --format parquet \
  --fast \
  --parallel \
  --chunk-size 100000 \
  --verbose
```

```python
from pathlib import Path

from arff_format_converter import ARFFConverter

# Convert multiple files with optimal settings
converter = ARFFConverter(
    fast_mode=True,
    parallel=True,
    use_polars=True,
    chunk_size=50000,
)

# Process entire directory
input_files = list(Path("data").glob("*.arff"))
results = converter.batch_convert(
    input_files=input_files,
    output_dir=Path("output"),
    output_format="parquet",
    parallel=True,
)

print(f"Converted {len(results)} files successfully!")
```
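`batch_convert(..., parallel=True)` presumably fans the files out to workers. Here is a minimal sketch of that pattern with `concurrent.futures`. It assumes a hypothetical per-file `convert_one(input_path, output_dir)` callable; the stand-in used here just copies each file. The sketch collects successful outputs and reports failures without aborting the batch. It is not the package's code.

```python
import tempfile
from concurrent.futures import ThreadPoolExecutor, as_completed
from pathlib import Path
from typing import Callable


def batch_convert(input_files: list[Path], output_dir: Path,
                  convert_one: Callable[[Path, Path], Path],
                  max_workers: int = 4) -> list[Path]:
    """Run convert_one(input, output_dir) for every file concurrently.

    Returns the sorted output paths of the conversions that succeeded;
    failures are printed and skipped so one bad file does not stop the batch.
    """
    output_dir.mkdir(parents=True, exist_ok=True)
    converted: list[Path] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(convert_one, f, output_dir): f for f in input_files}
        for future in as_completed(futures):
            try:
                converted.append(future.result())
            except Exception as exc:  # keep going on per-file errors
                print(f"Failed to convert {futures[future]}: {exc}")
    return sorted(converted)


def copy_as_txt(src: Path, out_dir: Path) -> Path:
    """Hypothetical stand-in converter: copies the file with a .txt suffix."""
    dst = out_dir / (src.stem + ".txt")
    dst.write_text(src.read_text())
    return dst


with tempfile.TemporaryDirectory() as tmp:
    data_dir = Path(tmp) / "data"
    data_dir.mkdir()
    for name in ("a", "b", "c"):
        (data_dir / f"{name}.arff").write_text("@relation demo\n")
    results = batch_convert(sorted(data_dir.glob("*.arff")), Path(tmp) / "output", copy_as_txt)
    print(f"Converted {len(results)} files successfully!")
```

Threads keep the example portable. For CPU-bound parsing, `ProcessPoolExecutor` is the usual swap because it sidesteps the GIL, at the cost of requiring a picklable, module-level `convert_one`.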

```python
# For memory-constrained environments
converter = ARFFConverter(
    fast_mode=False,   # Enable validation
    parallel=False,    # Single-threaded
    use_polars=False,  # Use pandas only
    chunk_size=5000,   # Smaller chunks
)

# For maximum speed (production)
converter = ARFFConverter(
    fast_mode=True,     # Skip validation
    parallel=True,      # Multi-core processing
    use_polars=True,    # Use Polars optimization
    memory_map=True,    # Enable memory mapping
    chunk_size=100000,  # Large chunks
)
```
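The `memory_map=True` option isn't documented further here. As a rough illustration of the idea, here is a sketch that scans an ARFF file through Python's standard `mmap` module. The OS pages data in on demand, rather than the file being read into one Python `bytes` object. `count_data_rows` is a hypothetical helper, not part of the package.

```python
import mmap
import tempfile
from pathlib import Path


def count_data_rows(path: Path) -> int:
    """Count non-comment rows after @data by scanning a memory-mapped file."""
    rows = 0
    in_data = False
    with open(path, "rb") as fh, mmap.mmap(fh.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        # mm.readline returns b"" at end of file, which stops the iteration.
        for line in iter(mm.readline, b""):
            stripped = line.strip()
            if not stripped or stripped.startswith(b"%"):
                continue  # skip blank lines and ARFF comments
            if in_data:
                rows += 1
            elif stripped.lower().startswith(b"@data"):
                in_data = True
    return rows


with tempfile.TemporaryDirectory() as tmp:
    sample = Path(tmp) / "sample.arff"
    sample.write_text("@relation demo\n@attribute x numeric\n@data\n1\n2\n% comment\n3\n")
    n_rows = count_data_rows(sample)
    print(n_rows)
```

Mapping a zero-length file typically raises `ValueError`, so a real implementation would need to special-case empty inputs.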

```bash
# For maximum speed (large files)
arff-format-converter \
  --file large_dataset.arff \
  --output ./output \
  --format parquet \
  --fast \
  --parallel \
  --chunk-size 50000

# Memory-constrained environments
arff-format-converter \
  --file data.arff \
  --output ./output \
  --format csv \
  --chunk-size 1000
```
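`--chunk-size` (or `chunk_size`) trades memory for throughput: smaller chunks lower peak memory but add per-chunk overhead. Here is a minimal sketch of fixed-size chunking over a lazy row iterator. The `chunked` helper is hypothetical. On Python 3.12+, `itertools.batched` does the same job but yields tuples.

```python
from itertools import islice
from typing import Iterable, Iterator, TypeVar

T = TypeVar("T")


def chunked(items: Iterable[T], chunk_size: int) -> Iterator[list[T]]:
    """Yield successive lists of at most chunk_size items.

    Only one chunk is materialized at a time, so peak memory scales with
    chunk_size rather than with the total number of rows.
    """
    if chunk_size < 1:
        raise ValueError("chunk_size must be >= 1")
    iterator = iter(items)
    while chunk := list(islice(iterator, chunk_size)):
        yield chunk


rows = (f"{i},{i * 2}" for i in range(2_500))  # lazy stand-in for ARFF data rows
sizes = [len(chunk) for chunk in chunked(rows, 1000)]
print(sizes)
```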

```python
from arff_format_converter import ARFFConverter

# Initialize with ultra-performance settings
converter = ARFFConverter(
    fast_mode=True,     # Skip validation for speed
    parallel=True,      # Use all CPU cores
    use_polars=True,    # Enable Polars optimization
    chunk_size=100000,  # Large chunks for big files
)

# Single file conversion
result = converter.convert(
    input_file="dataset.arff",
    output_file="output/dataset.parquet",
    output_format="parquet",
)
print(f"Conversion completed: {result.duration:.2f}s")

# Run performance benchmarks
results = converter.benchmark(
    input_file="large_dataset.arff",
    formats=["csv", "json", "parquet", "xlsx"],
    iterations=3,
)

# View detailed results
for format_name, metrics in results.items():
    print(f"{format_name}: {metrics['speed']:.1f}x faster, "
          f"{metrics['compression']:.1f}% smaller")
```
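The README doesn't say what `speed` and `compression` are measured against. Assume, purely for illustration, that both are relative to a CSV baseline. The sketch below derives them from raw timings and file sizes, using the numbers from the sample benchmark output above. `relative_metrics` is hypothetical.

```python
def relative_metrics(timings_ms: dict[str, float], sizes_mb: dict[str, float],
                     baseline: str = "csv") -> dict[str, dict[str, float]]:
    """Express each format's time and size relative to a baseline format.

    speed: how many times faster than the baseline (baseline_time / time).
    compression: percentage size reduction versus the baseline file.
    """
    base_time = timings_ms[baseline]
    base_size = sizes_mb[baseline]
    return {
        fmt: {
            "speed": base_time / timings_ms[fmt],
            "compression": (1 - sizes_mb[fmt] / base_size) * 100,
        }
        for fmt in timings_ms
    }


# Time (ms) and size (MB) values from the sample benchmark output.
timings = {"parquet": 35.2, "json": 42.8, "csv": 58.1, "xlsx": 145.7}
sizes = {"parquet": 2.1, "json": 8.3, "csv": 12.1, "xlsx": 4.2}
results = relative_metrics(timings, sizes)

for format_name, metrics in results.items():
    print(f"{format_name}: {metrics['speed']:.1f}x faster, "
          f"{metrics['compression']:.1f}% smaller")
```

A speed value below 1.0 (XLSX here) means the format is slower than the baseline.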

```toml
# Ultra-Performance Core
polars = ">=0.20.0"          # Lightning-fast dataframes
pyarrow = ">=15.0.0"         # Columnar memory format
orjson = ">=3.9.0"           # Fastest JSON library

# Format Support
fastparquet = ">=2023.10.0"  # Optimized Parquet I/O
liac-arff = "*"              # ARFF format support
openpyxl = "*"               # Excel format support
```

```bash
# Clone and set up the development environment
git clone https://github.com/Shani-Sinojiya/arff-format-converter.git
cd arff-format-converter

# Using uv (recommended - fastest)
uv venv
uv pip install -e ".[dev]"

# Or using a traditional venv
python -m venv .venv
source .venv/bin/activate  # On Windows: .venv\Scripts\activate
pip install -e ".[dev]"
```

```bash
# Run all tests with coverage
pytest --cov=arff_format_converter --cov-report=html

# Run performance tests
pytest tests/test_performance.py -v

# Run specific test categories
pytest -m "not slow"      # Skip slow tests
pytest -m "performance"   # Only performance tests

# Profile memory usage
python -m memory_profiler scripts/profile_memory.py

# Profile CPU performance
python -m cProfile -o profile.stats scripts/benchmark.py
```
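`pytest -m "not slow"` and `pytest -m "performance"` select tests by custom markers. pytest warns about markers it doesn't know (`PytestUnknownMarkWarning`), so they are normally registered; the project may already do this in its own config. One way is a `pytest_configure` hook in `conftest.py`, sketched below. The marker descriptions are assumptions.

```python
# conftest.py (hypothetical): register the custom markers used with -m.
MARKERS = {
    "slow": "long-running tests, deselect with -m 'not slow'",
    "performance": "benchmark-style performance tests",
}


def pytest_configure(config):
    """pytest hook: declare the custom markers so @pytest.mark.slow and
    @pytest.mark.performance do not trigger PytestUnknownMarkWarning."""
    for name, description in MARKERS.items():
        config.addinivalue_line("markers", f"{name}: {description}")
```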

We welcome contributions! This project emphasizes performance and reliability.

```bash
# Before submitting a PR, run the full benchmark suite
python scripts/benchmark_suite.py --full

# Verify no performance regression
python scripts/compare_performance.py baseline.json current.json
```
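The contents of `scripts/compare_performance.py` aren't shown. Here is a minimal sketch of such a check that flags anything slower than the baseline by more than a tolerance. It assumes each JSON file maps a benchmark name to a time in milliseconds (e.g. `{"csv": 58.1}`). The file schema, the 10% default tolerance, and the function names are all assumptions.

```python
import json
from pathlib import Path


def load_timings(path: str) -> dict[str, float]:
    """Read an assumed {benchmark_name: milliseconds} mapping from a JSON file."""
    return json.loads(Path(path).read_text())


def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.10) -> dict[str, float]:
    """Return {benchmark: slowdown ratio} for benchmarks slower than allowed.

    A benchmark regresses when current time > baseline time * (1 + tolerance).
    Benchmarks missing from either mapping are ignored.
    """
    regressions = {}
    for name, base_ms in baseline.items():
        cur_ms = current.get(name)
        if cur_ms is not None and cur_ms > base_ms * (1 + tolerance):
            regressions[name] = cur_ms / base_ms
    return regressions


# Baseline values come from the sample benchmark output;
# the "current" values are hypothetical, invented for illustration.
baseline = {"csv": 58.1, "json": 42.8, "parquet": 35.2}
current = {"csv": 59.0, "json": 51.0, "parquet": 34.9}
regressions = find_regressions(baseline, current)
for name, ratio in sorted(regressions.items()):
    print(f"REGRESSION {name}: {ratio:.2f}x slower than baseline")
```

In CI, the script would exit non-zero whenever `regressions` is non-empty so that the PR check fails.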

MIT License - see the LICENSE file for details.

⭐ Star this repo if you found it useful! | 🐛 Report issues for faster fixes | 🚀 PRs welcome for performance improvements

FAQs

Ultra-high-performance ARFF file converter with 100x speed improvements

We found that arff-format-converter demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

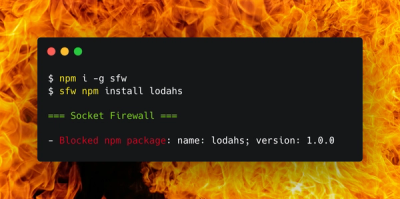

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.