chatting-with-pdfs

Load a folder of PDF files and ask questions about them via llama_index and GPT.

LlamaIndex (GPT Index) is a data framework for your LLM application.

It provides the following tools:

- Data connectors to ingest your existing data sources and formats (APIs, PDFs, docs, SQL, etc.).
- Ways to structure your data (indices, graphs) so that it can be easily used with LLMs.
- An advanced retrieval/query interface over your data: feed in any LLM input prompt and get back retrieved context and knowledge-augmented output.
- Easy integration with your outer application framework (e.g., LangChain, Flask, Docker, ChatGPT, or anything else).
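The retrieve-then-augment flow in the list above can be illustrated with a deliberately tiny, dependency-free sketch. This is not LlamaIndex's API: `chunk_text`, `retrieve` and `build_prompt` are hypothetical helpers, and word-overlap scoring stands in for the embedding similarity a real vector index would typically use.

```python
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split text into chunks of at most max_words words (hypothetical helper)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text: str) -> Counter:
    """Lower-cased word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(chunks: list[str], query: str, top_k: int = 2) -> list[str]:
    """Return up to top_k chunks sharing the most words with the query."""
    q = tokenize(query)
    scored = [(sum((tokenize(c) & q).values()), i, c) for i, c in enumerate(chunks)]
    # Highest overlap first; ties keep the original chunk order.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [c for score, _, c in scored[:top_k] if score > 0]


def build_prompt(context: list[str], question: str) -> str:
    """Assemble a knowledge-augmented prompt to send to an LLM."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


docs = [
    "Invoices are due within 30 days of receipt.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2 percent monthly fee.",
]
chunks = [c for d in docs for c in chunk_text(d)]
context = retrieve(chunks, "When are invoices due?")
print(build_prompt(context, "When are invoices due?"))
```

In a real LlamaIndex pipeline, data connectors, an index and a query engine take the place of these helpers, and the model's answer comes back as the knowledge-augmented output.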

LlamaIndex provides tools for both beginners and advanced users. Its high-level API lets beginners ingest and query their data in 5 lines of code, while its lower-level APIs let advanced users customize and extend any module (data connectors, indices, retrievers, query engines, reranking modules) to fit their needs.

load_index_from_storage loads an index from a StorageContext object. It takes a StorageContext and an optional index_id. If index_id is not specified, it assumes there is only one index in the index store and loads that one. It passes the index ID (if given) and any additional keyword arguments to load_indices_from_storage, which retrieves the corresponding index structs from the index store and builds a list of BaseGPTIndex objects from them; if index IDs are specified, only those indices are loaded. load_index_from_storage then expects exactly one index in that list and returns it.
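The control flow described above can be modeled in plain Python. This is a simplified sketch, not LlamaIndex's source: `InMemoryIndexStore`, `IndexStruct`, `Index`, `load_indices` and `load_index` are hypothetical stand-ins for the storage context's index store, the index structs, `BaseGPTIndex`, `load_indices_from_storage` and `load_index_from_storage`, and the `ValueError` checks are assumptions of this sketch.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Sequence


@dataclass
class IndexStruct:
    """Hypothetical stand-in for a persisted index struct."""
    index_id: str
    kind: str  # e.g. "vector" or "list"


@dataclass
class Index:
    """Hypothetical stand-in for a loaded index object (BaseGPTIndex)."""
    struct: IndexStruct
    options: Dict[str, Any] = field(default_factory=dict)


@dataclass
class InMemoryIndexStore:
    """Hypothetical index store mapping index IDs to index structs."""
    structs: Dict[str, IndexStruct] = field(default_factory=dict)

    def add(self, struct: IndexStruct) -> None:
        self.structs[struct.index_id] = struct


def load_indices(store: InMemoryIndexStore,
                 index_ids: Optional[Sequence[str]] = None,
                 **kwargs: Any) -> List[Index]:
    """Build one Index per stored struct, or only for the given IDs."""
    if index_ids is None:
        structs = list(store.structs.values())
    else:
        structs = []
        for index_id in index_ids:
            if index_id not in store.structs:
                raise ValueError(f"No index with ID {index_id!r}")
            structs.append(store.structs[index_id])
    # Extra keyword arguments are forwarded to every constructed index.
    return [Index(struct=s, options=dict(kwargs)) for s in structs]


def load_index(store: InMemoryIndexStore,
               index_id: Optional[str] = None,
               **kwargs: Any) -> Index:
    """Load a single index; with no ID, assume the store holds exactly one."""
    index_ids = None if index_id is None else [index_id]
    indices = load_indices(store, index_ids=index_ids, **kwargs)
    if len(indices) != 1:
        raise ValueError(f"Expected one index, found {len(indices)}; pass index_id.")
    return indices[0]


store = InMemoryIndexStore()
store.add(IndexStruct("pdfs", "vector"))
print(load_index(store).struct.index_id)  # -> pdfs
store.add(IndexStruct("notes", "list"))
print(load_index(store, index_id="notes").struct.kind)  # -> list
```

Once a second index is stored, calling `load_index(store)` without an ID fails in this model, which is why the single-index assumption matters when several indices share one store.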

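Install the dependencies:

```shell
pip install -r requirements.txt
```

Get an OpenAI API key if you don't have one already.

Run the script, passing the path to your PDF folder and your API key:

```shell
python3 chat_with_pdfs.py "<data_folder_path>" "<openai_api_key>"
```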

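Using the package's `ask_a_question` function from Python:

```python
import sys

from chat_pdf.chat_with_pdfs import ask_a_question

# Expected arguments: <data_folder_path> <openai_api_key>
folder_name = sys.argv[1]  # folder containing the PDF files
api_key = sys.argv[2]      # OpenAI API key

print(ask_a_question(folder_name, api_key))
```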
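The snippet above indexes `sys.argv` directly, so a missing argument fails with a bare `IndexError`. A more defensive entry point could validate its inputs before doing any work. The following is a hypothetical sketch, not part of the package: `find_pdfs`, `parse_args` and `main` are illustrative names, and it stops at the point where `ask_a_question` would be called, since that function's internals are not documented here.

```python
import argparse
from pathlib import Path
from typing import List, Optional, Sequence


def find_pdfs(folder: Path) -> List[Path]:
    """Return the PDF files directly inside folder, sorted by path (hypothetical helper)."""
    if not folder.is_dir():
        raise NotADirectoryError(f"{folder} is not a directory")
    return sorted(p for p in folder.iterdir()
                  if p.is_file() and p.suffix.lower() == ".pdf")


def parse_args(argv: Optional[Sequence[str]] = None) -> argparse.Namespace:
    """Parse the two positional arguments; argv=None means sys.argv[1:]."""
    parser = argparse.ArgumentParser(description="Ask questions about a folder of PDFs.")
    parser.add_argument("data_folder_path", type=Path, help="folder containing the PDF files")
    parser.add_argument("openai_api_key", help="OpenAI API key")
    return parser.parse_args(argv)


def main(argv: Optional[Sequence[str]] = None) -> int:
    """Validate inputs and return a process exit code."""
    args = parse_args(argv)
    pdfs = find_pdfs(args.data_folder_path)
    if not pdfs:
        print(f"No PDF files found in {args.data_folder_path}")
        return 1
    print(f"Found {len(pdfs)} PDF file(s) in {args.data_folder_path}")
    # Hypothetical hand-off point: this is where ask_a_question(...) would be called.
    return 0
```

Calling `main()` under an `if __name__ == "__main__":` guard and passing its return value to `sys.exit` would turn it into a command-line script with a usage message and a clear error for an empty folder.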

FAQs

Load PDF files and ask questions about them via llama_index and GPT.

We found that chatting-with-pdfs shows a healthy version release cadence and project activity: its last version was released less than a year ago. It has one open source maintainer.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Socket CEO Feross Aboukhadijeh discusses the recent npm supply chain attacks on PodRocket, covering novel attack vectors and how developers can protect themselves.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

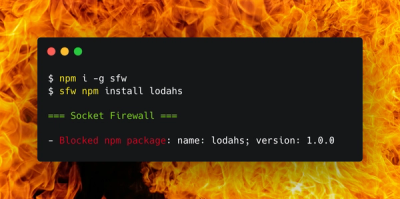

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.