Security News

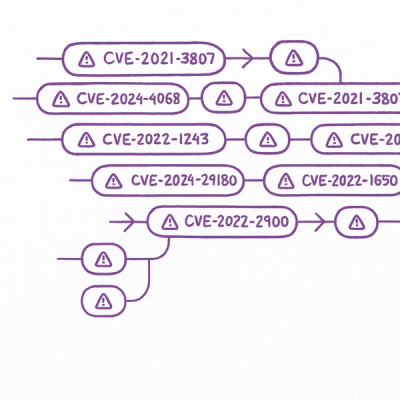

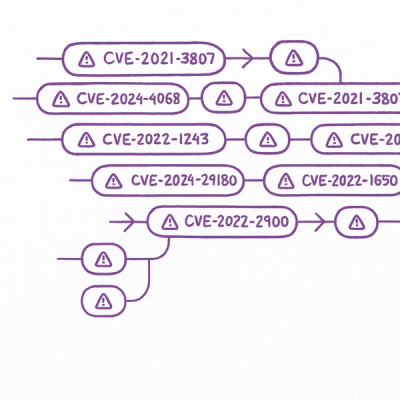

Static vs. Runtime Reachability: Insights from Latio’s On the Record Podcast

The Latio podcast explores how static and runtime reachability help teams prioritize exploitable vulnerabilities and streamline AppSec workflows.

A Python utility for scraping Cloudflare-protected websites using screenshot + OCR fallback

CloudflarePeek is a Python utility for scraping websites, including those protected by Cloudflare. When traditional scraping fails, it automatically falls back to taking a full-page screenshot and extracting the text with Google's Gemini OCR.
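The fallback behavior described above can be pictured with a small sketch. This is not the library's implementation; `scrape_with_fallback`, `fast_scrape`, and `ocr_scrape` are hypothetical names used only to illustrate the "try the cheap path first, then fall back" pattern.

```python
from typing import Callable, Optional


def scrape_with_fallback(
    url: str,
    fast_scrape: Callable[[str], Optional[str]],  # hypothetical plain HTTP scraper
    ocr_scrape: Callable[[str], str],             # hypothetical screenshot + OCR path
    force_ocr: bool = False,
) -> str:
    """Try the fast scraper first; use the OCR path if it fails or returns nothing."""
    if not force_ocr:
        try:
            text = fast_scrape(url)
            if text and text.strip():
                return text
        except Exception:
            # Any failure on the fast path simply triggers the fallback.
            pass
    return ocr_scrape(url)
```

Passing the two strategies in as callables keeps the decision logic testable without network access.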

# Install in development mode

pip install -e git+https://github.com/Talha-Ali-5365/CloudflarePeek.git#egg=cloudflare-peek

# Or clone and install locally

git clone https://github.com/Talha-Ali-5365/CloudflarePeek.git

cd CloudflarePeek

pip install -e .

playwright install chromium

export GEMINI_API_KEY="your-gemini-api-key-here"

from cloudflare_peek import peek

# Scrape any website - automatically handles Cloudflare

text = peek("https://example.com")

print(text)

from cloudflare_peek import peek, behind_cloudflare

# Check if a site is behind Cloudflare

if behind_cloudflare("https://example.com"):
    print("Site is protected by Cloudflare")

# Force OCR method (useful for dynamic content)

text = peek("https://example.com", force_ocr=True)

# Use with custom API key and timeout (5 minutes)

text = peek("https://example.com", api_key="your-gemini-key", timeout=300000)

CloudflarePeek also comes with a powerful command-line interface.

Scrape a website:

cloudflare-peek scrape https://example.com

Check if a site is behind Cloudflare:

cloudflare-peek check-cloudflare https://example.com

Save content to a file:

cloudflare-peek scrape https://example.com -o content.txt

Advanced options:

# Force OCR, run in non-headless mode, and set a 60s timeout

cloudflare-peek scrape https://example.com --force-ocr --no-headless --timeout 60

# See all commands and options

cloudflare-peek --help

cloudflare-peek scrape --help

# Required for OCR functionality

export GEMINI_API_KEY="your-gemini-api-key"
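Before starting a long scraping job, it can help to confirm that a key is actually available. The following is a minimal sketch, assuming the same precedence the API reference describes (an explicit `api_key` first, then the `GEMINI_API_KEY` environment variable); `resolve_gemini_key` is a hypothetical helper, not part of the package.

```python
import os
from typing import Mapping, Optional


def resolve_gemini_key(
    api_key: Optional[str] = None,
    env: Optional[Mapping[str, str]] = None,
) -> str:
    """Return the explicit key if given, else GEMINI_API_KEY from the environment."""
    env = os.environ if env is None else env
    key = (api_key or env.get("GEMINI_API_KEY", "")).strip()
    if not key:
        # Raising ValueError here is this sketch's choice, not documented behavior.
        raise ValueError("Gemini API key not found; set GEMINI_API_KEY or pass api_key")
    return key
```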

CloudflarePeek uses a default timeout of 2 minutes (120,000ms) for page loading during OCR extraction. You can customize this:

# Quick timeout (30 seconds) for fast sites

text = peek("https://example.com", timeout=30000)

# Extended timeout (5 minutes) for slow/complex sites

text = peek("https://example.com", timeout=300000)

# Very long timeout (10 minutes) for extremely slow sites

text = peek("https://example.com", timeout=600000)
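Because `timeout` is expressed in milliseconds, a small conversion helper can make call sites easier to read. This is a sketch only; `seconds_to_ms` is a hypothetical name and not part of the package.

```python
def seconds_to_ms(seconds: float) -> int:
    """Convert a duration in seconds to whole milliseconds."""
    if seconds < 0:
        raise ValueError("timeout must be non-negative")
    return int(round(seconds * 1000))


# Intended use (not executed here):
#   text = peek("https://example.com", timeout=seconds_to_ms(30))
```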

CloudflarePeek provides detailed progress information during scraping:

import logging

from cloudflare_peek import peek

# Enable detailed debug logging to see all steps

logging.getLogger('cloudflare_peek').setLevel(logging.DEBUG)

# You'll see progress like:

# 🎯 Starting CloudflarePeek for: https://example.com

# 🔍 Checking if https://example.com is behind Cloudflare...

# 🚀 No Cloudflare detected - attempting fast scraping...

# ✅ Fast scraping successful! (1234 characters extracted)

text = peek("https://example.com")
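Setting a logger's level does not by itself print DEBUG records: in the standard `logging` module, a handler must also be attached somewhere in the logger hierarchy. The sketch below assumes the library does not install its own handler; if it does, adding another one would duplicate each message. `enable_debug_logging` is a hypothetical helper name.

```python
import logging
import sys


def enable_debug_logging(logger_name: str = "cloudflare_peek") -> logging.Logger:
    """Attach a stderr handler to the named logger and set it to DEBUG."""
    handler = logging.StreamHandler(sys.stderr)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
    )
    logger = logging.getLogger(logger_name)
    logger.addHandler(handler)
    logger.setLevel(logging.DEBUG)
    return logger
```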

CloudflarePeek automatically handles asyncio event loop conflicts, so it also works in environments that already have an event loop running.

No need for nest_asyncio.apply() or other workarounds - it's all handled internally!
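One common way to call async code from a synchronous function, whether or not an event loop is already running, is sketched below. This is a general technique and not necessarily how CloudflarePeek does it; `run_coro_sync` is a hypothetical name.

```python
import asyncio
import concurrent.futures
from typing import Any, Awaitable, Callable


def run_coro_sync(coro_factory: Callable[[], Awaitable[Any]]) -> Any:
    """Run a coroutine to completion from synchronous code."""
    try:
        asyncio.get_running_loop()
    except RuntimeError:
        # No loop is running in this thread, so asyncio.run is safe.
        return asyncio.run(coro_factory())
    # A loop is already running here (for example in a notebook), so run the
    # coroutine on a fresh loop in a worker thread and wait for the result.
    with concurrent.futures.ThreadPoolExecutor(max_workers=1) as pool:
        return pool.submit(lambda: asyncio.run(coro_factory())).result()
```

Note that the second branch blocks the calling loop until the work finishes, which is acceptable for a simple synchronous API but not for latency-sensitive async code.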

peek(url, api_key=None, force_ocr=False, timeout=120000)

The main function that intelligently chooses between fast scraping and OCR extraction.

Parameters:

url (str): The URL to scrape

api_key (str, optional): Gemini API key (uses GEMINI_API_KEY env var if not provided)

force_ocr (bool): Skip fast scraping and use OCR method directly

timeout (int): Page load timeout in milliseconds for OCR method (default: 120000 = 2 minutes)

Returns: Extracted text content as string

behind_cloudflare(url)

Check if a website is protected by Cloudflare.

Parameters:

url (str): The URL to check

Returns: True if behind Cloudflare, False otherwise
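The README does not say how detection works. One plausible heuristic, shown here purely as an assumption, is to inspect response headers: responses served through Cloudflare typically carry a `CF-RAY` header and a `Server: cloudflare` value. `looks_like_cloudflare` is a hypothetical helper and may differ from what `behind_cloudflare` does internally.

```python
from typing import Mapping


def looks_like_cloudflare(headers: Mapping[str, str]) -> bool:
    """Heuristic check of HTTP response headers for Cloudflare markers."""
    # Header names are case-insensitive, so normalize before comparing.
    lowered = {name.lower(): value.lower() for name, value in headers.items()}
    return "cf-ray" in lowered or "cloudflare" in lowered.get("server", "")
```

Keeping the check as a pure function over a header mapping makes it usable with any HTTP client.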

from cloudflare_peek import peek

# Works with any website

websites = [
    "https://httpbin.org/html",
    "https://quotes.toscrape.com",
    "https://scrapethissite.com",
]

for url in websites:
    content = peek(url)
    print(f"Content from {url}:")
    print(content[:200] + "...")
    print("-" * 50)

import asyncio

from cloudflare_peek import peek

async def scrape_multiple(urls):
    results = {}
    # peek() is called synchronously here, so URLs are processed one at a time.
    for url in urls:
        try:
            content = peek(url)
            results[url] = content
            print(f"✅ Successfully scraped {url}")
        except Exception as e:
            print(f"❌ Failed to scrape {url}: {e}")
            results[url] = None
    return results

urls = ["https://example1.com", "https://example2.com"]
results = asyncio.run(scrape_multiple(urls))
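The example above scrapes URLs sequentially. If concurrency is wanted, one option is to push each blocking call onto a worker thread with `asyncio.to_thread` (Python 3.9+). This is a sketch under the assumption that the scraping function is safe to call from several threads at once, which the README does not state; `scrape_concurrently` and the `scrape` parameter are hypothetical.

```python
import asyncio
from typing import Callable, Dict, Iterable, Optional


async def scrape_concurrently(
    urls: Iterable[str],
    scrape: Callable[[str], str],  # e.g. peek, passed in by the caller
    max_concurrency: int = 3,
) -> Dict[str, Optional[str]]:
    """Run a blocking scrape function for several URLs with bounded concurrency."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def scrape_one(url: str):
        async with semaphore:
            try:
                return url, await asyncio.to_thread(scrape, url)
            except Exception:
                # Record failures as None, mirroring the sequential example.
                return url, None

    pairs = await asyncio.gather(*(scrape_one(url) for url in urls))
    return dict(pairs)
```

The semaphore caps how many pages are fetched at once, which also helps avoid overloading the target servers.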

from cloudflare_peek import peek

def safe_scrape(url):
    try:
        return peek(url)
    except ValueError as e:
        if "API key" in str(e):
            print("❌ Gemini API key not found. Please set GEMINI_API_KEY environment variable.")
        return None
    except Exception as e:
        print(f"❌ Scraping failed: {e}")
        return None

content = safe_scrape("https://example.com")
if content:
    print("Scraping successful!")

# Clone the repository

git clone https://github.com/your-username/CloudflarePeek.git

cd CloudflarePeek

# Install in development mode with dev dependencies

pip install -e ".[dev]"

# Install Playwright browsers

playwright install chromium

# Set up your API key

export GEMINI_API_KEY="your-key-here"

# Run tests

pytest

# Run tests with coverage

pytest --cov=cloudflare_peek

# Format code

black cloudflare_peek/

# Check types

mypy cloudflare_peek/

git checkout -b feature/amazing-feature

git commit -m 'Add amazing feature'

git push origin feature/amazing-feature

This project is licensed under the MIT License; see the LICENSE file for details.

This tool is for educational purposes only. The author is not responsible for any misuse or damage caused by this tool.

This tool is intended for legitimate web scraping purposes only. Always respect websites' robots.txt files and terms of service. Be mindful of rate limiting and don't overload servers with requests.
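As one way to act on the robots.txt advice, the standard library's `urllib.robotparser` can evaluate rules before a URL is scraped. The sketch below works on robots.txt text that has already been downloaded, so it stays offline; `allowed_by_robots` is a hypothetical helper and not part of the package.

```python
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Return True if the given robots.txt content permits fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```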

FAQs

We found that cloudflare-peek demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.
