Product

Introducing Socket Fix for Safe, Automated Dependency Upgrades

Automatically fix and test dependency updates with socket fix—a new CLI tool that turns CVE alerts into safe, automated upgrades.

collections-cache is a Python package for managing data collections across multiple SQLite databases. It allows efficient storage, retrieval, and updating of key-value pairs, supporting various data types serialized with pickle. The package uses parallel processing for fast access and manipulation of large collections.

collections-cache is a fast and scalable key–value caching solution built on top of SQLite. It allows you to store, update, and retrieve data using unique keys, and it supports complex Python data types (thanks to pickle). Designed to harness the power of multiple CPU cores, the library shards data across multiple SQLite databases, enabling impressive performance scaling.

pickle.multiprocessing and concurrent.futures modules to perform operations in parallel.Use Poetry to install and manage dependencies:

Clone the repository:

git clone https://github.com/Luiz-Trindade/collections_cache.git

cd collections-cache

Install the package with Poetry:

poetry install

Simply import and start using the main class, Collection_Cache, to interact with your collection:

from collections_cache import Collection_Cache

# Create a new collection named "STORE"

cache = Collection_Cache("STORE")

# Set a key-value pair

cache.set_key("products", ["apple", "orange", "onion"])

# Retrieve the value by key

products = cache.get_key("products")

print(products) # Output: ['apple', 'orange', 'onion']

For faster insertions, accumulate your data and use set_multi_keys:

from collections_cache import Collection_Cache

from random import uniform, randint

from time import time

cache = Collection_Cache("web_cache")

insertions = 100_000

data = {}

# Generate data

for i in range(insertions):

key = str(uniform(0.0, 100.0))

value = "some text :)" * randint(1, 100)

data[key] = value

# Bulk insert keys using multi-threaded execution

cache.set_multi_keys(data)

print(f"Inserted {len(cache.keys())} keys successfully!")

After optimizing SQLite settings (including setting synchronous = OFF), the library has shown a significant performance improvement. The insertion performance has been accelerated dramatically, allowing for much faster data insertions and better scalability.

For 100,000 insertions:

synchronous = OFF), reducing the total insertion time from 125 seconds to 15.02 seconds.With the optimized configuration, the library scales nearly linearly with the number of CPU cores. For example:

Note: Actual performance may vary depending on system architecture, disk I/O, and specific workload, but benchmarks indicate a substantial increase in insertion rate as the number of CPU cores increases.

set_key(key, value): Stores a key–value pair. Updates the value if the key already exists.set_multi_keys(key_and_value): (Experimental) Inserts multiple key–value pairs in parallel.get_key(key): Retrieves the value associated with a given key.delete_key(key): Removes a key and its corresponding value.keys(): Returns a list of all stored keys.export_to_json(): (Future feature) Exports your collection to a JSON file.To contribute or run tests:

Install development dependencies:

poetry install --dev

Run tests using:

poetry run pytest

Feel free to submit issues, pull requests, or feature suggestions. Your contributions help make collections-cache even better!

This project is licensed under the MIT License. See the LICENSE file for details.

Give collections-cache a try and let it power your high-performance caching needs! 🚀

FAQs

collections-cache is a Python package for managing data collections across multiple SQLite databases. It allows efficient storage, retrieval, and updating of key-value pairs, supporting various data types serialized with pickle. The package uses parallel processing for fast access and manipulation of large collections.

We found that collections-cache demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Automatically fix and test dependency updates with socket fix—a new CLI tool that turns CVE alerts into safe, automated upgrades.

Security News

CISA denies CVE funding issues amid backlash over a new CVE foundation formed by board members, raising concerns about transparency and program governance.

Product

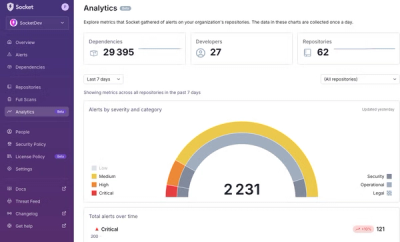

We’re excited to announce a powerful new capability in Socket: historical data and enhanced analytics.