dataoceanai-dolphin

Dolphin is a multilingual, multitask automatic speech recognition (ASR) model developed by Dataocean AI in collaboration with Tsinghua University. It supports 40 Eastern languages across East Asia, South Asia, Southeast Asia, and the Middle East, as well as 22 Chinese dialects. It is trained on over 210,000 hours of data drawn from both Dataocean AI's proprietary datasets and open-source datasets. Beyond speech recognition, the model can perform voice activity detection (VAD), segmentation, and language identification (LID).

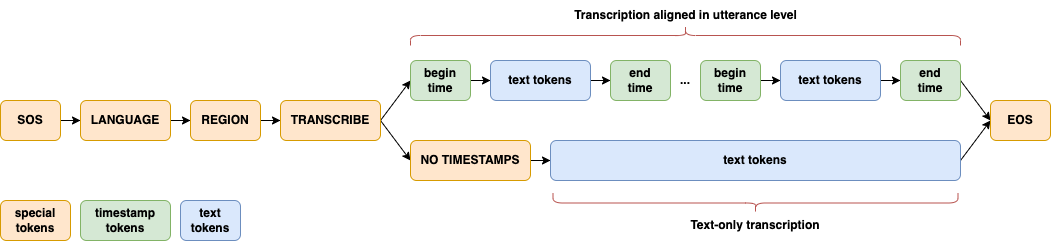

Dolphin largely follows the design approach of Whisper and OWSM. It adopts a joint CTC-Attention architecture, with an E-Branchformer encoder and a standard Transformer decoder. Because it focuses specifically on ASR, it introduces several key modifications: it does not support translation tasks, and it drops the use of previous text and its related tokens.
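For readers unfamiliar with joint CTC-Attention training: in the standard hybrid formulation (the objective used by ESPnet-style models such as OWSM), the shared encoder feeds both a CTC output layer and the attention decoder, and training minimizes an interpolation of the two losses with a weight $\lambda$. The equation below is that generic objective, shown for orientation only; the weight Dolphin actually uses is not stated here and should be checked in the paper.

$$
\mathcal{L} = \lambda \, \mathcal{L}_{\text{CTC}} + (1 - \lambda) \, \mathcal{L}_{\text{att}}, \qquad
\mathcal{L}_{\text{CTC}} = -\log P_{\text{CTC}}(y \mid x), \qquad
\mathcal{L}_{\text{att}} = -\sum_{t} \log P_{\text{att}}(y_t \mid y_{<t}, x)
$$

Here $x$ is the input speech, $y$ the target token sequence, and $0 \le \lambda \le 1$.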

A significant enhancement in Dolphin is a two-level language token system that better handles linguistic and regional diversity, especially in the Dataocean AI dataset. The first token specifies the language (e.g., `<zh>`, `<ja>`), while the second indicates the region (e.g., `<CN>`, `<JP>`). See the paper for details.
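To make the two-level scheme concrete, the sketch below composes a language token and a region token into an illustrative decoder prefix. Only the token style (`<zh>`, `<CN>`, and so on) comes from the description above; the `<sos>` and `<asr>` tokens, their order, and the helper names are hypothetical and may not match Dolphin's actual vocabulary or prompt layout.

```python
from typing import List

# Hypothetical special tokens used only for illustration; Dolphin's real
# vocabulary and prompt order may differ. Only the <lang>/<REGION> token
# style is taken from the description above.
SOS_TOKEN = "<sos>"
ASR_TASK_TOKEN = "<asr>"


def language_tokens(lang: str, region: str) -> List[str]:
    """Return the two-level token pair, e.g. ("zh", "CN") -> ["<zh>", "<CN>"]."""
    return [f"<{lang}>", f"<{region}>"]


def build_prompt(lang: str, region: str) -> List[str]:
    """Assemble an illustrative decoder prefix: start, language, region, task."""
    return [SOS_TOKEN, *language_tokens(lang, region), ASR_TASK_TOKEN]
```

For example, `build_prompt("zh", "CN")` gives `["<sos>", "<zh>", "<CN>", "<asr>"]`. Keeping language and region as separate tokens lets one language token pair with different region tokens, which is the kind of distinction the paragraph above describes.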

Dolphin requires FFmpeg to convert audio files to WAV format. If FFmpeg is not installed on your system, install it first:

```shell
# Ubuntu or Debian
sudo apt update && sudo apt install ffmpeg

# macOS
brew install ffmpeg

# Windows
choco install ffmpeg
```
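If you want to confirm FFmpeg is on your `PATH`, or convert files to WAV yourself before passing them to Dolphin, the standard library is enough. This is a sketch with hypothetical helper names; the mono, 16 kHz output settings are an assumption (a common ASR input format), not a documented Dolphin requirement.

```python
import shutil
import subprocess
from typing import List


def ffmpeg_available() -> bool:
    """Return True if an `ffmpeg` executable is found on PATH."""
    return shutil.which("ffmpeg") is not None


def build_convert_cmd(src: str, dst: str, sample_rate: int = 16000) -> List[str]:
    """Build an ffmpeg command converting `src` to a mono WAV file `dst`.

    The 16 kHz default is an assumption, not a value documented for Dolphin.
    """
    return [
        "ffmpeg", "-nostdin", "-y",  # no interactive input; overwrite dst
        "-i", src,
        "-ac", "1",                  # downmix to a single channel
        "-ar", str(sample_rate),     # resample to the target rate
        dst,                         # output format inferred from the .wav extension
    ]


def convert_to_wav(src: str, dst: str) -> None:
    """Run the conversion, raising if ffmpeg is missing or exits with an error."""
    if not ffmpeg_available():
        raise RuntimeError("ffmpeg not found on PATH; install it first")
    subprocess.run(build_convert_cmd(src, dst), check=True, capture_output=True)
```

Calling `convert_to_wav("audio.mp3", "audio.wav")` produces a WAV file that can then be passed to the commands below.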

You can install the latest version of Dolphin using the following command:

```shell
pip install -U dataoceanai-dolphin
```

Alternatively, install it from source:

```shell
pip install git+https://github.com/SpeechOceanTech/Dolphin.git
```
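Note that the pip distribution is named `dataoceanai-dolphin` while the Python module is imported as `dolphin`. To check which version pip installed, a small standard-library helper (hypothetical, not part of the package) can query the distribution metadata:

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(dist_name: str = "dataoceanai-dolphin") -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    v = installed_version()
    print(v if v else "dataoceanai-dolphin is not installed")
```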

Dolphin comes in four model sizes, two of which are currently publicly available. See the paper for details.

| Model | Parameters | Average WER | Publicly Available |
|---|---|---|---|
| base | 140 M | 33.3 | ✅ |
| small | 372 M | 25.2 | ✅ |
| medium | 910 M | 23.1 | |
| large | 1679 M | 21.6 | |
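The size/accuracy trade-off in the table can be made explicit by encoding it as data. The sketch below uses only the numbers from the table; `relative_reduction` and `pick_model` are hypothetical helpers, not part of the `dolphin` package.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelInfo:
    name: str
    params_m: int    # parameters, in millions
    avg_wer: float   # average WER as reported in the table
    available: bool  # publicly available?


MODELS: List[ModelInfo] = [
    ModelInfo("base", 140, 33.3, True),
    ModelInfo("small", 372, 25.2, True),
    ModelInfo("medium", 910, 23.1, False),
    ModelInfo("large", 1679, 21.6, False),
]


def relative_reduction(wer_from: float, wer_to: float) -> float:
    """Fractional WER reduction when moving from one model to another."""
    return (wer_from - wer_to) / wer_from


def pick_model(max_params_m: float) -> Optional[str]:
    """Lowest-WER publicly available model within a parameter budget, or None."""
    candidates = [m for m in MODELS if m.available and m.params_m <= max_params_m]
    if not candidates:
        return None
    return min(candidates, key=lambda m: m.avg_wer).name
```

For instance, `relative_reduction(33.3, 25.2)` is about 0.243: going from `base` to `small` cuts average WER by roughly 24% relative, at about 2.7 times the parameters. `pick_model` returns `small` for any budget of 372 M or more, since `medium` and `large` are not yet available.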

Dolphin supports 40 Eastern languages and 22 Chinese dialects. For a complete list of supported languages, see languages.md.

From the command line:

```shell
# Basic usage
dolphin audio.wav

# Download the model and specify the model path
dolphin audio.wav --model small --model_dir /data/models/dolphin/

# Specify language and region
dolphin audio.wav --model small --model_dir /data/models/dolphin/ --lang_sym "zh" --region_sym "CN"

# Pad speech to 30 seconds
dolphin audio.wav --model small --model_dir /data/models/dolphin/ --lang_sym "zh" --region_sym "CN" --padding_speech true
```
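To run the CLI over many files, one option is a small wrapper that builds the command from the flags shown above (`--model`, `--model_dir`, `--lang_sym`, `--region_sym`, `--padding_speech`) and calls it once per file. The helper names are hypothetical; flags are only added when set, so nothing is assumed about the CLI's defaults.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def build_dolphin_cmd(
    audio: str,
    model: Optional[str] = None,
    model_dir: Optional[str] = None,
    lang_sym: Optional[str] = None,
    region_sym: Optional[str] = None,
    padding_speech: bool = False,
) -> List[str]:
    """Build a `dolphin` CLI invocation using only the documented flags."""
    cmd = ["dolphin", audio]
    if model is not None:
        cmd += ["--model", model]
    if model_dir is not None:
        cmd += ["--model_dir", model_dir]
    if lang_sym is not None:
        cmd += ["--lang_sym", lang_sym]
    if region_sym is not None:
        cmd += ["--region_sym", region_sym]
    if padding_speech:
        cmd += ["--padding_speech", "true"]
    return cmd


def transcribe_dir(audio_dir: str, **kwargs) -> None:
    """Run the CLI once per .wav file in `audio_dir`, in sorted order."""
    for wav in sorted(Path(audio_dir).glob("*.wav")):
        subprocess.run(build_dolphin_cmd(str(wav), **kwargs), check=True)
```

For example, `transcribe_dir("clips/", model="small", model_dir="/data/models/dolphin/", lang_sym="zh", region_sym="CN")` mirrors the third command above for every WAV file in `clips/`.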

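From Python:

```python
import dolphin

# Load the audio file into a waveform
waveform = dolphin.load_audio("audio.wav")

# Load the "small" model from /data/models/dolphin onto the GPU ("cuda")
model = dolphin.load_model("small", "/data/models/dolphin", "cuda")

# Transcribe without specifying language or region
result = model(waveform)

# Specify the language
result = model(waveform, lang_sym="zh")

# Specify both language and region
result = model(waveform, lang_sym="zh", region_sym="CN")

print(result.text)
```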
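The same calls extend naturally to a batch of files. In this sketch (a hypothetical helper, not part of the package) the model and the audio loader are passed in, so you can hand it the objects from `dolphin.load_model` and `dolphin.load_audio`; `lang_sym` and `region_sym` are forwarded only when given, mirroring the three call forms shown above.

```python
from typing import Any, Callable, Dict, Iterable, Optional


def transcribe_all(
    paths: Iterable[str],
    model: Callable[..., Any],
    load_audio: Callable[[str], Any],
    lang_sym: Optional[str] = None,
    region_sym: Optional[str] = None,
) -> Dict[str, str]:
    """Transcribe each file and return a {path: text} mapping."""
    # Only forward the optional symbols that were actually supplied.
    kwargs: Dict[str, str] = {}
    if lang_sym is not None:
        kwargs["lang_sym"] = lang_sym
    if region_sym is not None:
        kwargs["region_sym"] = region_sym

    results: Dict[str, str] = {}
    for path in paths:
        waveform = load_audio(path)
        results[path] = model(waveform, **kwargs).text
    return results
```

With Dolphin installed, a call would look like `transcribe_all(["a.wav", "b.wav"], model, dolphin.load_audio, lang_sym="zh", region_sym="CN")`, where `model` comes from `dolphin.load_model` as above.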

Dolphin's code and model weights are released under the Apache 2.0 License.
