Product

Introducing Module Reachability: Focus on the Vulnerabilities That Matter

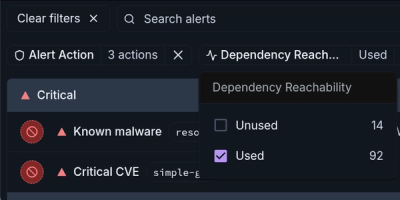

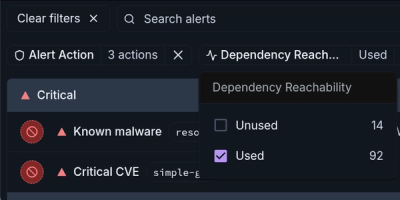

Module Reachability filters out unreachable CVEs so you can focus on vulnerabilities that actually matter to your application.

Foolbox is an adversarial attacks library that works natively with PyTorch, TensorFlow and JAX

.. image:: https://badge.fury.io/py/foolbox.svg
   :target: https://badge.fury.io/py/foolbox

.. image:: https://readthedocs.org/projects/foolbox/badge/?version=latest
   :target: https://foolbox.readthedocs.io/en/latest/

.. image:: https://img.shields.io/badge/code%20style-black-000000.svg
   :target: https://github.com/ambv/black

.. image:: https://joss.theoj.org/papers/10.21105/joss.02607/status.svg
   :target: https://doi.org/10.21105/joss.02607

`Foolbox <https://foolbox.jonasrauber.de>`_ is a Python library that lets you easily run adversarial attacks against machine learning models like deep neural networks. It is built on top of EagerPy and works natively with models in `PyTorch <https://pytorch.org>`_, `TensorFlow <https://www.tensorflow.org>`_, and `JAX <https://github.com/google/jax>`_.

Foolbox 3 has been rewritten from scratch using `EagerPy <https://github.com/jonasrauber/eagerpy>`_ instead of NumPy to achieve native performance on models developed in PyTorch, TensorFlow and JAX, all with one code base without code duplication.

- Getting started: our `guide <https://foolbox.jonasrauber.de>`_
- Tutorial: `Jupyter notebook <https://github.com/jonasrauber/foolbox-native-tutorial/blob/master/foolbox-native-tutorial.ipynb>`_ |colab|
- API reference: `ReadTheDocs <https://foolbox.readthedocs.io/en/stable/>`_

.. |colab| image:: https://colab.research.google.com/assets/colab-badge.svg
   :target: https://colab.research.google.com/github/jonasrauber/foolbox-native-tutorial/blob/master/foolbox-native-tutorial.ipynb

.. code-block:: bash

   pip install foolbox

Foolbox is tested with Python 3.8 and newer; however, it will most likely also work with versions 3.6 and 3.7. To use it with `PyTorch <https://pytorch.org>`_, `TensorFlow <https://www.tensorflow.org>`_, or `JAX <https://github.com/google/jax>`_, the respective framework needs to be installed separately. These frameworks are not declared as dependencies because not everyone wants to use, and thus install, all of them, and because some of these packages have different builds for different architectures and CUDA versions. All other essential dependencies are installed automatically.
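
Because the frameworks are installed separately, it can help to check which of them are present before choosing a model wrapper. The following is a minimal sketch using only the Python standard library; the helper ``available_frameworks`` is hypothetical and not part of Foolbox, and it assumes the usual import names ``torch``, ``tensorflow``, and ``jax``.

.. code-block:: python

   import importlib.util

   # Usual import names of the frameworks Foolbox can wrap (installed separately).
   FRAMEWORKS = ("torch", "tensorflow", "jax")


   def available_frameworks(candidates=FRAMEWORKS):
       """Return the subset of `candidates` whose top-level module can be found.

       Hypothetical helper, not part of Foolbox. `find_spec` only locates the
       module without executing it, so heavy frameworks are not imported.
       """
       return [name for name in candidates if importlib.util.find_spec(name) is not None]


   if __name__ == "__main__":
       print("Installed frameworks:", available_frameworks() or "none")

Locating the module spec instead of importing it keeps the check fast even when a large framework such as TensorFlow is installed.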

You can see the versions we currently use for testing in the `Compatibility section <#-compatibility>`_ below, but newer versions are generally expected to work.

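.. code-block:: python

   import foolbox as fb

   model = ...  # your PyTorch model (a torch.nn.Module), in eval mode
   fmodel = fb.PyTorchModel(model, bounds=(0, 1))

   # A small batch of sample images and labels shipped with Foolbox;
   # replace with your own data (input values must lie within `bounds`).
   images, labels = fb.utils.samples(fmodel, dataset="imagenet", batchsize=16)

   attack = fb.attacks.LinfPGD()
   epsilons = [0.0, 0.001, 0.01, 0.03, 0.1, 0.3, 0.5, 1.0]
   # Returns raw adversarials, adversarials clipped to each epsilon, and success flags.
   _, advs, success = attack(fmodel, images, labels, epsilons=epsilons)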
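
When ``epsilons`` is a list, the attack is evaluated for every epsilon in a single call. As we understand the Foolbox 3 API, ``success`` then has one row per epsilon and one column per input, with ``True`` wherever the attack found an adversarial example within that budget. Robust accuracy at a given epsilon is the fraction of inputs on which the attack failed. The sketch below illustrates this reduction in plain Python on nested lists; the helper ``robust_accuracy`` and the toy values are hypothetical, and with a real ``success`` tensor the same computation is one minus the mean over the last axis.

.. code-block:: python

   def robust_accuracy(success):
       """Per-epsilon robust accuracy from a success matrix.

       `success[i][j]` is True if the attack succeeded on input j at epsilon
       index i (layout assumed to match Foolbox's multi-epsilon output).
       """
       # True counts as 1 in sum(), so sum(row) is the number of successful attacks.
       return [1.0 - sum(row) / len(row) for row in success]


   # Toy example with 3 epsilons and 4 inputs (illustrative values, not real results).
   epsilons = [0.0, 0.01, 0.1]
   success = [
       [False, False, False, True],  # one input is already misclassified at eps=0
       [False, True, False, True],
       [True, True, True, True],
   ]
   for eps, acc in zip(epsilons, robust_accuracy(success)):
       print(f"eps={eps}: robust accuracy {acc:.2f}")

Including ``0.0`` in the epsilon list, as the example above does, makes the first row equal to the clean misclassification rate, so the first robust-accuracy value is simply the clean accuracy.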

More examples can be found in the `examples <./examples/>`_ folder, e.g. a full `ResNet-18 example <./examples/single_attack_pytorch_resnet18.py>`_.
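
For intuition about what ``fb.attacks.LinfPGD``, ``bounds``, and ``epsilons`` control, here is a minimal plain-Python sketch of one textbook projected gradient descent step under an L-infinity budget: move every input value by a fixed step in the direction of the gradient's sign, project back into the epsilon-ball around the original input, then clip into the model's input bounds. It is not Foolbox's implementation (which, among other things, computes the gradient itself and may start from a random point); here the gradient is simply passed in, and the names ``linf_pgd_step`` and ``step_size`` as well as the toy values are hypothetical.

.. code-block:: python

   def _sign(v):
       # Returns 1, 0, or -1 depending on the sign of v.
       return (v > 0) - (v < 0)


   def _clip(v, lo, hi):
       return max(lo, min(hi, v))


   def linf_pgd_step(x, x_orig, grad, epsilon, step_size, bounds=(0.0, 1.0)):
       """One L-infinity PGD step on flat lists of floats (hypothetical helper).

       1. Ascend the loss: x + step_size * sign(grad).
       2. Project onto the epsilon-ball: |x' - x_orig| <= epsilon per element.
       3. Clip into the valid input range given by `bounds`.
       """
       lo, hi = bounds
       out = []
       for xi, x0, gi in zip(x, x_orig, grad):
           xi = xi + step_size * _sign(gi)
           xi = _clip(xi, x0 - epsilon, x0 + epsilon)
           out.append(_clip(xi, lo, hi))
       return out


   x0 = [0.5, 0.98, 0.02]
   grad = [0.3, 1.2, -0.7]  # toy gradient of the loss w.r.t. the input
   x1 = linf_pgd_step(x0, x0, grad, epsilon=0.03, step_size=0.05)
   print(x1)

Repeating this step, each time starting from the previous output while projecting around the same ``x_orig``, gives the iterative attack; the ``bounds`` clip is why ``fb.PyTorchModel`` needs to know the valid input range.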

If you use Foolbox for your work, please cite our `JOSS paper on Foolbox Native (i.e., Foolbox 3.0) <https://doi.org/10.21105/joss.02607>`_ and our `ICML workshop paper on Foolbox <https://arxiv.org/abs/1707.04131>`_ using the following BibTeX entries:

.. code-block::

   @article{rauber2017foolboxnative,
     doi = {10.21105/joss.02607},
     url = {https://doi.org/10.21105/joss.02607},
     year = {2020},
     publisher = {The Open Journal},
     volume = {5},
     number = {53},
     pages = {2607},
     author = {Jonas Rauber and Roland Zimmermann and Matthias Bethge and Wieland Brendel},
     title = {Foolbox Native: Fast adversarial attacks to benchmark the robustness of machine learning models in PyTorch, TensorFlow, and JAX},
     journal = {Journal of Open Source Software}
   }

.. code-block::

   @inproceedings{rauber2017foolbox,
     title={Foolbox: A Python toolbox to benchmark the robustness of machine learning models},
     author={Rauber, Jonas and Brendel, Wieland and Bethge, Matthias},
     booktitle={Reliable Machine Learning in the Wild Workshop, 34th International Conference on Machine Learning},
     year={2017},
     url={http://arxiv.org/abs/1707.04131},
   }

We welcome contributions of all kinds; please have a look at our `development guidelines <https://foolbox.jonasrauber.de/guide/development.html>`_. In particular, you are invited to contribute `new adversarial attacks <https://foolbox.jonasrauber.de/guide/adding_attacks.html>`_. If you would like to help, you can also have a look at the issues that are marked with `contributions welcome <https://github.com/bethgelab/foolbox/issues?q=is%3Aopen+is%3Aissue+label%3A%22contributions+welcome%22>`_.

If you have a question or need help, feel free to open an issue on GitHub. Once GitHub Discussions becomes publicly available, we will switch to that.

Foolbox 3.0 is much faster than Foolbox 1 and 2. A basic `performance comparison`_ can be found in the performance folder.

We currently test with the following versions:

.. _performance comparison: performance/README.md

FAQs

Foolbox is an adversarial attacks library that works natively with PyTorch, TensorFlow and JAX

We found that foolbox demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 2 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Module Reachability filters out unreachable CVEs so you can focus on vulnerabilities that actually matter to your application.

Company News

Socket is bringing best-in-class reachability analysis into the platform — cutting false positives, accelerating triage, and cementing our place as the leader in software supply chain security.

Product

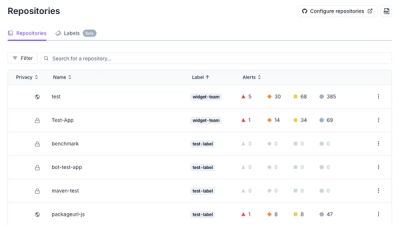

Socket is introducing a new way to organize repositories and apply repository-specific security policies.