
gha-utils CLI + reusable workflows

Thanks to this project, I am able to release Python packages multiple times a day with only 2 clicks.

This repository contains a collection of reusable workflows and its companion CLI called gha-utils (which stands for GitHub action workflows utilities).

It is designed for uv-based Python projects (and Awesome List projects as a bonus).

It takes care of:

- Linting: mypy, YAML, zsh, GitHub Actions, links, Awesome lists, secrets
- Standalone binaries for x86_64 & arm64
- Synchronization of uv.lock, .gitignore, .mailmap and Mermaid dependency graph
- Sphinx autodoc updates

Nothing is done behind your back. A PR is created every time a change is proposed, so you can inspect it, à la Dependabot.
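A Mermaid dependency graph is plain text that GitHub renders as a diagram. The sketch below shows one way such a graph could be produced from a mapping of package names to their direct dependencies. The input dict, the `node()` helper and the `flowchart LR` layout are assumptions for illustration, not the exact output of these workflows:

```python
import re


def node(name: str) -> str:
    """Mermaid node: a safe identifier plus the real package name as its label."""
    # Characters like "-" can clash with Mermaid's arrow syntax, so sanitize the ID.
    identifier = re.sub(r"[^0-9A-Za-z_]", "_", name)
    return f'{identifier}["{name}"]'


def to_mermaid(deps: dict[str, list[str]]) -> str:
    """Render a {package: [direct dependencies]} mapping as a Mermaid flowchart."""
    lines = ["flowchart LR"]
    # Sorting keeps the output stable, so the file only changes when dependencies do.
    for package in sorted(deps):
        for dependency in sorted(deps[package]):
            lines.append(f"    {node(package)} --> {node(dependency)}")
    return "\n".join(lines)


# Hypothetical dependency data, for illustration only.
print(to_mermaid({"gha-utils": ["click-extra", "packaging"], "click-extra": ["click"]}))
```

Stable ordering matters here: since every change is proposed as a PR, a deterministic output avoids noisy diffs.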

gha-utils CLI

Thanks to uv, you can install and run gha-utils in one command, without polluting your system:

```console
$ uvx gha-utils
Usage: gha-utils [OPTIONS] COMMAND [ARGS]...

Options:
  --time / --no-time        Measure and print elapsed execution time.  [default:
                            no-time]
  --color, --ansi / --no-color, --no-ansi
                            Strip out all colors and all ANSI codes from output.
                            [default: color]
  -C, --config CONFIG_PATH  Location of the configuration file. Supports glob
                            pattern of local path and remote URL.  [default:
                            ~/Library/Application Support/gha-utils/*.{toml,yaml,yml,json,ini,xml}]
  --show-params             Show all CLI parameters, their provenance, defaults
                            and value, then exit.
  --verbosity LEVEL         Either CRITICAL, ERROR, WARNING, INFO, DEBUG.
                            [default: WARNING]
  -v, --verbose             Increase the default WARNING verbosity by one level
                            for each additional repetition of the option.
                            [default: 0]
  --version                 Show the version and exit.
  -h, --help                Show this message and exit.

Commands:
  changelog     Maintain a Markdown-formatted changelog
  mailmap-sync  Update Git's .mailmap file with missing contributors
  metadata      Output project metadata
  test-plan     Run a test plan from a file against a binary
```

```console
$ uvx gha-utils --version
gha-utils, version 4.9.0
```

That's the best way to get started with gha-utils and experiment with it.
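The `-v, --verbose` option in the help screen above raises the default WARNING level by one step per repetition. A minimal sketch of that arithmetic, using the level list given for `--verbosity`; the `effective_level()` helper is hypothetical, and clamping at DEBUG is an assumption since the help text does not say what happens past the last level:

```python
LEVELS = ["CRITICAL", "ERROR", "WARNING", "INFO", "DEBUG"]


def effective_level(verbose_count: int, default: str = "WARNING") -> str:
    """Move `verbose_count` steps from the default level toward DEBUG."""
    index = LEVELS.index(default) + verbose_count
    # Assumption: extra -v flags beyond DEBUG have no further effect.
    return LEVELS[min(index, len(LEVELS) - 1)]


for flags in ("", "-v", "-vv", "-vvv"):
    print(f"gha-utils {flags}".rstrip(), "->", effective_level(flags.count("v")))
```

So `-v` would give INFO and `-vv` DEBUG under these assumptions.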

To ease deployment, standalone executables of gha-utils's latest version are available as direct downloads for several platforms and architectures:

ABI targets:

```console
$ file ./gha-utils-*
./gha-utils-linux-arm64.bin:   ELF 64-bit LSB pie executable, ARM aarch64, version 1 (SYSV), dynamically linked, interpreter /lib/ld-linux-aarch64.so.1, BuildID[sha1]=520bfc6f2bb21f48ad568e46752888236552b26a, for GNU/Linux 3.7.0, stripped
./gha-utils-linux-x64.bin:     ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=56ba24bccfa917e6ce9009223e4e83924f616d46, for GNU/Linux 3.2.0, stripped
./gha-utils-macos-arm64.bin:   Mach-O 64-bit executable arm64
./gha-utils-macos-x64.bin:     Mach-O 64-bit executable x86_64
./gha-utils-windows-arm64.exe: PE32+ executable (console) Aarch64, for MS Windows
./gha-utils-windows-x64.exe:   PE32+ executable (console) x86-64, for MS Windows
```
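The file names above follow a `gha-utils-<os>-<arch>.<ext>` pattern. A minimal sketch of picking the matching asset for the current machine, assuming that naming scheme holds for every release. The `binary_name()` helper and the mapping of `platform.machine()` spellings are assumptions, not something the project documents:

```python
from __future__ import annotations

import platform

OS_NAMES = {"Linux": "linux", "Darwin": "macos", "Windows": "windows"}
# platform.machine() spellings vary: "x86_64" on Linux/macOS, "AMD64" on Windows,
# "aarch64" on Linux, "arm64" on macOS, "ARM64" on Windows (compared lowercased).
ARCH_NAMES = {"x86_64": "x64", "amd64": "x64", "aarch64": "arm64", "arm64": "arm64"}


def binary_name(system: str | None = None, machine: str | None = None) -> str:
    """Guess the standalone gha-utils asset name; raises KeyError if unsupported."""
    system = system or platform.system()
    machine = (machine or platform.machine()).lower()
    os_name = OS_NAMES[system]
    arch = ARCH_NAMES[machine]
    extension = "exe" if os_name == "windows" else "bin"
    return f"gha-utils-{os_name}-{arch}.{extension}"


print(binary_name("Linux", "aarch64"))  # gha-utils-linux-arm64.bin
print(binary_name("Windows", "AMD64"))  # gha-utils-windows-x64.exe
```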

To play with the latest development version of gha-utils, you can install it directly from the repository:

```console
$ git clone https://github.com/kdeldycke/workflows
$ cd workflows
$ python -m pip install uv
$ uv venv
$ source .venv/bin/activate
$ uv sync
$ uv run -- gha-utils
```

This repository contains workflows to automate most of the boring tasks.

These workflows are mostly used for Python projects and their documentation, but not only. They're all reusable GitHub Actions workflows.

Reasons for a centralized workflow repository:

I don't want to copy-n-paste, keep in sync and maintain yet another CI/CD file at the root of each of my repositories.

So my policy is: move every repository-specific config into a pyproject.toml file, or hide the gory details in a reusable workflow.

.github/workflows/docs.yaml jobs

- Autofix typos
- Optimize images
- Keep .mailmap up to date
- Update dependency graph of Python projects
  - Requires a pyproject.toml file
- Build Sphinx-based documentation and publish it to GitHub Pages
  - Requires a pyproject.toml file
  - Requires a docs extra dependency group:

    ```toml
    [project.optional-dependencies]
    docs = [
        "furo == 2024.1.29",
        "myst-parser ~= 3.0.0",
        "sphinx >= 6",
        ...
    ]
    ```

  - Requires a docs/conf.py file
- Sync awesome projects from awesome-template repository
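The "Keep .mailmap up to date" job, like the `mailmap-sync` command, adds missing contributors to Git's .mailmap file. A minimal sketch of the core idea, assuming contributors arrive as `Name <email>` strings (for instance from `git log --format='%aN <%aE>'`) and that an identity counts as known as soon as its e-mail appears anywhere in the file. The `sync_mailmap()` helper is hypothetical and much simpler than a real implementation:

```python
import re

EMAIL = re.compile(r"<([^>]+)>")


def sync_mailmap(mailmap: str, contributors: list[str]) -> str:
    """Append contributors whose e-mail is not yet mentioned in the .mailmap content."""
    lines = mailmap.splitlines()
    # Collect every e-mail already present, ignoring comment lines.
    known = {
        email.lower()
        for line in lines
        if not line.lstrip().startswith("#")
        for email in EMAIL.findall(line)
    }
    for contributor in sorted(set(contributors)):
        match = EMAIL.search(contributor)
        if match and match.group(1).lower() not in known:
            lines.append(contributor)
            known.add(match.group(1).lower())
    return "\n".join(lines) + "\n"


# Hypothetical identities, for illustration only.
existing = "# Generated\nKevin <kevin@example.com> <old@example.com>\n"
print(sync_mailmap(existing, ["Kevin <old@example.com>", "Ada <ada@example.com>"]))
```

Running it twice with the same contributors leaves the file unchanged, which is what makes such a job safe to run on every push.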

Why all these requirements/*.txt files?

Let's look, for example, at the lint-yaml job from .github/workflows/lint.yaml. Here we only need the yamllint CLI. This CLI is distributed on PyPI. So before executing it, we could have simply run the following step:

```yaml
- name: Install yamllint
  run: |
    pip install yamllint
```

Instead, we install it via the requirements/yamllint.txt file.

Why? Because I want the version of yamllint to be pinned. By pinning it, I make the workflow stable, predictable and reproducible.

So why use a dedicated requirements file? Why not simply add the version inline, like this:

```yaml
- name: Install yamllint
  run: |
    pip install yamllint==1.35.1
```

That would indeed pin the version. But it requires the maintainer (me) to keep track of new releases and manually update the version string. That's a lot of work. And I'm lazy. So this should be automated.

To automate that, the only practical way I found was to rely on Dependabot. But Dependabot cannot update arbitrary versions in run: YAML blocks. For Python projects, it only supports requirements.txt and pyproject.toml files.

So to keep track of new versions of dependencies while keeping them stable, we've hard-coded all Python libraries and CLIs in the requirements/*.txt files, all with pinned versions.
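Pinning only pays off if every line is actually pinned. A minimal sketch of a check that flags requirement lines lacking an exact `==` pin. The `unpinned()` helper is hypothetical; it treats comments, blank lines and pip options such as `-r` as non-requirements, and uses a simplified regex rather than the full PEP 508 grammar (the `packaging` library would be the robust choice):

```python
import re

# Simplified: "name==version" or "name[extra]==version", spaces allowed around "==".
PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?\s*==\s*[^\s;#]+")


def unpinned(requirements: str) -> list[str]:
    """Return requirement lines that do not use an exact `==` pin."""
    offenders = []
    for raw in requirements.splitlines():
        line = raw.strip()
        # Skip blank lines, comments and pip options such as "-r other.txt".
        if not line or line.startswith(("#", "-")):
            continue
        if not PINNED.match(line):
            offenders.append(line)
    return offenders


print(unpinned("yamllint==1.35.1\nmypy>=1.0\n# comment\n-r extra.txt\n"))
# ['mypy>=1.0']
```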

And for cases where we need to install all dependencies in one go, a requirements.txt file at the root references all files from the requirements/ subfolder.
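pip follows such references through `-r` lines. A minimal sketch of how a root requirements.txt fans out into pinned versions, assuming included files only hold `-r` lines, comments and simple `name==version` pins (real requirement files can contain much more, and `--requirement` is not handled). The `collect_pins()` helper, the `requirements/mypy.txt` file and its pin are hypothetical:

```python
from __future__ import annotations

import tempfile
from pathlib import Path


def collect_pins(path: Path, seen: set[Path] | None = None) -> dict[str, str]:
    """Follow `-r` includes recursively and return {package: pinned version}."""
    seen = set() if seen is None else seen
    path = path.resolve()
    if path in seen:  # Guard against include cycles.
        return {}
    seen.add(path)
    pins: dict[str, str] = {}
    for raw in path.read_text().splitlines():
        line = raw.split("#", 1)[0].strip()  # Drop inline and full-line comments.
        if line.startswith("-r "):
            # Like pip, resolve a nested path relative to the file that includes it.
            pins.update(collect_pins(path.parent / line[3:].strip(), seen))
        elif "==" in line:
            name, version = (part.strip() for part in line.split("==", 1))
            pins[name] = version
    return pins


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "requirements").mkdir()
    (root / "requirements" / "yamllint.txt").write_text("yamllint==1.35.1\n")
    # Hypothetical second file and pin, for illustration only.
    (root / "requirements" / "mypy.txt").write_text("mypy==1.11.2  # type checker\n")
    (root / "requirements.txt").write_text(
        "-r requirements/yamllint.txt\n-r requirements/mypy.txt\n"
    )
    pins = collect_pins(root / "requirements.txt")

print(pins)  # {'yamllint': '1.35.1', 'mypy': '1.11.2'}
```

Keeping one file per tool means Dependabot can open a focused PR per dependency, while the root file still installs everything at once.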

This repository updates itself via GitHub Actions. In particular, it updates its own YAML files in .github/workflows. That's forbidden by default, so we need extra permissions.

Usually, to grant special permissions to some jobs, you use the permissions parameter in workflow files. It looks like this:

```yaml
on: (...)

jobs:
  my-job:
    runs-on: ubuntu-latest
    permissions:
      contents: write
      pull-requests: write
    steps: (...)
```

But the contents: write permission doesn't allow write access to the workflow files in the .github subfolder. There is actions: write, but it only covers workflow runs, not their YAML source files. Even permissions: write-all doesn't work. So you cannot use the permissions parameter to allow a repository's workflow to update its own workflow files.

You will always end up with this kind of error:

```console
! [remote rejected] branch_xxx -> branch_xxx (refusing to allow a GitHub App to create or update workflow `.github/workflows/my_workflow.yaml` without `workflows` permission)
error: failed to push some refs to 'https://github.com/kdeldycke/my-repo'
```

> [!NOTE]
> That's also why the Settings > Actions > General > Workflow permissions parameter on your repository has no effect on this issue, even with the Read and write permissions set.

To bypass the limitation, we rely on a custom access token. By convention, we call it WORKFLOW_UPDATE_GITHUB_PAT. It will be used, in place of the default secrets.GITHUB_TOKEN, in steps in which we need to change the workflow YAML files.
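The deciding factor is whether a push touches .github/workflows/. A minimal sketch of that decision, assuming the list of changed paths is already known (for instance from `git diff --name-only`). Both helpers are hypothetical, and the actual workflows may simply use the PAT in every step that can rewrite workflow files rather than deciding per push:

```python
from pathlib import PurePosixPath

WORKFLOW_DIR = PurePosixPath(".github/workflows")


def needs_workflow_pat(changed_paths: list[str]) -> bool:
    """True if any changed file lives under .github/workflows/."""
    return any(WORKFLOW_DIR in PurePosixPath(p).parents for p in changed_paths)


def token_secret_name(changed_paths: list[str]) -> str:
    # The default GITHUB_TOKEN is refused for workflow files, hence the custom PAT.
    if needs_workflow_pat(changed_paths):
        return "WORKFLOW_UPDATE_GITHUB_PAT"
    return "GITHUB_TOKEN"


print(token_secret_name(["README.md", ".github/workflows/lint.yaml"]))  # WORKFLOW_UPDATE_GITHUB_PAT
print(token_secret_name(["pyproject.toml"]))  # GITHUB_TOKEN
```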

To create this custom WORKFLOW_UPDATE_GITHUB_PAT:

1. Go to Settings > Developer Settings > Personal Access Tokens > Fine-grained tokens
2. Click the Generate new token button
3. Name the token something like workflow-self-update to make your intention clear
4. Choose Only select repositories and list the repositories that need to update their workflow YAML files
5. In the Repository permissions drop-down, set:
   - Contents: Access: **Read and Write**
   - Metadata (mandatory): Access: **Read-only**
   - Pull Requests: Access: **Read and Write**
   - Workflows: Access: **Read and Write**

   > [!NOTE]
   > This is the only place where I can have control over the Workflows permission, which is not supported by the permissions: parameter in YAML files.

6. Generate the token and copy the resulting github_pat_XXXX secret token
7. Go to your repository's Settings > Security > Secrets and variables > Actions > Secrets > Repository secrets and click New repository secret
8. Name the secret WORKFLOW_UPDATE_GITHUB_PAT and paste the github_pat_XXXX token in the Secret field

Now re-run your actions and they should be able to update the workflow files in the .github folder without the refusing to allow a GitHub App to create or update workflow error.

It turns out Release Engineering is a full-time job, and full of edge-cases.

Things have improved a lot in the Python ecosystem with uv. But there are still a lot of manual steps to do to release.

So I made up this release.yaml workflow, which automates those steps for uv-based projects configured through pyproject.toml.

A detailed changelog is available.

Check these projects to get real-life examples of usage and inspiration:

- Awesome Falsehood - Falsehoods Programmers Believe in.
- Awesome Engineering Team Management - How to transition from software development to engineering management.
- Awesome IAM - Identity and Access Management knowledge for cloud platforms.
- Awesome Billing - Billing & Payments knowledge for cloud platforms.
- Meta Package Manager - A unifying CLI for multiple package managers.
- Mail Deduplicate - A CLI to deduplicate similar emails.
- dotfiles - macOS dotfiles for Python developers.
- Click Extra - Extra colorization and configuration loading for Click.
- Wiki bot - A bot which provides features from Wikipedia like summary, title searches, location API etc.
- workflows - Itself. Eat your own dog-food.
- Stock Analysis - Simple to use interfaces for basic technical analysis of stocks.
- GeneticTabler - Time Table Scheduler using Genetic Algorithms.
- Excel Write - Optimised way to write in Excel files.

Feel free to send a PR to add your project to this list if you are relying on these scripts.

All steps of the release process and version management are automated in the changelog.yaml and release.yaml workflows.

All there's left to do is to:

- check the prepare-release PR and its changes,
- click the Ready for review button,
- click the Rebase and merge button,
- let the workflows set the main branch back into a development state.

⚙️ CLI helpers for GitHub Actions + reuseable workflows

We found that gha-utils demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

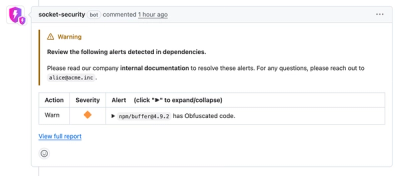

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.