Research

/Security News

Malicious npm Packages Target WhatsApp Developers with Remote Kill Switch

Two npm packages masquerading as WhatsApp developer libraries include a kill switch that deletes all files if the phone number isn’t whitelisted.

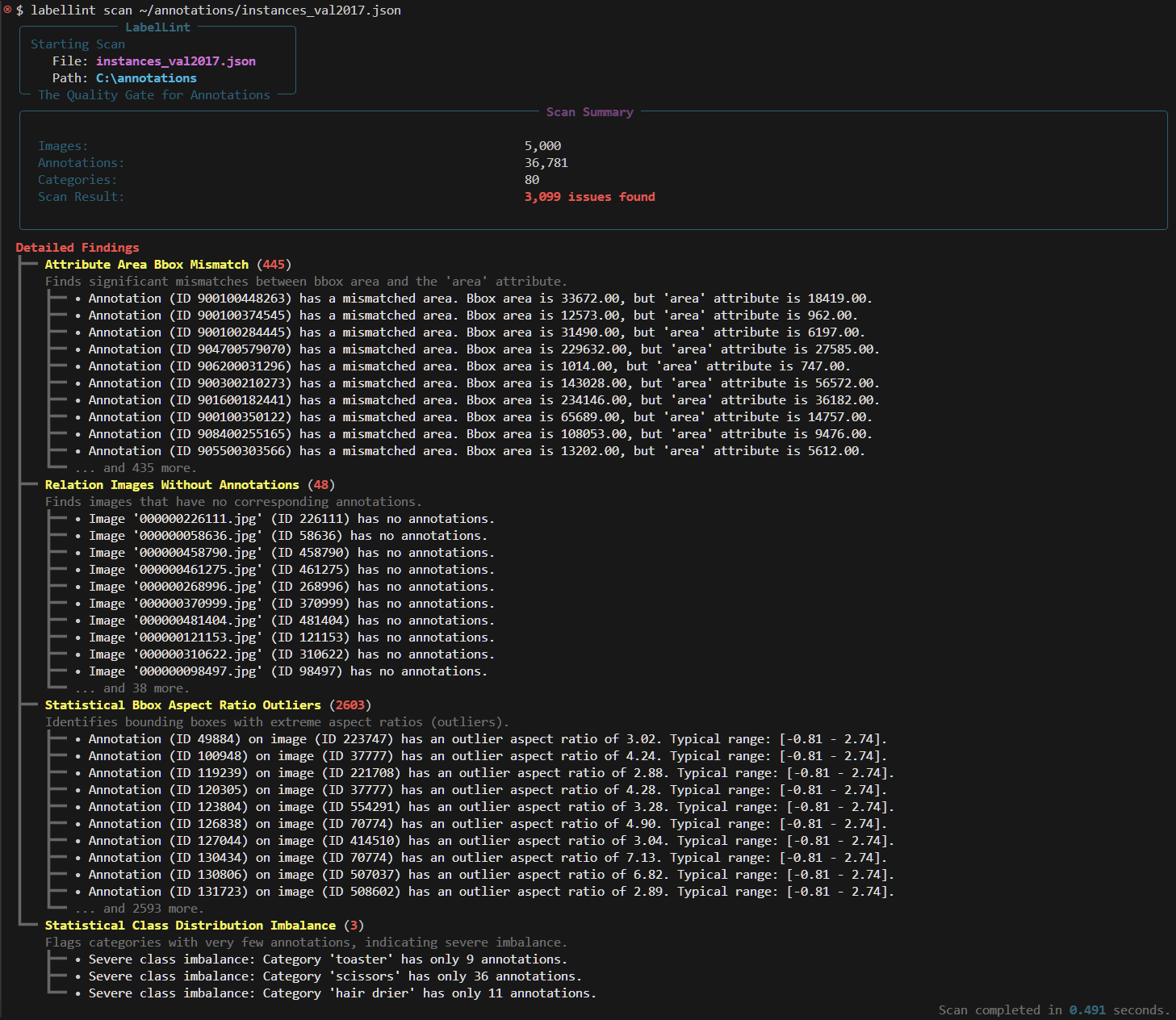

A high-precision CLI for finding errors in your computer vision annotation files before you waste GPU hours.

Stop wasting GPU hours. Lint your labels first.

A high-precision, zero-BS command-line tool that finds thousands of logical inconsistencies and statistical anomalies in your computer vision annotation files before they silently kill your model's performance.

You've done everything right. You've architected a state-of-the-art model, curated a massive dataset, and launched a multi-day training job on a multi-GPU node. The cloud provider bill is climbing into the thousands.

You wait 48 hours. The result is 45% mAP.

Your first instinct is to blame the code. You spend the next week in a demoralizing cycle of debugging the model, tweaking the optimizer, and re-tuning the learning rate. You are looking in the wrong place.

The problem isn't your model. It's your data. Buried deep within your annotations.json are thousands of tiny, invisible errors—the "data-centric bugs" that no amount of code-centric debugging can fix:

"Car" while everyone else labeled "car", fracturing your most important class and poisoning your class distribution.width=0. These are landmines for your data loader, causing silent failures or NaN losses that are impossible to trace.KeyError deep inside your training loop.These errors are the silent killers of MLOps. They are invisible to the naked eye and catastrophic to the training process. labellint is the quality gate that makes them visible.

labellint is a standalone Python CLI tool. It requires Python 3.8+ and has no heavy dependencies. Get it with pip.

```bash
# It is recommended to install labellint within your project's virtual environment
pip install labellint
```

Verify the installation. You should see the help menu.

```bash
labellint --help
```

That's it. You're ready to scan.

The workflow is designed to be brutally simple. Point the scan command at your annotation file.

This is your first-pass diagnostic. It provides a rich, color-coded summary directly in your terminal, truncated for readability.

```bash
labellint scan /path/to/your/coco_annotations.json
```

The output will immediately tell you the scale of your data quality problem.

When the interactive scan reveals thousands of issues, you need the full, unfiltered list. Use the --out flag to dump a complete report in machine-readable JSON. The summary will still be printed to the terminal for context.

```bash
labellint scan /path/to/your/coco_annotations.json --out detailed_report.json
```

You now have a detailed_report.json file containing every single annotation ID, image ID, and error message, ready to be parsed by your data cleaning scripts or inspected in your editor.

labellint is built for automation. The scan command exits with a status code of 0 if no issues are found and 1 if any issues are found. This allows you to use it as a non-negotiable quality gate in your training scripts or CI/CD pipelines.

Prevent a bad dataset from ever reaching a GPU:

```bash
# In your training script or Jenkins/GitHub Actions workflow
echo "--- Running Data Quality Check ---"

# Gate on labellint's exit code: 0 = no issues found, 1 = issues found.
if labellint scan ./data/train.json; then
  echo "✅ Data quality check passed. Starting training job..."
  python train.py --data ./data/
else
  echo "❌ Data quality check failed. Aborting training job."
  exit 1
fi
```

This simple command prevents thousands of dollars in wasted compute by ensuring that only validated, high-quality data enters the training pipeline.
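If your training entry point is a Python script rather than a shell script, the same gate can be expressed with the standard library's `subprocess` module. This is a minimal sketch that relies only on the exit-code behavior described above (0 means no issues); the function name `passes_quality_gate` and its `scanner` parameter are hypothetical.

```python
import subprocess
from typing import Sequence


def passes_quality_gate(
    annotation_path: str,
    scanner: Sequence[str] = ("labellint", "scan"),
) -> bool:
    """Run the scanner on annotation_path; True only if it exits with status 0."""
    try:
        # The scanner's summary is streamed straight to this process's terminal.
        result = subprocess.run([*scanner, annotation_path], check=False)
    except FileNotFoundError:
        # The scanner is not on PATH: fail closed rather than train on unchecked data.
        return False
    return result.returncode == 0
```

Call `passes_quality_gate("./data/train.json")` before launching training and abort if it returns False. The `scanner` parameter exists only so the gate can be exercised without labellint installed; any non-zero exit, not just 1, is treated as a failure.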

labellint is not a blunt instrument. It is a suite of precision tools, each designed to find a specific category of data error.

Schema & Relational Integrity: This is the foundation. Does your data respect its own structure?
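As an illustration of what a relational check looks for, the sketch below flags annotations whose `image_id` or `category_id` points at a record that does not exist, which is exactly the kind of reference that later surfaces as a KeyError in a data loader. It assumes COCO's `images` / `categories` / `annotations` layout; the function name and message format are hypothetical, not labellint's.

```python
from __future__ import annotations


def find_dangling_references(coco: dict) -> list[str]:
    """Report annotations whose image_id or category_id points at nothing."""
    image_ids = {image["id"] for image in coco.get("images", [])}
    category_ids = {category["id"] for category in coco.get("categories", [])}
    problems = []
    for ann in coco.get("annotations", []):
        ann_id = ann.get("id")
        if ann.get("image_id") not in image_ids:
            problems.append(f"annotation {ann_id}: unknown image_id {ann.get('image_id')}")
        if ann.get("category_id") not in category_ids:
            problems.append(f"annotation {ann_id}: unknown category_id {ann.get('category_id')}")
    return problems
```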

Geometric & Attribute Validation: This checks the physical properties of your labels.

Checks that the bbox area (w*h) is consistent with the area field for non-polygonal labels, catching errors from bad data conversion.

Logical Consistency: This catches the "human errors" that plague team-based annotation projects.

"Car" vs. "car" problem that can silently halve the number of examples for your most important class.Statistical Anomaly Detection: This is where labellint goes beyond simple errors to find high-risk patterns.

For a complete, up-to-date list of all rules and their descriptions, run labellint rules.

For a deep dive into the logic and implementation of each rule, please see the Project Wiki.
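The geometric rules are easy to state precisely. Here is a minimal sketch of two of them, a zero-width or zero-height box and the bbox area (w*h) vs. area field comparison for non-polygonal labels, assuming COCO's `[x, y, w, h]` bbox convention. The function name and the `rel_tol` default are hypothetical; the Project Wiki remains the authority on how labellint actually implements these checks.

```python
from __future__ import annotations

import math


def find_geometry_errors(coco: dict, rel_tol: float = 0.01) -> list[str]:
    """Flag degenerate boxes and box-only labels whose area disagrees with w*h."""
    problems = []
    for ann in coco.get("annotations", []):
        ann_id = ann.get("id")
        _, _, w, h = ann["bbox"]  # COCO bbox: [x, y, width, height]
        if w <= 0 or h <= 0:
            problems.append(f"annotation {ann_id}: degenerate bbox (w={w}, h={h})")
            continue
        # Only box-only labels are compared: for polygons or RLE masks, COCO's
        # area is the mask area, which is legitimately smaller than w*h.
        has_mask = bool(ann.get("segmentation"))
        if not has_mask and "area" in ann:
            if not math.isclose(ann["area"], w * h, rel_tol=rel_tol):
                problems.append(f"annotation {ann_id}: area {ann['area']} != bbox w*h {w * h}")
    return problems
```

A relative tolerance is used instead of exact equality because converters often round coordinates, which shifts w*h slightly without indicating a real error.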

labellint Philosophy

labellint is a deterministic, rules-based engine. It does not use AI to "guess" at errors. Its findings are repeatable, verifiable, and precise.

labellint can analyze millions of annotations in seconds, so it can be integrated into any workflow without becoming a bottleneck.

This is a new tool solving an old problem. Bug reports, feature requests, and pull requests are welcome. The project is built on clean code, and that standard is enforced by a strict CI pipeline.

Run pip install -e ".[dev]" to install the project in editable mode with all testing and linting dependencies. Before opening a pull request, make sure the test suite (pytest) and the linter (ruff check .) both pass. We maintain 99% or above test coverage.

Project updates and version history are documented in the CHANGELog.md file.

