Security News

Package Maintainers Call for Improvements to GitHub’s New npm Security Plan

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

llama-index-llms-mistralai


Install the required packages with pip. In a Jupyter notebook, use the `%pip` magic instead of plain `pip`:

```bash
pip install llama-index-llms-mistralai llama-index
```

To use the MistralAI model, create an instance and provide your API key:

```python
from llama_index.llms.mistralai import MistralAI

llm = MistralAI(api_key="<replace-with-your-key>")
```
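Some examples construct `MistralAI` without an `api_key` argument; the integration is expected to fall back to the `MISTRAL_API_KEY` environment variable in that case. The sketch below, which assumes you want a clear error when the key is missing rather than a failed API call later, reads the key from the environment. `get_mistral_api_key` is a hypothetical helper, not part of llama-index.

```python
import os


def get_mistral_api_key(env_var: str = "MISTRAL_API_KEY") -> str:
    """Return the API key stored in `env_var`, raising if it is unset or blank.

    Hypothetical helper for illustration; it is not part of llama-index.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"{env_var} is not set; export it or pass api_key=... explicitly"
        )
    return key


# With llama-index installed, you could then write:
#   llm = MistralAI(api_key=get_mistral_api_key())
```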

To generate a text completion for a prompt, use the `complete` method:

```python
resp = llm.complete("Paul Graham is ")
print(resp)
```

You can also chat with the model using a list of messages. Here’s an example:

```python
from llama_index.core.llms import ChatMessage

messages = [
    ChatMessage(role="system", content="You are CEO of MistralAI."),
    ChatMessage(role="user", content="Tell me the story about La plateforme"),
]

# Reuse the `llm` instance created above so that its API key is applied
resp = llm.chat(messages)
print(resp)
```

To make sampling more reproducible, pass the `random_seed` parameter when creating the model:

```python
resp = MistralAI(api_key="<replace-with-your-key>", random_seed=42).chat(messages)
print(resp)
```

You can stream responses using the `stream_complete` method:

```python
resp = llm.stream_complete("Paul Graham is ")
for r in resp:
    print(r.delta, end="")
```
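Each streamed item carries the newly generated fragment in `delta`, so printing the deltas shows the answer as it arrives; if you also need the complete text afterwards, collect them. The sketch below runs offline: `FakeChunk` and `fake_stream` are hypothetical stand-ins for the objects the library yields, and treating a missing `delta` as an empty string is a defensive assumption.

```python
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class FakeChunk:
    """Offline stand-in for one streamed chunk; only `delta` is modeled."""

    delta: Optional[str]


def fake_stream(pieces: list[Optional[str]]) -> Iterator[FakeChunk]:
    """Hypothetical generator that mimics llm.stream_complete(...) without a network call."""
    for piece in pieces:
        yield FakeChunk(delta=piece)


def collect_stream(chunks: Iterator[FakeChunk]) -> str:
    """Print each delta as it arrives and return the full concatenated text."""
    parts: list[str] = []
    for chunk in chunks:
        piece = chunk.delta or ""  # treat a missing delta as an empty fragment
        print(piece, end="", flush=True)
        parts.append(piece)
    print()  # end the streamed line
    return "".join(parts)


text = collect_stream(fake_stream(["Paul Graham ", "is an ", "essayist."]))
```

Because `collect_stream` only reads `.delta`, the same function should work on the iterators returned by `llm.stream_complete(...)` and `llm.stream_chat(...)`.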

To stream chat responses, use the `stream_chat` method:

```python
messages = [
    ChatMessage(role="system", content="You are CEO of MistralAI."),
    ChatMessage(role="user", content="Tell me the story about La plateforme"),
]

resp = llm.stream_chat(messages)
for r in resp:
    print(r.delta, end="")
```

To use a specific model, pass its name through the `model` parameter:

```python
llm = MistralAI(api_key="<replace-with-your-key>", model="mistral-medium")
resp = llm.stream_complete("Paul Graham is ")
for r in resp:
    print(r.delta, end="")
```

The model can also call Python functions that you expose as tools. Here’s an example:

```python
from llama_index.llms.mistralai import MistralAI
from llama_index.core.tools import FunctionTool


def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result."""
    return a * b


def mystery(a: int, b: int) -> int:
    """Mystery function on two integers."""
    return a * b + a + b


# Wrap plain functions as tools; the docstrings describe them to the model
mystery_tool = FunctionTool.from_defaults(fn=mystery)
multiply_tool = FunctionTool.from_defaults(fn=multiply)

llm = MistralAI(api_key="<replace-with-your-key>", model="mistral-large-latest")

# The model picks a tool and its arguments, and the chosen tool is then executed
response = llm.predict_and_call(
    [mystery_tool, multiply_tool],
    user_msg="What happens if I run the mystery function on 5 and 7",
)
print(str(response))
```
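With `predict_and_call`, the model chooses a tool and its arguments, and the chosen tool is then run to produce the response. The offline sketch below simulates only the execution half so you can check what a correct answer looks like: the model's decision is hard-coded as a hypothetical `{"name": ..., "arguments": ...}` dict (not Mistral's actual wire format), and `TOOLS` and `dispatch` are hypothetical names. For the prompt above, `mystery(5, 7)` is 5 × 7 + 5 + 7 = 47.

```python
from typing import Callable


def multiply(a: int, b: int) -> int:
    """Multiply two integers and return the result."""
    return a * b


def mystery(a: int, b: int) -> int:
    """Mystery function on two integers."""
    return a * b + a + b


# Hypothetical registry mapping each tool's function name to the function itself
TOOLS: dict[str, Callable[..., int]] = {fn.__name__: fn for fn in (multiply, mystery)}


def dispatch(tool_call: dict) -> int:
    """Execute the tool named in a simplified, hypothetical tool-call dict."""
    fn = TOOLS[tool_call["name"]]
    return fn(**tool_call["arguments"])


# Simulated model choice for "What happens if I run the mystery function on 5 and 7"
simulated_call = {"name": "mystery", "arguments": {"a": 5, "b": 7}}
print(dispatch(simulated_call))  # prints 47
```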

For more examples, see the LlamaIndex MistralAI notebook: https://docs.llamaindex.ai/en/stable/examples/llm/mistralai/

FAQs

llama-index LLMs MistralAI integration

We found that llama-index-llms-mistralai has a healthy release cadence and project activity: the last version was released less than a year ago. It has one open-source maintainer.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

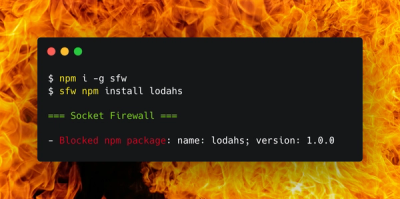

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.