Product

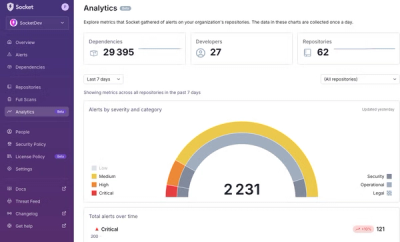

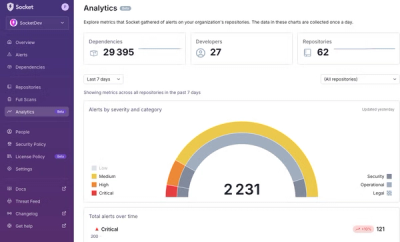

Introducing Historical Analytics – Now in Beta

We’re excited to announce a powerful new capability in Socket: historical data and enhanced analytics.

llama-index-packs-infer-retrieve-rerank


This is our implementation of the paper "In-Context Learning for Extreme Multi-Label Classification" by Oosterlinck et al.

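The paper proposes "infer-retrieve-rerank", a simple paradigm that uses frozen LLM and retriever models to perform "extreme" multi-label classification, where the label space is huge. It has three stages:

1. Infer: given an input, an LLM predicts an initial set of candidate labels.
2. Retrieve: for each prediction, a retriever looks up the closest actual labels in the label set.
3. Rerank: an LLM reranks the retrieved labels against the input.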

All of these can be implemented as LlamaIndex abstractions.
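To make the three stages concrete, here is a minimal, dependency-free sketch of the infer-retrieve-rerank control flow. It is not the pack's implementation: the hypothetical `infer` and `rerank` functions are keyword-based stand-ins for the two LLM calls, and `retrieve` uses Jaccard token overlap in place of an embedding retriever. Only the shape of the pipeline is meant to carry over.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased word set used by the toy scoring functions."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Token-set overlap in [0, 1]; a stand-in for embedding similarity."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def infer(text: str) -> list[str]:
    """Stand-in for the LLM 'infer' step: propose free-form label guesses.

    Here every comma- or 'and'-separated phrase of the input becomes a guess.
    """
    parts = re.split(r",| and ", text.lower())
    return [p.strip() for p in parts if p.strip()]


def retrieve(guess: str, labels: list[str], k: int) -> list[str]:
    """Map one free-form guess to the k most similar real labels (score > 0)."""
    g = tokens(guess)
    scored = [(jaccard(g, tokens(label)), label) for label in labels]
    scored = [s for s in scored if s[0] > 0]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [label for _, label in scored[:k]]


def rerank(text: str, candidates: list[str], top_n: int) -> list[str]:
    """Stand-in for the LLM 'rerank' step: order candidates by overlap with the input."""
    t = tokens(text)
    ranked = sorted(candidates, key=lambda c: jaccard(tokens(c), t), reverse=True)
    return ranked[:top_n]


def infer_retrieve_rerank(
    text: str, labels: list[str], retrieve_k: int = 2, top_n: int = 3
) -> list[str]:
    """Run the three stages in order and return at most top_n labels."""
    candidates: list[str] = []
    for guess in infer(text):
        for label in retrieve(guess, labels, retrieve_k):
            if label not in candidates:  # de-duplicate, keep first-seen order
                candidates.append(label)
    return rerank(text, candidates, top_n)


if __name__ == "__main__":
    labels = ["headache", "nausea", "skin rash", "liver injury", "fever"]
    print(infer_retrieve_rerank("Severe rash on skin, nausea and high fever", labels))
```

The point of the retrieve stage is that the first guess, "severe rash on skin", is not itself a valid label; retrieval snaps it onto "skin rash", so the final output only ever contains strings from the label set.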

A full notebook guide can be found here.

You can download LlamaPacks directly using `llamaindex-cli`, which comes installed with the `llama-index` Python package:

```bash
llamaindex-cli download-llamapack InferRetrieveRerankPack --download-dir ./infer_retrieve_rerank_pack
```

You can then inspect the files at ./infer_retrieve_rerank_pack and use them as a template for your own project!

Alternatively, you can download the pack from Python into a ./infer_retrieve_rerank_pack directory:

```python
from llama_index.core.llama_pack import download_llama_pack

# download and install dependencies
InferRetrieveRerankPack = download_llama_pack(
    "InferRetrieveRerankPack", "./infer_retrieve_rerank_pack"
)
```

From here, you can use the pack, or inspect and modify the pack in ./infer_retrieve_rerank_pack.

Then, you can set up the pack like so:

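```python
# `labels` (list of all label strings) and `llm` (a LlamaIndex LLM)
# are assumed to be defined beforehand.

# create the pack
pack = InferRetrieveRerankPack(
    labels,  # list of all label strings
    llm=llm,
    pred_context="<pred_context>",  # placeholder: replace with your own context
    reranker_top_n=3,
    verbose=True,
)
```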
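The constructor above expects `labels` to hold the full label space. One way to assemble it, together with the `samples` list used by `run()`, is sketched below; the `records` data and the `collect_labels` helper are hypothetical, and the only assumption about shapes is the one visible in this README (a list of label strings, and samples that are dicts with a "text" key).

```python
# Hypothetical labeled records; real data would come from your own dataset.
records = [
    {"text": "Severe rash on skin, nausea and high fever",
     "labels": ["skin rash", "nausea", "fever"]},
    {"text": "Patient developed a headache", "labels": ["headache"]},
    {"text": "Raised enzymes consistent with liver injury; nausea",
     "labels": ["liver injury", "nausea"]},
]


def collect_labels(records: list[dict]) -> list[str]:
    """Return the de-duplicated label space, sorted for reproducibility."""
    return sorted({label for record in records for label in record["labels"]})


labels = collect_labels(records)
# each sample only needs the "text" field that pack.run() reads
samples = [{"text": record["text"]} for record in records]
```

Collecting labels only from labeled records misses any label that never appears in them; when a complete label vocabulary is available, passing it directly is the safer choice.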

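The `run()` function runs predictions over a list of input strings:

```python
# `samples` is assumed to be a list of dicts, each with a "text" field
pred_reactions = pack.run(inputs=[s["text"] for s in samples])
```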
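To score the predictions, here is a sketch that assumes `pred_reactions` holds one ranked list of label strings per input and that each sample carries a hypothetical "labels" field with its gold labels; check the pack source for the actual return type. Mean recall@k is a generic metric picked for illustration, not necessarily the one the paper or the notebook reports.

```python
def recall_at_k(predicted: list[str], gold: list[str], k: int) -> float:
    """Fraction of gold labels found among the first k predictions."""
    if not gold:
        return 0.0
    return len(set(predicted[:k]) & set(gold)) / len(set(gold))


def mean_recall_at_k(
    all_predicted: list[list[str]], all_gold: list[list[str]], k: int
) -> float:
    """Average recall@k over inputs; the two lists are aligned by index."""
    if len(all_predicted) != len(all_gold):
        raise ValueError("predictions and gold labels must be aligned")
    if not all_gold:
        return 0.0
    scores = [recall_at_k(p, g, k) for p, g in zip(all_predicted, all_gold)]
    return sum(scores) / len(scores)


# Usage with the objects above (hypothetical "labels" field on each sample):
# score = mean_recall_at_k(pred_reactions, [s["labels"] for s in samples], k=3)
```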

You can also use the modules individually:

```python
# the LLM (e.g. to call llm.complete() directly)
llm = pack.llm
# the retriever over the label set
label_retriever = pack.label_retriever
```


Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

We’re excited to announce a powerful new capability in Socket: historical data and enhanced analytics.

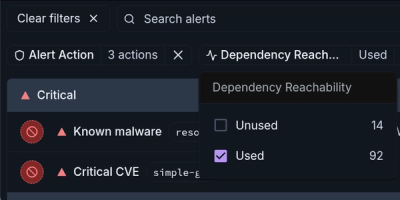

Product

Module Reachability filters out unreachable CVEs so you can focus on vulnerabilities that actually matter to your application.

Company News

Socket is bringing best-in-class reachability analysis into the platform — cutting false positives, accelerating triage, and cementing our place as the leader in software supply chain security.