Introduction

MEALPY is one of the largest Python libraries of cutting-edge meta-heuristic algorithms (nature-inspired algorithms, black-box optimization, global search optimizers, iterative learning algorithms, continuous optimization, derivative-free optimization, gradient-free optimization, zeroth-order optimization, stochastic search optimization, random search optimization). These algorithms belong to the class of population-based meta-heuristic algorithms (PMA), which are among the most popular methods in approximate optimization.

- Free software: GNU General Public License (GPL) V3 license

- Total algorithms: 215 (190 official (original, hybrid, variants), 25 developed)

- Documentation: https://mealpy.readthedocs.io/en/latest/

- Python versions: >= 3.7

- Dependencies: numpy, scipy, pandas, matplotlib

Citation Request

Please include these citations if you plan to use this library:

```bibtex
@article{van2023mealpy,
  title={MEALPY: An open-source library for latest meta-heuristic algorithms in Python},
  author={Van Thieu, Nguyen and Mirjalili, Seyedali},
  journal={Journal of Systems Architecture},
  year={2023},
  publisher={Elsevier},
  doi={10.1016/j.sysarc.2023.102871}
}

@article{van2023groundwater,
  title={Groundwater level modeling using Augmented Artificial Ecosystem Optimization},
  author={Van Thieu, Nguyen and Barma, Surajit Deb and Van Lam, To and Kisi, Ozgur and Mahesha, Amai},
  journal={Journal of Hydrology},
  volume={617},
  pages={129034},
  year={2023},
  publisher={Elsevier},
  doi={10.1016/j.jhydrol.2022.129034}
}

@article{ahmed2021comprehensive,
  title={A comprehensive comparison of recent developed meta-heuristic algorithms for streamflow time series forecasting problem},
  author={Ahmed, Ali Najah and Van Lam, To and Hung, Nguyen Duy and Van Thieu, Nguyen and Kisi, Ozgur and El-Shafie, Ahmed},
  journal={Applied Soft Computing},
  volume={105},
  pages={107282},
  year={2021},
  publisher={Elsevier},
  doi={10.1016/j.asoc.2021.107282}
}
```

Usage

Goals

Our goals are to implement all classical as well as state-of-the-art nature-inspired algorithms, to provide a simple interface that helps researchers access optimization algorithms as quickly as possible, and to share knowledge of the optimization field with everyone free of charge. What you can do with MEALPY:

- Analyse the parameters of meta-heuristic algorithms.

- Perform qualitative and quantitative analysis of algorithms.

- Analyse the convergence rate of algorithms.

- Test and analyse the scalability and robustness of algorithms.

- Save results in various formats (csv, json, pickle, png, pdf, jpeg).

- Export and import models.

- Solve a wide range of optimization problems.

Installation

- Install the stable release from PyPI:

$ pip install mealpy==3.0.2

- Install the alpha/beta version from PyPI:

$ pip install mealpy==2.5.4a6

- Install the pre-release version directly from the source code:

$ git clone https://github.com/thieu1995/mealpy.git

$ cd mealpy

$ python setup.py install

- To install the development version directly from GitHub:

$ pip install git+https://github.com/thieu1995/mealpy

After installation, you can import MEALPY like any other Python module:

$ python

```python
>>> import mealpy
>>> mealpy.__version__
>>> print(mealpy.get_all_optimizers())
>>> model = mealpy.get_optimizer_by_name("OriginalWOA")(epoch=100, pop_size=50)
```

Examples

Before diving into the examples, ask yourself two questions: what type of problem are you trying to solve, and what would a solution to your specific problem look like?

Based on the table below, you can select an appropriate type of decision variables to use.

| Class | Syntax | Problem Types |
|---|---|---|
| FloatVar | `FloatVar(lb=(-10., )*7, ub=(10., )*7, name="delta")` | Continuous Problem |
| IntegerVar | `IntegerVar(lb=(-10., )*7, ub=(10., )*7, name="delta")` | LP, IP, NLP, QP, MIP |
| StringVar | `StringVar(valid_sets=(("auto", "backward", "forward"), ("leaf", "branch", "root")), name="delta")` | ML, AI-optimize |
| BinaryVar | `BinaryVar(n_vars=11, name="delta")` | Networks |
| BoolVar | `BoolVar(n_vars=11, name="delta")` | ML, AI-optimize |
| PermutationVar | `PermutationVar(valid_set=(-10, -4, 10, 6, -2), name="delta")` | Combinatorial Optimization |
| CategoricalVar | `CategoricalVar(valid_sets=(("auto", 2, 3, "backward", True), (0, "tournament", "round-robin")), name="delta")` | MIP, MILP |
| SequenceVar | `SequenceVar(valid_sets=((1, ), {2, 3}, [3, 5, 1]), return_type=list, name='delta')` | Hyper-parameter tuning |
| TransferBoolVar | `TransferBoolVar(n_vars=11, name="delta", tf_func="sstf_02")` | ML, AI-optimize, Feature Selection |
| TransferBinaryVar | `TransferBinaryVar(n_vars=11, name="delta", tf_func="vstf_04")` | Networks, Feature Selection |
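
To build intuition for how an optimizer that searches a continuous space can still handle discrete variables such as permutations or categories, here is a minimal sketch of *random-key* decoding: positions of a real-valued vector are mapped to discrete values. This is a generic, well-known technique shown for illustration only; it is an assumption, not a description of MEALPY's internal implementation, and the helper names are hypothetical.

```python
import math

# Hypothetical helpers illustrating random-key decoding (not MEALPY's API).


def decode_permutation(keys, valid_set):
    """Order the items of valid_set by their real-valued keys (an argsort)."""
    order = sorted(range(len(keys)), key=lambda i: keys[i])
    return [valid_set[i] for i in order]


def decode_categorical(value, choices):
    """Map a real value in [0, 1] to one of the given choices."""
    idx = int(math.floor(value * len(choices)))
    # Clamp so that values on or outside the boundaries stay valid
    idx = min(max(idx, 0), len(choices) - 1)
    return choices[idx]


print(decode_permutation([0.7, 0.1, 0.9, 0.3, 0.5], (-10, -4, 10, 6, -2)))  # [-4, 6, -2, -10, 10]
print(decode_categorical(0.55, ("auto", "backward", "forward")))  # backward
```

The appeal of this encoding is that every real vector decodes to a valid permutation, so no repair step is needed after the optimizer moves a solution.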

Let's go through some basic and advanced examples.

Simple Benchmark Function

Using Problem dict

```python
from mealpy import FloatVar, SMA
import numpy as np


def objective_function(solution):
    # Sphere function: global minimum of 0 at the origin
    return np.sum(solution**2)


problem = {
    "obj_func": objective_function,
    "bounds": FloatVar(lb=(-100., )*30, ub=(100., )*30),
    "minmax": "min",
    "log_to": None,
}

model = SMA.OriginalSMA(epoch=100, pop_size=50, pr=0.03)
g_best = model.solve(problem)
print(f"Best solution: {g_best.solution}, Best fitness: {g_best.target.fitness}")
```

Define a custom Problem class

Please note that the Problem class no longer has the generate_position, amend_solution, and fitness_function methods. These are now handled by the variable (data type) classes in the table above; just choose the one that fits your problem.

We recommend defining a custom class that inherits from the Problem class if your decision variables are not FloatVar.

```python
from mealpy import Problem, FloatVar, BBO
import numpy as np


class Squared(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        # Store any extra data the objective needs before calling the parent constructor
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, solution):
        # Decode the raw vector back into the named variable "my_var"
        x = self.decode_solution(solution)["my_var"]
        return np.sum(x ** 2)


bound = FloatVar(lb=(-10., )*20, ub=(10., )*20, name="my_var")
problem = Squared(bounds=bound, minmax="min", name="Squared", data="Amazing")

model = BBO.OriginalBBO(epoch=100, pop_size=20)
g_best = model.solve(problem)
```
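
Conceptually, when a problem has several named variables, the optimizer works on a single flat vector and `decode_solution` turns it back into a dictionary keyed by each variable's `name`. The sketch below mimics that idea for plain float chunks only; the `decode_flat` helper and its `(name, size)` spec format are hypothetical and do not reproduce MEALPY's internals.

```python
# Hypothetical illustration of splitting a flat vector into named variables.


def decode_flat(vector, spec):
    """spec is a list of (name, size) pairs in the order the variables were declared."""
    decoded, start = {}, 0
    for name, size in spec:
        chunk = vector[start:start + size]
        # In this sketch, single-element variables become scalars and longer ones lists
        decoded[name] = chunk[0] if size == 1 else list(chunk)
        start += size
    if start != len(vector):
        raise ValueError("vector length does not match the variable spec")
    return decoded


print(decode_flat([0.5, 1.5, 2.5, 3.0], [("my_var", 3), ("C_paras", 1)]))
# {'my_var': [0.5, 1.5, 2.5], 'C_paras': 3.0}
```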

Set Seed for Optimizer (a frequently requested feature)

You can set a random seed for each run of a single optimizer to make its results reproducible.

```python
from mealpy import SMA

# `problem` is defined as in the examples above
model = SMA.OriginalSMA(epoch=100, pop_size=50, pr=0.03)
g_best = model.solve(problem=problem, seed=10)
```
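
A seed helps because a stochastic optimizer draws all of its random numbers from a pseudo-random generator; fixing the seed fixes that sequence, so repeated runs make the same decisions. The toy random search below is plain Python, not MEALPY code (`random_search` is a hypothetical helper), and shows that two runs with the same seed return identical results.

```python
import random


def sphere(x):
    return sum(v * v for v in x)


def random_search(seed, n_dims=5, n_iters=200, lb=-100.0, ub=100.0):
    """Toy random search that draws every sample from its own seeded generator."""
    rng = random.Random(seed)
    best_x, best_f = None, float("inf")
    for _ in range(n_iters):
        x = [rng.uniform(lb, ub) for _ in range(n_dims)]
        f = sphere(x)
        if f < best_f:
            best_x, best_f = x, f
    return best_x, best_f


run_a = random_search(seed=10)
run_b = random_search(seed=10)
print(run_a == run_b)  # True: same seed, same result
```

Using a dedicated `random.Random` instance, rather than the module-level generator, keeps a run reproducible even if other code also consumes random numbers.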

Large-Scale Optimization

```python
from mealpy import FloatVar, SHADE
import numpy as np


def objective_function(solution):
    return np.sum(solution**2)


# A 10,000-dimensional sphere problem
problem = {
    "obj_func": objective_function,
    "bounds": FloatVar(lb=(-1000., )*10000, ub=(1000.,)*10000),
    "minmax": "min",
    "log_to": "console",
}

optimizer = SHADE.OriginalSHADE(epoch=10000, pop_size=100)
g_best = optimizer.solve(problem)
print(f"Best solution: {g_best.solution}, Best fitness: {g_best.target.fitness}")
```

Distributed Optimization / Parallelization Optimization

Please read the article "MEALPY: An open-source library for latest meta-heuristic algorithms in Python" for a clear explanation of parallelization (distributed optimization) in metaheuristics. Not all metaheuristics can be run in parallel.

```python
from mealpy import FloatVar, SMA
import numpy as np


def objective_function(solution):
    return np.sum(solution**2)


problem = {
    "obj_func": objective_function,
    "bounds": FloatVar(lb=(-100., )*100, ub=(100., )*100),
    "minmax": "min",
    "log_to": "console",
}

# The main guard is needed for mode="process" on platforms that start
# worker processes with the "spawn" method (the default on Windows and macOS)
if __name__ == "__main__":
    optimizer = SMA.OriginalSMA(epoch=10000, pop_size=100, pr=0.03)

    # Run with 10 worker threads
    optimizer.solve(problem, mode="thread", n_workers=10)
    print(f"Best solution: {optimizer.g_best.solution}, Best fitness: {optimizer.g_best.target.fitness}")

    # Run with 8 worker processes
    optimizer.solve(problem, mode="process", n_workers=8)
    print(f"Best solution: {optimizer.g_best.solution}, Best fitness: {optimizer.g_best.target.fitness}")
```
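
The idea behind `mode="thread"` and `mode="process"` is that the fitness values of different candidates are independent, so they can be computed concurrently. The sketch below shows that idea with the standard library's `concurrent.futures`; it is an illustration only and an assumption about, not a copy of, how MEALPY schedules its workers (`evaluate_population` is a hypothetical helper). As a rule of thumb, threads tend to help when the objective spends its time in code that releases the GIL (such as many large NumPy operations or I/O), while processes suit pure-Python, CPU-bound objectives.

```python
import random
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def sphere(solution):
    return sum(v * v for v in solution)


def evaluate_population(population, mode="thread", n_workers=4):
    """Evaluate all candidates concurrently; results keep the population order."""
    executor_cls = ThreadPoolExecutor if mode == "thread" else ProcessPoolExecutor
    with executor_cls(max_workers=n_workers) as executor:
        return list(executor.map(sphere, population))


if __name__ == "__main__":
    # With mode="process", spawn-based platforms re-import this module in each worker,
    # which is why the demo sits behind the main guard
    rng = random.Random(0)
    population = [[rng.uniform(-100, 100) for _ in range(10)] for _ in range(20)]
    serial = [sphere(p) for p in population]
    threaded = evaluate_population(population, mode="thread", n_workers=4)
    print(threaded == serial)  # True
```

`executor.map` returns results in input order, so each fitness value stays aligned with its candidate.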

The Benefit Of Using Custom Problem Class (BEST PRACTICE)

Optimize Machine Learning model

In this example, we use the SMA optimizer to tune the hyper-parameters of an SVC model.

```python
from sklearn.svm import SVC
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn import datasets, metrics
from mealpy import FloatVar, StringVar, IntegerVar, BoolVar, CategoricalVar, SMA, Problem

# Load the data, split it, and standardize the features
X, y = datasets.load_breast_cancer(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1, stratify=y)
sc = StandardScaler()
X_train_std = sc.fit_transform(X_train)
X_test_std = sc.transform(X_test)
data = {
    "X_train": X_train_std,
    "X_test": X_test_std,
    "y_train": y_train,
    "y_test": y_test
}


class SvmOptimizedProblem(Problem):
    def __init__(self, bounds=None, minmax="max", data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        # Decode the raw vector into named hyper-parameters
        x_decoded = self.decode_solution(x)
        C_paras, kernel_paras = x_decoded["C_paras"], x_decoded["kernel_paras"]
        degree, gamma, probability = x_decoded["degree_paras"], x_decoded["gamma_paras"], x_decoded["probability_paras"]
        svc = SVC(C=C_paras, kernel=kernel_paras, degree=degree,
                  gamma=gamma, probability=probability, random_state=1)
        svc.fit(self.data["X_train"], self.data["y_train"])
        y_predict = svc.predict(self.data["X_test"])
        # Accuracy on the test split is the value to maximize
        return metrics.accuracy_score(self.data["y_test"], y_predict)


my_bounds = [
    FloatVar(lb=0.01, ub=1000., name="C_paras"),
    StringVar(valid_sets=('linear', 'poly', 'rbf', 'sigmoid'), name="kernel_paras"),
    IntegerVar(lb=1, ub=5, name="degree_paras"),
    CategoricalVar(valid_sets=('scale', 'auto', 0.01, 0.05, 0.1, 0.5, 1.0), name="gamma_paras"),
    BoolVar(n_vars=1, name="probability_paras"),
]

problem = SvmOptimizedProblem(bounds=my_bounds, minmax="max", data=data)
model = SMA.OriginalSMA(epoch=100, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best accuracy: {model.g_best.target.fitness}")
print(f"Best parameters: {model.problem.decode_solution(model.g_best.solution)}")
```

Solving Combinatorial Problems

Traveling Salesman Problem (TSP)

In MEALPY, a solution to the Traveling Salesman Problem (TSP) is a possible route that visits every city exactly once and returns to the starting city. The solution is typically represented as a permutation of the cities, in which each city appears exactly once.

For example, consider a TSP instance with 5 cities labeled A, B, C, D, and E. One possible solution is the permutation [A, B, D, E, C], which gives the order in which the cities are visited: the tour starts at city A, moves to city B, then D, E, and finally C before returning to city A.
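
To make the representation concrete, the sketch below computes the length of a closed tour from a permutation, including the final leg back to the starting city. The coordinates for cities A to E are made up for illustration.

```python
import math

# Hypothetical coordinates for the five cities in the example
cities = {"A": (0, 0), "B": (3, 0), "C": (0, 4), "D": (3, 4), "E": (6, 4)}


def tour_length(route, coords):
    """Sum of Euclidean leg lengths, closing the loop back to route[0]."""
    total = 0.0
    for i, city in enumerate(route):
        next_city = route[(i + 1) % len(route)]
        (x1, y1), (x2, y2) = coords[city], coords[next_city]
        total += math.hypot(x2 - x1, y2 - y1)
    return total


print(tour_length(["A", "B", "D", "E", "C"], cities))  # 20.0
```

Because the tour is closed, rotating the permutation (for example starting at B instead of A) gives the same length, which is why many different permutations encode the same tour.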

```python
import numpy as np
from mealpy import PermutationVar, WOA, Problem

# (x, y) coordinates of 20 cities
city_positions = np.array([[60, 200], [180, 200], [80, 180], [140, 180], [20, 160],
                           [100, 160], [200, 160], [140, 140], [40, 120], [100, 120],
                           [180, 100], [60, 80], [120, 80], [180, 60], [20, 40],
                           [100, 40], [200, 40], [20, 20], [60, 20], [160, 20]])
num_cities = len(city_positions)
data = {
    "city_positions": city_positions,
    "num_cities": num_cities,
}


class TspProblem(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    @staticmethod
    def calculate_distance(city_a, city_b):
        # Euclidean distance between two cities
        return np.linalg.norm(city_a - city_b)

    @staticmethod
    def calculate_total_distance(route, city_positions):
        total_distance = 0
        num_cities = len(route)
        for idx in range(num_cities):
            current_city = route[idx]
            # The modulo closes the tour by returning to the first city
            next_city = route[(idx + 1) % num_cities]
            total_distance += TspProblem.calculate_distance(city_positions[current_city], city_positions[next_city])
        return total_distance

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        route = x_decoded["per_var"]
        fitness = self.calculate_total_distance(route, self.data["city_positions"])
        return fitness


bounds = PermutationVar(valid_set=list(range(0, num_cities)), name="per_var")
problem = TspProblem(bounds=bounds, minmax="min", data=data)

model = WOA.OriginalWOA(epoch=100, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best route: {model.problem.decode_solution(model.g_best.solution)}")
```

Job Shop Scheduling Problem Using WOA Optimizer

Note that this implementation assumes that the job times and machine times are provided as 2D lists, where job_times[i][j] is the processing time of job i on machine j.

Keep in mind that this is a simplified implementation, and you may need to modify it to suit the specific requirements and constraints of your Job Shop Scheduling problem.

```python
import numpy as np
from mealpy import PermutationVar, WOA, Problem

# job_times[i][j]: processing time of job i on machine j
job_times = [[2, 1, 3], [4, 2, 1], [3, 3, 2]]
machine_times = [[3, 2, 1], [1, 4, 2], [2, 3, 2]]
n_jobs = len(job_times)
n_machines = len(job_times[0])
data = {
    "job_times": job_times,
    "machine_times": machine_times,
    "n_jobs": n_jobs,
    "n_machines": n_machines
}


class JobShopProblem(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        x = x_decoded["per_var"]
        job_times = self.data["job_times"]
        # makespan[j][m]: completion time of job j on machine m
        makespan = np.zeros((self.data["n_jobs"], self.data["n_machines"]))
        for gene in x:
            # Each gene encodes one (job, machine) pair
            job_idx = gene // self.data["n_machines"]
            machine_idx = gene % self.data["n_machines"]
            if job_idx == 0 and machine_idx == 0:
                makespan[job_idx][machine_idx] = job_times[job_idx][machine_idx]
            elif job_idx == 0:
                makespan[job_idx][machine_idx] = makespan[job_idx][machine_idx - 1] + job_times[job_idx][machine_idx]
            elif machine_idx == 0:
                makespan[job_idx][machine_idx] = makespan[job_idx - 1][machine_idx] + job_times[job_idx][machine_idx]
            else:
                makespan[job_idx][machine_idx] = max(makespan[job_idx][machine_idx - 1], makespan[job_idx - 1][machine_idx]) + job_times[job_idx][machine_idx]
        return np.max(makespan)


bounds = PermutationVar(valid_set=list(range(0, n_jobs*n_machines)), name="per_var")
problem = JobShopProblem(bounds=bounds, minmax="min", data=data)

model = WOA.OriginalWOA(epoch=100, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best real scheduling: {model.problem.decode_solution(model.g_best.solution)}")
```
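
The objective above is deliberately simplified. A widely used alternative for job shop problems is *operation-based* decoding: a sequence in which each job index appears once per machine, and the k-th occurrence of job j is scheduled as that job's k-th operation at the earliest time both the job and the machine are free. The sketch below assumes, as the example data does, that every job visits machines 0, 1, ..., m-1 in that order; `decode_makespan` is a hypothetical helper, not part of MEALPY. The same `gene // n_machines` mapping used above turns a permutation of 0..n_jobs*n_machines-1 into such a job sequence.

```python
def decode_makespan(job_sequence, job_times):
    """Makespan of the schedule built by placing operations in sequence order.

    job_times[j][m] is the processing time of job j on machine m, and every job
    is assumed to visit machines 0, 1, ..., m-1 in order.
    """
    n_jobs, n_machines = len(job_times), len(job_times[0])
    next_op = [0] * n_jobs            # next machine each job must visit
    job_ready = [0] * n_jobs          # time each job finishes its previous operation
    machine_ready = [0] * n_machines  # time each machine becomes free
    for job in job_sequence:
        machine = next_op[job]
        start = max(job_ready[job], machine_ready[machine])
        finish = start + job_times[job][machine]
        job_ready[job] = machine_ready[machine] = finish
        next_op[job] += 1
    return max(job_ready)


job_times = [[2, 1, 3], [4, 2, 1], [3, 3, 2]]
n_machines = len(job_times[0])
permutation = list(range(9))
job_sequence = [gene // n_machines for gene in permutation]  # [0, 0, 0, 1, 1, 1, 2, 2, 2]
print(decode_makespan(job_sequence, job_times))  # 14
```

Every job sequence decodes to a feasible schedule, so the optimizer never has to repair solutions; only the order in which jobs compete for machines changes.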

Shortest Path Problem

In this example, the graph is represented as a NumPy array in which each element is the cost (distance) between two nodes: graph[i, j] is the distance from node i to node j, and a value of 0 means there is no direct edge. If your graph is represented differently, you may need to modify the code accordingly. Because each solution is a permutation of all nodes, the example searches for the cheapest path that visits every node exactly once.

Please keep in mind that this implementation is a basic example and may not scale well to large problems. Further modifications may be required depending on your specific use case.

```python
import numpy as np
from mealpy import PermutationVar, WOA, Problem

# graph[i, j] is the distance from node i to node j; 0 means no direct edge
graph = np.array([
    [0, 2, 4, 0, 7, 9],
    [2, 0, 1, 4, 2, 8],
    [4, 1, 0, 1, 3, 0],
    [6, 4, 5, 0, 3, 2],
    [0, 2, 3, 3, 0, 2],
    [9, 0, 4, 2, 2, 0]
])


class ShortestPathProblem(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        self.data = data
        # Large penalty returned when a path uses a missing edge
        self.eps = 1e10
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        individual = x_decoded["path"]
        total_distance = 0
        for idx in range(len(individual) - 1):
            start_node = individual[idx]
            end_node = individual[idx + 1]
            weight = self.data[start_node, end_node]
            if weight == 0:
                return self.eps
            total_distance += weight
        return total_distance


num_nodes = len(graph)
bounds = PermutationVar(valid_set=list(range(0, num_nodes)), name="path")
problem = ShortestPathProblem(bounds=bounds, minmax="min", data=graph)

model = WOA.OriginalWOA(epoch=100, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best path: {model.problem.decode_solution(model.g_best.solution)}")
```
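
With only six nodes there are 6! = 720 candidate paths, so you can check the optimizer's answer by exhaustive search. The sketch below reuses the graph from the example (as a nested list, so it runs without NumPy) and the same cost rule: a zero entry means no edge, and any path that uses one gets a large penalty. It is a verification aid, not part of MEALPY.

```python
from itertools import permutations

graph = [
    [0, 2, 4, 0, 7, 9],
    [2, 0, 1, 4, 2, 8],
    [4, 1, 0, 1, 3, 0],
    [6, 4, 5, 0, 3, 2],
    [0, 2, 3, 3, 0, 2],
    [9, 0, 4, 2, 2, 0],
]
PENALTY = 1e10


def path_cost(path, graph):
    """Total weight along the path; PENALTY if it uses a missing (zero) edge."""
    total = 0
    for start, end in zip(path, path[1:]):
        weight = graph[start][end]
        if weight == 0:
            return PENALTY
        total += weight
    return total


# Exhaustively check every ordering of the nodes
best_path = min(permutations(range(len(graph))), key=lambda p: path_cost(p, graph))
print(best_path, path_cost(best_path, graph))
```

Exhaustive search grows factorially with the number of nodes, so this check is only practical for tiny instances like this one; it is useful for confirming that the metaheuristic reaches the true optimum before moving to larger graphs.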

Location Optimization

Let's consider an example of location optimization in the context of a retail company that wants to open a certain number of new stores in a region to maximize market coverage while minimizing operational costs.

A company wants to open five new stores in a region with several potential locations. The objective is to determine the optimal locations for these stores while considering factors such as population density and transportation costs. The goal is to maximize market coverage by locating stores in areas with high demand while minimizing the overall transportation costs required to serve customers.

By applying location optimization techniques, the retail company can make informed decisions about where to open new stores, considering factors such as population density and transportation costs. This approach allows the company to maximize market coverage, make efficient use of resources, and ultimately improve customer service and profitability.

Note that this example is a simplified illustration; in real-world scenarios, location optimization problems can involve more complex constraints, additional factors, and larger datasets. However, the general process remains similar, involving data analysis, mathematical modeling, and optimization techniques to determine the optimal locations for facilities.

```python
import numpy as np
from mealpy import BinaryVar, WOA, Problem

# (x, y) coordinates of 10 candidate store locations
locations = np.array([
    [2, 4], [5, 6], [9, 3], [7, 8], [1, 10],
    [3, 2], [5, 5], [8, 2], [7, 6], [1, 9]
])
# Pairwise Euclidean distances between candidate locations
distance_matrix = np.linalg.norm(locations[:, np.newaxis] - locations, axis=2)
num_stores = 5
max_distance = 10
data = {
    "num_stores": num_stores,
    "max_distance": max_distance,
    "distance_matrix": distance_matrix,
    "penalty": 1e10
}


class LocationOptProblem(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        # x[i] == 1 means a store is opened at location i
        x = x_decoded["placement_var"]
        total_coverage = np.sum(x)
        # Sum of distances from every opened location to all candidate locations
        total_dist = np.sum(x[:, np.newaxis] * self.data["distance_matrix"])
        if total_dist == 0:
            return self.data["penalty"]
        # Penalize solutions that open fewer stores than required
        if total_coverage < self.data["num_stores"]:
            return self.data["penalty"]
        return total_dist


bounds = BinaryVar(n_vars=len(locations), name="placement_var")
problem = LocationOptProblem(bounds=bounds, minmax="min", data=data)

model = WOA.OriginalWOA(epoch=50, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best placement: {model.problem.decode_solution(model.g_best.solution)}")
```
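
Under the objective in this example, each opened location adds its (non-negative) distances to all candidates, so opening more than `num_stores` locations never lowers the cost. The optimum is therefore a set of exactly five locations, and with 10 candidates there are only C(10, 5) = 252 such sets to check. The sketch below does that exhaustive check in plain Python as a way to validate the optimizer's result; it restates the example's objective under that reading and is not part of MEALPY.

```python
import math
from itertools import combinations

locations = [(2, 4), (5, 6), (9, 3), (7, 8), (1, 10), (3, 2), (5, 5), (8, 2), (7, 6), (1, 9)]
num_stores = 5

# Row sums of the distance matrix: total distance from location i to every candidate
row_sums = [sum(math.hypot(xi - xj, yi - yj) for xj, yj in locations) for xi, yi in locations]

# Check every set of exactly num_stores locations
best_subset = min(combinations(range(len(locations)), num_stores),
                  key=lambda subset: sum(row_sums[i] for i in subset))
print(best_subset, sum(row_sums[i] for i in best_subset))
```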

Supply Chain Optimization

Let's assume we have a supply chain network with 5 distribution centers (DC1, DC2, DC3, DC4, DC5) and 10 products (P1, P2, P3, ..., P10). Our goal is to determine the allocation of products to distribution centers that minimizes the total transportation cost.

Each solution represents an allocation of products to distribution centers. We can use a binary matrix with dimensions (10, 5), where element (i, j) indicates whether product i is allocated to distribution center j. For example, the row [1, 0, 1, 0, 1] for product 1 would mean that product 1 is allocated to DC1, DC3, and DC5.

We can also impose a maximum capacity on each distribution center; therefore, we need a penalty term in the fitness function to penalize solutions that violate this constraint. The penalty can be based on the degree of violation or on a fixed penalty value.
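
The two penalty styles can be sketched as follows for a minimization objective. The function `penalized_cost` and its `mode`, `weight`, and `fixed_penalty` parameters are hypothetical names chosen for illustration; they are not MEALPY options.

```python
def penalized_cost(allocation, cost, max_capacity, mode="degree", weight=100.0, fixed_penalty=1e6):
    """Transportation cost plus a capacity penalty.

    allocation[i][j] is 1 if product i is allocated to distribution center j.
    mode="degree" scales the penalty with the total excess load;
    mode="fixed" adds a constant whenever any center is over capacity.
    """
    n_centers = len(allocation[0])
    base = sum(c * a for cost_row, alloc_row in zip(cost, allocation) for c, a in zip(cost_row, alloc_row))
    loads = [sum(row[j] for row in allocation) for j in range(n_centers)]
    excess = sum(max(load - max_capacity, 0) for load in loads)
    if excess == 0:
        return base
    if mode == "degree":
        return base + weight * excess
    if mode == "fixed":
        return base + fixed_penalty
    raise ValueError(f"unknown mode: {mode}")


allocation = [[1, 0], [1, 0], [1, 1]]  # 3 products, 2 centers; center 0 holds 3 products
cost = [[2, 5], [3, 1], [4, 4]]
print(penalized_cost(allocation, cost, max_capacity=2))                # 113.0 (13 + 100 * 1)
print(penalized_cost(allocation, cost, max_capacity=2, mode="fixed"))  # 1000013.0 (13 + 1e6)
```

A degree-based penalty tells the optimizer how far a solution is from feasibility, which usually guides the search better than a flat penalty that treats all violations alike.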

```python
import numpy as np
from mealpy import BinaryVar, WOA, Problem

num_products = 10
num_distribution_centers = 5
# Random unit transportation costs (products x distribution centers)
transportation_cost = np.random.randint(1, 10, size=(num_products, num_distribution_centers))
data = {
    "num_products": num_products,
    "num_distribution_centers": num_distribution_centers,
    "transportation_cost": transportation_cost,
    "max_capacity": 4,
    "penalty": 1e10
}


class SupplyChainProblem(Problem):
    def __init__(self, bounds=None, minmax=None, data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        # Rows are products, columns are distribution centers
        x = x_decoded["placement_var"].reshape((self.data["num_products"], self.data["num_distribution_centers"]))
        # Every product must be allocated to at least one distribution center
        if np.any(np.all(x == 0, axis=1)):
            return 0
        total_cost = np.sum(self.data["transportation_cost"] * x)
        # Penalize allocations that exceed a center's capacity
        excess_capacity = np.maximum(np.sum(x, axis=0) - self.data["max_capacity"], 0)
        penalty = np.sum(excess_capacity)
        # Maximizing 1 / (cost + penalty) minimizes cost + penalty
        fitness = 1 / (total_cost + penalty)
        return fitness


bounds = BinaryVar(n_vars=num_products * num_distribution_centers, name="placement_var")
problem = SupplyChainProblem(bounds=bounds, minmax="max", data=data)

model = WOA.OriginalWOA(epoch=50, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best allocation: {model.problem.decode_solution(model.g_best.solution)['placement_var'].reshape((num_products, num_distribution_centers))}")
```

Healthcare Workflow Optimization Problem

Define a chromosome representation that encodes the allocation of resources and patient flow in the emergency department. This could be a binary matrix where each row represents a patient and each column represents a resource (room). An element of 1 means the patient is assigned to that room, and 0 means the patient is not assigned to that room.

Please note that this implementation is a basic template and may require further customization based on the specific objectives, constraints, and evaluation criteria of your healthcare workflow optimization problem. You'll need to define the specific fitness function and optimization objectives based on the factors you want to optimize, such as patient waiting times, resource utilization, and other relevant metrics in the healthcare workflow context.

```python
import numpy as np
from mealpy import BinaryVar, WOA, Problem

num_patients = 50
num_resources = 10
# Random waiting time of each patient for each room
waiting_matrix = np.random.randint(1, 10, size=(num_patients, num_resources))
data = {
    "num_patients": num_patients,
    "num_resources": num_resources,
    "waiting_matrix": waiting_matrix,
    "max_resource_capacity": 10,
    "max_waiting_time": 60,
    "penalty_value": 1e2,
    "penalty_patient": 1e10
}


class HealthcareWorkflowProblem(Problem):
    def __init__(self, bounds=None, minmax=None, data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        # Rows are patients, columns are resources (rooms)
        x = x_decoded["placement_var"].reshape(self.data["num_patients"], self.data["num_resources"])
        # Heavily penalize infeasible assignments, such as a patient with no room
        if np.any(np.all(x == 0, axis=1)) or np.any(np.sum(x > 3, axis=1)):
            return self.data["penalty_patient"]
        room_used = np.sum(x, axis=0)
        wait_time = np.sum(x * self.data["waiting_matrix"], axis=1)
        # Count rooms over capacity and patients waiting too long
        violated_constraints = np.sum(room_used > self.data["max_resource_capacity"]) + np.sum(wait_time > self.data["max_waiting_time"])
        resource_utilization_fitness = 1 - np.mean(room_used) / self.data["max_resource_capacity"]
        waiting_time_fitness = 1 - np.mean(wait_time) / self.data["max_waiting_time"]
        fitness = resource_utilization_fitness + waiting_time_fitness + self.data['penalty_value'] * violated_constraints
        return fitness


bounds = BinaryVar(n_vars=num_patients * num_resources, name="placement_var")
problem = HealthcareWorkflowProblem(bounds=bounds, minmax="min", data=data)

model = WOA.OriginalWOA(epoch=50, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best allocation: {model.problem.decode_solution(model.g_best.solution)['placement_var'].reshape((num_patients, num_resources))}")
```

Production Optimization Problem

Let's consider a simplified example of production optimization in the context of a manufacturing company that produces electronic devices, such as smartphones. The objective is to maximize production output while minimizing production costs.

This example uses binary representations for production configurations, assuming each task can be assigned to a resource (1) or not (0). You may need to adapt the representation and operators to suit your specific production optimization problem.

```python
import numpy as np
from mealpy import BinaryVar, WOA, Problem

num_tasks = 10
num_resources = 5
task_processing_times = np.array([2, 3, 4, 2, 3, 2, 3, 4, 2, 3])
resource_capacity = np.array([10, 8, 6, 12, 15])
production_costs = np.array([5, 6, 4, 7, 8, 9, 5, 6, 7, 8])
production_outputs = np.array([20, 18, 16, 22, 25, 24, 20, 18, 19, 21])
max_total_time = 50
max_defect_rate = 0.2
penalty = -1000
data = {
    "num_tasks": num_tasks,
    "num_resources": num_resources,
    "task_processing_times": task_processing_times,
    "resource_capacity": resource_capacity,
    "production_costs": production_costs,
    "production_outputs": production_outputs,
    "max_total_time": max_total_time,
    "max_defect_rate": max_defect_rate,
    "penalty": penalty
}


class ProductionOptimizationProblem(Problem):
    def __init__(self, bounds=None, minmax=None, data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        # Rows are tasks, columns are resources
        x = x_decoded["placement_var"].reshape((self.data["num_tasks"], self.data["num_resources"]))
        # Every task needs a resource, and every resource needs at least one task
        if np.any(np.all(x == 0, axis=1)) or np.any(np.all(x == 0, axis=0)):
            return self.data["penalty"]
        violated_constraints = 0
        resource_utilization = np.sum(x, axis=0)
        if np.any(resource_utilization > self.data["resource_capacity"]):
            violated_constraints += 1
        total_time = np.sum(np.dot(self.data["task_processing_times"].reshape(1, -1), x))
        if total_time > self.data["max_total_time"]:
            violated_constraints += 1
        defect_rate = np.dot(self.data["production_costs"].reshape(1, -1), x) / np.dot(self.data["production_outputs"], x)
        if np.any(defect_rate > self.data["max_defect_rate"]):
            violated_constraints += 1
        profit = np.sum(np.dot(self.data["production_outputs"].reshape(1, -1), x)) - np.sum(np.dot(self.data["production_costs"].reshape(1, -1), x))
        # The penalty is negative, so each violated constraint lowers the profit being maximized
        if violated_constraints > 0:
            return profit + self.data["penalty"] * violated_constraints
        return profit


bounds = BinaryVar(n_vars=num_tasks * num_resources, name="placement_var")
problem = ProductionOptimizationProblem(bounds=bounds, minmax="max", data=data)

model = WOA.OriginalWOA(epoch=50, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best assignment: {model.problem.decode_solution(model.g_best.solution)['placement_var'].reshape((num_tasks, num_resources))}")
```

Employee Rostering Problem Using WOA Optimizer

The goal is to create an optimal schedule that assigns employees to shifts while satisfying various constraints and objectives. Note that this implementation assumes that the shift_requirements array has dimensions (num_employees, num_shifts) and that shift_costs is a 1D array of length num_shifts.

Please keep in mind that this is a simplified implementation, and you may need to modify it to suit the specific requirements and constraints of your employee rostering problem. You might also want to introduce additional mechanisms or constraints, such as fairness, employee preferences, or shift dependencies, to make the model more effective in real-world scenarios.

For example, with 5 employees and 3 shifts, a chromosome could be [2, 1, 0, 2, 0], where employee 0 is assigned to shift 2, employee 1 to shift 1, employee 2 to shift 0, and so on.
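
The sketch below evaluates that example chromosome by hand: it counts how many employees cover each shift and sums the per-shift costs, using the `shift_costs` values from the code that follows ([10, 8, 12]). The `roster_summary` helper is hypothetical.

```python
def roster_summary(chromosome, shift_costs):
    """Return (employees per shift, total cost) for a chromosome of shift indices."""
    shifts_covered = [0] * len(shift_costs)
    total_cost = 0
    for shift_idx in chromosome:
        shifts_covered[shift_idx] += 1
        total_cost += shift_costs[shift_idx]
    return shifts_covered, total_cost


print(roster_summary([2, 1, 0, 2, 0], [10, 8, 12]))  # ([2, 1, 2], 52)
```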

```python
import numpy as np
from mealpy import IntegerVar, WOA, Problem

shift_requirements = np.array([[2, 1, 3], [4, 2, 1], [3, 3, 2]])
shift_costs = np.array([10, 8, 12])
num_employees = shift_requirements.shape[0]
num_shifts = shift_requirements.shape[1]
data = {
    "shift_requirements": shift_requirements,
    "shift_costs": shift_costs,
    "num_employees": num_employees,
    "num_shifts": num_shifts
}


class EmployeeRosteringProblem(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        # x[i] is the shift index assigned to employee i
        x = x_decoded["shift_var"]
        shifts_covered = np.zeros(self.data["num_shifts"])
        total_cost = 0
        for idx in range(self.data["num_employees"]):
            shift_idx = int(x[idx])
            shifts_covered[shift_idx] += 1
            total_cost += self.data["shift_costs"][shift_idx]
        # Penalize the gap between required and actual shift coverage
        coverage_diff = self.data["shift_requirements"] - shifts_covered
        coverage_penalty = np.sum(np.abs(coverage_diff))
        return total_cost + coverage_penalty


bounds = IntegerVar(lb=[0, ]*num_employees, ub=[num_shifts-1, ]*num_employees, name="shift_var")
problem = EmployeeRosteringProblem(bounds=bounds, minmax="min", data=data)

model = WOA.OriginalWOA(epoch=50, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best roster: {model.problem.decode_solution(model.g_best.solution)}")
```

Maintenance Scheduling

In maintenance scheduling, the goal is to optimize the schedule for performing maintenance tasks on various assets or equipment. The objective is to minimize downtime and maximize the utilization of assets while considering constraints such as resource availability, task dependencies, and time constraints.

Each element in the solution indicates whether a task is assigned to an asset (1) or not (0), so the resulting schedule specifies which asset each task is assigned to, aiming to minimize the total downtime.

By using MEALPY, you can find an efficient maintenance schedule that minimizes downtime, maximizes asset utilization, and satisfies the relevant constraints, ultimately improving the reliability and productivity of maintenance operations.

```python
import numpy as np
from mealpy import BinaryVar, WOA, Problem

num_tasks = 10
num_assets = 5
# Random duration of each task on each asset
task_durations = np.random.randint(1, 10, size=(num_tasks, num_assets))
data = {
    "num_tasks": num_tasks,
    "num_assets": num_assets,
    "task_durations": task_durations,
    "unassigned_penalty": -100
}


class MaintenanceSchedulingProblem(Problem):
    def __init__(self, bounds=None, minmax=None, data=None, **kwargs):
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, x):
        x_decoded = self.decode_solution(x)
        x = x_decoded["task_var"]
        # Downtime is negated so that maximizing the fitness minimizes downtime
        downtime = -np.sum(x.reshape((self.data["num_tasks"], self.data["num_assets"])) * self.data["task_durations"])
        # Penalize the empty schedule (no task assigned to any asset)
        if np.sum(x) == 0:
            downtime += self.data["unassigned_penalty"]
        return downtime


bounds = BinaryVar(n_vars=num_tasks * num_assets, name="task_var")
problem = MaintenanceSchedulingProblem(bounds=bounds, minmax="max", data=data)

model = WOA.OriginalWOA(epoch=50, pop_size=20)
model.solve(problem)

print(f"Best agent: {model.g_best}")
print(f"Best solution: {model.g_best.solution}")
print(f"Best fitness: {model.g_best.target.fitness}")
print(f"Best schedule: {model.problem.decode_solution(model.g_best.solution)['task_var'].reshape((num_tasks, num_assets))}")
```

Constrained Benchmark Function

```python
import numpy as np
from mealpy import FloatVar, SMA


def objective_function(solution):
    # Inequality constraints g_i(x) <= 0 of the G01 benchmark (0-based indices).
    def g1(x):
        return 2 * x[0] + 2 * x[1] + x[9] + x[10] - 10

    def g2(x):
        return 2 * x[0] + 2 * x[2] + x[9] + x[11] - 10

    def g3(x):
        return 2 * x[1] + 2 * x[2] + x[10] + x[11] - 10

    def g4(x):
        return -8 * x[0] + x[9]

    def g5(x):
        return -8 * x[1] + x[10]

    def g6(x):
        return -8 * x[2] + x[11]

    def g7(x):
        return -2 * x[3] - x[4] + x[9]

    def g8(x):
        return -2 * x[5] - x[6] + x[10]

    def g9(x):
        return -2 * x[7] - x[8] + x[11]

    def violate(value):
        # Amount of violation: 0 when the constraint is satisfied.
        return 0 if value <= 0 else value

    fx = 5 * np.sum(solution[:4]) - 5 * np.sum(solution[:4] ** 2) - np.sum(solution[4:13])
    # Add a penalty for every violated constraint.
    fx += violate(g1(solution)) ** 2 + violate(g2(solution)) + violate(g3(solution)) + \
          2 * violate(g4(solution)) + violate(g5(solution)) + violate(g6(solution)) + \
          violate(g7(solution)) + violate(g8(solution)) + violate(g9(solution))
    return fx


problem = {
    "obj_func": objective_function,
    "bounds": FloatVar(lb=[0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0], ub=[1, 1, 1, 1, 1, 1, 1, 1, 1, 100, 100, 100, 1]),
    "minmax": "min",
}

optimizer = SMA.OriginalSMA(epoch=100, pop_size=50, p_t=0.03)
optimizer.solve(problem)

print(f"Best solution: {optimizer.g_best.solution}, Best fitness: {optimizer.g_best.target.fitness}")
```
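This objective is the classic G01 constrained benchmark, whose known optimum is x* = (1, 1, 1, 1, 1, 1, 1, 1, 1, 3, 3, 3, 1) with f(x*) = -15. The standalone check below (no Mealpy needed; the function names are illustrative) evaluates the unpenalized objective and the nine constraints at that point, which gives a reference value to compare the optimizer's best fitness against.

```python
import numpy as np


def g01_objective(x):
    """5*sum(x1..x4) - 5*sum(x1..x4 ** 2) - sum(x5..x13), 0-based slicing."""
    x = np.asarray(x, dtype=float)
    return 5 * np.sum(x[:4]) - 5 * np.sum(x[:4] ** 2) - np.sum(x[4:13])


def g01_constraints(x):
    """The nine inequality constraints g_i(x) <= 0."""
    x = np.asarray(x, dtype=float)
    return np.array([
        2 * x[0] + 2 * x[1] + x[9] + x[10] - 10,
        2 * x[0] + 2 * x[2] + x[9] + x[11] - 10,
        2 * x[1] + 2 * x[2] + x[10] + x[11] - 10,
        -8 * x[0] + x[9],
        -8 * x[1] + x[10],
        -8 * x[2] + x[11],
        -2 * x[3] - x[4] + x[9],
        -2 * x[5] - x[6] + x[10],
        -2 * x[7] - x[8] + x[11],
    ])


x_star = np.array([1, 1, 1, 1, 1, 1, 1, 1, 1, 3, 3, 3, 1], dtype=float)
# Positive parts of the constraints are the violations; all should be zero here.
violations = np.maximum(g01_constraints(x_star), 0.0)
print(g01_objective(x_star))   # -15.0
print(violations.sum())        # 0.0
```

Several constraints (g1, g2, g3, g7, g8, g9) are active (exactly 0) at the optimum, so a run that returns a fitness close to -15 without penalty terms is near the known solution.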

Multi-objective Benchmark Function

```python
import numpy as np
from mealpy import FloatVar, SMA


def objective_function(solution):
    def booth(x, y):
        return (x + 2*y - 7)**2 + (2*x + y - 5)**2

    def bukin(x, y):
        return 100 * np.sqrt(np.abs(y - 0.01 * x**2)) + 0.01 * np.abs(x + 10)

    def matyas(x, y):
        return 0.26 * (x**2 + y**2) - 0.48 * x * y

    # Return one value per objective; "obj_weights" below combines them.
    return [booth(solution[0], solution[1]), bukin(solution[0], solution[1]), matyas(solution[0], solution[1])]


problem = {
    "obj_func": objective_function,
    "bounds": FloatVar(lb=(-10, -10), ub=(10, 10)),
    "minmax": "min",
    # One weight per objective returned by objective_function.
    "obj_weights": [0.4, 0.1, 0.5]
}

optimizer = SMA.OriginalSMA(epoch=100, pop_size=50, p_t=0.03)
optimizer.solve(problem)

print(f"Best solution: {optimizer.g_best.solution}, Best fitness: {optimizer.g_best.target.fitness}")
```
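A sketch of how the three objectives could collapse into one fitness value, assuming the weights are applied as a weighted sum (our reading of "obj_weights", not confirmed here). The helper `weighted_fitness` is hypothetical and needs only NumPy.

```python
import numpy as np


def booth(x, y):
    return (x + 2 * y - 7) ** 2 + (2 * x + y - 5) ** 2


def bukin(x, y):
    return 100 * np.sqrt(np.abs(y - 0.01 * x ** 2)) + 0.01 * np.abs(x + 10)


def matyas(x, y):
    return 0.26 * (x ** 2 + y ** 2) - 0.48 * x * y


def weighted_fitness(solution, weights):
    """Scalarize the objective vector with a weighted sum (assumed scalarization)."""
    objectives = np.array([f(solution[0], solution[1]) for f in (booth, bukin, matyas)])
    return float(np.dot(objectives, weights)), objectives


# Evaluate at Booth's minimum (1, 3) with the weights used above.
fitness, objs = weighted_fitness([1.0, 3.0], [0.4, 0.1, 0.5])
print(objs)      # Booth is exactly 0 here; Bukin and Matyas are not
print(fitness)
```

The three functions have different minimizers (Booth at (1, 3), Bukin N.6 at (-10, 1), Matyas at (0, 0)), so the weighted optimum is a compromise whose location shifts as the weights change.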

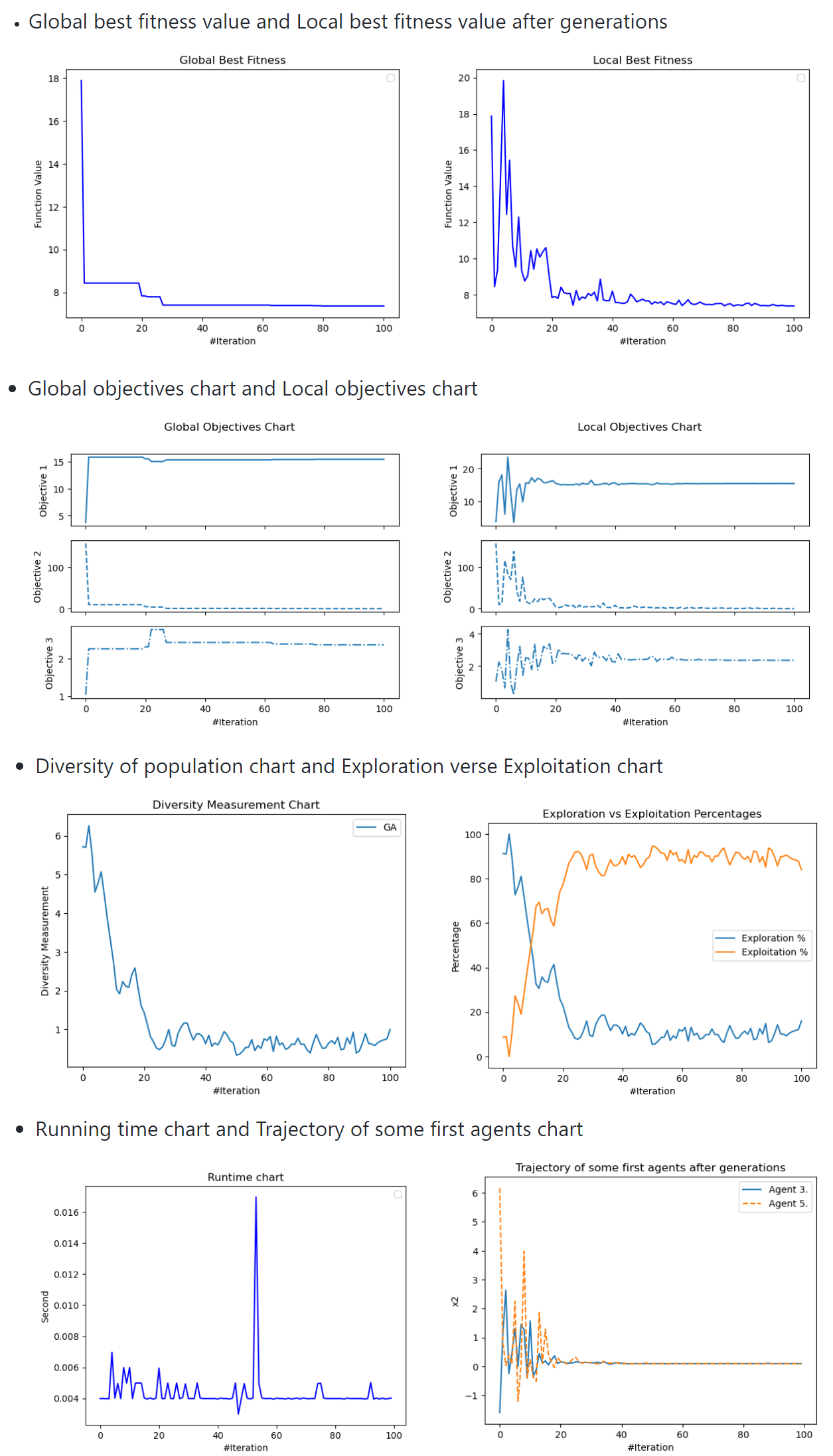

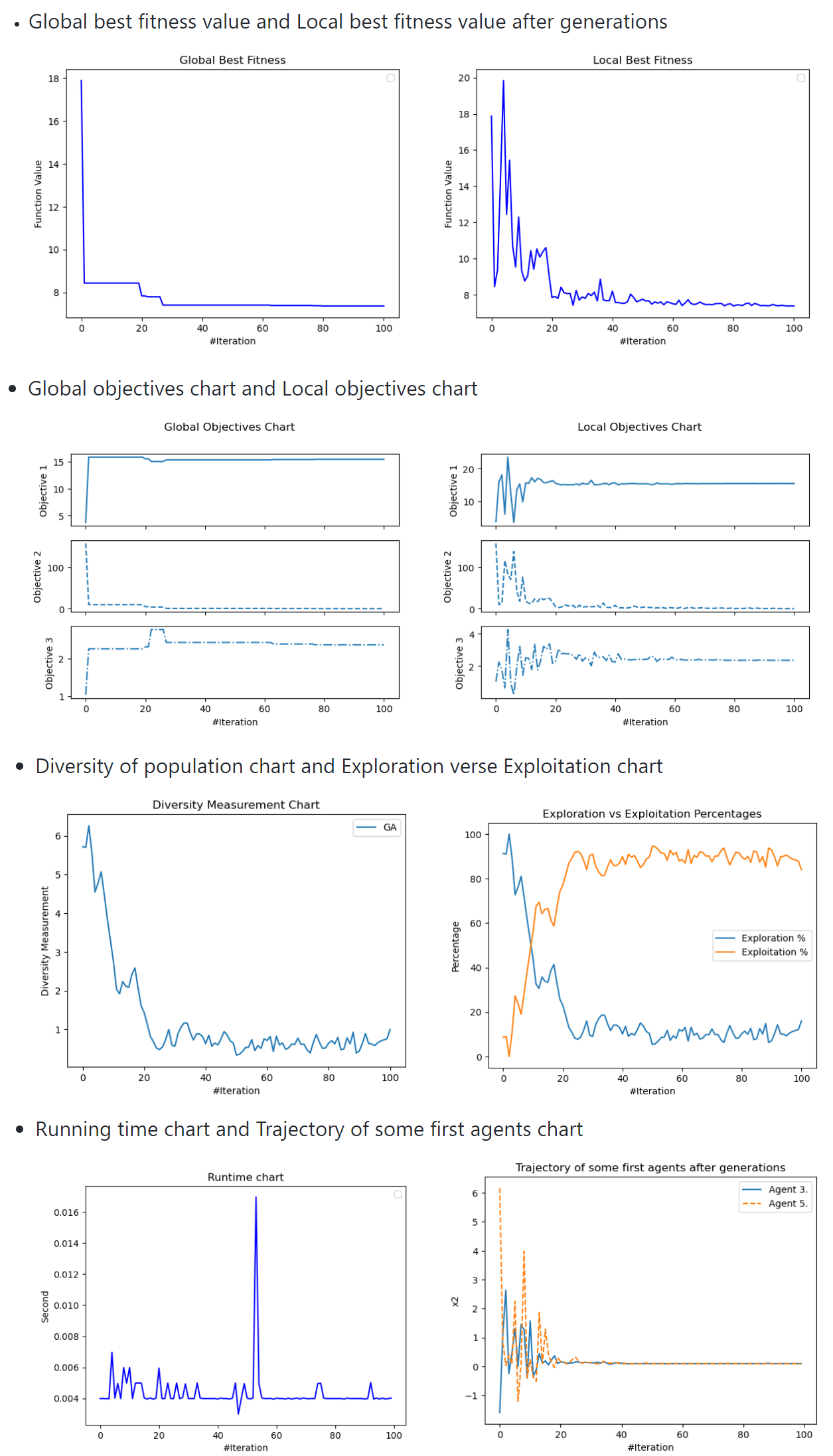

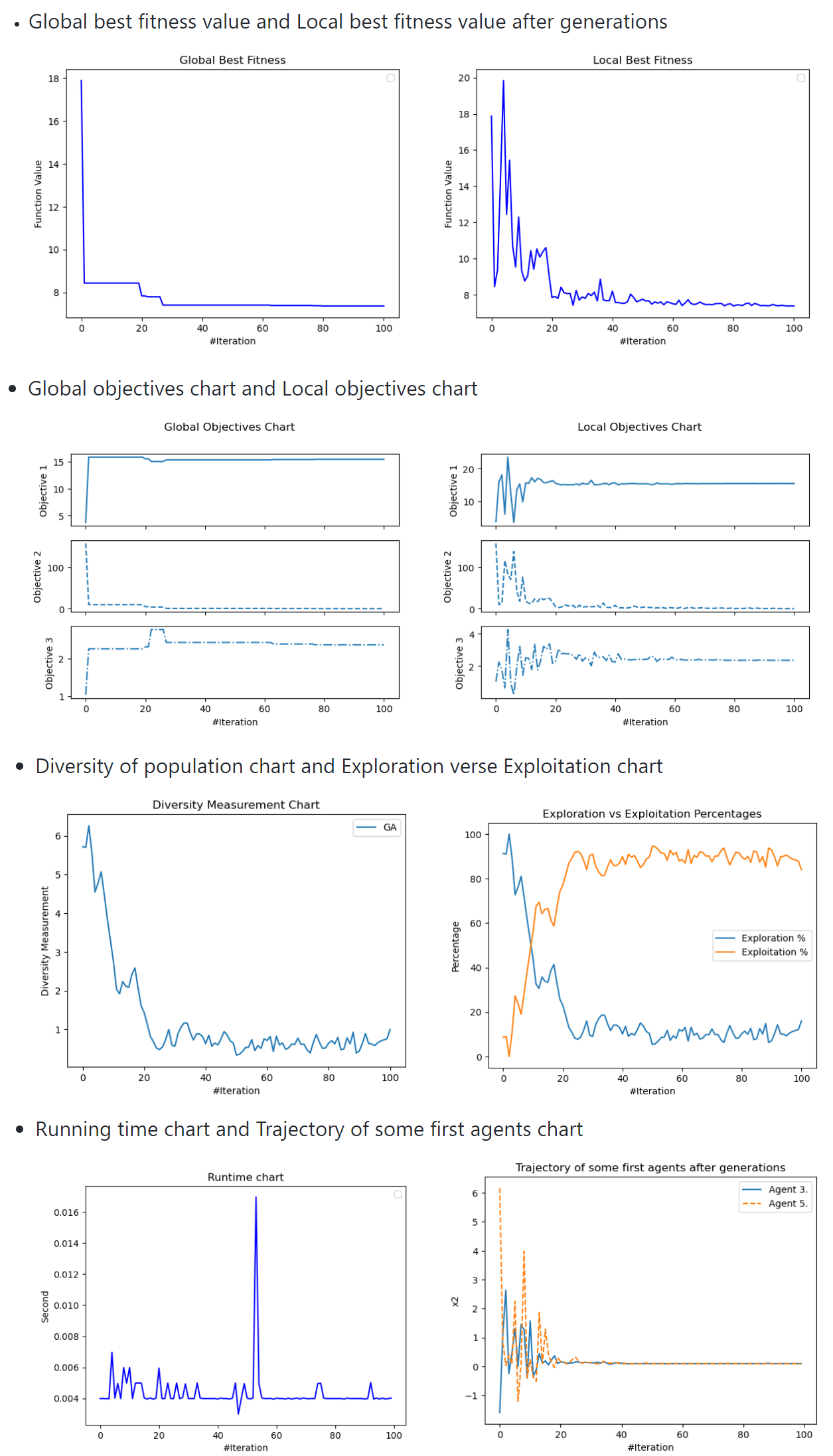

```python
# Save charts from the optimizer's history (run after optimizer.solve(problem)).
optimizer.history.save_global_objectives_chart(filename="hello/goc")
optimizer.history.save_local_objectives_chart(filename="hello/loc")
optimizer.history.save_global_best_fitness_chart(filename="hello/gbfc")
optimizer.history.save_local_best_fitness_chart(filename="hello/lbfc")
optimizer.history.save_runtime_chart(filename="hello/rtc")
optimizer.history.save_exploration_exploitation_chart(filename="hello/eec")
optimizer.history.save_diversity_chart(filename="hello/dc")
optimizer.history.save_trajectory_chart(list_agent_idx=[3, 5], selected_dimensions=[2], filename="hello/tc")
```

Custom Problem

For a custom problem, create a class that inherits from the Problem class; here the child class is named 'Squared'. In the initialization method of the 'Squared' class, set the bounds and minmax of the problem (bounds: the variable type of the problem, such as FloatVar; minmax: a string specifying whether it is a 'min' or 'max' problem).

Next, override the abstract method obj_func(), which takes a parameter 'solution' (the solution to be evaluated) and returns the function value. The resulting code should look like the snippet below. 'data' is an additional parameter we want to include in this class ('name' is passed through **kwargs), and you can include any other additional parameters you need. Remember to set up all additional parameters before super().__init__() is called.

```python
import numpy as np
from mealpy import Problem, FloatVar, BBO


class Squared(Problem):
    def __init__(self, bounds=None, minmax="min", data=None, **kwargs):
        # Set additional parameters before calling super().__init__().
        self.data = data
        super().__init__(bounds, minmax, **kwargs)

    def obj_func(self, solution):
        # Sphere function: sum of squared decision variables.
        return np.sum(solution ** 2)


problem = Squared(bounds=FloatVar(lb=(-10., )*20, ub=(10., )*20), minmax="min", name="Squared", data="Amazing")

model = BBO.OriginalBBO(epoch=10, pop_size=50)
g_best = model.solve(problem)

# Best agent found
print(g_best.solution)
print(g_best.target.fitness)
print(g_best.target.objectives)
print(g_best)

# Optimizer information
print(model.get_parameters())
print(model.get_name())
print(model.get_attributes()["g_best"])

# Problem information
print(model.problem.get_name())
print(model.problem.n_dims)
print(model.problem.bounds)
print(model.problem.lb)
print(model.problem.ub)
```
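Why must additional parameters be set before super() is called? A common reason, and our assumption about the Problem base class here, is that the base constructor calls obj_func() once (for example, to validate its output). The self-contained sketch below mimics that with a hypothetical BaseProblem class; it is not Mealpy's code, only an illustration of why the order matters.

```python
import numpy as np


class BaseProblem:
    """Hypothetical stand-in for a base class whose constructor probes obj_func once."""

    def __init__(self, n_dims):
        self.n_dims = n_dims
        # Evaluate the objective on a probe point; subclass attributes
        # used inside obj_func must already exist at this moment.
        self.n_objs = np.size(self.obj_func(np.zeros(n_dims)))

    def obj_func(self, solution):
        raise NotImplementedError


class GoodSquared(BaseProblem):
    def __init__(self, n_dims, data=None):
        self.data = data            # set extra attributes first...
        super().__init__(n_dims)    # ...then let the base class probe obj_func

    def obj_func(self, solution):
        return np.sum(solution ** 2) + self.data["offset"]


class BadSquared(GoodSquared):
    def __init__(self, n_dims, data=None):
        BaseProblem.__init__(self, n_dims)  # probe runs before self.data exists
        self.data = data


ok = GoodSquared(3, data={"offset": 1.0})
print(ok.n_objs)  # 1
try:
    BadSquared(3, data={"offset": 1.0})
except AttributeError as err:
    print("fails:", err)
```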

Tuner class (GridSearchCV/ParameterSearch, Hyper-parameter tuning)

We provide a dedicated class, Tuner, to help you tune your algorithm's hyper-parameters.

```python
from opfunu.cec_based.cec2017 import F52017
from mealpy import FloatVar, BBO, Tuner

# CEC 2017 F5 benchmark in 30 dimensions.
f1 = F52017(30, f_bias=0)

p1 = {
    "bounds": FloatVar(lb=f1.lb, ub=f1.ub),
    "obj_func": f1.evaluate,
    "minmax": "min",
    "name": "F5",
    "log_to": "console",
}

# Candidate values for each hyper-parameter of OriginalBBO.
paras_bbo_grid = {
    "epoch": [10, 20, 30, 40],
    "pop_size": [50, 100, 150],
    "n_elites": [2, 3, 4, 5],
    "p_m": [0.01, 0.02, 0.05]
}

# Stopping criteria for each run.
term = {
    "max_epoch": 200,
    "max_time": 20,
    "max_fe": 10000
}

if __name__ == "__main__":
    model = BBO.OriginalBBO()
    tuner = Tuner(model, paras_bbo_grid)
    tuner.execute(problem=p1, termination=term, n_trials=5, n_jobs=4, mode="thread", n_workers=4, verbose=True)

    print(tuner.best_row)
    print(tuner.best_score)
    print(tuner.best_params)
    print(type(tuner.best_params))
    print(tuner.best_algorithm)

    # Save the tuning results and figures.
    tuner.export_results(save_path="history", file_name="tuning_best_fit.csv")
    tuner.export_figures()

    # Solve the problem again with the best hyper-parameters found.
    g_best = tuner.resolve(mode="thread", n_workers=4, termination=term)
    print(g_best.solution, g_best.target.fitness)
    print(tuner.algorithm.problem.get_name())
    print(tuner.best_algorithm.get_name())
```
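Assuming Tuner performs an exhaustive grid search over paras_bbo_grid (as the GridSearchCV-style title suggests), the number of runs grows multiplicatively with each list. The standard-library sketch below expands the same grid with itertools.product; the helper expand_grid is hypothetical and only illustrates the cost.

```python
from itertools import product

# Same grid as paras_bbo_grid above.
paras_bbo_grid = {
    "epoch": [10, 20, 30, 40],
    "pop_size": [50, 100, 150],
    "n_elites": [2, 3, 4, 5],
    "p_m": [0.01, 0.02, 0.05],
}


def expand_grid(grid):
    """Yield one dict per combination of values (Cartesian product)."""
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


combos = list(expand_grid(paras_bbo_grid))
n_trials = 5
print(len(combos))             # 144 = 4 * 3 * 4 * 3
print(len(combos) * n_trials)  # 720 optimizer runs
print(combos[0])               # {'epoch': 10, 'pop_size': 50, 'n_elites': 2, 'p_m': 0.01}
```

With n_trials=5, each extra value in any list adds a whole slice of runs, which is why a per-run termination such as max_time matters for keeping tuning time bounded.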

Multitask class (Multitask solver)

We also provide a dedicated class, Multitask, to help you run several scenarios. For example:

- Run 1 algorithm with 1 problem, and multiple trials

- Run 1 algorithm with multiple problems, and multiple trials

- Run multiple algorithms with 1 problem, and multiple trials

- Run multiple algorithms with multiple problems, and multiple trials

```python
from opfunu.cec_based.cec2017 import F52017, F102017, F292017
from mealpy import FloatVar
from mealpy import BBO, DE
from mealpy import Multitask

# Three 30-dimensional CEC 2017 benchmark functions.
f1 = F52017(30, f_bias=0)
f2 = F102017(30, f_bias=0)
f3 = F292017(30, f_bias=0)

p1 = {
    "bounds": FloatVar(lb=f1.lb, ub=f1.ub),
    "obj_func": f1.evaluate,
    "minmax": "min",
    "name": "F5",
    "log_to": "console",
}

p2 = {
    "bounds": FloatVar(lb=f2.lb, ub=f2.ub),
    "obj_func": f2.evaluate,
    "minmax": "min",
    "name": "F10",
    "log_to": "console",
}

p3 = {
    "bounds": FloatVar(lb=f3.lb, ub=f3.ub),
    "obj_func": f3.evaluate,
    "minmax": "min",
    "name": "F29",
    "log_to": "console",
}

model1 = BBO.DevBBO(epoch=10000, pop_size=50)
model2 = BBO.OriginalBBO(epoch=10000, pop_size=50)
model3 = DE.OriginalDE(epoch=10000, pop_size=50)
model4 = DE.SAP_DE(epoch=10000, pop_size=50)

# Stop each run after 3000 function evaluations.
term = {
    "max_fe": 3000
}

if __name__ == "__main__":
    multitask = Multitask(algorithms=(model1, model2, model3, model4), problems=(p1, p2, p3), terminations=(term, ), modes=("thread", ), n_workers=4)
    multitask.execute(n_trials=5, n_jobs=None, save_path="history", save_as="csv", save_convergence=True, verbose=False)
```
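The scenario above runs every algorithm on every problem for every trial. The standard-library sketch below enumerates those combinations using plain string labels as hypothetical stand-ins for the model and problem objects, and gives a rough estimate of how many epochs the max_fe budget allows, assuming about pop_size evaluations per epoch (some algorithms use more).

```python
from itertools import product

# Hypothetical labels standing in for model1..model4 and p1..p3 above.
algorithms = ["DevBBO", "OriginalBBO", "OriginalDE", "SAP_DE"]
problems = ["F5", "F10", "F29"]
n_trials = 5

# One entry per (algorithm, problem, trial) run.
runs = list(product(algorithms, problems, range(1, n_trials + 1)))
print(len(runs))   # 60 = 4 algorithms * 3 problems * 5 trials
print(runs[0])     # ('DevBBO', 'F5', 1)

# Rough estimate only: assumes ~pop_size function evaluations per epoch.
max_fe, pop_size = 3000, 50
approx_epochs = max_fe // pop_size
print(approx_epochs)  # 60, far below the epoch=10000 given to each model
```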

For more usage examples, please look at the examples folder.

More advanced examples can also be found in the Mealpy-examples repository.

Get Visualized Figures

All Optimizers

```python
import numpy as np
from opfunu.cec_based.cec2017 import F292017
from mealpy import BBO, PSO, GA, ALO, AO, ARO, AVOA, BA, BBOA, BMO, EOA, IWO
from mealpy import GJO, FOX, FOA, FFO, FFA, FA, ESOA, EHO, DO, DMOA, CSO, CSA, CoatiOA, COA, BSA
from mealpy import HCO, ICA, LCO, WarSO, TOA, TLO, SSDO, SPBO, SARO, QSA, ArchOA, ASO, CDO, EFO, EO, EVO, FLA
from mealpy import HGSO, MVO, NRO, RIME, SA, WDO, TWO, ABC, ACOR, AGTO, BeesA, BES, BFO, ZOA, WOA, WaOA, TSO
from mealpy import PFA, OOA, NGO, NMRA, MSA, MRFO, MPA, MGO, MFO, JA, HHO, HGS, HBA, GWO, GTO, GOA
from mealpy import SBO, SMA, SOA, SOS, TPO, TSA, VCS, WHO, AOA, CEM, CGO, CircleSA, GBO, HC, INFO, PSS, RUN, SCA
from mealpy import SHIO, TS, HS, AEO, GCO, WCA, CRO, DE, EP, ES, FPA, MA, SHADE, BRO, BSO, CA, CHIO, FBIO, GSKA, HBO
from mealpy import TDO, STO, SSpiderO, SSpiderA, SSO, SSA, SRSR, SLO, SHO, SFO, ServalOA, SeaHO, SCSO, POA
from mealpy import (StringVar, FloatVar, BoolVar, PermutationVar, CategoricalVar, IntegerVar, BinaryVar,
                    TransferBinaryVar, TransferBoolVar)
from mealpy import Tuner, Multitask, Problem, Optimizer, Termination, ParameterGrid
from mealpy import get_all_optimizers, get_optimizer_by_name

if __name__ == "__main__":
    # Each line shows one optimizer's constructor with typical parameter values;
    # `model` is simply reassigned, so only the last one is kept.
    model = BBO.OriginalBBO(epoch=10, pop_size=30, p_m=0.01, n_elites=2)
    model = PSO.OriginalPSO(epoch=100, pop_size=50, c1=2.05, c2=2.05, w=0.4)
    model = PSO.LDW_PSO(epoch=100, pop_size=50, c1=2.05, c2=2.05, w_min=0.4, w_max=0.9)
    model = PSO.AIW_PSO(epoch=100, pop_size=50, c1=2.05, c2=2.05, alpha=0.4)
    model = PSO.P_PSO(epoch=100, pop_size=50)
    model = PSO.HPSO_TVAC(epoch=100, pop_size=50, ci=0.5, cf=0.1)
    model = PSO.C_PSO(epoch=100, pop_size=50, c1=2.05, c2=2.05, w_min=0.4, w_max=0.9)
    model = PSO.CL_PSO(epoch=100, pop_size=50, c_local=1.2, w_min=0.4, w_max=0.9, max_flag=7)
    model = GA.BaseGA(epoch=100, pop_size=50, pc=0.9, pm=0.05, selection="tournament", k_way=0.4, crossover="multi_points", mutation="swap")
    model = GA.SingleGA(epoch=100, pop_size=50, pc=0.9, pm=0.8, selection="tournament", k_way=0.4, crossover="multi_points", mutation="swap")
    model = GA.MultiGA(epoch=100, pop_size=50, pc=0.9, pm=0.8, selection="tournament", k_way=0.4, crossover="multi_points", mutation="swap")
    model = GA.EliteSingleGA(epoch=100, pop_size=50, pc=0.95, pm=0.8, selection="roulette", crossover="uniform", mutation="swap", k_way=0.2, elite_best=0.1,
                             elite_worst=0.3, strategy=0)
    model = GA.EliteMultiGA(epoch=100, pop_size=50, pc=0.95, pm=0.8, selection="roulette", crossover="uniform", mutation="swap", k_way=0.2, elite_best=0.1,
                            elite_worst=0.3, strategy=0)
    model = ABC.OriginalABC(epoch=1000, pop_size=50, n_limits=50)
    model = ACOR.OriginalACOR(epoch=1000, pop_size=50, sample_count=25, intent_factor=0.5, zeta=1.0)
    model = AGTO.OriginalAGTO(epoch=1000, pop_size=50, p1=0.03, p2=0.8, beta=3.0)
    model = AGTO.MGTO(epoch=1000, pop_size=50, pp=0.03)
    model = ALO.OriginalALO(epoch=100, pop_size=50)
    model = ALO.DevALO(epoch=100, pop_size=50)
    model = AO.OriginalAO(epoch=100, pop_size=50)
    model = ARO.OriginalARO(epoch=100, pop_size=50)
    model = ARO.LARO(epoch=100, pop_size=50)
    model = ARO.IARO(epoch=100, pop_size=50)
    model = AVOA.OriginalAVOA(epoch=100, pop_size=50, p1=0.6, p2=0.4, p3=0.6, alpha=0.8, gama=2.5)
    model = BA.OriginalBA(epoch=100, pop_size=50, loudness=0.8, pulse_rate=0.95, pf_min=0.1, pf_max=10.0)
    model = BA.AdaptiveBA(epoch=100, pop_size=50, loudness_min=1.0, loudness_max=2.0, pr_min=-2.5, pr_max=0.85, pf_min=0.1, pf_max=10.)
    model = BA.DevBA(epoch=100, pop_size=50, pulse_rate=0.95, pf_min=0., pf_max=10.)
    model = BBOA.OriginalBBOA(epoch=100, pop_size=50)
    model = BMO.OriginalBMO(epoch=100, pop_size=50, pl=4)
    model = EOA.OriginalEOA(epoch=100, pop_size=50, p_c=0.9, p_m=0.01, n_best=2, alpha=0.98, beta=0.9, gama=0.9)
    model = IWO.OriginalIWO(epoch=100, pop_size=50, seed_min=3, seed_max=9, exponent=3, sigma_start=0.6, sigma_end=0.01)
    model = SBO.DevSBO(epoch=100, pop_size=50, alpha=0.9, p_m=0.05, psw=0.02)
    model = SBO.OriginalSBO(epoch=100, pop_size=50, alpha=0.9, p_m=0.05, psw=0.02)
    model = SMA.OriginalSMA(epoch=100, pop_size=50, p_t=0.03)
    model = SMA.DevSMA(epoch=100, pop_size=50, p_t=0.03)
    model = SOA.OriginalSOA(epoch=100, pop_size=50, fc=2)
    model = SOA.DevSOA(epoch=100, pop_size=50, fc=2)
    model = SOS.OriginalSOS(epoch=100, pop_size=50)
    model = TPO.DevTPO(epoch=100, pop_size=50, alpha=0.3, beta=50., theta=0.9)
    model = TSA.OriginalTSA(epoch=100, pop_size=50)
    model = VCS.OriginalVCS(epoch=100, pop_size=50, lamda=0.5, sigma=0.3)
    model = VCS.DevVCS(epoch=100, pop_size=50, lamda=0.5, sigma=0.3)
    model = WHO.OriginalWHO(epoch=100, pop_size=50, n_explore_step=3, n_exploit_step=3, eta=0.15, p_hi=0.9, local_alpha=0.9, local_beta=0.3, global_alpha=0.2,
                            global_beta=0.8, delta_w=2.0, delta_c=2.0)
    model = AOA.OriginalAOA(epoch=100, pop_size=50, alpha=5, miu=0.5, moa_min=0.2, moa_max=0.9)
    model = CEM.OriginalCEM(epoch=100, pop_size=50, n_best=20, alpha=0.7)
    model = CGO.OriginalCGO(epoch=100, pop_size=50)
    model = CircleSA.OriginalCircleSA(epoch=100, pop_size=50, c_factor=0.8)
    model = GBO.OriginalGBO(epoch=100, pop_size=50, pr=0.5, beta_min=0.2, beta_max=1.2)
    model = HC.OriginalHC(epoch=100, pop_size=50, neighbour_size=50)
    model = HC.SwarmHC(epoch=100, pop_size=50, neighbour_size=10)
    model = INFO.OriginalINFO(epoch=100, pop_size=50)
    model = PSS.OriginalPSS(epoch=100, pop_size=50, acceptance_rate=0.8, sampling_method="LHS")
    model = RUN.OriginalRUN(epoch=100, pop_size=50)
    model = SCA.OriginalSCA(epoch=100, pop_size=50)
    model = SCA.DevSCA(epoch=100, pop_size=50)
    model = SCA.QleSCA(epoch=100, pop_size=50, alpha=0.1, gama=0.9)
    model = SHIO.OriginalSHIO(epoch=100, pop_size=50)
    model = TS.OriginalTS(epoch=100, pop_size=50, tabu_size=5, neighbour_size=20, perturbation_scale=0.05)
    model = HS.OriginalHS(epoch=100, pop_size=50, c_r=0.95, pa_r=0.05)
    model = HS.DevHS(epoch=100, pop_size=50, c_r=0.95, pa_r=0.05)
    model = AEO.OriginalAEO(epoch=100, pop_size=50)
    model = AEO.EnhancedAEO(epoch=100, pop_size=50)
    model = AEO.ModifiedAEO(epoch=100, pop_size=50)
    model = AEO.ImprovedAEO(epoch=100, pop_size=50)
    model = AEO.AugmentedAEO(epoch=100, pop_size=50)
    model = GCO.OriginalGCO(epoch=100, pop_size=50, cr=0.7, wf=1.25)
    model = GCO.DevGCO(epoch=100, pop_size=50, cr=0.7, wf=1.25)
    model = WCA.OriginalWCA(epoch=100, pop_size=50, nsr=4, wc=2.0, dmax=1e-6)
    model = CRO.OriginalCRO(epoch=100, pop_size=50, po=0.4, Fb=0.9, Fa=0.1, Fd=0.1, Pd=0.5, GCR=0.1, gamma_min=0.02, gamma_max=0.2, n_trials=5)
    model = CRO.OCRO(epoch=100, pop_size=50, po=0.4, Fb=0.9, Fa=0.1, Fd=0.1, Pd=0.5, GCR=0.1, gamma_min=0.02, gamma_max=0.2, n_trials=5, restart_count=50)
    model = DE.OriginalDE(epoch=100, pop_size=50, wf=0.7, cr=0.9, strategy=0)
    model = DE.JADE(epoch=100, pop_size=50, miu_f=0.5, miu_cr=0.5, pt=0.1, ap=0.1)
    model = DE.SADE(epoch=100, pop_size=50)
    model = DE.SAP_DE(epoch=100, pop_size=50, branch="ABS")
    model = EP.OriginalEP(epoch=100, pop_size=50, bout_size=0.05)
    model = EP.LevyEP(epoch=100, pop_size=50, bout_size=0.05)
    model = ES.OriginalES(epoch=100, pop_size=50, lamda=0.75)
    model = ES.LevyES(epoch=100, pop_size=50, lamda=0.75)
    model = ES.CMA_ES(epoch=100, pop_size=50)
    model = ES.Simple_CMA_ES(epoch=100, pop_size=50)
    model = FPA.OriginalFPA(epoch=100, pop_size=50, p_s=0.8, levy_multiplier=0.2)
    model = MA.OriginalMA(epoch=100, pop_size=50, pc=0.85, pm=0.15, p_local=0.5, max_local_gens=10, bits_per_param=4)
    model = SHADE.OriginalSHADE(epoch=100, pop_size=50, miu_f=0.5, miu_cr=0.5)
    model = SHADE.L_SHADE(epoch=100, pop_size=50, miu_f=0.5, miu_cr=0.5)
    model = BRO.OriginalBRO(epoch=100, pop_size=50, threshold=3)
    model = BRO.DevBRO(epoch=100, pop_size=50, threshold=3)
    model = BSO.OriginalBSO(epoch=100, pop_size=50, m_clusters=5, p1=0.2, p2=0.8, p3=0.4, p4=0.5, slope=20)
    model = BSO.ImprovedBSO(epoch=100, pop_size=50, m_clusters=5, p1=0.25, p2=0.5, p3=0.75, p4=0.6)
    model = CA.OriginalCA(epoch=100, pop_size=50, accepted_rate=0.15)
    model = CHIO.OriginalCHIO(epoch=100, pop_size=50, brr=0.15, max_age=10)
    model = CHIO.DevCHIO(epoch=100, pop_size=50, brr=0.15, max_age=10)
    model = FBIO.OriginalFBIO(epoch=100, pop_size=50)
    model = FBIO.DevFBIO(epoch=100, pop_size=50)
    model = GSKA.OriginalGSKA(epoch=100, pop_size=50, pb=0.1, kf=0.5, kr=0.9, kg=5)
    model = GSKA.DevGSKA(epoch=100, pop_size=50, pb=0.1, kr=0.9)
    model = HBO.OriginalHBO(epoch=100, pop_size=50, degree=3)
    model = HCO.OriginalHCO(epoch=100, pop_size=50, wfp=0.65, wfv=0.05, c1=1.4, c2=1.4)
    model = ICA.OriginalICA(epoch=100, pop_size=50, empire_count=5, assimilation_coeff=1.5, revolution_prob=0.05, revolution_rate=0.1, revolution_step_size=0.1,
                            zeta=0.1)
    model = LCO.OriginalLCO(epoch=100, pop_size=50, r1=2.35)
    model = LCO.ImprovedLCO(epoch=100, pop_size=50)
    model = LCO.DevLCO(epoch=100, pop_size=50, r1=2.35)
    model = WarSO.OriginalWarSO(epoch=100, pop_size=50, rr=0.1)
    model = TOA.OriginalTOA(epoch=100, pop_size=50)
    model = TLO.OriginalTLO(epoch=100, pop_size=50)
    model = TLO.ImprovedTLO(epoch=100, pop_size=50, n_teachers=5)
    model = TLO.DevTLO(epoch=100, pop_size=50)
    model = SSDO.OriginalSSDO(epoch=100, pop_size=50)
    model = SPBO.OriginalSPBO(epoch=100, pop_size=50)
    model = SPBO.DevSPBO(epoch=100, pop_size=50)
    model = SARO.OriginalSARO(epoch=100, pop_size=50, se=0.5, mu=50)
    model = SARO.DevSARO(epoch=100, pop_size=50, se=0.5, mu=50)
    model = QSA.OriginalQSA(epoch=100, pop_size=50)
    model = QSA.DevQSA(epoch=100, pop_size=50)
    model = QSA.OppoQSA(epoch=100, pop_size=50)
    model = QSA.LevyQSA(epoch=100, pop_size=50)
    model = QSA.ImprovedQSA(epoch=100, pop_size=50)
    model = ArchOA.OriginalArchOA(epoch=100, pop_size=50, c1=2, c2=5, c3=2, c4=0.5, acc_max=0.9, acc_min=0.1)
    model = ASO.OriginalASO(epoch=100, pop_size=50, alpha=50, beta=0.2)
    model = CDO.OriginalCDO(epoch=100, pop_size=50)
    model = EFO.OriginalEFO(epoch=100, pop_size=50, r_rate=0.3, ps_rate=0.85, p_field=0.1, n_field=0.45)
    model = EFO.DevEFO(epoch=100, pop_size=50, r_rate=0.3, ps_rate=0.85, p_field=0.1, n_field=0.45)
    model = EO.OriginalEO(epoch=100, pop_size=50)
    model = EO.AdaptiveEO(epoch=100, pop_size=50)
    model = EO.ModifiedEO(epoch=100, pop_size=50)
    model = EVO.OriginalEVO(epoch=100, pop_size=50)
    model = FLA.OriginalFLA(epoch=100, pop_size=50, C1=0.5, C2=2.0, C3=0.1, C4=0.2, C5=2.0, DD=0.01)
    model = HGSO.OriginalHGSO(epoch=100, pop_size=50, n_clusters=3)
    model = MVO.OriginalMVO(epoch=100, pop_size=50, wep_min=0.2, wep_max=1.0)
    model = MVO.DevMVO(epoch=100, pop_size=50, wep_min=0.2, wep_max=1.0)
    model = NRO.OriginalNRO(epoch=100, pop_size=50)
    model = RIME.OriginalRIME(epoch=100, pop_size=50, sr=5.0)
    model = SA.OriginalSA(epoch=100, pop_size=50, temp_init=100, step_size=0.1)
    model = SA.GaussianSA(epoch=100, pop_size=50, temp_init=100, cooling_rate=0.99, scale=0.1)
    model = SA.SwarmSA(epoch=100, pop_size=50, max_sub_iter=5, t0=1000, t1=1, move_count=5, mutation_rate=0.1, mutation_step_size=0.1,
                       mutation_step_size_damp=0.99)
    model = WDO.OriginalWDO(epoch=100, pop_size=50, RT=3, g_c=0.2, alp=0.4, c_e=0.4, max_v=0.3)
    model = TWO.OriginalTWO(epoch=100, pop_size=50)
    model = TWO.EnhancedTWO(epoch=100, pop_size=50)
    model = TWO.OppoTWO(epoch=100, pop_size=50)
    model = TWO.LevyTWO(epoch=100, pop_size=50)
    model = ABC.OriginalABC(epoch=100, pop_size=50, n_limits=50)
    model = ACOR.OriginalACOR(epoch=100, pop_size=50, sample_count=25, intent_factor=0.5, zeta=1.0)
    model = AGTO.OriginalAGTO(epoch=100, pop_size=50, p1=0.03, p2=0.8, beta=3.0)
    model = AGTO.MGTO(epoch=100, pop_size=50, pp=0.03)
    model = BeesA.OriginalBeesA(epoch=100, pop_size=50, selected_site_ratio=0.5, elite_site_ratio=0.4, selected_site_bee_ratio=0.1, elite_site_bee_ratio=2.0,
                                dance_radius=0.1, dance_reduction=0.99)
    model = BeesA.CleverBookBeesA(epoch=100, pop_size=50, n_elites=16, n_others=4, patch_size=5.0, patch_reduction=0.985, n_sites=3, n_elite_sites=1)
    model = BeesA.ProbBeesA(epoch=100, pop_size=50, recruited_bee_ratio=0.1, dance_radius=0.1, dance_reduction=0.99)
    model = BES.OriginalBES(epoch=100, pop_size=50, a_factor=10, R_factor=1.5, alpha=2.0, c1=2.0, c2=2.0)
    model = BFO.OriginalBFO(epoch=100, pop_size=50, Ci=0.01, Ped=0.25, Nc=5, Ns=4, d_attract=0.1, w_attract=0.2, h_repels=0.1, w_repels=10)
    model = BFO.ABFO(epoch=100, pop_size=50, C_s=0.1, C_e=0.001, Ped=0.01, Ns=4, N_adapt=2, N_split=40)
    model = ZOA.OriginalZOA(epoch=100, pop_size=50)
    model = WOA.OriginalWOA(epoch=100, pop_size=50)
    model = WOA.HI_WOA(epoch=100, pop_size=50, feedback_max=10)
    model = WaOA.OriginalWaOA(epoch=100, pop_size=50)
    model = TSO.OriginalTSO(epoch=100, pop_size=50)
    model = TDO.OriginalTDO(epoch=100, pop_size=50)
    model = STO.OriginalSTO(epoch=100, pop_size=50)
    model = SSpiderO.OriginalSSpiderO(epoch=100, pop_size=50, fp_min=0.65, fp_max=0.9)
    model = SSpiderA.OriginalSSpiderA(epoch=100, pop_size=50, r_a=1.0, p_c=0.7, p_m=0.1)
    model = SSO.OriginalSSO(epoch=100, pop_size=50)
    model = SSA.OriginalSSA(epoch=100, pop_size=50, ST=0.8, PD=0.2, SD=0.1)
    model = SSA.DevSSA(epoch=100, pop_size=50, ST=0.8, PD=0.2, SD=0.1)
    model = SRSR.OriginalSRSR(epoch=100, pop_size=50)
    model = SLO.OriginalSLO(epoch=100, pop_size=50)
    model = SLO.ModifiedSLO(epoch=100, pop_size=50)
    model = SLO.ImprovedSLO(epoch=100, pop_size=50, c1=1.2, c2=1.5)
    model = SHO.OriginalSHO(epoch=100, pop_size=50, h_factor=5.0, n_trials=10)
    model = SFO.OriginalSFO(epoch=100, pop_size=50, pp=0.1, AP=4.0, epsilon=0.0001)
    model = SFO.ImprovedSFO(epoch=100, pop_size=50, pp=0.1)
    model = ServalOA.OriginalServalOA(epoch=100, pop_size=50)
    model = SeaHO.OriginalSeaHO(epoch=100, pop_size=50)
    model = SCSO.OriginalSCSO(epoch=100, pop_size=50)
    model = POA.OriginalPOA(epoch=100, pop_size=50)
    model = PFA.OriginalPFA(epoch=100, pop_size=50)
    model = OOA.OriginalOOA(epoch=100, pop_size=50)
    model = NGO.OriginalNGO(epoch=100, pop_size=50)
    model = NMRA.OriginalNMRA(epoch=100, pop_size=50, pb=0.75)
    model = NMRA.ImprovedNMRA(epoch=100, pop_size=50, pb=0.75, pm=0.01)
    model = MSA.OriginalMSA(epoch=100, pop_size=50, n_best=5, partition=0.5, max_step_size=1.0)
    model = MRFO.OriginalMRFO(epoch=100, pop_size=50, somersault_range=2.0)
    model = MRFO.WMQIMRFO(epoch=100, pop_size=50, somersault_range=2.0, pm=0.5)
    model = MPA.OriginalMPA(epoch=100, pop_size=50)
    model = MGO.OriginalMGO(epoch=100, pop_size=50)
    model = MFO.OriginalMFO(epoch=100, pop_size=50)
    model = JA.OriginalJA(epoch=100, pop_size=50)
    model = JA.LevyJA(epoch=100, pop_size=50)
    model = JA.DevJA(epoch=100, pop_size=50)
    model = HHO.OriginalHHO(epoch=100, pop_size=50)
    model = HGS.OriginalHGS(epoch=100, pop_size=50, PUP=0.08, LH=10000)
    model = HBA.OriginalHBA(epoch=100, pop_size=50)
    model = GWO.OriginalGWO(epoch=100, pop_size=50)
    model = GWO.GWO_WOA(epoch=100, pop_size=50)
    model = GWO.RW_GWO(epoch=100, pop_size=50)
    model = GTO.OriginalGTO(epoch=100, pop_size=50, A=0.4, H=2.0)
    model = GTO.Matlab101GTO(epoch=100, pop_size=50)
    model = GTO.Matlab102GTO(epoch=100, pop_size=50)
    model = GOA.OriginalGOA(epoch=100, pop_size=50, c_min=0.00004, c_max=1.0)
    model = GJO.OriginalGJO(epoch=100, pop_size=50)
    model = FOX.OriginalFOX(epoch=100, pop_size=50, c1=0.18, c2=0.82)
    model = FOA.OriginalFOA(epoch=100, pop_size=50)
    model = FOA.WhaleFOA(epoch=100, pop_size=50)
    model = FOA.DevFOA(epoch=100, pop_size=50)
    model = FFO.OriginalFFO(epoch=100, pop_size=50)
    model = FFA.OriginalFFA(epoch=100, pop_size=50, gamma=0.001, beta_base=2, alpha=0.2, alpha_damp=0.99, delta=0.05, exponent=2)
    model = FA.OriginalFA(epoch=100, pop_size=50, max_sparks=50, p_a=0.04, p_b=0.8, max_ea=40, m_sparks=50)
    model = ESOA.OriginalESOA(epoch=100, pop_size=50)
    model = EHO.OriginalEHO(epoch=100, pop_size=50, alpha=0.5, beta=0.5, n_clans=5)
    model = DO.OriginalDO(epoch=100, pop_size=50)
    model = DMOA.OriginalDMOA(epoch=100, pop_size=50, n_baby_sitter=3, peep=2)
    model = DMOA.DevDMOA(epoch=100, pop_size=50, peep=2)
    model = CSO.OriginalCSO(epoch=100, pop_size=50, mixture_ratio=0.15, smp=5, spc=False, cdc=0.8, srd=0.15, c1=0.4, w_min=0.4, w_max=0.9)
    model = CSA.OriginalCSA(epoch=100, pop_size=50, p_a=0.3)
    model = CoatiOA.OriginalCoatiOA(epoch=100, pop_size=50)
    model = COA.OriginalCOA(epoch=100, pop_size=50, n_coyotes=5)
    model = BSA.OriginalBSA(epoch=100, pop_size=50, ff=10, pff=0.8, c1=1.5, c2=1.5, a1=1.0, a2=1.0, fc=0.5)
```

Mealpy Application

Mealpy + Neural Network (Replace the Gradient Descent Optimizer)

- Time-series Problem:
    - Traditional MLP code: Link
    - Hybrid code (Mealpy + MLP): Link
- Classification Problem:
    - Traditional MLP code: Link
    - Hybrid code (Mealpy + MLP): Link
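The linked code is not reproduced here. As a rough sketch of the idea behind replacing gradient descent, assuming a one-hidden-layer MLP: the network's weights are flattened into one real-valued vector, and the training loss becomes a black-box objective that any Mealpy optimizer could minimize over bounded variables. To keep the block runnable with NumPy alone, a plain (1+1) random search stands in for the Mealpy optimizer; the data, network size, bounds, and step size are illustrative choices, and decode and mse_loss are hypothetical names.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic toy regression data, for illustration only.
X = rng.uniform(-1, 1, size=(64, 2))
y = np.sin(X[:, 0]) + 0.5 * X[:, 1]

N_IN, N_HIDDEN = 2, 5
# Flat layout: W1 (2x5), b1 (5), W2 (5), b2 (1) -> 21 values.
N_WEIGHTS = N_IN * N_HIDDEN + N_HIDDEN + N_HIDDEN + 1


def decode(vector):
    """Split a flat solution vector into the MLP's weight arrays."""
    w1_end = N_IN * N_HIDDEN
    b1_end = w1_end + N_HIDDEN
    w2_end = b1_end + N_HIDDEN
    W1 = vector[:w1_end].reshape(N_IN, N_HIDDEN)
    b1 = vector[w1_end:b1_end]
    W2 = vector[b1_end:w2_end]
    b2 = vector[w2_end]
    return W1, b1, W2, b2


def mse_loss(vector):
    """Objective a metaheuristic would minimize: training MSE of the MLP."""
    W1, b1, W2, b2 = decode(vector)
    hidden = np.tanh(X @ W1 + b1)
    pred = hidden @ W2 + b2
    return float(np.mean((pred - y) ** 2))


# Stand-in optimizer: (1+1) random search within bounds [-1, 1].
# A Mealpy optimizer would instead receive mse_loss as obj_func with
# FloatVar bounds of length N_WEIGHTS.
best = rng.uniform(-1, 1, N_WEIGHTS)
best_loss = initial_loss = mse_loss(best)
for _ in range(2000):
    candidate = np.clip(best + rng.normal(0, 0.1, N_WEIGHTS), -1, 1)
    loss = mse_loss(candidate)
    if loss < best_loss:
        best, best_loss = candidate, loss

print(N_WEIGHTS, initial_loss, best_loss)
```

Because the optimizer only sees mse_loss(vector), no gradients are needed; the trade-off is that the search space grows with every weight, so this approach suits small networks.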

Mealpy + Neural Network (Optimize Neural Network Hyper-parameter)

Code: Link

Other Applications

Get Visualized Figures

Tutorial Videos

All tutorial videos: Link

All code examples: Link

All visualization examples: Link

Documents

Official Channels (questions, problems)

- Meta-heuristic Categories: (Based on this article: link)
    - Evolutionary-based: Ideas from Darwin's law of natural selection and evolutionary computing
    - Swarm-based: Ideas from the movement and interaction of birds, social organization, ...
    - Physics-based: Ideas from physical laws such as Newton's law of universal gravitation, black holes, and the multiverse
    - Human-based: Ideas from human interaction such as queuing search, teaching-learning, ...
    - Biology-based: Ideas from biological creatures (or microorganisms), ...
    - System-based: Ideas from ecosystems, immune systems, network systems, ...
    - Math-based: Ideas from mathematical forms or laws such as sine-cosine
    - Music-based: Ideas from musical instruments

- Difficulty: Difficulty Level (Personal Opinion), an observation from the author that depends on the number of parameters, the number of equations, the originality of the ideas, the time spent coding, and the source lines of code (SLOC).
    - Easy: A few parameters, few equations, very short SLOC
    - Medium: More equations than the Easy level, SLOC longer than the Easy level
    - Hard: Lots of equations, SLOC longer than the Medium level, the paper is hard to read
    - Hard* - Very hard: Lots of equations, very long SLOC, the paper is very hard to read

For newcomers, we recommend reading the papers of algorithms rated "easy" or "medium" difficulty.

| Group | Name | Module | Class | Year | Paras | Difficulty |
|---|---|---|---|---|---|---|
| Evolutionary | Evolutionary Programming | EP | OriginalEP | 1964 | 3 | easy |
| Evolutionary | * | * | LevyEP | * | 3 | easy |
| Evolutionary | Evolution Strategies | ES | OriginalES | 1971 | 3 | easy |
| Evolutionary | * | * | LevyES | * | 3 | easy |
| Evolutionary | * | * | CMA_ES | 2003 | 2 | hard |
| Evolutionary | * | * | Simple_CMA_ES | 2023 | 2 | medium |
| Evolutionary | Memetic Algorithm | MA | OriginalMA | 1989 | 7 | easy |
| Evolutionary | Genetic Algorithm | GA | BaseGA | 1992 | 4 | easy |
| Evolutionary | * | * | SingleGA | * | 7 | easy |
| Evolutionary | * | * | MultiGA | * | 7 | easy |
| Evolutionary | * | * | EliteSingleGA | * | 10 | easy |
| Evolutionary | * | * | EliteMultiGA | * | 10 | easy |
| Evolutionary | Differential Evolution | DE | BaseDE | 1997 | 5 | easy |
| Evolutionary | * | * | JADE | 2009 | 6 | medium |
| Evolutionary | * | * | SADE | 2005 | 2 | medium |
| Evolutionary | * | * | SAP_DE | 2006 | 3 | medium |
| Evolutionary | Success-History Adaptation Differential Evolution | SHADE | OriginalSHADE | 2013 | 4 | medium |
| Evolutionary | * | * | L_SHADE | 2014 | 4 | medium |
| Evolutionary | Flower Pollination Algorithm | FPA | OriginalFPA | 2014 | 4 | medium |
| Evolutionary | Coral Reefs Optimization | CRO | OriginalCRO | 2014 | 11 | medium |
| Evolutionary | * | * | OCRO | 2019 | 12 | medium |
| *** | *** | *** | *** | *** | *** | *** |
| Swarm | Particle Swarm Optimization | PSO | OriginalPSO | 1995 | 6 | easy |
| Swarm | * | * | PPSO | 2019 | 2 | medium |
| Swarm | * | * | HPSO_TVAC | 2017 | 4 | medium |
| Swarm | * | * | C_PSO | 2015 | 6 | medium |
| Swarm | * | * | CL_PSO | 2006 | 6 | medium |
| Swarm | Bacterial Foraging Optimization | BFO | OriginalBFO | 2002 | 10 | hard |
| Swarm | * | * | ABFO | 2019 | 8 | medium |
| Swarm | Bees Algorithm | BeesA | OriginalBeesA | 2005 | 8 | medium |
| Swarm | * | * | ProbBeesA | 2015 | 5 | medium |
| Swarm | * | * | CleverBookBeesA | 2006 | 8 | medium |
| Swarm | Cat Swarm Optimization | CSO | OriginalCSO | 2006 | 11 | hard |
| Swarm | Artificial Bee Colony | ABC | OriginalABC | 2007 | 8 | medium |
| Swarm | Ant Colony Optimization | ACOR | OriginalACOR | 2008 | 5 | easy |
| Swarm | Cuckoo Search Algorithm | CSA | OriginalCSA | 2009 | 3 | medium |
| Swarm | Firefly Algorithm | FFA | OriginalFFA | 2009 | 8 | easy |
| Swarm | Fireworks Algorithm | FA | OriginalFA | 2010 | 7 | medium |
| Swarm | Bat Algorithm | BA | OriginalBA | 2010 | 6 | medium |
| Swarm | * | * | AdaptiveBA | 2010 | 8 | medium |
| Swarm | * | * | ModifiedBA | * | 5 | medium |
| Swarm | Fruit-fly Optimization Algorithm | FOA | OriginalFOA | 2012 | 2 | easy |
| Swarm | * | * | BaseFOA | * | 2 | easy |
| Swarm | * | * | WhaleFOA | 2020 | 2 | medium |
| Swarm | Social Spider Optimization | SSpiderO | OriginalSSpiderO | 2018 | 4 | hard* |
| Swarm | Grey Wolf Optimizer | GWO | OriginalGWO | 2014 | 2 | easy |
| Swarm | * | * | RW_GWO | 2019 | 2 | easy |
| Swarm | Social Spider Algorithm | SSpiderA | OriginalSSpiderA | 2015 | 5 | medium |
| Swarm | Ant Lion Optimizer | ALO | OriginalALO | 2015 | 2 | easy |
| Swarm | * | * | BaseALO | * | 2 | easy |
| Swarm | Moth Flame Optimization | MFO | OriginalMFO | 2015 | 2 | easy |
| Swarm | * | * | BaseMFO | * | 2 | easy |
| Swarm | Elephant Herding Optimization | EHO | OriginalEHO | 2015 | 5 | easy |
| Swarm | Jaya Algorithm | JA | OriginalJA | 2016 | 2 | easy |
| Swarm | * | * | BaseJA | * | 2 | easy |
| Swarm | * | * | LevyJA | 2021 | 2 | easy |
| Swarm | Whale Optimization Algorithm | WOA | OriginalWOA | 2016 | 2 | medium |
| Swarm | * | * | HI_WOA | 2019 | 3 | medium |
| Swarm | Dragonfly Optimization | DO | OriginalDO | 2016 | 2 | medium |
| Swarm | Bird Swarm Algorithm | BSA | OriginalBSA | 2016 | 9 | medium |
| Swarm | Spotted Hyena Optimizer | SHO | OriginalSHO | 2017 | 4 | medium |
| Swarm | Salp Swarm Optimization | SSO | OriginalSSO | 2017 | 2 | easy |
| Swarm | Swarm Robotics Search And Rescue | SRSR | OriginalSRSR | 2017 | 2 | hard* |
| Swarm | Grasshopper Optimisation Algorithm | GOA | OriginalGOA | 2017 | 4 | easy |
| Swarm | Coyote Optimization Algorithm | COA | OriginalCOA | 2018 | 3 | medium |
| Swarm | Moth Search Algorithm | MSA | OriginalMSA | 2018 | 5 | easy |
| Swarm | Sea Lion Optimization | SLO | OriginalSLO | 2019 | 2 | medium |
| Swarm | * | * | ModifiedSLO | * | 2 | medium |
| Swarm | * | * | ImprovedSLO | 2022 | 4 | medium |
| Swarm | Naked Mole-Rat Algorithm | NMRA | OriginalNMRA | 2019 | 3 | easy |
| Swarm | * | * | ImprovedNMRA | * | 4 | medium |
| Swarm | Pathfinder Algorithm | PFA | OriginalPFA | 2019 | 2 | medium |
| Swarm | Sailfish Optimizer | SFO | OriginalSFO | 2019 | 5 | easy |
| Swarm | * | * | ImprovedSFO | * | 3 | medium |
| Swarm | Harris Hawks Optimization | HHO | OriginalHHO | 2019 | 2 | medium |
| Swarm | Manta Ray Foraging Optimization | MRFO | OriginalMRFO | 2020 | 3 | medium |
| Swarm | Bald Eagle Search | BES | OriginalBES | 2020 | 7 | easy |
| Swarm | Sparrow Search Algorithm | SSA | OriginalSSA | 2020 | 5 | medium |
| Swarm | * | * | BaseSSA | * | 5 | medium |
| Swarm | Hunger Games Search | HGS | OriginalHGS | 2021 | 4 | medium |
| Swarm | Aquila Optimizer | AO | OriginalAO | 2021 | 2 | easy |
| Swarm | Hybrid Grey Wolf - Whale Optimization Algorithm | GWO | GWO_WOA | 2022 | 2 | easy |
| Swarm | Marine Predators Algorithm | MPA | OriginalMPA | 2020 | 2 | medium |
| Swarm | Honey Badger Algorithm | HBA | OriginalHBA | 2022 | 2 | easy |
| Swarm | Sand Cat Swarm Optimization | SCSO | OriginalSCSO | 2022 | 2 | easy |
| Swarm | Tuna Swarm Optimization | TSO | OriginalTSO | 2021 | 2 | medium |
| Swarm | African Vultures Optimization Algorithm | AVOA | OriginalAVOA | 2022 | 7 | medium |
| Swarm | Artificial Gorilla Troops Optimization | AGTO | OriginalAGTO | 2021 | 5 | medium |
| Swarm | * | * | MGTO | 2023 | 3 | medium |
| Swarm | Artificial Rabbits Optimization | ARO | OriginalARO | 2022 | 2 | easy |
| Swarm | * | * | LARO | 2022 | 2 | easy |
| Swarm | * | * | IARO | 2022 | 2 | easy |
| Swarm | Egret Swarm Optimization Algorithm | ESOA | OriginalESOA | 2022 | 2 | medium |
| Swarm | Fox Optimizer | FOX | OriginalFOX | 2023 | 4 | easy |
| Swarm | Golden Jackal Optimization | GJO | OriginalGJO | 2022 | 2 | easy |
| Swarm | Giant Trevally Optimization | GTO | OriginalGTO | 2022 | 4 | medium |
| Swarm | * | * | Matlab101GTO | 2022 | 2 | medium |
| Swarm | * | * | Matlab102GTO | 2023 | 2 | hard |
| Swarm | Mountain Gazelle Optimizer | MGO | OriginalMGO | 2022 | 2 | easy |
| Swarm | Sea-Horse Optimization | SeaHO | OriginalSeaHO | 2022 | 2 | medium |
| *** | *** | *** | *** | *** | *** | *** |
| Physics | Simulated Annealing | SA | OriginalSA | 1983 | 9 | medium |
| Physics | * | * | GaussianSA | * | 5 | medium |
| Physics | * | * | SwarmSA | 1987 | 9 | medium |
| Physics | Wind Driven Optimization | WDO | OriginalWDO | 2013 | 7 | easy |
| Physics | Multi-Verse Optimizer | MVO | OriginalMVO | 2016 | 4 | easy |
| Physics | * | * | BaseMVO | * | 4 | easy |
| Physics | Tug of War Optimization | TWO | OriginalTWO | 2016 | 2 | easy |
| Physics | * | * | OppoTWO | * | 2 | medium |
| Physics | * | * | LevyTWO | * | 2 | medium |
| Physics | * | * | EnhancedTWO | 2020 | 2 | medium |
| Physics | Electromagnetic Field Optimization | EFO | OriginalEFO | 2016 | 6 | easy |
| Physics | * | * | BaseEFO | * | 6 | medium |
| Physics | Nuclear Reaction Optimization | NRO | OriginalNRO | 2019 | 2 | hard* |
| Physics | Henry Gas Solubility Optimization | HGSO | OriginalHGSO | 2019 | 3 | medium |
| Physics | Atom Search Optimization | ASO | OriginalASO | 2019 | 4 | medium |
| Physics | Equilibrium Optimizer | EO | OriginalEO | 2019 | 2 | easy |
| Physics | * | * | ModifiedEO | 2020 | 2 | medium |
| Physics | * | * | AdaptiveEO | 2020 | 2 | medium |
| Physics | Archimedes Optimization Algorithm | ArchOA | OriginalArchOA | 2021 | 8 | medium |

| Physics | Chernobyl Disaster Optimization | CDO | OriginalCDO | 2023 | 2 | easy |

|---|

| Physics | Energy Valley Optimization | EVO | OriginalEVO | 2023 | 2 | medium |

|---|

| Physics | Fick's Law Algorithm | FLA | OriginalFLA | 2023 | 8 | hard |

|---|

| Physics | Physical Phenomenon of RIME-ice | RIME | OriginalRIME | 2023 | 3 | easy |

|---|

| *** | *** | *** | *** | *** | *** | *** |

|---|

| Human | Culture Algorithm | CA | OriginalCA | 1994 | 3 | easy |

|---|

| Human | Imperialist Competitive Algorithm | ICA | OriginalICA | 2007 | 8 | hard* |

|---|

| Human | Teaching Learning*based Optimization | TLO | OriginalTLO | 2011 | 2 | easy |

|---|

| Human | * | * | BaseTLO | 2012 | 2 | easy |

|---|

| Human | * | * | ITLO | 2013 | 3 | medium |

|---|

| Human | Brain Storm Optimization | BSO | OriginalBSO | 2011 | 8 | medium |

|---|

| Human | * | * | ImprovedBSO | 2017 | 7 | medium |

|---|

| Human | Queuing Search Algorithm | QSA | OriginalQSA | 2019 | 2 | hard |

|---|

| Human | * | * | BaseQSA | * | 2 | hard |

|---|

| Human | * | * | OppoQSA | * | 2 | hard |

|---|

| Human | * | * | LevyQSA | * | 2 | hard |

|---|

| Human | * | * | ImprovedQSA | 2021 | 2 | hard |

|---|

| Human | Search And Rescue Optimization | SARO | OriginalSARO | 2019 | 4 | medium |

|---|

| Human | * | * | BaseSARO | * | 4 | medium |

|---|

| Human | Life Choice*Based Optimization | LCO | OriginalLCO | 2019 | 3 | easy |

|---|

| Human | * | * | BaseLCO | * | 3 | easy |

|---|

| Human | * | * | ImprovedLCO | * | 2 | easy |

|---|

| Human | Social Ski*Driver Optimization | SSDO | OriginalSSDO | 2019 | 2 | easy |

|---|

| Human | Gaining Sharing Knowledge*based Algorithm | GSKA | OriginalGSKA | 2019 | 6 | medium |

|---|

| Human | * | * | BaseGSKA | * | 4 | medium |

|---|

| Human | Coronavirus Herd Immunity Optimization | CHIO | OriginalCHIO | 2020 | 4 | medium |

|---|

| Human | * | * | BaseCHIO | * | 4 | medium |

|---|

| Human | Forensic*Based Investigation Optimization | FBIO | OriginalFBIO | 2020 | 2 | medium |

|---|

| Human | * | * | BaseFBIO | * | 2 | medium |

|---|

| Human | Battle Royale Optimization | BRO | OriginalBRO | 2020 | 3 | medium |

|---|

| Human | * | * | BaseBRO | * | 3 | medium |

|---|

| Human | Student Psychology Based Optimization | SPBO | OriginalSPBO | 2020 | 2 | medium |

|---|

| Human | * | * | DevSPBO | * | 2 | medium |

|---|

| Human | Heap-based Optimization | HBO | OriginalHBO | 2020 | 3 | medium |

|---|

| Human | Human Conception Optimization | HCO | OriginalHCO | 2022 | 6 | medium |

|---|

| Human | Dwarf Mongoose Optimization Algorithm | DMOA | OriginalDMOA | 2022 | 4 | medium |

|---|

| Human | * | * | DevDMOA | * | 3 | medium |

|---|

| Human | War Strategy Optimization | WarSO | OriginalWarSO | 2022 | 3 | easy |

|---|

| *** | *** | *** | *** | *** | *** | *** |

|---|

| Bio | Invasive Weed Optimization | IWO | OriginalIWO | 2006 | 7 | easy |

|---|

| Bio | Biogeography*Based Optimization | BBO | OriginalBBO | 2008 | 4 | easy |

|---|

| Bio | * | * | BaseBBO | * | 4 | easy |

|---|

| Bio | Virus Colony Search | VCS | OriginalVCS | 2016 | 4 | hard* |

|---|

| Bio | * | * | BaseVCS | * | 4 | hard* |

|---|

| Bio | Satin Bowerbird Optimizer | SBO | OriginalSBO | 2017 | 5 | easy |

|---|

| Bio | * | * | BaseSBO | * | 5 | easy |

|---|

| Bio | Earthworm Optimisation Algorithm | EOA | OriginalEOA | 2018 | 8 | medium |

|---|

| Bio | Wildebeest Herd Optimization | WHO | OriginalWHO | 2019 | 12 | hard |

|---|

| Bio | Slime Mould Algorithm | SMA | OriginalSMA | 2020 | 3 | easy |

|---|

| Bio | * | * | BaseSMA | * | 3 | easy |

|---|

| Bio | Barnacles Mating Optimizer | BMO | OriginalBMO | 2018 | 3 | easy |

|---|

| Bio | Tunicate Swarm Algorithm | TSA | OriginalTSA | 2020 | 2 | easy |

|---|

| Bio | Symbiotic Organisms Search | SOS | OriginalSOS | 2014 | 2 | medium |

|---|

| Bio | Seagull Optimization Algorithm | SOA | OriginalSOA | 2019 | 3 | easy |

|---|

| Bio | * | * | DevSOA | * | 3 | easy |

|---|

| Bio | Brown-Bear Optimization Algorithm | BBOA | OriginalBBOA | 2023 | 2 | medium |

|---|

| Bio | Tree Physiology Optimization | TPO | OriginalTPO | 2017 | 5 | medium |

|---|

| *** | *** | *** | *** | *** | *** | *** |

|---|

| System | Germinal Center Optimization | GCO | OriginalGCO | 2018 | 4 | medium |

|---|

| System | * | * | BaseGCO | * | 4 | medium |

|---|

| System | Water Cycle Algorithm | WCA | OriginalWCA | 2012 | 5 | medium |

|---|

| System | Artificial Ecosystem*based Optimization | AEO | OriginalAEO | 2019 | 2 | easy |

|---|

| System | * | * | EnhancedAEO | 2020 | 2 | medium |

|---|

| System | * | * | ModifiedAEO | 2020 | 2 | medium |

|---|

| System | * | * | ImprovedAEO | 2021 | 2 | medium |

|---|

| System | * | * | AugmentedAEO | 2022 | 2 | medium |

|---|

| *** | *** | *** | *** | *** | *** | *** |

|---|

| Math | Hill Climbing | HC | OriginalHC | 1993 | 3 | easy |

|---|

| Math | * | * | SwarmHC | * | 3 | easy |

|---|

| Math | Cross-Entropy Method | CEM | OriginalCEM | 1997 | 4 | easy |

|---|

| Math | Tabu Search | TS | OriginalTS | 2004 | 5 | easy |

|---|

| Math | Sine Cosine Algorithm | SCA | OriginalSCA | 2016 | 2 | easy |

|---|

| Math | * | * | BaseSCA | * | 2 | easy |

|---|

| Math | * | * | QLE-SCA | 2022 | 4 | hard |

|---|

| Math | Gradient-Based Optimizer | GBO | OriginalGBO | 2020 | 5 | medium |

|---|

| Math | Arithmetic Optimization Algorithm | AOA | OrginalAOA | 2021 | 6 | easy |

|---|

| Math | Chaos Game Optimization | CGO | OriginalCGO | 2021 | 2 | easy |

|---|

| Math | Pareto-like Sequential Sampling | PSS | OriginalPSS | 2021 | 4 | medium |

|---|

| Math | weIghted meaN oF vectOrs | INFO | OriginalINFO | 2022 | 2 | medium |

|---|

| Math | RUNge Kutta optimizer | RUN | OriginalRUN | 2021 | 2 | hard |

|---|

| Math | Circle Search Algorithm | CircleSA | OriginalCircleSA | 2022 | 3 | easy |

|---|

| Math | Success History Intelligent Optimization | SHIO | OriginalSHIO | 2022 | 2 | easy |

|---|

| *** | *** | *** | *** | *** | *** | *** |

|---|

| Music | Harmony Search | HS | OriginalHS | 2001 | 4 | easy |

|---|