
portkey-ai


The Portkey SDK is built on top of the OpenAI SDK. You can integrate Portkey's advanced features while keeping full compatibility with OpenAI methods. With Portkey, you can enhance your interactions with OpenAI or any other OpenAI-like provider with robust monitoring, reliability, prompt management, and more, with minimal changes to your existing code.

| Feature | Description |
|---|---|
| Unified API Signature | If you've used OpenAI, you already know how to use Portkey with any other provider. |
| Interoperability | Write once, run with any provider. Switch between models from any provider seamlessly. |
| Automated Fallbacks & Retries | Keep your application functional even if a primary service fails. |
| Load Balancing | Efficiently distribute incoming requests among multiple models. |
| Semantic Caching | Reduce costs and latency by intelligently caching results. |
| Virtual Keys | Secure your LLM API keys by storing them in the Portkey vault and using disposable virtual keys. |
| Request Timeouts | Manage unpredictable LLM latencies by setting custom timeouts on requests. |
| Logging | Keep track of all requests for monitoring and debugging. |
| Request Tracing | Understand the journey of each request for optimization. |
| Custom Metadata | Segment and categorize requests for better insights. |
| Feedback | Collect and analyse weighted feedback on requests from users. |
| Analytics | Track your app's and LLM's performance with 40+ production-critical metrics in a single place. |
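Portkey provides fallbacks, retries, and load balancing as gateway features. The snippet below is not Portkey's implementation or API. It is a local, self-contained illustration of the control flow those features describe: retry one provider a few times, fall back to the next one, and pick targets in proportion to their weights. The names `ProviderError`, `call_with_retries`, `call_with_fallback`, `pick_weighted`, `flaky`, and `backup` are all hypothetical.

```python
import random
from typing import Callable, List, Optional, Sequence


class ProviderError(Exception):
    """Raised by a (hypothetical) provider call that fails."""


def call_with_retries(call: Callable[[str], str], prompt: str, attempts: int) -> str:
    """Try a single provider up to `attempts` times, re-raising the last error."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    last_error: Optional[ProviderError] = None
    for _ in range(attempts):
        try:
            return call(prompt)
        except ProviderError as err:
            last_error = err
    assert last_error is not None
    raise last_error


def call_with_fallback(
    providers: Sequence[Callable[[str], str]], prompt: str, attempts: int = 2
) -> str:
    """Walk the providers in order, retrying each one before falling back."""
    errors: List[ProviderError] = []
    for call in providers:
        try:
            return call_with_retries(call, prompt, attempts)
        except ProviderError as err:
            errors.append(err)
    raise ProviderError(f"all providers failed: {errors}")


def pick_weighted(targets: Sequence[str], weights: Sequence[float], rng: random.Random) -> str:
    """Load balancing: choose a target with probability proportional to its weight."""
    return rng.choices(targets, weights=weights, k=1)[0]


# Usage: the primary provider always fails, so the backup answers.
def flaky(prompt: str) -> str:
    raise ProviderError("primary down")


def backup(prompt: str) -> str:
    return f"backup: {prompt}"


print(call_with_fallback([flaky, backup], "hi"))  # backup: hi
print(pick_weighted(["model-a", "model-b"], [0.7, 0.3], random.Random(42)))
```

In a real deployment you would configure this behavior in Portkey rather than hand-roll it; the sketch only makes the semantics of "fallback" and "weighted distribution" concrete.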

# Installing the SDK

```sh
$ pip install portkey-ai
$ export PORTKEY_API_KEY=PORTKEY_API_KEY
```
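Your code can read the key exported above at runtime instead of embedding it in source. The following is a minimal sketch. The helper `get_portkey_api_key` is hypothetical. This README does not say whether the SDK also reads `PORTKEY_API_KEY` automatically, so the sketch passes the key explicitly.

```python
import os


def get_portkey_api_key(env_var: str = "PORTKEY_API_KEY") -> str:
    """Read the Portkey API key from the environment (hypothetical helper)."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"{env_var} is not set; run `export {env_var}=...` first")
    return key


# Example (requires portkey-ai to be installed):
# from portkey_ai import Portkey
# portkey = Portkey(api_key=get_portkey_api_key(), virtual_key="VIRTUAL_KEY")
```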

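Replace `from openai import OpenAI` with `from portkey_ai import Portkey`:

```python
from portkey_ai import Portkey

# Authenticate with your Portkey API key; the virtual key refers to
# provider credentials stored in the Portkey vault.
portkey = Portkey(
    api_key="PORTKEY_API_KEY",
    virtual_key="VIRTUAL_KEY",
)

# Same call signature as the OpenAI SDK.
chat_completion = portkey.chat.completions.create(
    messages=[{"role": "user", "content": "Say this is a test"}],
    model="gpt-4",
)

print(chat_completion)
```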

Use `AsyncPortkey` instead of `Portkey` with `await`:

```python
import asyncio

from portkey_ai import AsyncPortkey

portkey = AsyncPortkey(
    api_key="PORTKEY_API_KEY",
    virtual_key="VIRTUAL_KEY",
)


async def main():
    # The async client mirrors the sync API; each call must be awaited.
    chat_completion = await portkey.chat.completions.create(
        messages=[{"role": "user", "content": "Say this is a test"}],
        model="gpt-4",
    )
    print(chat_completion)


asyncio.run(main())
```
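An async client lets you run several requests concurrently, for example with `asyncio.gather`. The sketch below uses a hypothetical stand-in coroutine, `fake_create`, in place of `portkey.chat.completions.create` so that it runs without network access. Later prompts are given shorter delays to show that `gather` still returns results in input order.

```python
import asyncio
from typing import List


async def fake_create(prompt: str, delay: float) -> str:
    """Hypothetical stand-in for `await portkey.chat.completions.create(...)`."""
    await asyncio.sleep(delay)
    return f"reply to {prompt}"


async def run_concurrently(prompts: List[str]) -> List[str]:
    # All requests start at once; later prompts finish first because of
    # their shorter delays, but gather preserves the input order.
    tasks = [fake_create(p, 0.01 * (len(prompts) - i)) for i, p in enumerate(prompts)]
    return list(await asyncio.gather(*tasks))


results = asyncio.run(run_concurrently(["a", "b", "c"]))
print(results)  # ['reply to a', 'reply to b', 'reply to c']
```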

To use the Strands integration, install the `strands` extra:

```sh
pip install 'portkey-ai[strands]'
```

Usage with Strands:

```python
import asyncio

from strands.agent import Agent

from portkey_ai.integrations.strands import PortkeyStrands

model = PortkeyStrands(
    api_key="PORTKEY_API_KEY",
    model_id="@openai/gpt-4o-mini",
    # base_url="https://api.portkey.ai/v1",  # Optional
)

agent = Agent(model=model)


async def main():
    result = await agent.invoke_async("Tell me a short programming joke.")
    # Print the text if the result exposes it, otherwise the raw result.
    print(getattr(result, "text", result))


asyncio.run(main())
```

To use the Google ADK integration, install the `adk` extra:

```sh
pip install 'portkey-ai[adk]'
```

Usage with ADK:

```python
import asyncio

from google.adk.models.llm_request import LlmRequest
from google.genai import types

from portkey_ai.integrations.adk import PortkeyAdk

llm = PortkeyAdk(
    api_key="PORTKEY_API_KEY",
    model="@openai/gpt-4o-mini",
    # base_url="https://api.portkey.ai/v1",  # Optional
)

req = LlmRequest(
    model="@openai/gpt-4o-mini",
    contents=[
        types.Content(
            role="user",
            parts=[types.Part.from_text(text="Tell me a short programming joke.")],
        )
    ],
)


async def main():
    # Print only partial chunks to avoid duplicate final output
    async for resp in llm.generate_content_async(req, stream=True):
        if getattr(resp, "partial", False) and resp.content and resp.content.parts:
            for p in resp.content.parts:
                if getattr(p, "text", None):
                    print(p.text, end="")
    print()


asyncio.run(main())
```
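The comment about avoiding duplicate final output suggests that the stream yields partial chunks followed by a final response with the full text. That reading is our assumption, inferred from the comment. The simulation below uses `SimpleNamespace` stand-ins with the same attributes the loop reads. It shows why filtering on `partial` prints the joke once. The helpers `make_resp` and `collect` are hypothetical.

```python
from types import SimpleNamespace


def make_resp(text: str, partial: bool) -> SimpleNamespace:
    """Build a stand-in response with `.partial` and `.content.parts[i].text`."""
    part = SimpleNamespace(text=text)
    return SimpleNamespace(partial=partial, content=SimpleNamespace(parts=[part]))


# Assumed stream shape: two partial chunks, then one aggregated final response.
stream = [
    make_resp("Why do programmers ", partial=True),
    make_resp("prefer dark mode?", partial=True),
    make_resp("Why do programmers prefer dark mode?", partial=False),
]


def collect(responses, partial_only: bool) -> str:
    """Concatenate text parts, optionally skipping non-partial responses."""
    out = []
    for resp in responses:
        if partial_only and not getattr(resp, "partial", False):
            continue
        if resp.content and resp.content.parts:
            out.extend(p.text for p in resp.content.parts if getattr(p, "text", None))
    return "".join(out)


print(collect(stream, partial_only=True))   # the joke, once
print(collect(stream, partial_only=False))  # the joke, twice
```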

Non-streaming example (single final response):

```python
import asyncio

from google.adk.models.llm_request import LlmRequest
from google.genai import types

from portkey_ai.integrations.adk import PortkeyAdk

llm = PortkeyAdk(
    api_key="PORTKEY_API_KEY",
    model="@openai/gpt-4o-mini",
)

req = LlmRequest(
    model="@openai/gpt-4o-mini",
    contents=[
        types.Content(
            role="user",
            parts=[types.Part.from_text(text="Give me a one-line programming joke (final only).")],
        )
    ],
)


async def main():
    # With stream=False, collect the text parts of the final response.
    final_text = []
    async for resp in llm.generate_content_async(req, stream=False):
        if resp.content and resp.content.parts:
            for p in resp.content.parts:
                if getattr(p, "text", None):
                    final_text.append(p.text)
    print("".join(final_text))


asyncio.run(main())
```

Configuration notes:

`system_role`: By default, the adapter sends the system instruction as a message with the `developer` role to align with ADK. If your provider expects a strict `system` role, pass `system_role="system"` when constructing `PortkeyAdk`:

```python
from portkey_ai.integrations.adk import PortkeyAdk

llm = PortkeyAdk(
    model="@openai/gpt-4o-mini",
    api_key="PORTKEY_API_KEY",
    system_role="system",  # switch from the default "developer"
)
```
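The `system_role` option controls which role the system instruction is sent under. The sketch below is a hypothetical helper, `build_messages`, and not the adapter's code. It shows the OpenAI-style message list that each setting would produce.

```python
from typing import Dict, List


def build_messages(
    system_instruction: str, user_text: str, system_role: str = "developer"
) -> List[Dict[str, str]]:
    """Prepend the system instruction under the chosen role (hypothetical helper)."""
    if system_role not in ("developer", "system"):
        raise ValueError("system_role must be 'developer' or 'system'")
    messages: List[Dict[str, str]] = []
    if system_instruction:
        messages.append({"role": system_role, "content": system_instruction})
    messages.append({"role": "user", "content": user_text})
    return messages


print(build_messages("Be terse.", "Tell me a joke."))
print(build_messages("Be terse.", "Tell me a joke.", system_role="system"))
```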

Tools: When tools are present in the ADK request, the adapter sets tool_choice="auto" to enable function calling by default (mirrors the Strands adapter behavior).
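In OpenAI-compatible chat requests, `tool_choice="auto"` lets the model decide whether to call one of the supplied tools. The following sketch builds such a request body. `build_chat_request` and the `get_weather` tool are hypothetical. The tool definition follows the OpenAI chat completions function-tool format. This is not the adapter's actual request construction.

```python
import json
from typing import Any, Dict, List, Optional


def build_chat_request(
    model: str,
    messages: List[Dict[str, str]],
    tools: Optional[List[Dict[str, Any]]] = None,
) -> Dict[str, Any]:
    """Assemble an OpenAI-style chat request body (hypothetical helper)."""
    request: Dict[str, Any] = {"model": model, "messages": messages}
    if tools:
        # With tools present, let the model decide whether to call one.
        request["tools"] = tools
        request["tool_choice"] = "auto"
    return request


get_weather_tool = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Look up the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

request = build_chat_request(
    "@openai/gpt-4o-mini",
    [{"role": "user", "content": "What's the weather in Paris?"}],
    tools=[get_weather_tool],
)
print(json.dumps(request, indent=2))
```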

As of the versions listed below, Portkey supports all OpenAI methods, including the legacy ones.

| Methods | OpenAI V1.26.0 | Portkey V1.3.1 |
|---|---|---|
| Audio | ✅ | ✅ |
| Chat | ✅ | ✅ |
| Embeddings | ✅ | ✅ |
| Images | ✅ | ✅ |
| Fine-tuning | ✅ | ✅ |
| Batch | ✅ | ✅ |
| Files | ✅ | ✅ |
| Models | ✅ | ✅ |
| Moderations | ✅ | ✅ |
| Assistants | ✅ | ✅ |
| Threads | ✅ | ✅ |
| Thread - Messages | ✅ | ✅ |
| Thread - Runs | ✅ | ✅ |
| Thread - Run - Steps | ✅ | ✅ |
| Vector Store | ✅ | ✅ |
| Vector Store - Files | ✅ | ✅ |
| Vector Store - Files Batches | ✅ | ✅ |
| Generations | ❌ (Deprecated) | ✅ |
| Completions | ❌ (Deprecated) | ✅ |

| Methods | Portkey V1.3.1 |
|---|---|
| Feedback | ✅ |
| Prompts | ✅ |

Get started by checking out GitHub issues. Email us at support@portkey.ai or ping us on Discord to chat.


Python client library for the Portkey API


Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

A surge of AI-generated vulnerability reports has pushed open source maintainers to rethink bug bounties and tighten security disclosure processes.

Product

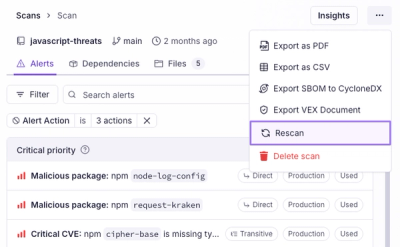

Scan results now load faster and remain consistent over time, with stable URLs and on-demand rescans for fresh security data.

Product

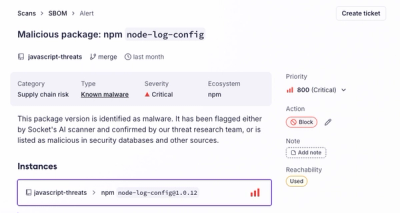

Socket's new Alert Details page is designed to surface more context, with a clearer layout, reachability dependency chains, and structured review.