
protein-metamorphisms-is


Comprehensive Python module for protein data management, designed for streamlined integration and processing of protein information from UniProt and PDB. It provides concurrent data fetching, robust error handling, and database synchronization.

Protein Information System (PIS) is an integrated biological information system focused on extracting, processing, and managing protein-related data. PIS consolidates data from UniProt, PDB, and GOA, enabling the efficient retrieval and organization of protein sequences, structures, and functional annotations.
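The sketch below illustrates what consolidating these sources can look like: it merges per-source data into one record per UniProt accession. It is a minimal illustration, not PIS's actual data model. The `ProteinRecord` class, the `consolidate` function, and the input shapes are all hypothetical, and the usage values are toy inputs (a truncated sequence and illustrative cross-references).

```python
from dataclasses import dataclass, field


@dataclass
class ProteinRecord:
    """Hypothetical consolidated view of one protein, keyed by UniProt accession."""
    accession: str
    sequence: str = ""
    pdb_ids: set[str] = field(default_factory=set)
    go_terms: set[str] = field(default_factory=set)


def consolidate(uniprot, pdb_links, goa):
    """Merge per-source data into one ProteinRecord per UniProt accession.

    uniprot:   {accession: sequence}
    pdb_links: iterable of (accession, pdb_id) cross-references
    goa:       iterable of (accession, go_id) annotations
    """
    records = {acc: ProteinRecord(acc, seq) for acc, seq in uniprot.items()}
    for acc, pdb_id in pdb_links:
        if acc in records:  # ignore cross-references to proteins not retrieved
            records[acc].pdb_ids.add(pdb_id)
    for acc, go_id in goa:
        if acc in records:
            records[acc].go_terms.add(go_id)
    return records


# Toy usage: truncated sequence and illustrative identifiers.
records = consolidate(
    {"P69905": "MVLSPADKTNVKAAW"},
    [("P69905", "1A3N")],
    [("P69905", "GO:0005344")],
)
```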

The primary goal of PIS is to provide a robust framework for large-scale protein data extraction that supports downstream functional analysis and annotation transfer. The system is designed for high-performance computing (HPC) environments, with scalability and efficiency as design goals.
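The following minimal sketch shows concurrent fetching with per-item error handling, using Python's standard `concurrent.futures`. It assumes a caller-supplied `fetch(key)` function, for example one that downloads a single UniProt entry. `fetch_with_retry` and `fetch_all` are hypothetical helpers, not part of the PIS API. Because the README lists RabbitMQ as a required service, PIS may distribute work through a message queue rather than threads.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def fetch_with_retry(fetch, key, retries=3, backoff=0.5):
    """Call fetch(key); on an exception, retry with exponential backoff."""
    if retries < 1:
        raise ValueError("retries must be at least 1")
    for attempt in range(retries):
        try:
            return fetch(key)
        except Exception:
            if attempt == retries - 1:
                raise  # out of attempts: let the caller record the failure
            time.sleep(backoff * 2 ** attempt)


def fetch_all(fetch, keys, max_workers=8, retries=3, backoff=0.5):
    """Fetch many keys concurrently; return (results, failures) dicts."""
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            pool.submit(fetch_with_retry, fetch, key, retries, backoff): key
            for key in keys
        }
        for future in as_completed(futures):
            key = futures[future]
            try:
                results[key] = future.result()
            except Exception as exc:
                # One failing entry should not abort the whole batch.
                failures[key] = exc
    return results, failures
```

Collecting failures separately, rather than raising, keeps one bad accession from aborting a large batch. The failed keys can then be logged or retried afterwards.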

🔄 FANTASIA has been completely redesigned and is now available at:

FANTASIA Repository

This new version is a pipeline for annotating protein sequences in FASTA files with Gene Ontology (GO) terms. The redesign focuses on long-term support, updated dependencies, and improved integration with High-Performance Computing (HPC) environments.
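The redesigned pipeline takes FASTA files as input. As a reminder of that format, here is a minimal standard-library reader. It is an illustrative sketch, not FANTASIA's own parser. The `read_fasta` name and the choice of the first header token as the record ID are assumptions.

```python
def read_fasta(lines):
    """Parse FASTA-formatted lines into a dict mapping record ID to sequence.

    The ID is the first whitespace-delimited token after '>'; sequence
    lines are concatenated. Lines before the first header are ignored,
    and a repeated ID overwrites the earlier record.
    """
    records = {}
    header, chunks = None, []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # tolerate blank lines
        if line.startswith(">"):
            if header is not None:
                records[header] = "".join(chunks)
            tokens = line[1:].split(maxsplit=1)
            header, chunks = (tokens[0] if tokens else ""), []
        elif header is not None:
            chunks.append(line)
    if header is not None:
        records[header] = "".join(chunks)
    return records
```

Because it accepts any iterable of lines, an open file handle works directly, e.g. `with open("proteins.fasta") as fh: sequences = read_fasta(fh)`. The file name is a placeholder.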

🛠️ A stable version of the information system for working with UniProt and annotation transfer is available at:

Zenodo Stable Release

This version serves as a reference implementation and provides a consistent environment for annotation transfer tasks.
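This README does not describe how annotation transfer is performed. One common approach copies GO terms from the most similar reference protein, with similarity measured as cosine distance between embedding vectors. The pgvector image used below is built for this kind of vector search. The sketch below is purely hypothetical: the function names, the `max_distance` cutoff, and the reference layout are assumptions, not the behavior of the Zenodo release.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity; a zero vector is treated as orthogonal (1.0)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0:
        return 1.0
    return 1.0 - dot / norm


def transfer_annotations(query_vec, reference, max_distance=0.5):
    """Copy GO terms from the closest reference protein (hypothetical method).

    reference: {accession: (embedding, set_of_go_terms)}
    Returns (accession, go_terms), or (None, set()) if nothing is close enough.
    """
    best_acc, best_dist = None, math.inf
    for acc, (vec, _terms) in reference.items():
        dist = cosine_distance(query_vec, vec)
        if dist < best_dist:
            best_acc, best_dist = acc, dist
    if best_acc is None or best_dist > max_distance:
        return None, set()
    # Return a copy so callers cannot mutate the reference annotations.
    return best_acc, set(reference[best_acc][1])
```

The distance cutoff prevents a query with no reasonably similar neighbour from inheriting unrelated annotations. In a real system, the linear scan would be replaced by an indexed vector search in the database.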

Ensure Docker is installed on your system. If it is not, download and install it from the official Docker website.

PIS requires running PostgreSQL and RabbitMQ services. Start a PostgreSQL container with the pgvector extension using the command below:

docker run -d --name pgvectorsql \
  -e POSTGRES_USER=usuario \
  -e POSTGRES_PASSWORD=clave \
  -e POSTGRES_DB=BioData \
  -p 5432:5432 \
  pgvector/pgvector:pg16

To inspect the database, you can use pgAdmin 4 (a graphical interface for managing and interacting with PostgreSQL databases) or any other SQL client.
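Whichever client you use, the connection parameters come directly from the `docker run` flags above. The sketch below assembles them into a PostgreSQL connection URI and percent-encodes the credentials in case they contain reserved characters. The helper name is hypothetical, and this README does not show how PIS itself reads these values from config.yaml.

```python
from urllib.parse import quote


def postgres_dsn(user, password, db, host="localhost", port=5432):
    """Build a postgresql:// connection URI with percent-encoded credentials."""
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(db, safe='')}"
    )


# Values taken from the docker run command above.
DSN = postgres_dsn("usuario", "clave", "BioData")
print(DSN)  # postgresql://usuario:clave@localhost:5432/BioData
```

pgvector must be enabled once per database with `CREATE EXTENSION IF NOT EXISTS vector;` before vector columns can be created. This README does not say whether PIS runs that statement itself.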

Start a RabbitMQ container using the command below:

docker run -d --name rabbitmq \
  -p 15672:15672 \
  -p 5672:5672 \
  rabbitmq:management

Once RabbitMQ is running, its management interface is available at http://localhost:15672 (the port published above).
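Before running the pipeline, you may want to confirm that both containers accept connections. This standard-library sketch polls TCP ports until they open or a timeout expires. `wait_for_port`, `check_services`, and the `SERVICES` table are illustrative and not part of PIS. The ports come from the `-p` flags in this README. Note that an open port only shows that a process is listening, not that the service has finished initializing.

```python
import socket
import time

# Host ports published by the docker run commands in this README.
SERVICES = {"PostgreSQL": 5432, "RabbitMQ (AMQP)": 5672, "RabbitMQ management": 15672}


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to (host, port) succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


def check_services(host="localhost", timeout=10.0):
    """Return {service_name: reachable} for every entry in SERVICES."""
    return {name: wait_for_port(host, port, timeout) for name, port in SERVICES.items()}
```

Calling `check_services()` before `python main.py` turns a confusing mid-run connection error into an early, explicit check.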

To execute the full extraction process, simply run:

python main.py

This runs the complete workflow, from the initial data preprocessing stages through to final data organization and storage.

You can customize the sequence of tasks executed by modifying main.py or adjusting the relevant parameters in the config.yaml file. This allows you to tailor the extraction process to meet specific research needs or to experiment with different data processing configurations.
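One way to make a task sequence configurable is to keep a registry of named tasks and run them in the order a config file lists them. The sketch below is hypothetical: the `tasks` key, the task names, and `run_pipeline` are illustrations only, because this README documents neither the config.yaml schema nor how main.py orders its tasks. In practice, the `config` dict could come from `yaml.safe_load` (PyYAML) applied to config.yaml.

```python
from typing import Callable

# Registry mapping a task name to a function that updates a shared context dict.
TASKS: dict[str, Callable[[dict], None]] = {}


def task(name: str):
    """Decorator that registers a function under a task name."""
    def register(fn: Callable[[dict], None]) -> Callable[[dict], None]:
        TASKS[name] = fn
        return fn
    return register


@task("fetch_accessions")
def fetch_accessions(ctx: dict) -> None:
    # Stand-in for a real data-source query.
    ctx["accessions"] = ["P69905", "P68871"]


@task("count_accessions")
def count_accessions(ctx: dict) -> None:
    # Depends on fetch_accessions having run earlier in the sequence.
    ctx["n_accessions"] = len(ctx["accessions"])


def run_pipeline(config: dict) -> dict:
    """Run the tasks listed under config["tasks"], in order, sharing one context."""
    names = config.get("tasks", [])
    for name in names:  # validate everything before running anything
        if name not in TASKS:
            raise KeyError(f"unknown task in config: {name!r}")
    ctx: dict = {}
    for name in names:
        TASKS[name](ctx)
    return ctx
```

Because every task name is validated before any task runs, a typo in the config fails immediately instead of partway through a long extraction.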


Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Automatically fix and test dependency updates with socket fix—a new CLI tool that turns CVE alerts into safe, automated upgrades.

Security News

CISA denies CVE funding issues amid backlash over a new CVE foundation formed by board members, raising concerns about transparency and program governance.

Product

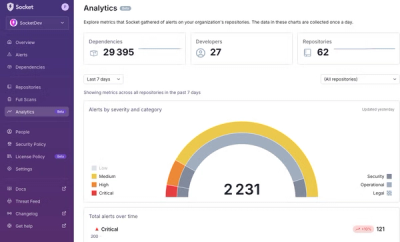

We’re excited to announce a powerful new capability in Socket: historical data and enhanced analytics.