
A library for deserializing schemaless Avro-encoded bytes into Apache Arrow record batches. It was created as an experiment to gauge potential improvements in Kafka message deserialization speed, particularly from the Python ecosystem.

The main speed-ups in this code come from releasing Python's GIL during deserialization and from using multiple cores. The speed-ups are much more noticeable on larger datasets or more complex Avro schemas.
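As a rough illustration of why releasing the GIL matters, the sketch below uses only the Python standard library, not pyruhvro itself. `hashlib` releases the GIL while hashing large buffers, so a thread pool can spread the work across cores. The `digest` helper and the 1 MiB payloads are hypothetical stand-ins for batches of messages; how pyruhvro schedules its own work is not described here.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def digest(payload: bytes) -> str:
    # hashlib releases the GIL while hashing large buffers, so several
    # of these calls can make progress on different cores at once.
    return hashlib.sha256(payload).hexdigest()


# Hypothetical workload: eight 1 MiB buffers standing in for message batches.
payloads = [bytes([i]) * (1024 * 1024) for i in range(8)]

# Sequential baseline.
sequential = [digest(p) for p in payloads]

# Threaded version; pool.map returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    threaded = list(pool.map(digest, payloads))

# Both approaches produce identical results; only the wall-clock time differs.
assert threaded == sequential
```

Pure-Python work that holds the GIL would not benefit from threads in the same way, which is why the gain depends on the heavy lifting happening in native code.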

This library is still experimental and has not been tested in production. Please use with caution.

    Running pyruhvro serialize
    20 loops, best of 5: 13.8 msec per loop
    running fastavro serialize
    5 loops, best of 5: 71.7 msec per loop
    running pyruhvro deserialize
    50 loops, best of 5: 6.59 msec per loop
    running fastavro deserialize
    5 loops, best of 5: 55.3 msec per loop
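To put the timings above in relative terms, the short calculation below divides each fastavro time by the matching pyruhvro time. The numbers are copied from the benchmark output; the dictionary layout and variable names are only illustrative.

```python
# Timings in milliseconds, copied from the benchmark output above.
timings_ms = {
    "serialize": {"pyruhvro": 13.8, "fastavro": 71.7},
    "deserialize": {"pyruhvro": 6.59, "fastavro": 55.3},
}

# Speed-up factor: how many times longer fastavro takes per loop.
speedups = {op: t["fastavro"] / t["pyruhvro"] for op, t in timings_ms.items()}

for op, factor in speedups.items():
    print(f"{op}: pyruhvro is about {factor:.1f}x faster than fastavro")
```

On this particular run that works out to roughly 5.2x for serialization and 8.4x for deserialization. These figures come from a single machine and dataset, so they are indicative only.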

    pip install pyruhvro
    pip install fastavro
    pip install pyarrow
    cd scripts
    bash benchmark.sh

See scripts/generate_avro.py for a working example.

    from typing import List

    from pyarrow import RecordBatch
    from pyruhvro import deserialize_array_threaded, serialize_record_batch

    # Avro schema, passed to pyruhvro as a JSON string
    schema = """
    {
      "type": "record",
      "name": "userdata",
      "namespace": "com.example",
      "fields": [
        {
          "name": "userid",
          "type": "string"
        },
        {
          "name": "age",
          "type": "int"
        },
        ... more fields...
      ]
    }
    """

    # serialized values from kafka messages
    serialized_messages: List[bytes] = [serialized_message1, serialized_message2, ...]

    # num_chunks is the number of chunks to break the data down into.
    # These chunks can be picked up by other threads/cores on your machine.
    num_chunks = 8
    record_batches: List[RecordBatch] = deserialize_array_threaded(serialized_messages, schema, num_chunks)

    # serialize the record batches back to avro
    serialized_records = [serialize_record_batch(r, schema, 8) for r in record_batches]
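The `num_chunks` argument controls how many pieces the input is divided into. The sketch below shows one plausible way a list of messages could be split into contiguous, near-equal chunks. `split_into_chunks` is a hypothetical helper written for illustration; how pyruhvro actually partitions its input is an assumption and is not documented here.

```python
from typing import List, Sequence


def split_into_chunks(items: Sequence[bytes], num_chunks: int) -> List[List[bytes]]:
    """Split items into at most num_chunks contiguous, near-equal chunks."""
    if num_chunks < 1:
        raise ValueError("num_chunks must be at least 1")
    # Every chunk gets `base` items; the first `extra` chunks get one more.
    base, extra = divmod(len(items), num_chunks)
    chunks: List[List[bytes]] = []
    start = 0
    for i in range(num_chunks):
        size = base + (1 if i < extra else 0)
        if size == 0:
            break  # fewer items than chunks: stop instead of emitting empties
        chunks.append(list(items[start:start + size]))
        start += size
    return chunks


# Ten placeholder messages split four ways.
messages = [f"msg-{i}".encode() for i in range(10)]
chunks = split_into_chunks(messages, 4)
print([len(c) for c in chunks])  # [3, 3, 2, 2]
```

With a scheme like this, a `num_chunks` close to the number of available cores keeps each thread busy without creating many tiny chunks. The best value for a given workload is worth measuring.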

Building from source requires the Rust toolchain to be installed.

    pip install maturin
    maturin build --release
    # the build prints the path of the wheel it produced, for example:
    # /users/currentuser/rust/pyruhvro/target/wheels/pyruhvro-0.1.0-cp312-cp312-macosx_11_0_arm64.whl
    pip install /users/currentuser/rust/pyruhvro/target/wheels/pyruhvro-0.1.0-cp312-cp312-macosx_11_0_arm64.whl

FAQs

Fast, multi-threaded deserialization of schemaless Avro-encoded messages.

We found that pyruhvro demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

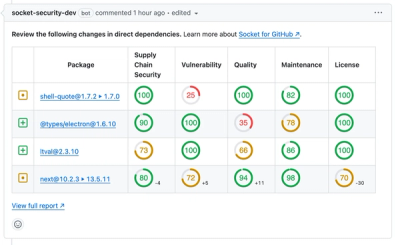

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security Fundamentals

The Socket Threat Research Team uncovers how threat actors weaponize shell techniques across npm, PyPI, and Go ecosystems to maintain persistence and exfiltrate data.

Security News

At VulnCon 2025, NIST scrapped its NVD consortium plans, admitted it can't keep up with CVEs, and outlined automation efforts amid a mounting backlog.

Product

We redesigned our GitHub PR comments to deliver clear, actionable security insights without adding noise to your workflow.