
StreamXfer is a powerful tool for streaming data from SQL Server to local or object storage (S3) using UNIX pipes, supporting common data formats (CSV, TSV, JSON).


Supported OS: Linux, macOS

I've migrated 10 TB of data from SQL Server into Amazon Redshift using this tool.
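StreamXfer's description says it moves data over UNIX pipes. The general idea can be sketched in Python: a producer process writes rows to stdout, and the consumer reads that pipe in fixed-size chunks and compresses each chunk straight to disk, so the full export never sits in memory. This is a minimal illustration of the technique, not StreamXfer's implementation; the producer is a hypothetical stand-in (a tiny Python child process) for a real export tool, and `stream_to_gzip` is a hypothetical helper name.

```python
import gzip
import subprocess
import sys

# Hypothetical producer: a child Python process that prints CSV rows,
# standing in for a real database export tool writing to stdout.
PRODUCER_CODE = (
    "import sys\n"
    "for i in range(5):\n"
    "    sys.stdout.write(f'{i},row-{i}\\n')\n"
)


def stream_to_gzip(cmd: list[str], dest: str, chunk_size: int = 64 * 1024) -> int:
    """Pipe a child process's stdout into a gzip file, chunk by chunk.

    Returns the number of uncompressed bytes copied. Only one chunk is
    held in memory at a time.
    """
    copied = 0
    with subprocess.Popen(cmd, stdout=subprocess.PIPE) as proc, gzip.open(dest, "wb") as out:
        assert proc.stdout is not None  # guaranteed by stdout=PIPE
        while True:
            chunk = proc.stdout.read(chunk_size)
            if not chunk:  # EOF: the producer closed its end of the pipe
                break
            out.write(chunk)
            copied += len(chunk)
    # Leaving the Popen context waits for the child, so returncode is set.
    if proc.returncode != 0:
        raise subprocess.CalledProcessError(proc.returncode, cmd)
    return copied


if __name__ == "__main__":
    n = stream_to_gzip([sys.executable, "-c", PRODUCER_CODE], "rows.csv.gz")
    print(f"copied {n} bytes")
```

The chunk size bounds memory use regardless of how large the export is; the pipe itself provides back-pressure, since the producer blocks when the consumer falls behind.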

Before installing StreamXfer, you need to install the following dependencies:

Then, install StreamXfer from PyPI:

$ python3 -m pip install streamxfer

Alternatively, install from source:

$ git clone https://github.com/zhiweio/StreamXfer.git && cd StreamXfer/

$ python3 setup.py install
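After installing, a quick way to confirm that both the Python package and the `stx` command are visible is a small standard-library check. `check_install` is a hypothetical helper, not part of StreamXfer; it assumes the distribution name is `streamxfer` (as in the pip command) and the console script is `stx` (as in the usage below).

```python
import shutil
from importlib.metadata import PackageNotFoundError, version


def check_install(dist: str = "streamxfer", script: str = "stx") -> dict:
    """Report the installed version of `dist` and the path of `script`, if any."""
    try:
        dist_version = version(dist)
    except PackageNotFoundError:
        dist_version = None  # package not installed in this environment
    return {
        "version": dist_version,
        "script": shutil.which(script),  # None when not found on PATH
    }


if __name__ == "__main__":
    print(check_install())
```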

StreamXfer can be used as a command-line tool or as a library in Python.

$ stx [OPTIONS] PYMSSQL_URL TABLE OUTPUT_PATH

Here is an example command:

$ stx 'mssql+pymssql://user:pass@host:port/db' '[dbo].[test]' /local/path/to/dir/
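The first argument is a connection URL in the SQLAlchemy-style `dialect+driver://user:password@host:port/database` form; `user`, `pass`, `host`, `port` and `db` in the example are placeholders. Assuming StreamXfer reads it like a standard URL, Python's `urllib.parse.urlsplit` is a handy way to sanity-check a URL before starting a long transfer. `describe_url` is a hypothetical helper, not part of StreamXfer.

```python
from urllib.parse import urlsplit


def describe_url(url: str) -> dict:
    """Split a connection URL into the parts a SQL Server client needs."""
    parts = urlsplit(url)
    return {
        "dialect": parts.scheme,           # e.g. "mssql+pymssql"
        "user": parts.username,
        "password": parts.password,
        "host": parts.hostname,            # None if the "//" authority is missing
        "port": parts.port,                # int, or None if omitted
        "database": parts.path.lstrip("/"),
    }


print(describe_url("mssql+pymssql://sa:secret@db.example.com:1433/sales"))
```

A URL whose host comes back as `None` (for instance, one written with `::` instead of `://`) has no authority part and is worth fixing before running `stx`. Note that `parts.port` raises `ValueError` if the port is not a number, so the literal placeholder `port` must be replaced first.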

You can also use the following options:

-F, --format: The data format (CSV, TSV, or JSON; default JSON).

-C, --compress-type: The compression type (LZOP or GZIP).

For more information on the options, run stx --help.

$ stx --help

Usage: stx [OPTIONS] PYMSSQL_URL TABLE OUTPUT_PATH

  StreamXfer is a powerful tool for streaming data from SQL Server to local or
  object storage(S3) for seamless transfer using UNIX pipe, supporting various
  general data formats(CSV, TSV, JSON).

  Examples:

    stx 'mssql+pymssql://user:pass@host:port/db' '[dbo].[test]' /local/path/to/dir/

    stx 'mssql+pymssql://user:pass@host:port/db' '[dbo].[test]' s3://bucket/path/to/dir/

Options:
  -F, --format [CSV|TSV|JSON]      [default: JSON]
  -C, --compress-type [LZOP|GZIP]
  -h, --help                       Show this message and exit.
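When `stx` runs from a Python job (for example, a scheduled export), building the argument list explicitly avoids shell quoting problems with values such as `[dbo].[test]`, whose brackets a shell could otherwise treat as a glob pattern. The sketch below uses only the options listed in the help output; `build_stx_argv` and `run_stx` are hypothetical helpers, and the connection URL is a placeholder.

```python
import subprocess

FORMATS = ("CSV", "TSV", "JSON")
COMPRESS_TYPES = ("LZOP", "GZIP")


def build_stx_argv(url, table, output_path, fmt="JSON", compress_type=None):
    """Build an argv list for `stx [OPTIONS] PYMSSQL_URL TABLE OUTPUT_PATH`."""
    fmt = fmt.upper()
    if fmt not in FORMATS:
        raise ValueError(f"format must be one of {FORMATS}, got {fmt!r}")
    argv = ["stx", "-F", fmt]
    if compress_type is not None:
        compress_type = compress_type.upper()
        if compress_type not in COMPRESS_TYPES:
            raise ValueError(
                f"compress type must be one of {COMPRESS_TYPES}, got {compress_type!r}"
            )
        argv += ["-C", compress_type]
    # Positional arguments come last, in the order shown in the usage line.
    argv += [url, table, output_path]
    return argv


def run_stx(*args, **kwargs) -> None:
    """Run stx without a shell; raises CalledProcessError on a non-zero exit."""
    subprocess.run(build_stx_argv(*args, **kwargs), check=True)


if __name__ == "__main__":
    url = "mssql+pymssql://user:pass@db.example.com:1433/db"  # placeholder URL
    print(build_stx_argv(url, "[dbo].[test]", "s3://bucket/path/to/dir/",
                         fmt="csv", compress_type="gzip"))
```

Passing a list to `subprocess.run` (with the default `shell=False`) hands each value to `stx` exactly as written, so no extra quoting is needed.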

To use StreamXfer as a library in Python, import the StreamXfer class (along with Format and CompressType) and use it to build and pump the data stream.

Here is an example code snippet:

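from streamxfer import StreamXfer
from streamxfer.format import Format
from streamxfer.compress import CompressType

# Connection URL plus the output format and compression for the exported data
sx = StreamXfer(
    "mssql+pymssql://user:pass@host:port/db",
    format=Format.CSV,
    compress_type=CompressType.LZOP,
    chunk_size=1000000,
)
# Set up the stream from the source table to the destination (local dir or S3)
sx.build("[dbo].[test]", path="s3://bucket/path/to/dir/")
# Run the transfer
sx.pump()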
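The `Format` choice here (and `-F` on the command line) selects among CSV, TSV and JSON. To get a feel for the difference, the sketch below renders the same rows in each format with Python's standard `csv` and `json` modules. It is an illustration under stated assumptions, not StreamXfer's serializer: it assumes JSON means one object per line and that CSV/TSV carry no header row, and StreamXfer's actual quoting, headers and layout may differ. `render` is a hypothetical helper.

```python
import csv
import io
import json


def render(rows: list[dict], fmt: str) -> str:
    """Render rows as CSV, TSV, or JSON Lines (hypothetical layouts)."""
    fmt = fmt.upper()
    if fmt == "JSON":
        # Assumption: one JSON object per line.
        return "".join(json.dumps(row) + "\n" for row in rows)
    if fmt in ("CSV", "TSV"):
        buf = io.StringIO()
        writer = csv.writer(
            buf,
            delimiter="," if fmt == "CSV" else "\t",
            lineterminator="\n",
        )
        for row in rows:
            writer.writerow(row.values())  # no header row (assumption)
        return buf.getvalue()
    raise ValueError(f"unsupported format: {fmt!r}")


rows = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Smith, J."}]
for fmt in ("CSV", "TSV", "JSON"):
    print(fmt)
    print(render(rows, fmt), end="")
```

The second row shows why the delimiter matters: its embedded comma forces quoting in CSV but not in TSV.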


We found that streamxfer demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

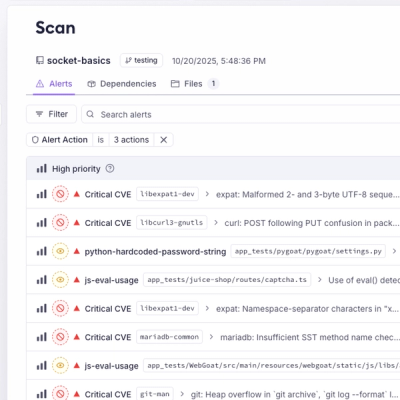

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Add real-time Socket webhook events to your workflows to automatically receive pull request scan results and security alerts in real time.

Research

The Socket Threat Research Team uncovered malicious NuGet packages typosquatting the popular Nethereum project to steal wallet keys.

Product

A single platform for static analysis, secrets detection, container scanning, and CVE checks—built on trusted open source tools, ready to run out of the box.