
Provides a universal solution for crawler platforms. Read more: https://github.com/ClericPy/uniparser.

Provides a general low-code page parsing solution.

Backwards Compatibility Breaking Warning:

uniparser will not install any default parsers after version v3.0.0. You can install the ones you need manually ('selectolax', 'jsonpath-rw-ext', 'objectpath', 'bs4', 'toml', 'pyyaml>=5.3', 'lxml', 'jmespath'). This warning will remain for 2 versions.

pip install uniparser -U

or

pip install uniparser[parsers] (with the default third-party parsers)
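Since the parsers are no longer installed by default, it can help to check which optional packages are importable before writing rules. A minimal stdlib-only sketch; `missing_parsers` is a hypothetical helper (not part of uniparser), and the mapping from pip names to import names (for example `pyyaml` imports as `yaml`) is an assumption based on each package's usual import name.

```python
import importlib.util

# Assumed mapping: pip package name -> top-level import name.
OPTIONAL_PARSERS = {
    "selectolax": "selectolax",
    "jsonpath-rw-ext": "jsonpath_rw_ext",
    "objectpath": "objectpath",
    "bs4": "bs4",
    "toml": "toml",
    "pyyaml": "yaml",
    "lxml": "lxml",
    "jmespath": "jmespath",
}


def missing_parsers():
    """Hypothetical helper: pip names of optional packages that cannot be imported."""
    return [
        pip_name
        for pip_name, module_name in OPTIONAL_PARSERS.items()
        # find_spec returns None when a top-level module is not installed
        if importlib.util.find_spec(module_name) is None
    ]


if __name__ == "__main__":
    missing = missing_parsers()
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("All optional parsers are installed.")
```

The printed command installs only what is absent; `pip install uniparser[parsers]` remains the simpler route when you want all of them.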

The Web UI can also be mounted into an existing FastAPI app: app.mount("/uniparser", uniparser_app)

Supported parsers (with the third-party packages they rely on):

1. css (HTML)
    1. bs4
2. xml
    1. lxml
3. regex
4. jsonpath
    1. jsonpath-rw-ext
5. objectpath
    1. objectpath
6. jmespath
    1. jmespath
7. time
8. loader
    1. json / yaml / toml
        1. toml
        2. pyyaml
9. udf
    1. source code for exec & eval; a function to be executed must be named **parse**
10. python
    1. some common Python methods: getitem, split, join...
11. *waiting for new ones...*
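The udf parser runs user-supplied source with exec or eval. A minimal sketch of that idea, assuming a single expression is evaluated directly while any other source is executed and must define a callable named `parse`. `run_udf` and the names in its namespace (`obj`, `md5`, `json_loads`, `json_dumps`, `context`) are hypothetical, chosen to mirror the udf cases in the benchmark list; this is not uniparser's implementation.

```python
import hashlib
import json


def run_udf(input_object, source, context=None):
    """Hypothetical udf step: eval a single expression, else exec and call parse()."""
    # exec/eval run arbitrary code, so only use rules from trusted sources.
    namespace = {
        "input_object": input_object,
        "obj": input_object,  # assumed short alias
        "context": {} if context is None else context,
        # assumed helper functions, named after the benchmark cases
        "md5": lambda s: hashlib.md5(str(s).encode("utf-8")).hexdigest(),
        "json_loads": json.loads,
        "json_dumps": json.dumps,
    }
    try:
        code = compile(source, "<udf>", "eval")
    except SyntaxError:
        # Not a single expression: run the statements, then call parse().
        exec(compile(source, "<udf>", "exec"), namespace)
        return namespace["parse"](input_object)
    return eval(code, namespace)


if __name__ == "__main__":
    print(run_udf("a b c d", "input_object[::-1]"))
    print(run_udf("a b c d", 'def parse(input_object): context["key"]="new";return context', {"key": "value"}))
```

Trying `compile(..., "eval")` first keeps one-line expressions short, while `def parse(...)` or `parse = lambda ...` sources fall back to exec.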

Mission: Crawl Python Meta-PEPs

Fewer than 25 lines of code are needed besides the rules (which can be saved externally and loaded automatically).

HostRules will be saved at $HOME/host_rules.json by default, so there is no need to initialize them every time.
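Saved rules are keyed by host, as the JSON in the PEP example shows ("www.python.org" maps to an object with "host" and "crawler_rules"). A minimal sketch of persisting and looking up such a mapping with the standard library; `save_host_rules`, `load_host_rules` and `rules_for_url` are hypothetical helpers, not uniparser's JSONRuleStorage, and the file is assumed to hold that plain JSON mapping.

```python
import json
import tempfile
from pathlib import Path
from urllib.parse import urlparse

# Default location described above; demos and tests can pass another path.
DEFAULT_RULES_PATH = Path.home() / "host_rules.json"


def save_host_rules(rules, path=DEFAULT_RULES_PATH):
    """Hypothetical helper: write a {host: host_rule} mapping to a JSON file."""
    Path(path).write_text(json.dumps(rules, ensure_ascii=False, indent=2), encoding="utf-8")


def load_host_rules(path=DEFAULT_RULES_PATH):
    """Hypothetical helper: read saved rules, or return {} if the file does not exist yet."""
    path = Path(path)
    if not path.exists():
        return {}
    return json.loads(path.read_text(encoding="utf-8"))


def rules_for_url(rules, url):
    """Return the host rule whose key equals the URL's host name, or None."""
    return rules.get(urlparse(url).netloc)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "host_rules.json"
        save_host_rules({"www.python.org": {"host": "www.python.org", "crawler_rules": {}}}, path)
        print(rules_for_url(load_host_rules(path), "https://www.python.org/dev/peps/"))
```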


import asyncio

from uniparser import Crawler, JSONRuleStorage

# These rules will be saved at `$HOME/host_rules.json`
crawler = Crawler(
    storage=JSONRuleStorage.loads(
        r'{"www.python.org": {"host": "www.python.org", "crawler_rules": {"main": {"name":"list","request_args":{"method":"get","url":"https://www.python.org/dev/peps/","headers":{"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"}},"parse_rules":[{"name":"__request__","chain_rules":[["css","#index-by-category #meta-peps-peps-about-peps-or-processes td.num>a","@href"],["re","^/","@https://www.python.org/"],["python","getitem","[:3]"]],"childs":""}],"regex":"^https://www.python.org/dev/peps/$","encoding":""}, "subs": {"name":"detail","request_args":{"method":"get","url":"https://www.python.org/dev/peps/pep-0001/","headers":{"User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36"}},"parse_rules":[{"name":"title","chain_rules":[["css","h1.page-title","$text"],["python","getitem","[0]"]],"childs":""}],"regex":"^https://www.python.org/dev/peps/pep-\\d+$","encoding":""}}}}'
    ))

expected_result = {
    'list': {
        '__request__': [
            'https://www.python.org/dev/peps/pep-0001',
            'https://www.python.org/dev/peps/pep-0004',
            'https://www.python.org/dev/peps/pep-0005'
        ],
        '__result__': [{
            'detail': {
                'title': 'PEP 1 -- PEP Purpose and Guidelines'
            }
        }, {
            'detail': {
                'title': 'PEP 4 -- Deprecation of Standard Modules'
            }
        }, {
            'detail': {
                'title': 'PEP 5 -- Guidelines for Language Evolution'
            }
        }]
    }
}


def test_sync_crawler():
    result = crawler.crawl('https://www.python.org/dev/peps/')
    print('sync result:', result)
    assert result == expected_result


def test_async_crawler():
    async def _test():
        result = await crawler.acrawl('https://www.python.org/dev/peps/')
        print('async result:', result)
        assert result == expected_result

    asyncio.run(_test())


test_sync_crawler()
test_async_crawler()
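In the "list" rule, each entry of `chain_rules` receives the previous entry's output: css appears to extract the `href` values, `re` with an `@`-prefixed value rewrites the leading `/` into an absolute URL, and `python getitem [:3]` keeps the first three. A minimal stdlib-only sketch of such a pipeline, not uniparser's implementation: the css step is a hypothetical stand-in that ignores the selector and collects every `<a href>`, and applying `re` per item while `getitem` slices the whole list is an assumption inferred from the expected `__request__` output.

```python
import re
from html.parser import HTMLParser


class _HrefCollector(HTMLParser):
    """Collects the href attribute of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href is not None:
                self.hrefs.append(href)


def css_stand_in(html, selector, value):
    # Hypothetical stand-in: ignores `selector` and assumes value == "@href".
    collector = _HrefCollector()
    collector.feed(html)
    return collector.hrefs


def re_parser(items, pattern, value):
    # Assumption: an "@"-prefixed value is a re.sub replacement, applied per item.
    replacement = value[1:] if value.startswith("@") else value
    return [re.sub(pattern, replacement, item) for item in items]


def python_parser(obj, method, value):
    # Only "getitem" with an integer index or a slice such as "[:3]" is sketched.
    if method != "getitem":
        raise NotImplementedError(method)
    inner = value.strip()[1:-1]
    if ":" in inner:
        parts = [int(p) if p.strip() else None for p in inner.split(":")]
        return obj[slice(*parts)]
    return obj[int(inner)]


PARSERS = {"css": css_stand_in, "re": re_parser, "python": python_parser}


def run_chain(obj, chain_rules):
    """Feed the output of each [parser, param, value] step into the next one."""
    for parser_name, param, value in chain_rules:
        obj = PARSERS[parser_name](obj, param, value)
    return obj


if __name__ == "__main__":
    html = "".join(
        f'<td class="num"><a href="/dev/peps/pep-000{n}">{n}</a></td>' for n in (1, 4, 5, 6)
    )
    rules = [["css", "td.num>a", "@href"], ["re", "^/", "@https://www.python.org/"], ["python", "getitem", "[:3]"]]
    print(run_chain(html, rules))
```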

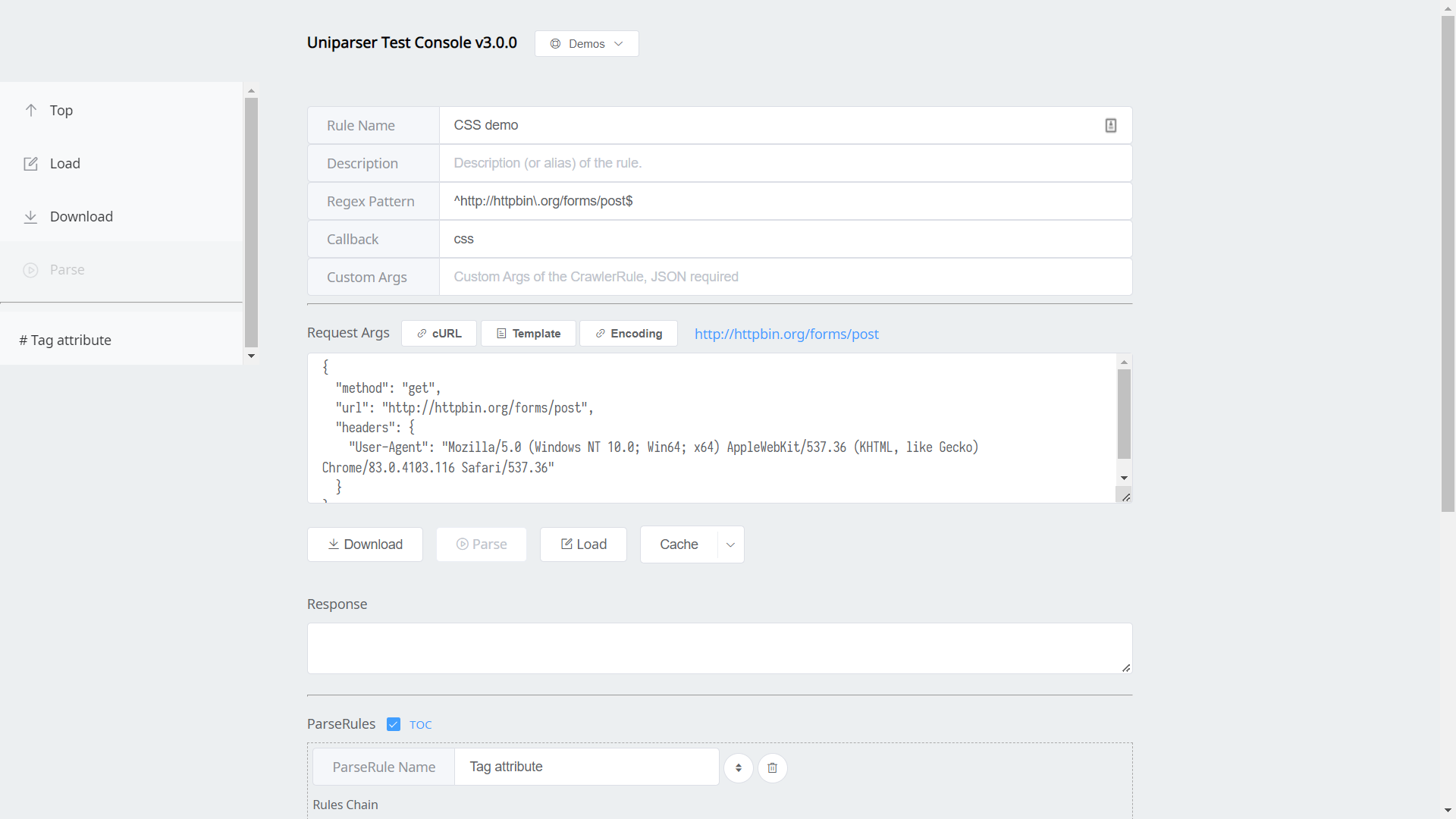

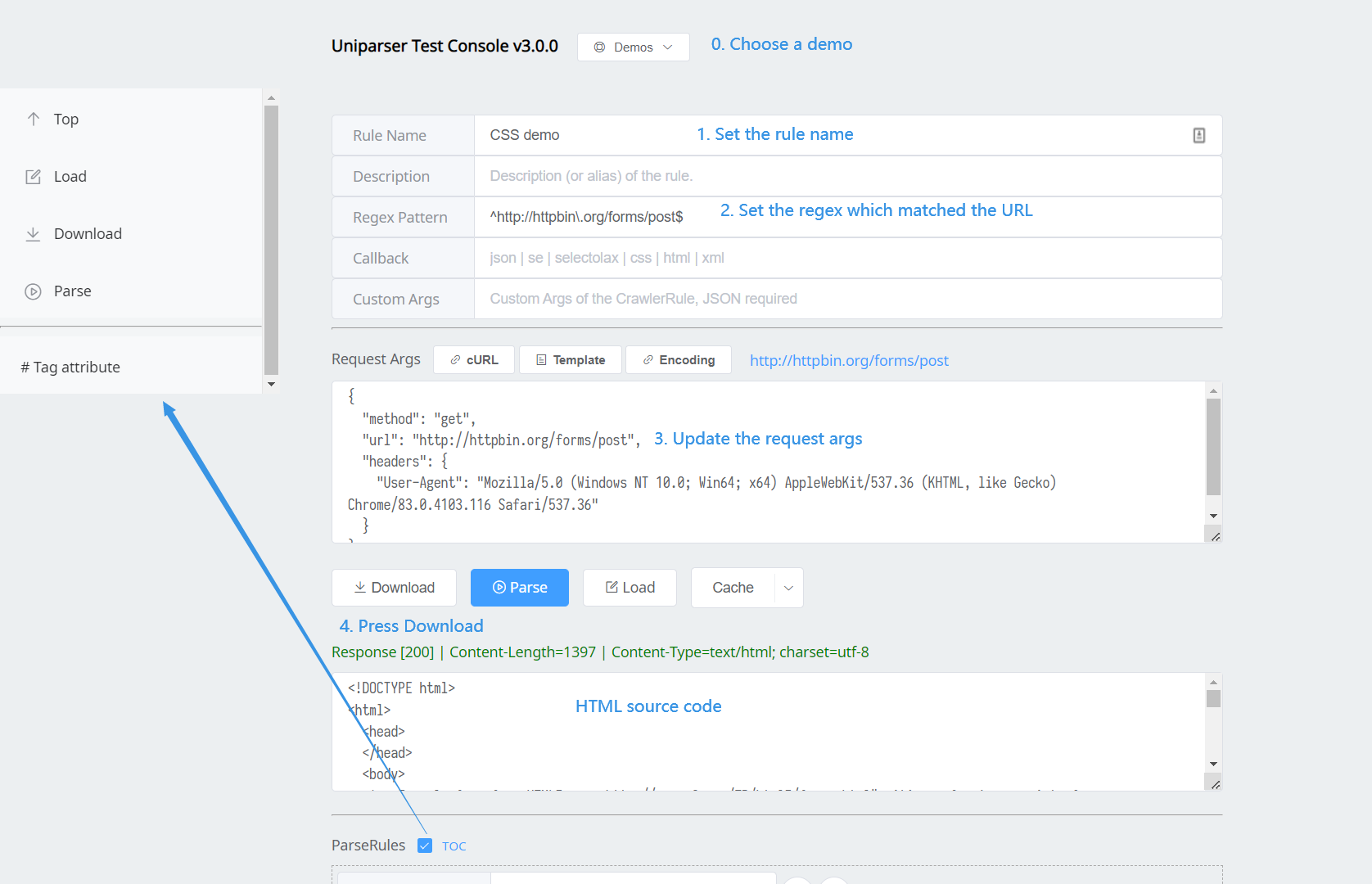

To start the Web UI:

- pip install bottle uniparser
- python -m uniparser 8080
- open http://127.0.0.1:8080/ in a browser

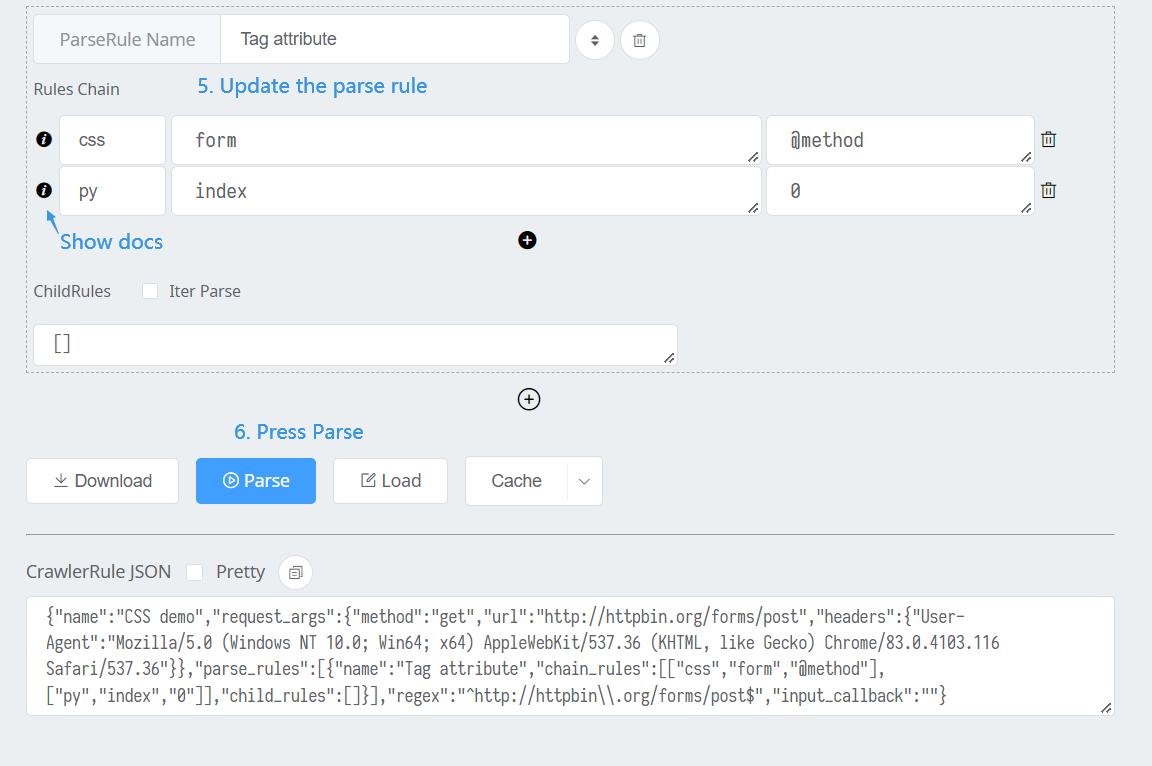

Show result as JSON

{"CSS demo":{"Tag attribute":"post"}}

As we can see, the CrawlerRule's name is the root key, and the ParseRule names are the nested keys.
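The PEP example nests results the same way: the "list" result holds `__request__` URLs, each of which is crawled with whichever CrawlerRule's `regex` matches it, and those results are collected under `__result__`. A minimal sketch of that recursion using stubbed pages instead of HTTP requests; `crawl`, the stub parse functions and the `fetch` callback are hypothetical and only mirror the shape of `expected_result`, not uniparser's Crawler. The regexes are copied from the example rules.

```python
import re


def parse_list(page):
    # Stub: a real rule would run its chain_rules over the downloaded HTML.
    return {"__request__": page["links"]}


def parse_detail(page):
    return {"title": page["title"]}


# (CrawlerRule name, URL regex, parse function)
RULES = [
    ("list", r"^https://www.python.org/dev/peps/$", parse_list),
    ("detail", r"^https://www.python.org/dev/peps/pep-\d+$", parse_detail),
]


def crawl(url, fetch):
    """Return {crawler_rule_name: {parse_rule_name: value, ...}} for url, or None."""
    for name, pattern, parse in RULES:
        if re.match(pattern, url):
            result = parse(fetch(url))
            if "__request__" in result:
                # Follow each requested URL and nest its result under "__result__".
                result["__result__"] = [crawl(u, fetch) for u in result["__request__"]]
            return {name: result}
    return None


if __name__ == "__main__":
    pages = {
        "https://www.python.org/dev/peps/": {"links": ["https://www.python.org/dev/peps/pep-0001"]},
        "https://www.python.org/dev/peps/pep-0001": {"title": "PEP 1 -- PEP Purpose and Guidelines"},
    }
    print(crawl("https://www.python.org/dev/peps/", pages.__getitem__))
```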

import uvicorn
from uniparser.fastapi_ui import app

if __name__ == "__main__":
    uvicorn.run(app, port=8080)
    # http://127.0.0.1:8080

import uvicorn
from fastapi import FastAPI
from uniparser.fastapi_ui import app as sub_app

app = FastAPI()
app.mount('/uniparser', sub_app)

if __name__ == "__main__":
    uvicorn.run(app, port=8080)
    # http://127.0.0.1:8080/uniparser/

Some demos: click the dropdown buttons at the top of the Web UI.

Test code: test_parsers.py

Advanced usage: creating crawler rules for watchdogs

Generate the parser documentation:

from uniparser import Uniparser

for i in Uniparser().parsers:
    print(f'## {i.__class__.__name__} ({i.name})\n\n```\n{i.doc}\n```')

Compare parsers and choose a faster one

css: 2558 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '@href']

css: 2491 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$text']

css: 2385 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$innerHTML']

css: 2495 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$html']

css: 2296 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$outerHTML']

css: 2182 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$string']

css: 2130 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$self']

=================================================================================

css1: 2525 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '@href']

css1: 2402 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$text']

css1: 2321 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$innerHTML']

css1: 2256 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$html']

css1: 2122 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$outerHTML']

css1: 2142 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$string']

css1: 2483 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$self']

=================================================================================

selectolax: 15187 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '@href']

selectolax: 19164 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$text']

selectolax: 19699 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$html']

selectolax: 20659 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$outerHTML']

selectolax: 20369 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$self']

=================================================================================

selectolax1: 17572 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '@href']

selectolax1: 19096 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$text']

selectolax1: 17997 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$html']

selectolax1: 18100 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$outerHTML']

selectolax1: 19137 calls / sec, ['<a class="url" href="/">title</a>', 'a.url', '$self']

=================================================================================

xml: 3171 calls / sec, ['<dc:creator><![CDATA[author]]></dc:creator>', 'creator', '$text']

=================================================================================

re: 220240 calls / sec, ['a a b b c c', 'a|c', '@b']

re: 334206 calls / sec, ['a a b b c c', 'a', '']

re: 199572 calls / sec, ['a a b b c c', 'a (a b)', '$0']

re: 203122 calls / sec, ['a a b b c c', 'a (a b)', '$1']

re: 256544 calls / sec, ['a a b b c c', 'b', '-']

=================================================================================

jsonpath: 28 calls / sec, [{'a': {'b': {'c': 1}}}, '$..c', '']

=================================================================================

objectpath: 42331 calls / sec, [{'a': {'b': {'c': 1}}}, '$..c', '']

=================================================================================

jmespath: 95449 calls / sec, [{'a': {'b': {'c': 1}}}, 'a.b.c', '']

=================================================================================

udf: 58236 calls / sec, ['a b c d', 'input_object[::-1]', '']

udf: 64846 calls / sec, ['a b c d', 'context["key"]', {'key': 'value'}]

udf: 55169 calls / sec, ['a b c d', 'md5(input_object)', '']

udf: 45388 calls / sec, ['["string"]', 'json_loads(input_object)', '']

udf: 50741 calls / sec, ['["string"]', 'json_loads(obj)', '']

udf: 48974 calls / sec, [['string'], 'json_dumps(input_object)', '']

udf: 41670 calls / sec, ['a b c d', 'parse = lambda input_object: input_object', '']

udf: 31930 calls / sec, ['a b c d', 'def parse(input_object): context["key"]="new";return context', {'key': 'new'}]

=================================================================================

python: 383293 calls / sec, [[1, 2, 3], 'getitem', '[-1]']

python: 350290 calls / sec, [[1, 2, 3], 'getitem', '[:2]']

python: 325668 calls / sec, ['abc', 'getitem', '[::-1]']

python: 634737 calls / sec, [{'a': '1'}, 'getitem', 'a']

python: 654257 calls / sec, [{'a': '1'}, 'get', 'a']

python: 642111 calls / sec, ['a b\tc \n \td', 'split', '']

python: 674048 calls / sec, [['a', 'b', 'c', 'd'], 'join', '']

python: 478239 calls / sec, [['aaa', ['b'], ['c', 'd']], 'chain', '']

python: 191430 calls / sec, ['python', 'template', '1 $input_object 2']

python: 556022 calls / sec, [[1], 'index', '0']

python: 474540 calls / sec, ['python', 'index', '-1']

python: 619489 calls / sec, [{'a': '1'}, 'index', 'a']

python: 457317 calls / sec, ['adcb', 'sort', '']

python: 494608 calls / sec, [[1, 3, 2, 4], 'sort', 'desc']

python: 581480 calls / sec, ['aabbcc', 'strip', 'a']

python: 419745 calls / sec, ['aabbcc', 'strip', 'ac']

python: 615518 calls / sec, [' \t a ', 'strip', '']

python: 632536 calls / sec, ['a', 'default', 'b']

python: 655448 calls / sec, ['', 'default', 'b']

python: 654189 calls / sec, [' ', 'default', 'b']

python: 373153 calls / sec, ['a', 'base64_encode', '']

python: 339589 calls / sec, ['YQ==', 'base64_decode', '']

python: 495246 calls / sec, ['a', '0', 'b']

python: 358796 calls / sec, ['', '0', 'b']

python: 356988 calls / sec, [None, '0', 'b']

python: 532092 calls / sec, [{0: 'a'}, '0', 'a']

=================================================================================

loader: 159737 calls / sec, ['{"a": "b"}', 'json', '']

loader: 38540 calls / sec, ['a = "a"', 'toml', '']

loader: 3972 calls / sec, ['animal: pets', 'yaml', '']

loader: 461297 calls / sec, ['a', 'b64encode', '']

loader: 412507 calls / sec, ['YQ==', 'b64decode', '']

=================================================================================

time: 39241 calls / sec, ['2020-02-03 20:29:45', 'encode', '']

time: 83251 calls / sec, ['1580732985.1873155', 'decode', '']

time: 48469 calls / sec, ['2020-02-03T20:29:45', 'encode', '%Y-%m-%dT%H:%M:%S']

time: 74481 calls / sec, ['1580732985.1873155', 'decode', '%b %d %Y %H:%M:%S']
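To produce comparable calls-per-second numbers for your own alternatives, the standard library's `timeit` is enough. This sketch is not the script that generated the figures above; `calls_per_sec` is a hypothetical helper, and the two candidate functions are only an illustration of checking that both give the same result before comparing speed.

```python
import re
import timeit


def calls_per_sec(func, *args):
    """Rough throughput of func(*args), measured with timeit's autorange()."""
    # autorange() raises the loop count until one run takes at least 0.2 s.
    number, seconds = timeit.Timer(lambda: func(*args)).autorange()
    return number / seconds


def with_re(text):
    return re.sub("a|c", "b", text)


def with_str(text):
    return text.replace("a", "b").replace("c", "b")


if __name__ == "__main__":
    text = "a a b b c c"
    assert with_re(text) == with_str(text)  # same output, so only speed differs
    for func in (with_re, with_str):
        print(f"{func.__name__}: {calls_per_sec(func, text):.0f} calls / sec")
```

Absolute numbers depend on the machine and Python version, so compare candidates within one run rather than against the table above.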
