Security News

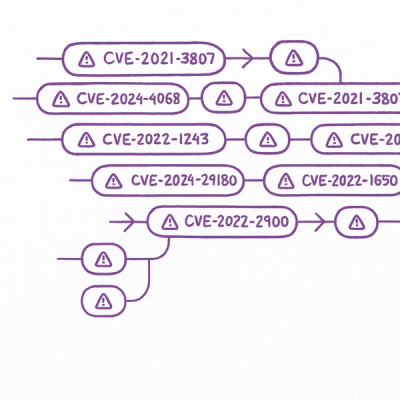

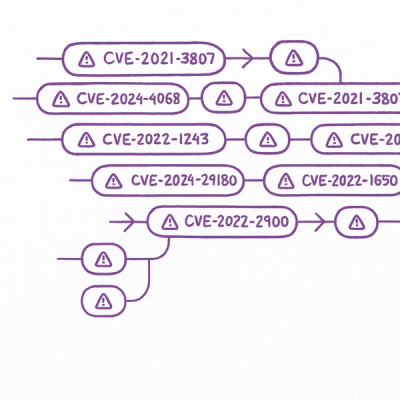

Static vs. Runtime Reachability: Insights from Latio’s On the Record Podcast

The Latio podcast explores how static and runtime reachability help teams prioritize exploitable vulnerabilities and streamline AppSec workflows.

whisper-parallel-cpu


High-performance audio and video transcription using whisper.cpp with automatic model downloading and CPU parallelism

A minimal, robust Python package for whisper.cpp with CPU-optimized threading and integrated model management. It transcribes both audio and video files with high performance and targets distributed cloud deployments and transcription workflows.

Install from PyPI:

pip install whisper-parallel-cpu

Use in Python:

import whisper_parallel_cpu

# Transcribe audio files

text = whisper_parallel_cpu.transcribe("audio.mp3", model="base")

# Transcribe video files

text = whisper_parallel_cpu.transcribe("video.mp4", model="base")

# Or use specific functions

text = whisper_parallel_cpu.transcribe_audio("audio.wav", model="small")

text = whisper_parallel_cpu.transcribe_video("video.mkv", model="medium")

Or use the CLI:

# Transcribe audio

whisper_parallel_cpu transcribe audio.mp3 --model base

# Transcribe video

whisper_parallel_cpu transcribe video.mp4 --model base

pip install and go

Supports audio (.mp3, .wav, .flac, .aac, .ogg, .m4a) and video (.mp4, .mkv, .avi, .mov) formats

Install from PyPI:

pip install whisper-parallel-cpu

# Clone the repository

git clone https://github.com/krisfur/whisper-parallel-cpu.git

cd whisper-parallel-cpu

# Install in editable mode

pip install -e .

# Test the installation

python test_transcribe.py video.mp4

C++ compiler (g++, clang++) - automatically handled by pip

Install ffmpeg for your platform:

macOS:

brew install ffmpeg

Ubuntu/Debian:

sudo apt update && sudo apt install ffmpeg

Windows: Download from ffmpeg.org or use Chocolatey:

choco install ffmpeg

import whisper_parallel_cpu

# Transcribe any audio or video file (auto-detects format)

text = whisper_parallel_cpu.transcribe("audio.mp3", model="base", threads=4)

text = whisper_parallel_cpu.transcribe("video.mp4", model="small")

# Use specific functions for audio or video

text = whisper_parallel_cpu.transcribe_audio("audio.wav", model="base", threads=4)

text = whisper_parallel_cpu.transcribe_video("video.mkv", model="medium", threads=8)

# CPU-only mode (no GPU)

text = whisper_parallel_cpu.transcribe("audio.flac", model="base", use_gpu=False)
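The threads argument above is a fixed number. As a rough sketch, a value could instead be derived from the machine's core count. The helper name `pick_thread_count` and its `reserve` and `fallback` parameters are assumptions made for illustration and are not part of whisper_parallel_cpu:

```python
import os


def pick_thread_count(reserve: int = 1, fallback: int = 4) -> int:
    """Return a thread count that leaves `reserve` cores free.

    Hypothetical helper, not part of whisper_parallel_cpu.
    """
    cores = os.cpu_count()  # may be None on some platforms
    if cores is None:
        return fallback
    return max(1, cores - reserve)


threads = pick_thread_count()
print(f"Using {threads} threads")
# The result could then be passed along, for example:
# text = whisper_parallel_cpu.transcribe("audio.mp3", model="base", threads=threads)
```

Leaving one core free is only a starting point; the best value depends on what else runs on the machine.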

For better performance when transcribing multiple files, use the WhisperModel class to load the model once and reuse it:

from whisper_parallel_cpu import WhisperModel

# Create a model instance (model is loaded on first use)

model = WhisperModel(model="base", use_gpu=False, threads=4)

# Transcribe multiple files using the same loaded model

files = ["audio1.mp3", "audio2.wav", "video1.mp4", "video2.mkv"]

for file_path in files:

    text = model.transcribe(file_path)

    print(f"Transcribed {file_path}: {text[:100]}...")

# Use as context manager

with WhisperModel(model="small", use_gpu=True) as model:

    text1 = model.transcribe("audio1.mp3")

    text2 = model.transcribe("audio2.wav")

    # Model is automatically managed

# Memory management

model.clear_contexts() # Free memory

print(f"Active contexts: {model.get_context_count()}")
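When many files are processed with one reused model, a single bad file should probably not stop the whole run. The sketch below wraps any transcribe callable and collects results and errors separately. `transcribe_batch` and `fake_transcribe` are hypothetical names, and the sketch assumes that failures surface as ordinary Python exceptions, which this README does not state:

```python
from typing import Callable, Dict, Iterable, Tuple


def transcribe_batch(
    transcribe: Callable[[str], str],
    paths: Iterable[str],
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Run `transcribe` on each path, collecting results and errors separately."""
    results: Dict[str, str] = {}
    errors: Dict[str, str] = {}
    for path in paths:
        try:
            results[path] = transcribe(path)
        except Exception as exc:  # keep going so one bad file does not stop the batch
            errors[path] = str(exc)
    return results, errors


# Stand-in transcriber so the sketch runs without the package installed.
def fake_transcribe(path: str) -> str:
    if path.endswith(".txt"):
        raise ValueError("unsupported file")
    return f"transcript of {path}"


results, errors = transcribe_batch(fake_transcribe, ["audio1.mp3", "notes.txt"])
print(results)
print(errors)
```

With the package installed, the `transcribe` method of a WhisperModel instance could be passed in place of `fake_transcribe`.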

Audio Formats:

.mp3, .wav, .flac, .aac, .ogg, .m4a, .wma, .opus, .webm, .3gp, .amr, .au, .ra, .mid, .midi

Video Formats:

.mp4, .avi, .mov, .mkv, .wmv, .flv, .webm, .m4v, .3gp, .ogv, .ts, .mts, .m2ts

The following models are available and will be downloaded automatically:

| Model | Size | Accuracy | Speed | Use Case |
|---|---|---|---|---|
| tiny | 74MB | Good | Fastest | Quick transcriptions |
| base | 141MB | Better | Fast | General purpose |
| small | 444MB | Better | Medium | High accuracy needed |
| medium | 1.4GB | Best | Slow | Maximum accuracy |
| large | 2.9GB | Best | Slowest | Professional use |
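The sizes in the table above can be encoded as a small lookup for choosing a model under a download or disk budget. This is only a sketch: `MODEL_SIZES_MB` and `largest_model_within` are hypothetical names, not part of the package, and the GB figures are rounded to 1400 and 2900 MB:

```python
# Approximate download sizes in MB, copied from the model table
# (1.4GB and 2.9GB written as 1400 and 2900).
MODEL_SIZES_MB = {
    "tiny": 74,
    "base": 141,
    "small": 444,
    "medium": 1400,
    "large": 2900,
}


def largest_model_within(budget_mb: float) -> str:
    """Return the largest listed model whose size fits the budget.

    Hypothetical helper, not part of whisper_parallel_cpu.
    """
    fitting = [name for name, size in MODEL_SIZES_MB.items() if size <= budget_mb]
    if not fitting:
        raise ValueError(f"No model fits within {budget_mb} MB")
    return max(fitting, key=MODEL_SIZES_MB.__getitem__)


print(largest_model_within(500))  # prints "small"
```

The returned name could then be used as the model argument when transcribing.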

# List available models

whisper_parallel_cpu list

# Download a specific model

whisper_parallel_cpu download base

# Transcribe audio files

whisper_parallel_cpu transcribe audio.mp3 --model base --threads 4

whisper_parallel_cpu transcribe audio.wav --model small

# Transcribe video files

whisper_parallel_cpu transcribe video.mp4 --model base --threads 4

whisper_parallel_cpu transcribe video.mkv --model medium

# Transcribe without GPU (CPU-only)

whisper_parallel_cpu transcribe audio.flac --model small --no-gpu

import whisper_parallel_cpu

# List available models

whisper_parallel_cpu.list_models()

# Download a specific model

whisper_parallel_cpu.download_model("medium")

# Force re-download

whisper_parallel_cpu.download_model("base", force=True)

# Test with 5 audio/video copies

python benchmark.py audio.mp3 5

python benchmark.py video.mp4 5

Use the WhisperModel class for multiple transcriptions to avoid reloading the model each time.

When transcribing multiple files, using the WhisperModel class can provide significant performance improvements:

import time

import whisper_parallel_cpu

from whisper_parallel_cpu import WhisperModel

# Files to transcribe (placeholder paths)

files = ["audio1.mp3", "audio2.wav", "video1.mp4"]

# Method 1: Using WhisperModel (model reuse) - FASTER

model = WhisperModel(model="base")

start = time.time()

for file in files:

    text = model.transcribe(file)

model_time = time.time() - start

# Method 2: Using transcribe function (no reuse) - SLOWER

start = time.time()

for file in files:

    text = whisper_parallel_cpu.transcribe(file, model="base")

function_time = time.time() - start

print(f"Speedup with model reuse: {function_time / model_time:.2f}x")

Typical speedups:

transcribe(file_path, model, threads, use_gpu)

Transcribes an audio or video file using Whisper. Automatically detects file type.

Parameters:

file_path (str): Path to the audio or video file

model (str): Model name (e.g. "base", "tiny", etc.) or path to Whisper model binary (.bin file)

threads (int): Number of CPU threads to use (default: 4)

use_gpu (bool): Whether to use GPU acceleration (default: True)

Returns:

str: Transcribed text

transcribe_audio(audio_path, model, threads, use_gpu)

Transcribes an audio file using Whisper.

Parameters:

audio_path (str): Path to the audio file

model (str): Model name (e.g. "base", "tiny", etc.) or path to Whisper model binary (.bin file)

threads (int): Number of CPU threads to use (default: 4)

use_gpu (bool): Whether to use GPU acceleration (default: True)

Returns:

str: Transcribed text

transcribe_video(video_path, model, threads, use_gpu)

Transcribes a video file using Whisper.

Parameters:

video_path (str): Path to the video file

model (str): Model name (e.g. "base", "tiny", etc.) or path to Whisper model binary (.bin file)

threads (int): Number of CPU threads to use (default: 4)

use_gpu (bool): Whether to use GPU acceleration (default: True)

Returns:

str: Transcribed text

Example:

import whisper_parallel_cpu

# Basic usage

text = whisper_parallel_cpu.transcribe_video("sample.mp4")

# Advanced usage

text = whisper_parallel_cpu.transcribe_video(

    "sample.mp4",

    model="medium",

    threads=8,

    use_gpu=False

)
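Since transcribe_audio and transcribe_video expect different kinds of input, a caller may want to check a file's extension before choosing between them. The sketch below uses the extensions from the Audio Formats and Video Formats lists in this README. `classify_media` is a hypothetical helper, and this README does not say how the package's own auto-detection works, so this is only a caller-side pre-check:

```python
from pathlib import Path

# Extensions copied from the Audio Formats and Video Formats lists in this README.
AUDIO_EXTS = {".mp3", ".wav", ".flac", ".aac", ".ogg", ".m4a", ".wma", ".opus",
              ".webm", ".3gp", ".amr", ".au", ".ra", ".mid", ".midi"}
VIDEO_EXTS = {".mp4", ".avi", ".mov", ".mkv", ".wmv", ".flv", ".webm", ".m4v",
              ".3gp", ".ogv", ".ts", ".mts", ".m2ts"}


def classify_media(path: str) -> str:
    """Return "audio", "video", "ambiguous", or "unknown" based on the extension."""
    ext = Path(path).suffix.lower()
    in_audio, in_video = ext in AUDIO_EXTS, ext in VIDEO_EXTS
    if in_audio and in_video:
        return "ambiguous"  # .webm and .3gp appear in both lists
    if in_audio:
        return "audio"
    if in_video:
        return "video"
    return "unknown"


for name in ["talk.MP3", "clip.mkv", "stream.webm", "notes.txt"]:
    print(name, classify_media(name))
```

Files reported as "ambiguous" or "unknown" are probably best passed to the generic transcribe function or skipped, depending on the workflow.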

WhisperModel(model, use_gpu, threads)

A class for efficient model reuse across multiple transcriptions.

Parameters:

model (str): Model name (e.g. "base", "tiny", etc.) or path to Whisper model binary (.bin file)

use_gpu (bool): Whether to use GPU acceleration (default: False)

threads (int): Number of CPU threads to use (default: 4)

Methods:

transcribe(file_path): Transcribe any audio or video file

transcribe_audio(audio_path): Transcribe an audio file

transcribe_video(video_path): Transcribe a video file

clear_contexts(): Clear all cached contexts to free memory

get_context_count(): Get number of cached contexts

Example:

from whisper_parallel_cpu import WhisperModel

# Create model instance

model = WhisperModel(model="base", use_gpu=False, threads=4)

# Transcribe multiple files efficiently

files = ["audio1.mp3", "audio2.wav", "video1.mp4"]

for file_path in files:

    text = model.transcribe(file_path)

    print(f"Transcribed: {text[:50]}...")

# Memory management

model.clear_contexts()

clear_contexts()

Clear all cached whisper contexts to free memory.

Example:

import whisper_parallel_cpu

# Clear all cached contexts

whisper_parallel_cpu.clear_contexts()

get_context_count()

Get the number of currently cached whisper contexts.

Returns:

int: Number of cached contexts

Example:

import whisper_parallel_cpu

# Check how many contexts are cached

count = whisper_parallel_cpu.get_context_count()

print(f"Active contexts: {count}")
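The two functions above can be combined into a simple guard that frees memory once the number of cached contexts passes a limit. This is a sketch under assumptions: `clear_if_over` and its `limit` parameter are hypothetical, and the counting and clearing functions are passed in as callables so the example runs without the package installed:

```python
from typing import Callable


def clear_if_over(limit: int, get_count: Callable[[], int], clear: Callable[[], None]) -> bool:
    """Call `clear` when `get_count()` exceeds `limit`; return whether it cleared."""
    if get_count() > limit:
        clear()
        return True
    return False


# Stand-in cache so the sketch runs without the package installed.
cache = ["ctx-a", "ctx-b", "ctx-c"]
cleared = clear_if_over(2, lambda: len(cache), cache.clear)
print(cleared, len(cache))  # prints "True 0"
```

With the package installed, whisper_parallel_cpu.get_context_count and whisper_parallel_cpu.clear_contexts could be passed as the two callables.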

git checkout -b feature-name

git commit -m 'Add feature'

git push origin feature-name

MIT License - see LICENSE file for details.

