Research

Malicious npm Packages Impersonate Flashbots SDKs, Targeting Ethereum Wallet Credentials

Four npm packages disguised as cryptographic tools steal developer credentials and send them to attacker-controlled Telegram infrastructure.

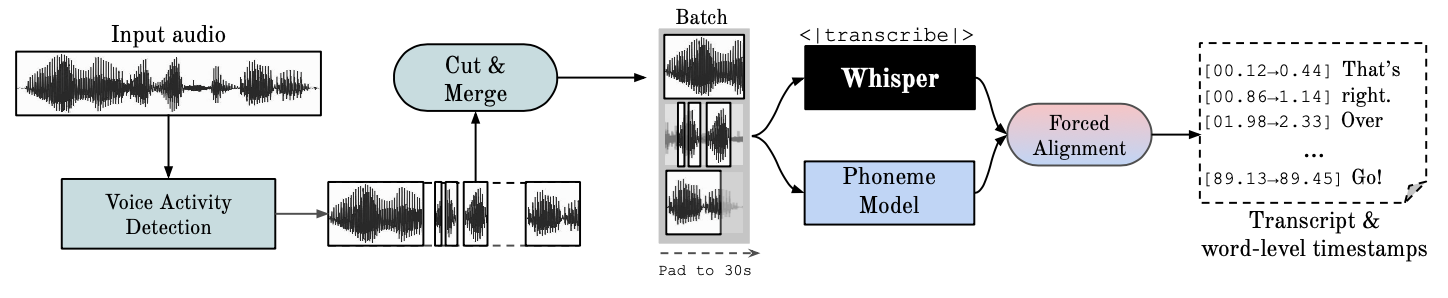

This repository provides fast automatic speech recognition (70x realtime with large-v2) with word-level timestamps and speaker diarization.

Whisper is an ASR model developed by OpenAI, trained on a large dataset of diverse audio. Whilst it does produce highly accurate transcriptions, the corresponding timestamps are at the utterance level, not per word, and can be inaccurate by several seconds. OpenAI's whisper does not natively support batching.

Phoneme-Based ASR: a suite of models fine-tuned to recognise the smallest unit of speech distinguishing one word from another, e.g. the element p in "tap". A popular example model is wav2vec2.0.

Forced Alignment refers to the process by which orthographic transcriptions are aligned to audio recordings to automatically generate phone level segmentation.

Voice Activity Detection (VAD) is the detection of the presence or absence of human speech.

Speaker Diarization is the process of partitioning an audio stream containing human speech into homogeneous segments according to the identity of each speaker.
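To make the VAD definition above concrete, here is a toy energy-threshold detector. It is only a sketch: the function name `energy_vad`, the frame length, and the threshold are assumptions made for illustration, and the VAD models WhisperX relies on are neural and considerably more robust than this.

```python
from typing import List, Tuple


def energy_vad(
    samples: List[float],
    sample_rate: int,
    frame_ms: int = 30,
    threshold: float = 0.01,
) -> List[Tuple[float, float]]:
    """Return (start_sec, end_sec) spans whose mean squared amplitude exceeds `threshold`."""
    frame_len = max(1, sample_rate * frame_ms // 1000)
    segments: List[Tuple[float, float]] = []
    span_start = None  # start time of the span currently being tracked, if any

    for i in range(0, len(samples), frame_len):
        frame = samples[i:i + frame_len]
        energy = sum(x * x for x in frame) / len(frame)
        t = i / sample_rate
        if energy > threshold and span_start is None:
            span_start = t  # speech-like energy begins
        elif energy <= threshold and span_start is not None:
            segments.append((span_start, t))  # energy dropped, close the span
            span_start = None

    if span_start is not None:
        # Audio ended while still inside an active span.
        segments.append((span_start, len(samples) / sample_rate))
    return segments
```

Feeding it half a second of silence, half a second of constant-amplitude signal, and another half second of silence should yield a single span covering the middle portion.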

The easiest way to install WhisperX is through PyPI:

pip install whisperx

Or if using uvx:

uvx whisperx

These installation methods are for developers or users with specific needs. If you're not sure, stick with the simple installation above.

To install directly from the GitHub repository:

uvx git+https://github.com/m-bain/whisperX.git

If you want to modify the code or contribute to the project:

git clone https://github.com/m-bain/whisperX.git

cd whisperX

uv sync --all-extras --dev

Note: The development version may contain experimental features and bugs. Use the stable PyPI release for production environments.

You may also need to install ffmpeg, Rust, etc. Follow OpenAI's setup instructions at https://github.com/openai/whisper#setup.

If you're using WhisperX with GPU support and encounter errors like:

Could not load library libcudnn_ops_infer.so.8

Unable to load any of {libcudnn_cnn.so.9.1.0, libcudnn_cnn.so.9.1, libcudnn_cnn.so.9, libcudnn_cnn.so}

libcudnn_ops_infer.so.8: cannot open shared object file: No such file or directory

This means your system is missing the CUDA Deep Neural Network library (cuDNN). This library is needed for GPU acceleration but isn't always installed by default.

Install cuDNN (example for apt based systems):

sudo apt update

sudo apt install libcudnn8 libcudnn8-dev -y

To enable Speaker Diarization, pass your Hugging Face access token (read scope), which you can generate in your Hugging Face account settings, after the --hf_token argument, and accept the user agreement for the following models: Segmentation and Speaker-Diarization-3.1. (If you choose to use Speaker-Diarization 2.x, follow its requirements instead.)

Note

As of Oct 11, 2023, there is a known issue regarding slow performance with pyannote/Speaker-Diarization-3.0 in whisperX. It is due to dependency conflicts between faster-whisper and pyannote-audio 3.0.0. Please see this issue for more details and potential workarounds.

Run whisper on an example segment (using default params, whisper small). Add --highlight_words True to visualise word timings in the .srt file.

whisperx path/to/audio.wav
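For readers unfamiliar with how word timings end up in an .srt file, the sketch below formats seconds as SRT timestamps and emits one cue per word. It is an illustration only: `srt_timestamp` and `words_to_srt` are hypothetical helpers, the `word`/`start`/`end` keys are an assumed input shape, and this is not the subtitle writer WhisperX itself uses.

```python
from typing import Dict, List, Union

Word = Dict[str, Union[str, float]]


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS,mmm (the SRT timestamp layout)."""
    ms_total = int(round(seconds * 1000))
    hours, rem = divmod(ms_total, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def words_to_srt(words: List[Word]) -> str:
    """Build an SRT document with one numbered cue per word."""
    cues = []
    for index, w in enumerate(words, start=1):
        start = srt_timestamp(float(w["start"]))
        end = srt_timestamp(float(w["end"]))
        cues.append(f"{index}\n{start} --> {end}\n{w['word']}\n")
    # Cues are separated by a blank line.
    return "\n".join(cues)


if __name__ == "__main__":
    sample = [
        {"word": "hello", "start": 0.0, "end": 0.5},
        {"word": "world", "start": 0.6, "end": 1.1},
    ]
    print(words_to_srt(sample))
```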

Result using WhisperX with forced alignment to wav2vec2.0 large:

Compare this to original whisper out of the box, where many transcriptions are out of sync:

For increased timestamp accuracy, at the cost of higher GPU memory use, use bigger models (a bigger alignment model was not found to be that helpful; see the paper), e.g.

whisperx path/to/audio.wav --model large-v2 --align_model WAV2VEC2_ASR_LARGE_LV60K_960H --batch_size 4

To label the transcript with speaker IDs (set the number of speakers if known, e.g. --min_speakers 2 --max_speakers 2):

whisperx path/to/audio.wav --model large-v2 --diarize --highlight_words True

To run on CPU instead of GPU (and for running on Mac OS X):

whisperx path/to/audio.wav --compute_type int8

The phoneme ASR alignment model is language-specific; for tested languages, these models are automatically picked from torchaudio pipelines or Hugging Face.

Just pass in the --language code, and use the whisper --model large.

Currently, default models are provided for {en, fr, de, es, it} via torchaudio pipelines and for many other languages via Hugging Face. Please find the list of currently supported languages under DEFAULT_ALIGN_MODELS_HF in alignment.py. If the detected language is not in this list, you need to find a phoneme-based ASR model on the Hugging Face model hub and test it on your data.

whisperx --model large-v2 --language de path/to/audio.wav

See more examples in other languages here.
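The lookup order described above (torchaudio defaults first, then Hugging Face defaults, otherwise a model you supply yourself) can be sketched roughly as follows. The two tables and every model name in them are placeholders invented for this example, and `pick_align_model` is a hypothetical helper; the real tables live in alignment.py and may be organised differently.

```python
from typing import Optional

# Placeholder tables: the keys mirror the languages named in the text,
# but the model identifiers are made up for illustration.
TORCHAUDIO_DEFAULTS = {
    "en": "placeholder-torchaudio-en",
    "fr": "placeholder-torchaudio-fr",
    "de": "placeholder-torchaudio-de",
}
HF_DEFAULTS = {
    "ja": "placeholder-hf-ja",
    "nl": "placeholder-hf-nl",
}


def pick_align_model(language_code: str, user_model: Optional[str] = None) -> str:
    """Choose an alignment model name for `language_code`."""
    if user_model is not None:
        # An explicitly supplied model always wins.
        return user_model
    if language_code in TORCHAUDIO_DEFAULTS:
        return TORCHAUDIO_DEFAULTS[language_code]
    if language_code in HF_DEFAULTS:
        return HF_DEFAULTS[language_code]
    raise ValueError(
        f"No default align model for language '{language_code}'; "
        "find a phoneme-based ASR model and pass it explicitly."
    )
```

Raising an error for unknown languages, rather than silently falling back to English, keeps a mismatched alignment model from producing plausible-looking but wrong timestamps.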

import whisperx

import gc

device = "cuda"

audio_file = "audio.mp3"

batch_size = 16 # reduce if low on GPU mem

compute_type = "float16" # change to "int8" if low on GPU mem (may reduce accuracy)

# 1. Transcribe with original whisper (batched)

model = whisperx.load_model("large-v2", device, compute_type=compute_type)

# save model to local path (optional)

# model_dir = "/path/"

# model = whisperx.load_model("large-v2", device, compute_type=compute_type, download_root=model_dir)

audio = whisperx.load_audio(audio_file)

result = model.transcribe(audio, batch_size=batch_size)

print(result["segments"]) # before alignment

# delete model if low on GPU resources

# import gc; import torch; del model; gc.collect(); torch.cuda.empty_cache()

# 2. Align whisper output

model_a, metadata = whisperx.load_align_model(language_code=result["language"], device=device)

result = whisperx.align(result["segments"], model_a, metadata, audio, device, return_char_alignments=False)

print(result["segments"]) # after alignment

# delete model if low on GPU resources

# import gc; import torch; del model_a; gc.collect(); torch.cuda.empty_cache()

# 3. Assign speaker labels

# YOUR_HF_TOKEN is a placeholder: replace it with your Hugging Face access token string

diarize_model = whisperx.diarize.DiarizationPipeline(use_auth_token=YOUR_HF_TOKEN, device=device)

# add min/max number of speakers if known

diarize_segments = diarize_model(audio)

# diarize_model(audio, min_speakers=min_speakers, max_speakers=max_speakers)

result = whisperx.assign_word_speakers(diarize_segments, result)

print(diarize_segments)

print(result["segments"]) # segments are now assigned speaker IDs
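As a rough intuition for step 3, one simple way to attach speakers to words is to give each word the speaker whose diarization turn overlaps it the most. The sketch below does exactly that on plain dictionaries. It assumes `start`/`end`/`speaker` keys and a hypothetical helper name, and it is not a copy of what `whisperx.assign_word_speakers` does internally.

```python
from typing import Dict, List, Optional


def assign_speakers_by_overlap(words: List[Dict], turns: List[Dict]) -> List[Dict]:
    """Label each word with the speaker whose turn overlaps it the longest.

    Words that overlap no turn get speaker None.
    """
    labelled = []
    for w in words:
        best_speaker: Optional[str] = None
        best_overlap = 0.0
        for t in turns:
            # Length of the intersection of the two time intervals (negative if disjoint).
            overlap = min(w["end"], t["end"]) - max(w["start"], t["start"])
            if overlap > best_overlap:
                best_speaker, best_overlap = t["speaker"], overlap
        labelled.append({**w, "speaker": best_speaker})
    return labelled


if __name__ == "__main__":
    turns = [
        {"start": 0.0, "end": 1.0, "speaker": "SPEAKER_00"},
        {"start": 1.0, "end": 2.0, "speaker": "SPEAKER_01"},
    ]
    words = [
        {"word": "hi", "start": 0.2, "end": 0.6},
        {"word": "there", "start": 0.9, "end": 1.4},
    ]
    for w in assign_speakers_by_overlap(words, turns):
        print(w["word"], w["speaker"])
```

A word that straddles a speaker change goes to whichever turn covers more of it, which is one reason word-level diarization near turn boundaries can be unreliable.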

If you don't have access to your own GPUs, use the links above to try out WhisperX.

For specific details on the batching and alignment, the effect of VAD, as well as the chosen alignment model, see the preprint paper.

To reduce GPU memory requirements, try any of the following (2. & 3. can affect quality):

1. Reduce the batch size, e.g. --batch_size 4

2. Use a smaller ASR model, e.g. --model base

3. Use a lighter compute type, e.g. --compute_type int8

Transcription differences from openai's whisper:

1. Inference is run with --without_timestamps True, which ensures 1 forward pass per sample in the batch. However, this can cause discrepancies with the default whisper output.

2. --condition_on_prev_text is set to False by default (reduces hallucination).

If you are multilingual, a major way you can contribute to this project is to find phoneme models on huggingface (or train your own) and test them on speech for the target language. If the results look good, send a pull request and some examples showing its success.

Bug finding and pull requests are also highly appreciated to keep this project going, since it's already diverging from the original research scope.

Multilingual init

Automatic align model selection based on language detection

Python usage

Incorporating speaker diarization

Model flush, for low gpu mem resources

Faster-whisper backend

Add max-line etc. see (openai's whisper utils.py)

Sentence-level segments (nltk toolbox)

Improve alignment logic

update examples with diarization and word highlighting

Subtitle .ass output <- bring this back (removed in v3)

Add benchmarking code (TEDLIUM for spd/WER & word segmentation)

Allow silero-vad as alternative VAD option

Improve diarization (word level). Harder than first thought...

Contact maxhbain@gmail.com for queries.

This work, and my PhD, is supported by the VGG (Visual Geometry Group) and the University of Oxford.

Of course, this builds on OpenAI's whisper. It borrows important alignment code from the PyTorch tutorial on forced alignment and uses the wonderful pyannote VAD / Diarization: https://github.com/pyannote/pyannote-audio

Valuable VAD & Diarization Models from:

Great backend from faster-whisper and CTranslate2

Those who have supported this work financially 🙏

Finally, thanks to the OS contributors of this project, keeping it going and identifying bugs.

@article{bain2022whisperx,

title={WhisperX: Time-Accurate Speech Transcription of Long-Form Audio},

author={Bain, Max and Huh, Jaesung and Han, Tengda and Zisserman, Andrew},

journal={INTERSPEECH 2023},

year={2023}

}

