== Medusa: a Ruby crawler framework {rdoc-image:https://badge.fury.io/rb/medusa-crawler.svg}[https://rubygems.org/gems/medusa-crawler] rdoc-image:https://github.com/brutuscat/medusa-crawler/workflows/Ruby/badge.svg?event=push

Medusa is a framework for the Ruby language that crawls web pages and collects useful information about each page it visits. It is versatile, allowing you to write your own specialized tasks quickly and easily.

=== Features

Do you have an idea or a suggestion? {Open an issue and talk about it}[https://github.com/brutuscat/medusa-crawler/issues/new]

=== Examples

Medusa is meant to be used programmatically. You can start a crawl from one or multiple URIs:

  require 'medusa'

  Medusa.crawl('https://www.example.com', depth_limit: 2)
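
To illustrate what a depth limit means for a crawl, here is a minimal sketch that does not use Medusa at all. It walks a hypothetical in-memory link graph breadth-first and stops following links once a page sits at the limit. The names +LINK_GRAPH+ and +crawl_with_depth_limit+ are invented for this example, and it assumes the start URL counts as depth 0; Medusa's own implementation may differ.

```ruby
require 'set'

# Hypothetical in-memory "site": each URL maps to the links found on that page.
LINK_GRAPH = {
  'https://www.example.com/'      => ['https://www.example.com/a', 'https://www.example.com/b'],
  'https://www.example.com/a'     => ['https://www.example.com/a/1'],
  'https://www.example.com/b'     => [],
  'https://www.example.com/a/1'   => ['https://www.example.com/a/1/x'],
  'https://www.example.com/a/1/x' => []
}.freeze

# Breadth-first walk that visits pages up to depth_limit and does not
# follow links found on pages that are already at that depth.
# Assumption: the start URLs are at depth 0.
def crawl_with_depth_limit(start_urls, depth_limit:, graph: LINK_GRAPH)
  visited = Set.new
  queue = Array(start_urls).map { |url| [url, 0] }
  order = []

  until queue.empty?
    url, depth = queue.shift
    next if visited.include?(url)

    visited << url
    order << url
    next if depth >= depth_limit # too deep: record the page, ignore its links

    graph.fetch(url, []).each { |link| queue << [link, depth + 1] }
  end

  order
end

# With depth_limit: 2 the page at depth 3 (".../a/1/x") is never visited.
visited_urls = crawl_with_depth_limit('https://www.example.com/', depth_limit: 2)
puts visited_urls
```

In this sketch a larger limit widens the set of visited pages, and a limit of 0 visits only the start URLs.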

Or you can pass a block, which receives the crawler so that you can configure it or steer what it crawls:

  require 'medusa'

  Medusa.crawl('https://www.example.com', depth_limit: 2) do |crawler|
    crawler.discard_page_bodies = some_flag

    # Persist the state of all pages across crawl runs.
    crawler.clear_on_startup = false
    crawler.storage = Medusa::Storage.Moneta(:Redis, 'redis://redis.host.name:6379/0')

    crawler.skip_links_like(/private/)

    crawler.on_pages_like(/public/) do |page|
      logger.debug "[public page] #{page.url} took #{page.response_time} found #{page.links.count}"
    end

    # Use arbitrary logic, page by page, to further customize the crawl.
    crawler.focus_crawl(/public/) do |page|
      page.links.first
    end
  end
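
The pattern-based hooks in the example above can be pictured with a small stand-alone sketch. It is not Medusa's code: +SketchPage+ and +PatternRouter+ are hypothetical names, and the sketch only assumes that skip patterns filter the links of a page while page patterns decide which registered blocks run for a given URL.

```ruby
# Hypothetical stand-in for a crawled page; not Medusa's page class.
SketchPage = Struct.new(:url, :links)

# Hypothetical helper that mimics pattern-based skipping and callbacks.
class PatternRouter
  def initialize
    @skip_patterns = []
    @handlers = Hash.new { |hash, pattern| hash[pattern] = [] }
  end

  # Links matching any of these patterns are never followed.
  def skip_links_like(*patterns)
    @skip_patterns.concat(patterns.flatten)
    self
  end

  # Register a block to run for every page whose URL matches a pattern.
  def on_pages_like(*patterns, &block)
    patterns.flatten.each { |pattern| @handlers[pattern] << block }
    self
  end

  # Links of the page that survive the skip patterns.
  def links_to_follow(page)
    page.links.reject { |link| @skip_patterns.any? { |pattern| link =~ pattern } }
  end

  # Run every registered block whose pattern matches the page URL.
  def dispatch(page)
    @handlers.each do |pattern, blocks|
      next unless page.url =~ pattern

      blocks.each { |block| block.call(page) }
    end
  end
end

router = PatternRouter.new
router.skip_links_like(/private/)
router.on_pages_like(/public/) { |page| puts "[public page] #{page.url}" }

page = SketchPage.new(
  'https://www.example.com/public/index',
  ['https://www.example.com/public/a', 'https://www.example.com/private/b']
)
router.dispatch(page)               # runs the block registered for /public/
puts router.links_to_follow(page)   # only the link without "private" remains
```

Keeping the filters as plain regular expressions means the same pattern can be reused for both skipping links and selecting pages.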

=== Requirements

moneta:: for the key/value storage adapters
nokogiri:: for parsing the HTML of webpages
robotex:: for support of the robots.txt directives

=== Development

To test and develop this gem, additional requirements are:

=== About

Medusa is a revamped version of the defunct anemone gem.

=== License

Copyright (c) 2009 Vertive, Inc.

Copyright (c) 2020 Mauro Asprea

Released under the {MIT License}[https://github.com/brutuscat/medusa-crawler/blob/master/LICENSE.txt]

Did you know?

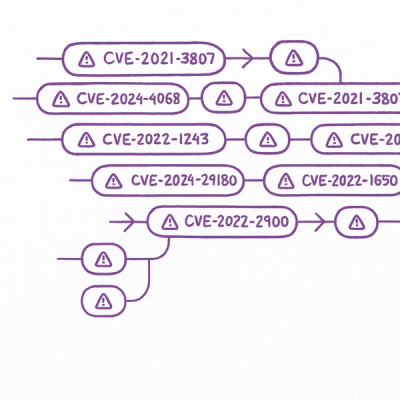

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Astral unveils pyx, a Python-native package registry in beta, designed to speed installs, enhance security, and integrate deeply with uv.

Security News

The Latio podcast explores how static and runtime reachability help teams prioritize exploitable vulnerabilities and streamline AppSec workflows.

Security News

The latest Opengrep releases add Apex scanning, precision rule tuning, and performance gains for open source static code analysis.