
A Ruby gem that integrates with OpenAI's API to detect whether given text contains obscene, inappropriate, or NSFW content. It provides a simple interface for content moderation using AI.

Add this line to your application's Gemfile:

```ruby
gem 'obscene_gpt'
```

And then execute:

```bash
bundle install
```

Or install it yourself as:

```bash
gem install obscene_gpt
```

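You'll need an OpenAI API key to use this gem. You can provide it in one of three ways:

Set the OPENAI_API_KEY environment variable:

```bash
export OPENAI_API_KEY="your-openai-api-key-here"
```

Configure it globally in your application:

```ruby
ObsceneGpt.configure do |config|
  config.api_key = "your-openai-api-key-here"
  config.model = "gpt-4.1-nano"
  config.request_timeout = 5 # Optional, defaults to 10 seconds
end
```

Pass it directly when creating a detector:

```ruby
detector = ObsceneGpt::Detector.new(api_key: "your-openai-api-key-here")
```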

See the examples/usage.rb file for usage examples.

```ruby
require 'obscene_gpt'

# Configure once in your app initialization
ObsceneGpt.configure do |config|
  config.api_key = "your-openai-api-key-here"
  config.model = "gpt-4.1-nano"
  # The "reasoning" and "categories" keys shown below are only returned with the full schema
  config.schema = ObsceneGpt::Prompts::FULL_SCHEMA
end

detector = ObsceneGpt::Detector.new

result = detector.detect("Hello, how are you today?")
puts result
# => {"obscene" => false, "confidence" => 0.95, "reasoning" => "The text is a polite greeting with no inappropriate content.", "categories" => []}

result = detector.detect("Some offensive text with BAAD words")
puts result
# => {"obscene" => true, "confidence" => 0.85, "reasoning" => "The text contains vulgar language with the word 'BAAD', which is likely intended as a vulgar or inappropriate term.", "categories" => ["profanity"]}
```
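To act on a result, an application usually turns the hash into a decision. The sketch below is a hypothetical helper, not part of the gem: the method names, the 0.8 cutoff, and the :allow / :review / :reject outcomes are illustrative assumptions. Because the usage example above prints string keys while the response structure documented later uses symbol keys, the helper reads either form.

```ruby
# Hypothetical helper (not part of the gem): read a key from a detection result.
# The usage example prints string keys ("obscene") while the documented response
# structure shows symbol keys (obscene:), so this accepts either form.
def result_value(result, key)
  result.key?(key.to_s) ? result[key.to_s] : result[key.to_sym]
end

# Turns a detection result into an application decision. The 0.8 cutoff and the
# :allow / :review / :reject outcomes are illustrative choices, not gem behavior.
def moderation_decision(result, min_confidence: 0.8)
  return :allow unless result_value(result, :obscene)

  result_value(result, :confidence).to_f >= min_confidence ? :reject : :review
end

result = { "obscene" => true, "confidence" => 0.85, "categories" => ["profanity"] }
puts moderation_decision(result) # prints reject
```

Routing low-confidence hits to human review rather than rejecting them outright is one way to limit the impact of false positives.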

When ActiveModel is available, you can use the built-in validator to automatically check for obscene content in your models:

```ruby
class Post < ActiveRecord::Base
  validates :content, obscene_content: true
  validates :title, obscene_content: { message: "Title contains inappropriate language" }
  validates :description, obscene_content: { threshold: 0.9 }
  validates :comment, obscene_content: {
    threshold: 0.8,
    message: "Comment violates community guidelines"
  }
end

# The validator automatically caches results to avoid duplicate API calls
post = Post.new(content: "Some potentially inappropriate content")

if post.valid?
  puts "Post is valid"
else
  puts "Validation errors: #{post.errors.full_messages}"
end
```

Important: The validator uses Rails caching to ensure only one API call is made per unique text content. Results are cached for 1 hour to avoid repeated API calls for the same content.
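The idea behind this cache can be illustrated in plain Ruby. This is not the gem's code: the gem relies on Rails caching, while the hypothetical DetectionCache class below keeps entries in an in-memory hash keyed by a SHA-256 digest of the text and expires them after one hour. The clock is injectable so the expiry can be tested without waiting.

```ruby
require "digest"

# Illustration of "one API call per unique text, cached for 1 hour".
# The gem uses Rails caching; this hypothetical DetectionCache class stands in
# for it with an in-memory hash so the example runs on its own.
class DetectionCache
  TTL_SECONDS = 3600 # 1 hour, matching the expiry described above

  def initialize(clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @clock = clock
    @entries = {}
  end

  # Returns the cached result for `text` if it has not expired; otherwise runs
  # the block (for example, the API call), stores its result, and returns it.
  def fetch(text)
    key = Digest::SHA256.hexdigest(text)
    entry = @entries[key]
    return entry[:value] if entry && @clock.call < entry[:expires_at]

    value = yield
    @entries[key] = { value: value, expires_at: @clock.call + TTL_SECONDS }
    value
  end
end

api_calls = 0
cache = DetectionCache.new
2.times do
  cache.fetch("Some potentially inappropriate content") do
    api_calls += 1 # stands in for a real detector.detect call
    { obscene: false, confidence: 0.9 }
  end
end
puts api_calls # prints 1
```

Hashing the text keeps cache keys short and avoids storing user content in the key itself.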

The cost of using this gem depends on the number of API calls made and the number of tokens each one uses. A very short input text uses roughly 170 input tokens, and each additional 200 characters adds roughly another 50 tokens. The simple schema produces about 17 output tokens and the full schema about 50 (depending on the length of the reasoning and the attributes). Using the simple schema with an average of 200 characters per request, the cost with the gpt-4.1-nano model is roughly $1 per 35,000 requests.
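The arithmetic behind that estimate can be reproduced with a short sketch. The token counts come from the paragraph above; the per-token prices are an assumption (gpt-4.1-nano at $0.10 per 1M input tokens and $0.40 per 1M output tokens), so check OpenAI's current pricing before relying on the numbers. The linear growth of input tokens with text length is also an assumption.

```ruby
# Back-of-the-envelope cost estimate based on the token counts quoted above.
# ASSUMPTION: gpt-4.1-nano pricing of $0.10 per 1M input tokens and $0.40 per
# 1M output tokens. Check OpenAI's pricing page before relying on these values.
INPUT_PRICE_PER_TOKEN = 0.10 / 1_000_000
OUTPUT_PRICE_PER_TOKEN = 0.40 / 1_000_000

BASE_INPUT_TOKENS = 170    # a very short input text
TOKENS_PER_200_CHARS = 50  # each additional 200 characters
SIMPLE_OUTPUT_TOKENS = 17  # SIMPLE_SCHEMA
FULL_OUTPUT_TOKENS = 50    # FULL_SCHEMA (approximate, varies with the reasoning)

# Estimated cost in dollars of one request for a text of `chars` characters.
# Input tokens are assumed to grow linearly with the text length.
def estimated_cost(chars, output_tokens: SIMPLE_OUTPUT_TOKENS)
  input_tokens = BASE_INPUT_TOKENS + (chars / 200.0) * TOKENS_PER_200_CHARS
  (input_tokens * INPUT_PRICE_PER_TOKEN) + (output_tokens * OUTPUT_PRICE_PER_TOKEN)
end

# How many requests one dollar buys at the given average text length.
def requests_per_dollar(chars, output_tokens: SIMPLE_OUTPUT_TOKENS)
  (1.0 / estimated_cost(chars, output_tokens: output_tokens)).floor
end

puts requests_per_dollar(200)                                    # prints 34722
puts requests_per_dollar(200, output_tokens: FULL_OUTPUT_TOKENS) # prints 23809
```

Under the assumed prices, the simple-schema figure agrees with the README's estimate of roughly $1 per 35,000 requests, and the full schema costs noticeably more per request because output tokens are priced higher than input tokens.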

The OpenAI API has rate limits that depend on the model you are using. With a normal paid subscription, the gpt-4.1-nano model is limited to 500 requests per minute.
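If your application might exceed that limit, one option is to throttle calls on the client side. The sketch below is a hypothetical sliding-window limiter, not part of ObsceneGpt. It only counts calls made through the same object, so it cannot coordinate several processes or servers.

```ruby
# Minimal client-side sliding-window rate limiter, sketched to help stay under a
# requests-per-minute quota such as the 500 RPM mentioned above.
# Hypothetical helper: not part of ObsceneGpt. It only tracks calls made through
# this object, so it cannot coordinate several processes or servers.
class SlidingWindowLimiter
  def initialize(max_requests:, window_seconds: 60,
                 clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @max_requests = max_requests
    @window_seconds = window_seconds
    @clock = clock
    @timestamps = [] # times of the requests inside the current window, oldest first
  end

  # Records a request and returns true if it fits in the window, else returns false.
  def allow?
    now = @clock.call
    # Forget requests that are older than the window.
    @timestamps.shift while @timestamps.any? && @timestamps.first <= now - @window_seconds
    return false if @timestamps.size >= @max_requests

    @timestamps << now
    true
  end
end

limiter = SlidingWindowLimiter.new(max_requests: 500)
# Only call the detector when limiter.allow? returns true; otherwise wait or queue the text.
```

A sliding window is stricter than a fixed per-minute counter because it never allows a burst that straddles two minute boundaries.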

Calling an API adds latency to your application. The latency depends on the model you are using and the length of the text you are analyzing. We do not recommend using this gem in latency-sensitive applications.

ObsceneGpt.configure

Configure the gem globally.

```ruby
ObsceneGpt.configure do |config|
  config.api_key = "your-api-key"
  config.model = "gpt-4.1-nano"
  config.schema = ObsceneGpt::Prompts::SIMPLE_SCHEMA
  config.prompt = ObsceneGpt::Prompts::SYSTEM_PROMPT
end
```

ObsceneGpt.configuration

Get the current configuration object.

ObsceneGpt::Detector.new

Creates a new detector instance.

ObsceneGpt::Detector#detect(text)

Detects whether the given text contains obscene content.

Parameters:

text (String): Text to analyze.

Returns: Hash with detection results. See Response Format for more details.

Raises:

ObsceneGpt::Error: If there's an OpenAI API error.

ObsceneGpt::Detector#detect_many(texts)

Detects whether the given texts contain obscene content.

Parameters:

texts (Array): Texts to analyze.

Returns: Array of hashes with detection results. See Response Format for more details.

Raises:

ObsceneGpt::Error: If there's an OpenAI API error.

Response Format

The detection methods return a hash (or array of hashes) with the following structure:

```ruby
{
  obscene: true, # Boolean: whether content is inappropriate
  confidence: 0.85, # Float: confidence score (0.0-1.0)
  reasoning: "Contains explicit language and profanity", # String: explanation (FULL_SCHEMA only)
  categories: ["profanity", "sexual"] # Array of detected categories: "sexual", "profanity", "hate", "violent", "other" (FULL_SCHEMA only)
}
```
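When consuming results from the detector, a light sanity check against this structure can catch unexpected values early, such as a category outside the documented list. The helper below is a hypothetical sketch, not part of the gem. It treats reasoning and categories as optional because SIMPLE_SCHEMA omits them, and it accepts string or symbol keys.

```ruby
# Category names listed in the categories comment of the structure above.
ALLOWED_CATEGORIES = %w[sexual profanity hate violent other].freeze

# Hypothetical sanity check (not part of the gem) for one detection result.
# reasoning and categories are optional because SIMPLE_SCHEMA omits them, and
# both string and symbol keys are accepted. Returns a list of problems; an
# empty list means the result matches the documented structure.
def result_problems(result)
  value = ->(key) { result.key?(key.to_s) ? result[key.to_s] : result[key] }
  problems = []

  problems << "obscene must be true or false" unless [true, false].include?(value.call(:obscene))

  confidence = value.call(:confidence)
  unless confidence.is_a?(Numeric) && confidence.between?(0.0, 1.0)
    problems << "confidence must be a number from 0.0 to 1.0"
  end

  unknown = Array(value.call(:categories)) - ALLOWED_CATEGORIES
  problems << "unknown categories: #{unknown.join(', ')}" unless unknown.empty?

  problems
end

p result_problems({ obscene: true, confidence: 0.85, categories: ["profanity"] }) # prints []
```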

The default configuration is:

```ruby
config.api_key = ENV["OPENAI_API_KEY"]
config.model = "gpt-4.1-nano"
config.schema = ObsceneGpt::Prompts::SIMPLE_SCHEMA
config.prompt = ObsceneGpt::Prompts::SYSTEM_PROMPT
config.test_mode = false
config.test_detector_class = ObsceneGpt::TestDetector
```

To avoid making API calls during testing, you can enable test mode:

```ruby
ObsceneGpt.configure do |config|
  config.test_mode = true
end
```

When test mode is enabled, the detector returns mock responses based on simple pattern matching instead of making actual API calls, so your test suite runs without network access or API costs.

Note: Test mode uses basic pattern matching and is not as accurate as the actual AI model. It's intended for testing purposes only.

You can also configure a custom test detector class for more sophisticated test behavior:

```ruby
class MyCustomTestDetector
  attr_reader :schema

  def initialize(schema: nil)
    @schema = schema || ObsceneGpt::Prompts::SIMPLE_SCHEMA
  end

  def detect_many(texts)
    texts.map do |text|
      {
        obscene: text.include?("bad_word"),
        confidence: 0.9
      }
    end
  end

  def detect(text)
    detect_many([text])[0]
  end
end

ObsceneGpt.configure do |config|
  config.test_mode = true
  config.test_detector_class = MyCustomTestDetector
end
```

Custom test detectors must implement:

- #initialize(schema: nil): accepts an optional schema parameter
- #detect_many(texts): returns an array of result hashes

See examples/custom_test_detector.rb for more examples.
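A quick check of those two requirements can catch a mistake before test mode silently misbehaves. The sketch below is a hypothetical helper, not part of the gem; it only inspects method signatures with Ruby's reflection API and does not call the detector or check the shape of the returned hashes.

```ruby
# Hypothetical helper (not part of the gem): checks a class against the two
# requirements listed above before you assign it to config.test_detector_class.
# It only inspects method signatures; it does not call the detector.
def test_detector_contract_satisfied?(klass)
  init_params = klass.instance_method(:initialize).parameters
  # `schema:` must be an optional keyword (or absorbed by **options).
  accepts_schema = init_params.include?([:key, :schema]) ||
                   init_params.any? { |type, _name| type == :keyrest }
  # detect_many must be a public or protected instance method taking one argument.
  has_detect_many = klass.method_defined?(:detect_many) &&
                    klass.instance_method(:detect_many).arity == 1
  accepts_schema && has_detect_many
end
```

For example, MyCustomTestDetector above passes this check, while a class that declares initialize(schema:) with a required keyword does not, since the contract calls for an optional schema parameter.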

We recommend using the gpt-4.1-nano model for cost efficiency.

Given the simplicity of the task, it's typically not necessary to use a more expensive model.

See OpenAI's documentation for more information.

The system prompt can be found in lib/obscene_gpt/prompts.rb.

This is a basic prompt that can be used to detect obscene content.

If you need a custom prompt, set the prompt option in the configuration.

This library uses a JSON schema to enforce the structure of the response from the OpenAI API. There are two schemas available:

- ObsceneGpt::Prompts::SIMPLE_SCHEMA: a simple schema that only includes the obscene and confidence fields.
- ObsceneGpt::Prompts::FULL_SCHEMA: a full schema that includes the obscene, confidence, reasoning, and categories fields.

If you need a custom schema, set the schema option in the configuration.

The ObsceneContentValidator is available when ActiveModel is loaded.

Note that active_model must be required before obscene_gpt.

```ruby
class Post < ActiveRecord::Base
  validates :content, :title, :description, obscene_content: true
end
```

Note: Each instance of this validator will make a request to the OpenAI API. Therefore, it is recommended to pass all the attributes you want to check to the validator at once as shown above.

Options:

- threshold (Float): Custom confidence threshold (0.0-1.0) for determining when content is considered inappropriate. Default: ObsceneGpt.configuration.profanity_threshold.
- message (String): Custom error message to display when validation fails. Default: the AI reasoning if available, otherwise "contains inappropriate content".
- ignore_errors (Boolean): If true, the validator will not raise an error when the OpenAI API returns an error. Default: false.

You can also configure different options for different attributes in a single validation call:

```ruby
class Post < ActiveRecord::Base
  validates :title, :content, obscene_content: {
    title: { threshold: 0.8, message: "Title is too inappropriate" },
    content: { threshold: 0.7, message: "Content needs moderation" }
  }
end
```

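The validator uses the following precedence for options:

Threshold:

1. Per-attribute threshold (e.g., title: { threshold: 0.8 })
2. Global threshold (e.g., threshold: 0.8)
3. Default threshold (ObsceneGpt.configuration.profanity_threshold)

Message:

1. Per-attribute message (e.g., title: { message: "..." })
2. Global message (e.g., message: "...")
3. AI reasoning (only available with ObsceneGpt::Prompts::FULL_SCHEMA)
4. Default message ("contains inappropriate content")

Basic validation: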

```ruby
class Post < ActiveRecord::Base
  validates :content, obscene_content: true
end
```

Basic validation with ignore_errors:

If there is an error from the OpenAI API, the validator will just skip the validation and not raise an error.

```ruby
class Post < ActiveRecord::Base
  validates :content, obscene_content: { ignore_errors: true }
end
```
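Code that calls a detector directly, outside a model validation, may want the same "skip on API error" policy. The sketch below is a hypothetical helper, not the validator's implementation; DetectionError stands in for ObsceneGpt::Error so the example runs without the gem or an API key.

```ruby
# Sketch of the ignore_errors behavior described above, for code that calls a
# detector directly. DetectionError is a hypothetical stand-in for
# ObsceneGpt::Error so the example runs without the gem or an API key.
class DetectionError < StandardError; end

# Returns the detection result, or nil when the API call failed and
# ignore_errors is true. With ignore_errors false, the error is re-raised.
def detect_with_policy(detector, text, ignore_errors: false)
  detector.detect(text)
rescue DetectionError
  raise unless ignore_errors

  nil # skipped: the caller treats the text as unchecked rather than invalid
end
```

Returning nil lets the caller distinguish "not checked" from a clean result, which matters if skipped texts should be re-checked later. Whether the gem's validator records skipped checks in any way is not stated in this README.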

With custom message:

```ruby
class Post < ActiveRecord::Base
  validates :title, obscene_content: { message: "Title contains inappropriate content" }
end
```

With custom threshold:

```ruby
class Post < ActiveRecord::Base
  validates :description, obscene_content: { threshold: 0.9 }
end
```

With both custom threshold and message:

```ruby
class Post < ActiveRecord::Base
  validates :comment, obscene_content: {
    threshold: 0.8,
    message: "Comment violates community guidelines"
  }
end
```

Per-attribute configuration:

```ruby
class Post < ActiveRecord::Base
  validates :title, :content, obscene_content: {
    title: { threshold: 0.8, message: "Title is too inappropriate" },
    content: { threshold: 0.7, message: "Content needs moderation" }
  }
end
```

Mixed global and per-attribute options:

```ruby
class Post < ActiveRecord::Base
  validates :title, :content, obscene_content: {
    threshold: 0.8, # Global threshold
    message: "Contains inappropriate content", # Global message
    title: { threshold: 0.9 } # Override threshold for title only
  }
end
```
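The option precedence listed earlier can be sketched as plain Ruby, which also makes the mixed example easy to reason about. This is a hypothetical model, not the gem's implementation: `options` is assumed to be the hash passed to obscene_content:, `result` a detection result with symbol keys, and `default_threshold` a stand-in for ObsceneGpt.configuration.profanity_threshold. The comparison used to decide a violation is also an assumption.

```ruby
# Sketch of the option precedence listed earlier. ASSUMPTIONS: `options` is the
# hash passed to `obscene_content:`, `result` is a detection result with symbol
# keys, and `default_threshold` stands in for
# ObsceneGpt.configuration.profanity_threshold. All names are hypothetical;
# this is not the gem's implementation.
DEFAULT_MESSAGE = "contains inappropriate content"

# Threshold: per-attribute, then global option, then configuration default.
def resolve_threshold(options, attribute, default_threshold)
  options.fetch(attribute, {})[:threshold] || options[:threshold] || default_threshold
end

# Message: per-attribute, then global option, then AI reasoning, then default.
def resolve_message(options, attribute, result)
  options.fetch(attribute, {})[:message] || options[:message] ||
    result[:reasoning] || DEFAULT_MESSAGE
end

# ASSUMPTION: an attribute fails when the text is flagged and the confidence
# reaches the threshold; the README does not spell out the exact comparison.
def violation_message(options, attribute, result, default_threshold:)
  threshold = resolve_threshold(options, attribute, default_threshold)
  return nil unless result[:obscene] && result[:confidence] >= threshold

  resolve_message(options, attribute, result)
end

# The "Mixed global and per-attribute options" example above:
options = { threshold: 0.8, message: "Contains inappropriate content", title: { threshold: 0.9 } }
result = { obscene: true, confidence: 0.85 }
p violation_message(options, :title, result, default_threshold: 0.5)   # prints nil (0.85 < 0.9)
p violation_message(options, :content, result, default_threshold: 0.5) # prints "Contains inappropriate content"
```

With the same detection result, title passes because its per-attribute threshold of 0.9 overrides the global 0.8, while content fails and falls back to the global message.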

After checking out the repo, run bin/setup to install dependencies. Then, run rake spec to run the tests. You can also run bin/console for an interactive prompt that will allow you to experiment.

Bug reports and pull requests are welcome on GitHub at https://github.com/danhper/obscene_gpt.

The gem is available as open source under the terms of the MIT License.

FAQs

Unknown package

We found that obscene_gpt demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

A new site reviews software projects to reveal if they’re truly FOSS, making complex licensing and distribution models easy to understand.

Security News

Astral unveils pyx, a Python-native package registry in beta, designed to speed installs, enhance security, and integrate deeply with uv.

Security News

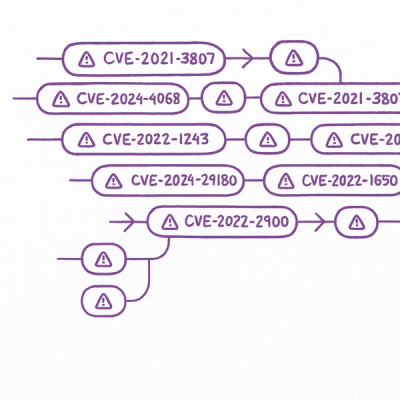

The Latio podcast explores how static and runtime reachability help teams prioritize exploitable vulnerabilities and streamline AppSec workflows.