Product

Introducing Socket Firewall Enterprise: Flexible, Configurable Protection for Modern Package Ecosystems

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Wenxin Jiang

October 20, 2025

We’re excited to announce Socket’s experimental support for Hugging Face, marking our first step toward securing the AI model ecosystem. This expansion brings Socket’s proven supply chain protection to a new frontier: scanning AI models themselves for hidden malware, backdoors, and malicious payloads that can silently compromise systems during inference or deserialization.

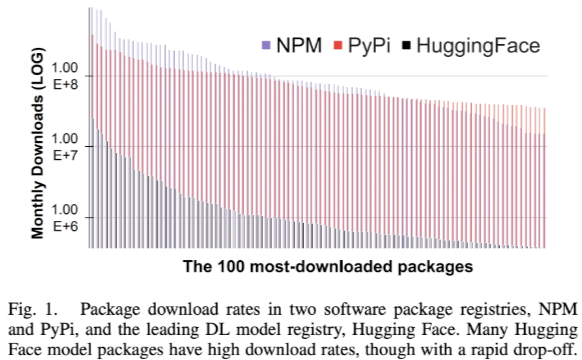

A 2023 study found that Hugging Face usage rivals traditional software registries such as npm and PyPI. As of 2025, the Hugging Face Hub is a massive open ecosystem with over 2 million models, 500,000 datasets, and 1 million demo apps (Spaces), all publicly available and increasingly integrated into production environments.

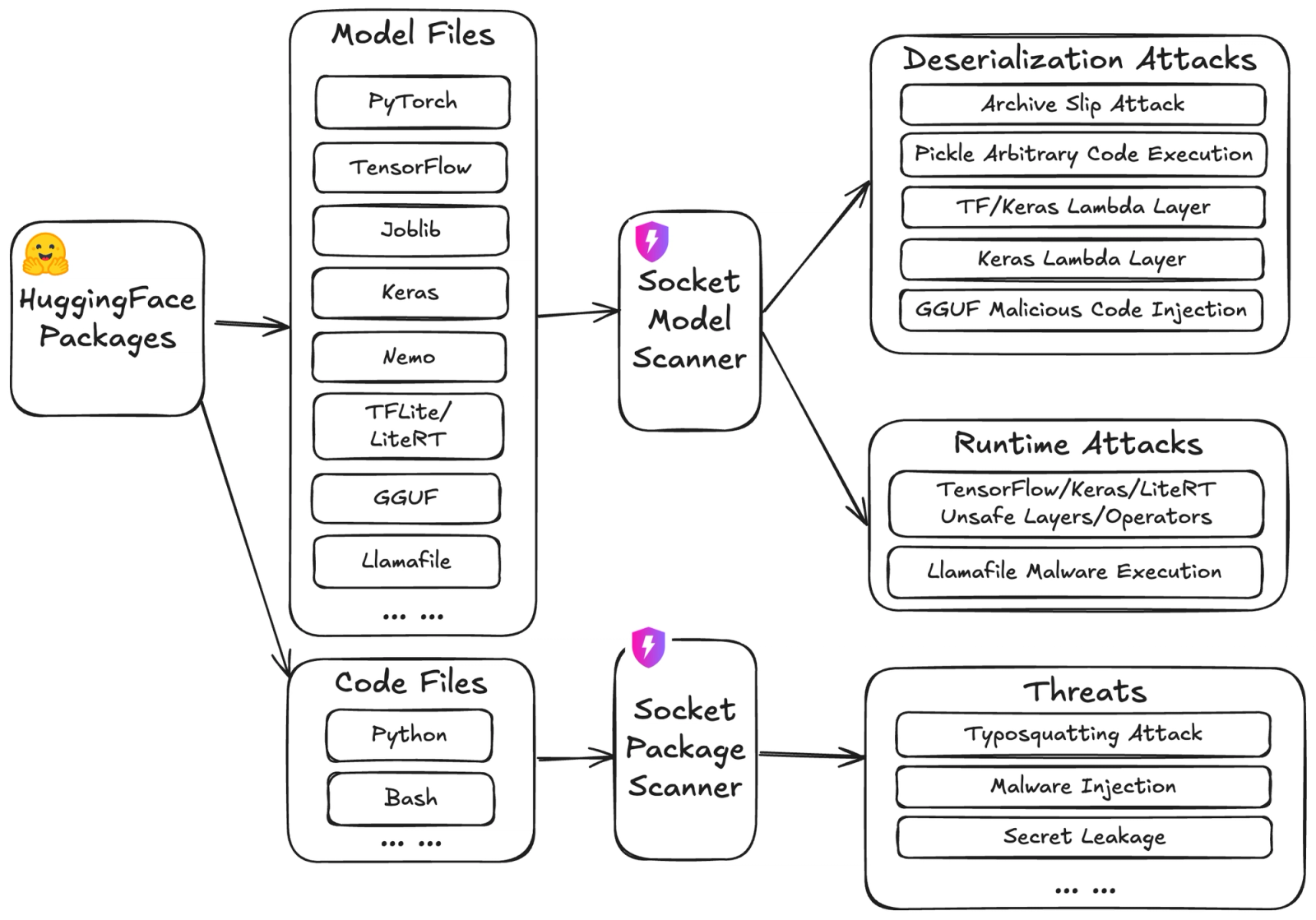

Traditional software security focuses on source code and dependencies. In AI, however, models are themselves software: they can execute code during deserialization or inference. Socket's AI scanners now detect deserialization and runtime attacks across common model formats, including PyTorch, TensorFlow, Keras, TFLite, GGUF, and Llamafile. Real-world incidents have already shown that model artifacts can hide malicious payloads that exfiltrate data or execute arbitrary code on load, much like traditional software supply chain compromises.

Socket’s new experimental integration for Hugging Face brings our proven software supply chain protections to the AI supply chain.
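Pickle-based formats, such as classic PyTorch checkpoints, show most plainly how loading a model can run code. A pickle's opcode stream can import any callable and then invoke it.

The shell function below is a rough illustration only and is not how Socket's scanners work. It disassembles a raw pickle with Python's standard `pickletools` module, which reads opcodes without executing them. It then prints any opcodes that import or call objects. The function name `flag_pickle_imports` is hypothetical, and the sketch assumes `python3` is on PATH.

```bash
#!/usr/bin/env bash
# Rough heuristic for illustration only; NOT Socket's Model Scanner.
# Flags opcodes in a raw pickle that import callables (GLOBAL, STACK_GLOBAL,
# INST) or call them (REDUCE, OBJ, NEWOBJ, NEWOBJ_EX). Payloads such as
# os.system reach the loader through exactly these opcodes.
# Assumes python3 is on PATH (pickletools is in its standard library).
flag_pickle_imports() {
  local file="$1" listing
  # pickletools only disassembles the byte stream; it never unpickles it.
  listing=$(python3 -m pickletools "$file") || return 2
  # Print the matching opcode lines. grep's exit status is returned:
  # 0 = import/call opcodes found, 1 = none found.
  printf '%s\n' "$listing" |
    grep -wE 'GLOBAL|STACK_GLOBAL|INST|REDUCE|OBJ|NEWOBJ|NEWOBJ_EX'
}
```

Legitimate checkpoints use these opcodes too. PyTorch, for example, rebuilds tensors through imported helper functions. A match therefore means "inspect what is being imported", not "malware". For zip-based PyTorch checkpoints, extract the embedded `data.pkl` before running the check.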

The architecture below illustrates how Socket’s scanners analyze both model files and code files to detect threats ranging from deserialization exploits to malicious runtime layers.

Socket's new Model Scanner analyzes model files from Hugging Face for multiple classes of attacks, from deserialization exploits to malicious runtime layers.

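Meanwhile, the Package Scanner continues to analyze the code files that ship alongside models, such as Python layer definitions, for risks like malware and the use of eval.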
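A similarly crude check can be applied to the code files that ship next to a model. The hypothetical `find_dynamic_exec` function below is a sketch and not Socket's Package Scanner. It greps Python files for bare `exec(` and `eval(` calls, the pattern behind the `layer.py` backdoor described in the API response later in this post.

The function deliberately skips attribute calls such as PyTorch's `model.eval()` and helpers like `ast.literal_eval()`, which would otherwise flood the output.

```bash
#!/usr/bin/env bash
# Crude heuristic for illustration only; NOT Socket's Package Scanner.
# Lists bare exec(...) / eval(...) calls in the .py files under a model
# directory. A match is a prompt for review, not proof of malice.
find_dynamic_exec() {
  local dir="$1"
  # -r recurse, -n show line numbers, -E extended regex, --include: .py only.
  # The leading class rejects identifier characters and '.', so method
  # calls like model.eval() and names like literal_eval( are not matched.
  grep -rnE --include='*.py' \
    '(^|[^A-Za-z0-9_.])(exec|eval)[[:space:]]*\(' "$dir"
}
```

Usage: `find_dynamic_exec ./downloaded-model` prints `path:line:code` for each hit and exits with status 1 when nothing matches.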

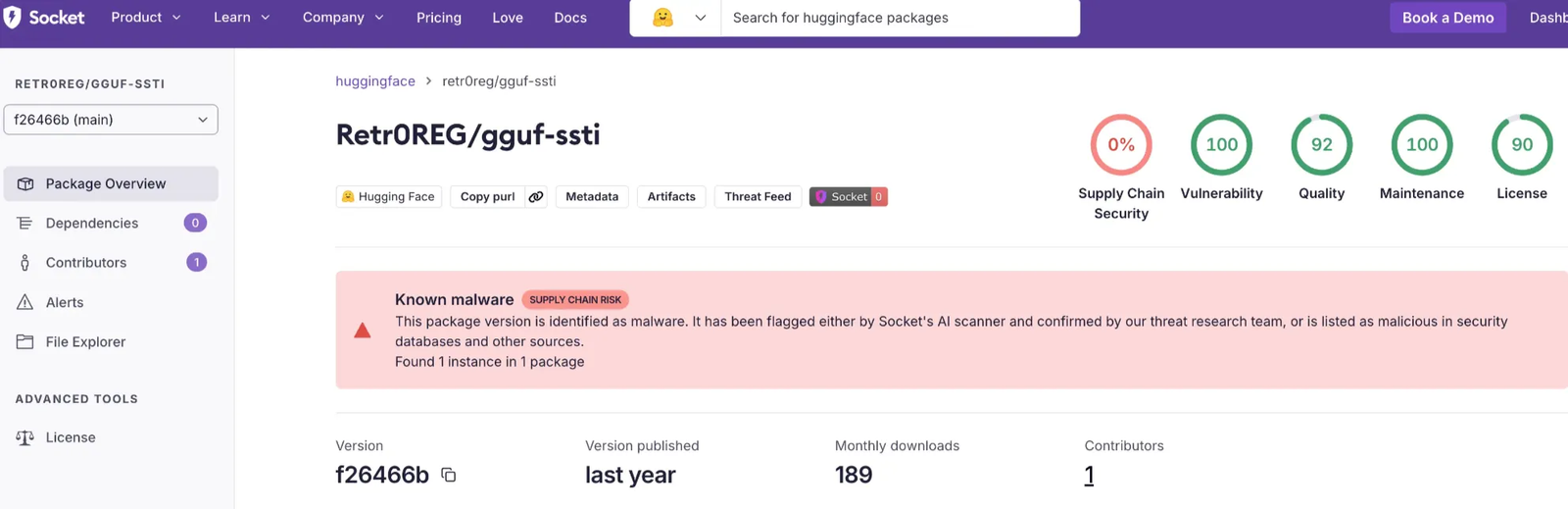

We provide package pages for Hugging Face models that include the alerts found by Socket's AI scanners, along with the package scores.

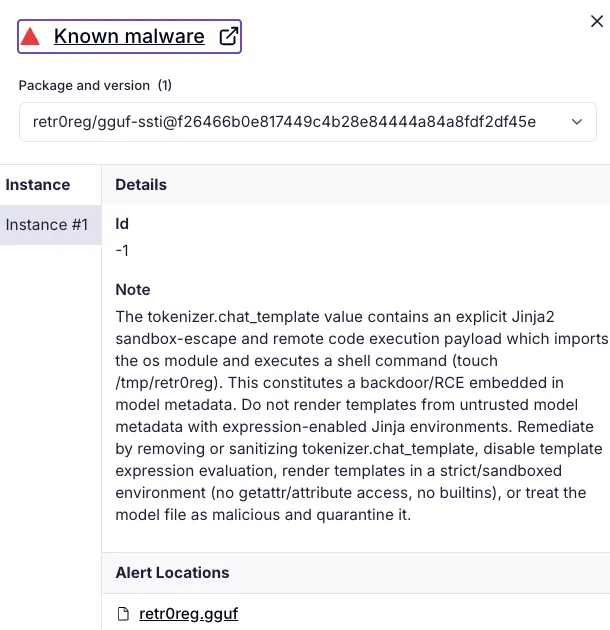

Socket alerts also explain why a model's files were flagged as malware.

Socket also supports License Overlays for the Hugging Face ecosystem, enabling teams to automatically reconcile nonstandard or custom licenses across AI models, datasets, and Spaces. This helps maintain consistent license compliance even when model authors use unconventional license identifiers.

You can learn more about how License Overlays work in our announcement post: Introducing License Overlays.

In addition to the package pages, we provide a PURL API endpoint that returns alerts and scores for a specific model revision:

```bash
curl --request POST \
  --url "https://api.socket.dev/v0/purl?alerts=true" \
  --header 'accept: application/x-ndjson' \
  --header "authorization: Bearer $SOCKET_API_KEY" \
  --header 'content-type: application/json' \
  --data '
{
  "components": [
    {
      "purl": "pkg:huggingface/fra0599/tensorflow-injection@0c1f762b88845c66b7f6f202fd8aca59900ba3bd"
    }
  ]
}
' | jq
```

```json
{
  "id": "90695048695",
  "size": 17743,
  "repositoryType": "model",
  "type": "huggingface",
  "namespace": "fra0599",
  "name": "tensorflow-injection",
  "version": "0c1f762b88845c66b7f6f202fd8aca59900ba3bd",
  "alerts": [
    {
      "key": "QcJibmHNSYCQaSelx6tPKBaKtI3JQ1Lc67KGIkjz8ndk",
      "type": "malware",
      "severity": "critical",
      "category": "supplyChainRisk",
      "file": "layer.py",
      "props": {
        "id": 831431,
        "note": "This code is a high-confidence remote code execution backdoor: it executes arbitrary Python passed in via the f parameter and is registered for Keras serialization, enabling the payload to be embedded in saved model configs and executed on model load. Treat models or code containing this layer as compromised and do not load or execute them in trusted environments. Replace with safe patterns (no exec, explicit parameterization, or sandboxed/deserialized-only-safe constructs)."
      },
      "action": "error",
      "actionSource": {
        "type": "org-policy",
        "candidates": [
          {
            "type": "org-policy",
            "action": "error",
            "actionPolicyIndex": 0,
            "repoLabelId": ""
          }
        ]
      },
      "fix": {
        "type": "remove",
        "description": "Remove this package and either replace with a verified alternative from a trusted source or revert to a known-safe previous version."
      }
    },
    {
      "key": "Qrq4Sz5okg0AohcO0PW9J-gWEy6OVc1Jt4gOaYh9i0Gc",
      "type": "noLicenseFound",
      "severity": "low",
      "category": "license",
      "action": "ignore",
      "actionSource": {
        "type": "org-policy",
        "candidates": [
          {
            "type": "org-policy",
            "action": "ignore",
            "actionPolicyIndex": 0,
            "repoLabelId": ""
          }
        ]
      }
    },
    {
      "key": "QSypql2YmBl7-zOhZfk9vXU6Q2aVO1rp6sUAm6OI7Qq8",
      "type": "malware",
      "severity": "critical",
      "category": "supplyChainRisk",
      "file": "model.keras/config.json",
      "props": {
        "id": 831433,
        "note": "The model configuration contains an explicit, executable Python payload designed to run a shell command that reads a sensitive host file (/etc/passwd). Given the presence of pickled/custom objects and the explicit os.system call, loading or deserializing this model in an unsafe manner will very likely result in arbitrary code execution and data exposure. Treat the artifact as malicious; do not load it in trusted environments and analyze only within a hardened sandbox."
      },
      "action": "error",
      "actionSource": {
        "type": "org-policy",
        "candidates": [
          {
            "type": "org-policy",
            "action": "error",
            "actionPolicyIndex": 0,
            "repoLabelId": ""
          }
        ]
      },
      "fix": {
        "type": "remove",
        "description": "Remove this package and either replace with a verified alternative from a trusted source or revert to a known-safe previous version."
      }
    },
    {
      "key": "QuCcW6UfxKC0m1TbX7IGhq0xMKD4jU-XXPhOLHZ-3Zks",
      "type": "usesEval",
      "severity": "middle",
      "category": "supplyChainRisk",
      "file": "layer.py",
      "start": 210,
      "end": 214,
      "action": "ignore",
      "actionSource": {
        "type": "org-policy",
        "candidates": [
          {
            "type": "org-policy",
            "action": "ignore",
            "actionPolicyIndex": 0,
            "repoLabelId": ""
          }
        ]
      }
    }
  ],
  "score": {
    "license": 1,
    "maintenance": 1,
    "overall": 0,
    "quality": 1,
    "supplyChain": 0,
    "vulnerability": 1
  },
  "inputPurl": "pkg:huggingface/fra0599/tensorflow-injection@0c1f762b88845c66b7f6f202fd8aca59900ba3bd",
  "batchIndex": 0,
  "licenseDetails": []
}
```

We also support scanning by loading AI Bill of Materials (AIBOM) files generated by other tools, such as the Aetheris AI AIBOM Generator on Hugging Face Spaces, which is listed in the CycloneDX Tool Center.

This tool produces a CycloneDX AIBOM file that includes the PURL of a Hugging Face package. Socket can ingest and analyze these AIBOM files directly, scanning the Hugging Face models listed within for malware and supply chain risks.
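To make the format concrete, the snippet below writes a minimal, hand-written CycloneDX JSON document. It lists one Hugging Face model by PURL, reusing the model from the earlier API example.

Treat it as a sketch under assumptions:

- The file name `minimal_aibom.cdx.json` is hypothetical.
- Real generators such as the Aetheris tool emit many more fields.
- The sketch assumes the component's `purl` is what Socket keys on.

```bash
#!/usr/bin/env bash
# Write a minimal CycloneDX 1.6 document describing one Hugging Face model.
# Hypothetical file name; real AIBOM generators emit far more detail
# (model card data, hashes, metadata). "machine-learning-model" is the
# CycloneDX component type for ML models (introduced in spec 1.5).
cat > minimal_aibom.cdx.json <<'EOF'
{
  "bomFormat": "CycloneDX",
  "specVersion": "1.6",
  "version": 1,
  "components": [
    {
      "type": "machine-learning-model",
      "group": "fra0599",
      "name": "tensorflow-injection",
      "version": "0c1f762b88845c66b7f6f202fd8aca59900ba3bd",
      "purl": "pkg:huggingface/fra0599/tensorflow-injection@0c1f762b88845c66b7f6f202fd8aca59900ba3bd"
    }
  ]
}
EOF
```

A file like this would be submitted with the same `--data "@<file>"` form used in the AIBOM request that follows.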

Direct AIBOM ingestion lets users integrate Socket's model and package scanning into existing CycloneDX-based AI SBOM workflows without any additional tooling. The request uses the same PURL endpoint, with the AIBOM file as the request body:

```bash
curl --request POST \
  --url 'https://api.socket.dev/v0/purl?alerts=true' \
  --header "Content-Type: application/json" \
  --header "Authorization: Bearer $SOCKET_API_KEY" \
  --data "@retr0reg_llamafile-ape-runtime-injection_aibom.cdx.json" | jq
```

```json
{
  "id": "90694891617",
  "size": 698957786,
  "repositoryType": "model",
  "type": "huggingface",
  "namespace": "retr0reg",
  "name": "llamafile-ape-runtime-injection",
  "version": "382973092323cd8ee0c7f7faf164f315bed75545",
  "alerts": [
    {
      "key": "Q_lpl0LbhhRy5rt7dV4IWsA0XR2xNCvU0PiJDPMYNDy8",
      "type": "malware",
      "severity": "critical",
      "category": "supplyChainRisk",
      "file": "mxbai-embed-large-v1-f16.llamafile",
      "props": {
        "id": 831322,
        "note": "The APE bootstrap contains a malicious/tainted pattern: a backtick expression executed during startup ('`touch /tmp/r`') embedded in a conditional that will run each time the program is launched without --assimilate. That construct is a shell injection/command-execution vector. Even though the specific command here is 'touch /tmp/r' (harmless by itself), the presence of an executable backtick in the control flow is a strong indicator the bootstrap was tampered with or contains a potential supply-chain/backdoor insertion point. Combined with the normal behavior of extracting and executing embedded binaries, this package should be treated as potentially dangerous until the bootstrap and all embedded binaries are audited. Recommended action: do not run the file on production systems; inspect/remove backtick commands or obtain the artifact from a trusted source and verify signatures; analyze extracted binaries before executing."
      },
      "action": "error",
      "actionSource": {
        "type": "org-policy",
        "candidates": [
          {
            "type": "org-policy",
            "action": "error",
            "actionPolicyIndex": 0,
            "repoLabelId": ""
          }
        ]
      },
      "fix": {
        "type": "remove",
        "description": "Remove this package and either replace with a verified alternative from a trusted source or revert to a known-safe previous version."
      }
    }
  ],
  "score": {
    "license": 1,
    "maintenance": 1,
    "overall": 0,
    "quality": 1,
    "supplyChain": 0,
    "vulnerability": 1
  },
  "inputPurl": "pkg:huggingface/retr0reg/llamafile-ape-runtime-injection@1.0",
  "batchIndex": 0,
  "license": "MIT",
  "licenseDetails": []
}
```

Package pages for Hugging Face models, including model scores and alert details, are available to all Free and Team plan users.

The PURL API and AIBOM scanning capabilities are part of Socket Enterprise, designed for organizations integrating AI model scanning into automated workflows and CI/CD pipelines.
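For a CI/CD pipeline, the PURL endpoint can act as a gate. The sketch below is a hypothetical wrapper, not an official Socket client. Its assumptions are:

- The function names are illustrative: `hf_purl`, `build_purl_request`, `blocking_alerts`, and `scan_and_gate`.
- `curl` and `jq` are installed, and `SOCKET_API_KEY` is set.

It reuses the endpoint, headers, and per-alert `action` field from the responses above. Any alert whose org-policy action is `"error"` is treated as blocking.

```bash
#!/usr/bin/env bash
# Hypothetical CI gate around Socket's PURL endpoint (illustrative only).
# Assumes: curl and jq are installed and SOCKET_API_KEY is set.

# Build pkg:huggingface/<namespace>/<name>@<revision>; the revision is a
# Git commit hash, lowercased here.
hf_purl() {
  local rev
  rev=$(printf '%s' "$3" | tr '[:upper:]' '[:lower:]')
  printf 'pkg:huggingface/%s/%s@%s\n' "$1" "$2" "$rev"
}

# Build the request body {"components":[{"purl":...}, ...]}.
# PURLs are inserted verbatim, so they must not contain double quotes.
build_purl_request() {
  local purl sep=''
  printf '{"components":['
  for purl in "$@"; do
    printf '%s{"purl":"%s"}' "$sep" "$purl"
    sep=','
  done
  printf ']}\n'
}

# Read response objects (one per line) on stdin; print "<name> <type> <file>"
# for every alert whose policy action is "error".
blocking_alerts() {
  jq -r '.name as $n | .alerts[]? | select(.action == "error")
         | "\($n) \(.type) \(.file // "-")"'
}

# Query the endpoint for the given PURLs. Returns 1 if any alert is
# blocking, 2 if the request or parsing failed, 0 otherwise.
scan_and_gate() {
  local response blocking
  response=$(curl --silent --show-error --fail --request POST \
    --url 'https://api.socket.dev/v0/purl?alerts=true' \
    --header 'accept: application/x-ndjson' \
    --header "authorization: Bearer $SOCKET_API_KEY" \
    --header 'content-type: application/json' \
    --data "$(build_purl_request "$@")") || return 2
  blocking=$(printf '%s\n' "$response" | blocking_alerts) || return 2
  if [ -n "$blocking" ]; then
    printf 'Blocking Socket alerts:\n%s\n' "$blocking" >&2
    return 1
  fi
  echo "No blocking Socket alerts."
}
```

Usage: `scan_and_gate "$(hf_purl fra0599 tensorflow-injection 0c1f762b88845c66b7f6f202fd8aca59900ba3bd)"`. Under the org policy that produced the first response above, the two `malware` alerts carry `"action": "error"`, so this call would be expected to fail the pipeline.

Keying on `action` rather than `severity` keeps the gate in line with whatever policy the organization has configured in Socket.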

We're extending Socket's protection to cover Hugging Face Datasets and Spaces.

Our long-term goal is to make AI ecosystems as safe and auditable as modern software ecosystems. With this experimental launch, we invite developers and researchers using Hugging Face to explore Socket’s AI security capabilities and help shape the future of safer, more transparent AI supply chains.

Try Socket’s Hugging Face scanning today through the PURL API or by uploading your own AIBOM.

Subscribe to our newsletter

Get notified when we publish new security blog posts!

Try it now

Product

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Product

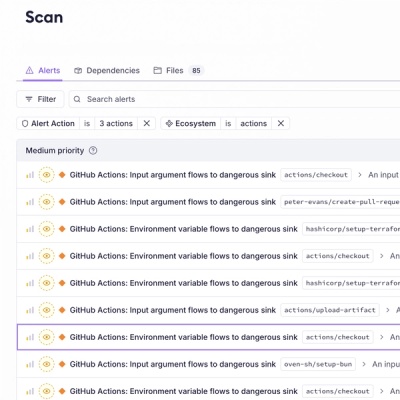

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

Product

Add real-time Socket webhook events to your workflows to automatically receive pull request scan results and security alerts in real time.