
@arizeai/openinference-instrumentation-beeai


OpenInference Instrumentation for BeeAI framework

This module provides automatic instrumentation for the BeeAI framework. It works with @opentelemetry/sdk-trace-node to collect and export telemetry data.

```shell
npm install --save @arizeai/openinference-instrumentation-beeai beeai-framework
npm install --save @opentelemetry/sdk-node @opentelemetry/exporter-trace-otlp-proto @opentelemetry/semantic-conventions @arizeai/openinference-semantic-conventions
```

To instrument your application, create an instrumentation file that imports and enables `BeeAIInstrumentation`:

```typescript
// instrumentation.ts
import { NodeSDK, node, resources } from "@opentelemetry/sdk-node";
import { OTLPTraceExporter } from "@opentelemetry/exporter-trace-otlp-proto";
import { ATTR_SERVICE_NAME } from "@opentelemetry/semantic-conventions";
import { SEMRESATTRS_PROJECT_NAME } from "@arizeai/openinference-semantic-conventions";
import { BeeAIInstrumentation } from "@arizeai/openinference-instrumentation-beeai";
import * as beeaiFramework from "beeai-framework";

// Create the BeeAI instrumentation
const beeAIInstrumentation = new BeeAIInstrumentation();

const provider = new NodeSDK({
  resource: new resources.Resource({
    [ATTR_SERVICE_NAME]: "beeai",
    [SEMRESATTRS_PROJECT_NAME]: "beeai-project",
  }),
  spanProcessors: [
    // Export each span as soon as it ends to the OTLP endpoint on localhost:6006
    new node.SimpleSpanProcessor(
      new OTLPTraceExporter({
        url: "http://localhost:6006/v1/traces",
      }),
    ),
  ],
  instrumentations: [beeAIInstrumentation],
});

await provider.start();

// Manually patch the BeeAI framework. This is needed when the module is loaded
// as an ES module (via `import`) rather than via `require` (CommonJS).
console.log("🔧 Manually instrumenting BeeAgent...");
beeAIInstrumentation.manuallyInstrument(beeaiFramework);
console.log("✅ BeeAgent manually instrumented.");

// eslint-disable-next-line no-console
console.log("👀 OpenInference initialized");
```

Load the instrumentation module before any other BeeAI code runs, for example as the first import in your application's entry point:

```typescript
// Import the instrumentation first so BeeAI is patched before the agent is created
import "./instrumentation.js";

import { BeeAgent } from "beeai-framework/agents/bee/agent";
import { TokenMemory } from "beeai-framework/memory/tokenMemory";
import { DuckDuckGoSearchTool } from "beeai-framework/tools/search/duckDuckGoSearch";
import { OpenMeteoTool } from "beeai-framework/tools/weather/openMeteo";
import { OllamaChatModel } from "beeai-framework/adapters/ollama/backend/chat";

const llm = new OllamaChatModel("llama3.1");

const agent = new BeeAgent({
  llm,
  memory: new TokenMemory(),
  tools: [new DuckDuckGoSearchTool(), new OpenMeteoTool()],
});

const response = await agent.run({
  prompt: "What's the current weather in Berlin?",
});

console.log(`Agent 🤖 : `, response.result.text);
```

For more information on the OpenTelemetry Node.js SDK, see the OpenTelemetry Node.js SDK documentation.
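Conceptually, instrumentations of this kind work by wrapping framework methods so that each call is recorded as a span. For CommonJS, OpenTelemetry's instrumentation base can patch modules automatically by hooking `require`; a plain ES module `import` is not seen by that hook unless extra ESM loader hooks are registered, which is why the instrumentation snippet hands the imported `beeai-framework` namespace to `manuallyInstrument`. The sketch below illustrates only the method-wrapping idea. It is not how `BeeAIInstrumentation` is implemented: `DemoAgent`, `patchMethod`, and `recordedSpans` are hypothetical names, and an in-memory array stands in for a real tracer.

```typescript
// Hypothetical stand-in for a framework class; this is NOT the real BeeAgent.
class DemoAgent {
  async run(input: { prompt: string }): Promise<string> {
    return `echo: ${input.prompt}`;
  }
}

// Minimal record of one call; a real instrumentation would create an OpenTelemetry span instead.
interface RecordedSpan {
  name: string;
  durationMs: number;
  error?: string;
}

const recordedSpans: RecordedSpan[] = [];

type AsyncMethod = (...args: unknown[]) => Promise<unknown>;

// Replace target[method] with a wrapper that times each call and records it,
// including calls that reject (the error is recorded, then rethrown).
function patchMethod(target: object, method: string, spanName: string): void {
  const slots = target as Record<string, AsyncMethod>;
  const original = slots[method];
  if (typeof original !== "function") {
    throw new Error(`No method named "${method}" to patch`);
  }
  slots[method] = async function (this: unknown, ...args: unknown[]): Promise<unknown> {
    const start = Date.now();
    let error: string | undefined;
    try {
      return await original.apply(this, args);
    } catch (err) {
      error = String(err);
      throw err;
    } finally {
      recordedSpans.push({ name: spanName, durationMs: Date.now() - start, error });
    }
  };
}

// Patch the prototype so every DemoAgent instance goes through the wrapper.
patchMethod(DemoAgent.prototype, "run", "DemoAgent.run");

new DemoAgent().run({ prompt: "hi" }).then((out) => {
  console.log(out); // echo: hi
  console.log(recordedSpans);
});
```

A real instrumentation would typically start a span before the call, attach attributes such as inputs and outputs, and end the span in the `finally` step; the wrap, call, and record shape stays the same.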

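You can pass a custom tracer provider when creating the BeeAI instrumentation. This is useful when you want to use a tracer provider other than the globally registered one, or need more control over the tracing configuration. This example additionally requires the @opentelemetry/sdk-trace-node and @opentelemetry/resources packages.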

```typescript
import { NodeTracerProvider } from "@opentelemetry/sdk-trace-node";
import { Resource } from "@opentelemetry/resources";
import { SEMRESATTRS_PROJECT_NAME } from "@arizeai/openinference-semantic-conventions";
import { BeeAIInstrumentation } from "@arizeai/openinference-instrumentation-beeai";
import * as beeaiFramework from "beeai-framework";

// Create a custom tracer provider.
// Note: it exports nothing until a span processor and exporter are attached to it.
const customTracerProvider = new NodeTracerProvider({
  resource: new Resource({
    [SEMRESATTRS_PROJECT_NAME]: "my-beeai-project",
  }),
});

// Pass the custom tracer provider to the instrumentation
const beeAIInstrumentation = new BeeAIInstrumentation({
  tracerProvider: customTracerProvider,
});

// Manually instrument the BeeAI framework
beeAIInstrumentation.manuallyInstrument(beeaiFramework);
```

Alternatively, you can set the tracer provider after creating the instrumentation:

```typescript
const beeAIInstrumentation = new BeeAIInstrumentation();
beeAIInstrumentation.setTracerProvider(customTracerProvider);
```
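Both options above change which provider the instrumentation takes its tracer from. By default, OpenTelemetry instrumentations use the globally registered provider (for example, the one `NodeSDK.start()` installs); an explicit provider overrides that. The sketch below models only that precedence with hypothetical types (`SketchTracer`, `SketchTracerProvider`, `SketchInstrumentation`, `makeProvider`); it does not use the real OpenTelemetry or BeeAI classes.

```typescript
// Hypothetical minimal shapes standing in for OpenTelemetry's Tracer and TracerProvider.
interface SketchTracer {
  readonly providerName: string;
  readonly scope: string;
}

interface SketchTracerProvider {
  readonly name: string;
  getTracer(scope: string): SketchTracer;
}

function makeProvider(name: string): SketchTracerProvider {
  return { name, getTracer: (scope) => ({ providerName: name, scope }) };
}

// Stands in for the process-wide provider that a global registration would install.
const globalProvider = makeProvider("global");

// Hypothetical instrumentation showing only how its tracer provider is chosen.
class SketchInstrumentation {
  private tracer: SketchTracer;

  constructor(config: { tracerProvider?: SketchTracerProvider } = {}) {
    // An explicitly passed provider wins; otherwise fall back to the global one.
    this.tracer = (config.tracerProvider ?? globalProvider).getTracer("sketch-instrumentation");
  }

  setTracerProvider(provider: SketchTracerProvider): void {
    // Replaces the tracer used for spans created from now on.
    this.tracer = provider.getTracer("sketch-instrumentation");
  }

  currentProviderName(): string {
    return this.tracer.providerName;
  }
}

const custom = makeProvider("custom");

console.log(new SketchInstrumentation().currentProviderName()); // global
console.log(new SketchInstrumentation({ tracerProvider: custom }).currentProviderName()); // custom

const late = new SketchInstrumentation();
late.setTracerProvider(custom);
console.log(late.currentProviderName()); // custom
```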



Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Socket Firewall Enterprise is now available with flexible deployment, configurable policies, and expanded language support.

Security News

Open source dashboard CNAPulse tracks CVE Numbering Authorities’ publishing activity, highlighting trends and transparency across the CVE ecosystem.

Product

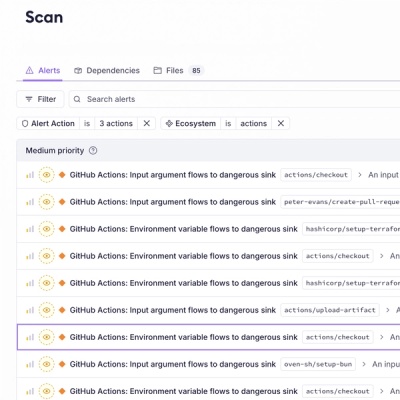

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.