Product

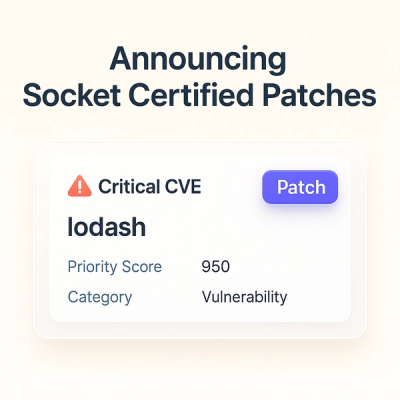

Introducing Socket Scanning for OpenVSX Extensions

Socket now scans OpenVSX extensions, giving teams early detection of risky behaviors, hidden capabilities, and supply chain threats in developer tools.

@azure-rest/ai-document-intelligence


Extracts content, layout, and structured data from documents.

Please rely heavily on our REST client docs to use this library.

NOTE: Form Recognizer has been rebranded to Document Intelligence. Please check the Migration Guide from `@azure/ai-form-recognizer` to `@azure-rest/ai-document-intelligence`.


This version of the client library defaults to the `"2024-11-30"` version of the service.

This table shows the relationship between SDK versions and supported API versions of the service:

| SDK version | Supported API version of service |
|---|---|
| 1.0.0 | 2024-11-30 |

For retired models, such as `"prebuilt-businessCard"` and `"prebuilt-document"`, continue to use the older `@azure/ai-form-recognizer` library with the older service API versions. For more information, see the Changelog.

The following table describes the relationship of each client to its supported API version(s):

| Service API version | Supported clients | Package |
|---|---|---|
| 2024-11-30 | DocumentIntelligenceClient | @azure-rest/ai-document-intelligence version ^1.0.0 |
| 2023-07-31 | DocumentAnalysisClient and DocumentModelAdministrationClient | @azure/ai-form-recognizer version ^5.0.0 |
| 2022-08-01 | DocumentAnalysisClient and DocumentModelAdministrationClient | @azure/ai-form-recognizer version ^4.0.0 |

Install the `@azure-rest/ai-document-intelligence` package

Install the Azure Document Intelligence (formerly Form Recognizer) REST client library for JavaScript with npm:

```bash
npm install @azure-rest/ai-document-intelligence
```

Create and authenticate a `DocumentIntelligenceClient`

To use an Azure Active Directory (AAD) token credential, provide an instance of the desired credential type obtained from the `@azure/identity` library.

To authenticate with AAD, you must first install `@azure/identity` with `npm install @azure/identity`.

After setup, you can choose which type of credential from `@azure/identity` to use. As an example, `DefaultAzureCredential` can be used to authenticate the client.

Set the client ID, tenant ID, and client secret of the AAD application as the environment variables `AZURE_CLIENT_ID`, `AZURE_TENANT_ID`, and `AZURE_CLIENT_SECRET`:

```ts
import DocumentIntelligence from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);
```
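Because `process.env[...]` yields `string | undefined`, it can help to fail fast when a required variable such as `DOCUMENT_INTELLIGENCE_ENDPOINT` is missing. The following is a minimal sketch; `requireEnv` is a hypothetical helper (not part of `@azure-rest/ai-document-intelligence` or `@azure/identity`), and it takes the environment as a plain record so it can be exercised without a live Azure resource.

```typescript
// Hypothetical helper: not part of the Azure SDK.
// Returns the requested variables as strings, or throws listing every
// variable that is missing or empty.
function requireEnv<K extends string>(
  env: Record<string, string | undefined>,
  names: readonly K[],
): Record<K, string> {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }
  const values = {} as Record<K, string>;
  for (const name of names) {
    values[name] = env[name] as string;
  }
  return values;
}
```

For example, `requireEnv(process.env, ["DOCUMENT_INTELLIGENCE_ENDPOINT"]).DOCUMENT_INTELLIGENCE_ENDPOINT` is typed as `string` and can be passed straight to `DocumentIntelligence(...)`.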

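To authenticate with an API key instead, pass an object with a `key` property as the credential:

```ts
import DocumentIntelligence from "@azure-rest/ai-document-intelligence";

const client = DocumentIntelligence(process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"], {
  key: process.env["DOCUMENT_INTELLIGENCE_API_KEY"],
});
```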

To connect to alternative Azure cloud environments (such as Azure China or Azure Government), specify the `scopes` field in the `credentials` option and use the appropriate value from `KnownDocumentIntelligenceAudience`:

```ts
import DocumentIntelligence, {
  KnownDocumentIntelligenceAudience,
} from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
  {
    credentials: {
      // Use the correct audience for your cloud environment
      scopes: [KnownDocumentIntelligenceAudience.AzureGovernment],
    },
  },
);
```

If you do not specify `scopes`, the client defaults to the Azure Public Cloud (https://cognitiveservices.azure.com).
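As a rough illustration of that default, the sketch below resolves the token scopes a client would request. It assumes the common Microsoft identity platform convention of requesting `<audience>/.default`; `resolveScopes`, `toScope`, and `DEFAULT_AUDIENCE` are hypothetical names, and the library's own handling of `KnownDocumentIntelligenceAudience` values may differ.

```typescript
// Azure Public Cloud audience, as stated in the documentation above.
const DEFAULT_AUDIENCE = "https://cognitiveservices.azure.com";

// Assumption: scopes follow the "<audience>/.default" convention.
function toScope(audience: string): string {
  const trimmed = audience.replace(/\/+$/, ""); // drop trailing slashes
  return trimmed.endsWith("/.default") ? trimmed : `${trimmed}/.default`;
}

// Hypothetical helper: use the caller's scopes if any were given,
// otherwise fall back to the public-cloud audience.
function resolveScopes(scopes?: readonly string[]): string[] {
  if (scopes && scopes.length > 0) {
    return scopes.map(toScope);
  }
  return [toScope(DEFAULT_AUDIENCE)];
}
```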

Analyze a document from a URL with the `prebuilt-layout` model (this request only starts the long-running analysis operation):

```ts
import DocumentIntelligence from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const initialResponse = await client
  .path("/documentModels/{modelId}:analyze", "prebuilt-layout")
  .post({
    contentType: "application/json",
    body: {
      urlSource:
        "https://raw.githubusercontent.com/Azure/azure-sdk-for-js/6704eff082aaaf2d97c1371a28461f512f8d748a/sdk/formrecognizer/ai-form-recognizer/assets/forms/Invoice_1.pdf",
    },
    queryParameters: { locale: "en-IN" },
  });
```

Analyze a local file by sending it as a base64-encoded string, then poll the long-running operation until it completes:

```ts
import DocumentIntelligence, {
  isUnexpected,
  getLongRunningPoller,
  AnalyzeOperationOutput,
} from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";
import { join } from "node:path";
import { readFile } from "node:fs/promises";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

// Read the local file and encode it as base64 for the request body
const filePath = join("./assets", "forms", "Invoice_1.pdf");
const base64Source = await readFile(filePath, { encoding: "base64" });

// Start the long-running analyze operation
const initialResponse = await client
  .path("/documentModels/{modelId}:analyze", "prebuilt-layout")
  .post({
    contentType: "application/json",
    body: {
      base64Source,
    },
    queryParameters: { locale: "en-IN" },
  });

if (isUnexpected(initialResponse)) {
  throw initialResponse.body.error;
}

// Poll until the operation completes, then read the analysis result
const poller = getLongRunningPoller(client, initialResponse);
const result = (await poller.pollUntilDone()).body as AnalyzeOperationOutput;
console.log(result);
```
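`getLongRunningPoller` handles polling for you. To make the idea concrete, here is a minimal sketch of the two decisions a poller makes on each round: whether the operation status is terminal, and how long to wait before the next request. The status values and the use of a `retry-after` header (in seconds) are assumptions based on common Azure long-running-operation conventions; `isTerminalStatus` and `nextPollDelayMs` are hypothetical helpers, not library exports.

```typescript
// Assumed status values for an analyze operation.
type OperationStatus = "notStarted" | "running" | "succeeded" | "failed" | "canceled";

// A poller can stop once the operation can no longer change state.
function isTerminalStatus(status: OperationStatus): boolean {
  return status === "succeeded" || status === "failed" || status === "canceled";
}

// Hypothetical helper: honor a numeric retry-after header (seconds);
// fall back to a fixed interval when it is absent, blank, or an HTTP-date.
function nextPollDelayMs(retryAfter: string | undefined, fallbackMs = 1000): number {
  const text = retryAfter?.trim();
  if (!text) {
    return fallbackMs;
  }
  const seconds = Number(text);
  return Number.isFinite(seconds) && seconds >= 0 ? seconds * 1000 : fallbackMs;
}
```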

Analyze a batch of documents stored in an Azure Blob Storage container. Results are written to a second container, and the operation result can be retrieved later by its `resultId`:

```ts
import DocumentIntelligence, {
  isUnexpected,
  parseResultIdFromResponse,
} from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

// 1. Analyze a batch of documents
const initialResponse = await client
  .path("/documentModels/{modelId}:analyzeBatch", "prebuilt-layout")
  .post({
    contentType: "application/json",
    body: {
      azureBlobSource: {
        containerUrl: process.env["DOCUMENT_INTELLIGENCE_BATCH_TRAINING_DATA_CONTAINER_SAS_URL"],
      },
      resultContainerUrl:
        process.env["DOCUMENT_INTELLIGENCE_BATCH_TRAINING_DATA_RESULT_CONTAINER_SAS_URL"],
      resultPrefix: "result",
    },
  });

if (isUnexpected(initialResponse)) {
  throw initialResponse.body.error;
}

const resultId = parseResultIdFromResponse(initialResponse);
console.log("resultId: ", resultId);

// (Optional) You can poll for the batch analysis result, but be aware that a job may take
// an unexpectedly long time and polling could incur additional costs.
// Uncommenting these lines also requires importing getLongRunningPoller.
// const poller = getLongRunningPoller(client, initialResponse);
// await poller.pollUntilDone();

// 2. At a later time, you can retrieve the operation result using the resultId
const output = await client
  .path("/documentModels/{modelId}/analyzeResults/{resultId}", "prebuilt-layout", resultId)
  .get();
console.log(output);
```
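`parseResultIdFromResponse` extracts the `resultId` from the initial response. As a hedged sketch of the idea only, assuming the id is the final path segment of the `Operation-Location` URL returned by the service (the library's actual parsing may differ), a hypothetical `resultIdFromOperationUrl` could look like this:

```typescript
// Hypothetical helper: assumes the result id is the last path segment of
// the operation URL; the query string (e.g. ?api-version=...) is ignored.
function resultIdFromOperationUrl(operationUrl: string): string {
  const { pathname } = new URL(operationUrl);
  const segments = pathname.split("/").filter((segment) => segment.length > 0);
  const last = segments[segments.length - 1];
  if (!last) {
    throw new Error(`No result id found in: ${operationUrl}`);
  }
  return decodeURIComponent(last);
}
```

For a made-up URL such as `https://example.test/.../analyzeResults/abc-123?api-version=2024-11-30`, the sketch returns `"abc-123"`.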

The service supports Markdown output in addition to the default plain-text content format. For now, this is supported only for `"prebuilt-layout"`. Markdown is considered a friendlier format for LLM consumption in chat or automation scenarios.

The service follows the GFM spec (GitHub Flavored Markdown) for the Markdown format. It also introduces a new `contentFormat` property with the value `"text"` or `"markdown"` to indicate the result content format. To request Markdown, set the `outputContentFormat` query parameter:

```ts
import DocumentIntelligence from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const initialResponse = await client
  .path("/documentModels/{modelId}:analyze", "prebuilt-layout")
  .post({
    contentType: "application/json",
    body: {
      urlSource:
        "https://raw.githubusercontent.com/Azure/azure-sdk-for-js/6704eff082aaaf2d97c1371a28461f512f8d748a/sdk/formrecognizer/ai-form-recognizer/assets/forms/Invoice_1.pdf",
    },
    queryParameters: { outputContentFormat: "markdown" }, // <-- new query parameter
  });
```
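When the result's `contentFormat` is `"markdown"`, the content can be split along GFM headings before it is handed to an LLM. The sketch below is a hypothetical downstream step, not part of the library: `chunkByHeadings` splits on ATX headings (`#` through `######`) and, as a simplification, detects fenced code only by lines that begin with three or more backticks or tildes.

```typescript
type ContentFormat = "text" | "markdown";

interface Chunk {
  heading: string | null; // heading line that starts the chunk, if any
  body: string;
}

// Hypothetical helper: split GFM content into chunks at ATX headings,
// ignoring heading-like lines inside fenced code blocks.
function chunkByHeadings(content: string, format: ContentFormat): Chunk[] {
  if (format !== "markdown") {
    // Plain text has no heading structure to split on.
    return [{ heading: null, body: content }];
  }
  const chunks: Chunk[] = [];
  let current: Chunk = { heading: null, body: "" };
  let inFence = false;
  for (const line of content.split("\n")) {
    if (/^\s{0,3}(`{3,}|~{3,})/.test(line)) {
      inFence = !inFence; // simplified: any fence line toggles the state
    }
    if (!inFence && /^\s{0,3}#{1,6}\s/.test(line)) {
      // A new heading closes the previous chunk (unless it is empty).
      if (current.heading !== null || current.body.trim() !== "") {
        chunks.push(current);
      }
      current = { heading: line.trim(), body: "" };
    } else {
      current.body += current.body === "" ? line : `\n${line}`;
    }
  }
  if (current.heading !== null || current.body.trim() !== "") {
    chunks.push(current);
  }
  return chunks;
}
```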

When the `queryFields` feature flag is specified (via `features: ["queryFields"]`), the service additionally extracts the values of the fields listed in the `queryFields` query parameter, supplementing any existing fields defined by the model as a fallback:

```ts
import DocumentIntelligence from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

await client.path("/documentModels/{modelId}:analyze", "prebuilt-layout").post({
  contentType: "application/json",
  body: { urlSource: "..." },
  queryParameters: {
    features: ["queryFields"],
    queryFields: ["NumberOfGuests", "StoreNumber"],
  }, // <-- new query parameter
});
```
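A small sketch of assembling these query parameters, assuming only the shape used in the sample above (`features` plus `queryFields`). `withQueryFields` is a hypothetical helper: it guarantees the `"queryFields"` feature flag is present whenever field names are requested, drops blank and duplicate names, and passes other parameters (such as `locale`) through unchanged.

```typescript
interface AnalyzeQueryParameters {
  features?: string[];
  queryFields?: string[];
  [key: string]: unknown; // other parameters pass through untouched
}

// Hypothetical helper: add query fields and the matching feature flag.
function withQueryFields(
  base: AnalyzeQueryParameters,
  fields: readonly string[],
): AnalyzeQueryParameters {
  const uniqueFields = Array.from(
    new Set(fields.map((field) => field.trim()).filter((field) => field !== "")),
  );
  if (uniqueFields.length === 0) {
    return { ...base };
  }
  // Use a Set so the flag is not duplicated if it was already present.
  const features = new Set(base.features ?? []);
  features.add("queryFields");
  return { ...base, features: Array.from(features), queryFields: uniqueFields };
}
```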

In the previous API versions supported by the older `@azure/ai-form-recognizer` library, the document splitting and classification operation (`"/documentClassifiers/{classifierId}:analyze"`) always tried to split the input file into multiple documents.

To enable a wider set of scenarios, the service introduces a `"split"` query parameter with the new `"2023-10-31-preview"` service version. The following values are supported:

- `split: "auto"`: Let the service determine where to split.
- `split: "none"`: The entire file is treated as a single document. No splitting is performed.
- `split: "perPage"`: Each page is treated as a separate document. Each empty page is kept as its own document.
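To make the `"none"` and `"perPage"` semantics concrete, the following sketch computes which 1-based page numbers would form each document for a file with a given page count. It is a hypothetical model of the documented behavior, not service code; `"auto"` is decided by the service, so the sketch returns `null` for it.

```typescript
type SplitMode = "auto" | "none" | "perPage";

// Hypothetical model of the documented split behavior.
// Returns one array of 1-based page numbers per resulting document,
// or null when the service decides the boundaries ("auto").
function documentPageGroups(pageCount: number, split: SplitMode): number[][] | null {
  if (!Number.isInteger(pageCount) || pageCount < 0) {
    throw new RangeError(`Invalid page count: ${pageCount}`);
  }
  const pages = Array.from({ length: pageCount }, (_, i) => i + 1);
  if (split === "none") {
    // The entire file is a single document.
    return [pages];
  }
  if (split === "perPage") {
    // Every page, including empty ones, becomes its own document.
    return pages.map((page) => [page]);
  }
  return null; // "auto": boundaries are chosen by the service
}
```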

Build a custom document classifier from training data in an Azure Blob Storage container, where each document type (`foo` and `bar` below) points to its training data:

```ts
import DocumentIntelligence, {
  isUnexpected,
  getLongRunningPoller,
  DocumentClassifierBuildOperationDetailsOutput,
} from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const containerSasUrl = (): string =>
  process.env["DOCUMENT_INTELLIGENCE_TRAINING_CONTAINER_SAS_URL"];

const initialResponse = await client.path("/documentClassifiers:build").post({
  body: {
    classifierId: `customClassifier-12345`,
    description: "Custom classifier description",
    docTypes: {
      foo: {
        azureBlobSource: {
          containerUrl: containerSasUrl(),
        },
      },
      bar: {
        azureBlobSource: {
          containerUrl: containerSasUrl(),
        },
      },
    },
  },
});

if (isUnexpected(initialResponse)) {
  throw initialResponse.body.error;
}

// Building a classifier is a long-running operation; wait for it to finish
const poller = getLongRunningPoller(client, initialResponse);
const response = (await poller.pollUntilDone())
  .body as DocumentClassifierBuildOperationDetailsOutput;
console.log(response);
```
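When a classifier has many document types, the `docTypes` object can be generated rather than written by hand. This sketch assumes one container holding a folder per document type and that `azureBlobSource` accepts an optional `prefix` alongside `containerUrl`; treat that field and the `buildDocTypes` helper as assumptions to check against the service's REST reference.

```typescript
interface AzureBlobSource {
  containerUrl: string;
  prefix?: string; // assumed: optional folder filter inside the container
}

interface DocTypeSource {
  azureBlobSource: AzureBlobSource;
}

// Hypothetical helper: map each document type name to the "<name>/"
// folder of a single training container.
function buildDocTypes(
  containerUrl: string,
  docTypeNames: readonly string[],
): Record<string, DocTypeSource> {
  const docTypes: Record<string, DocTypeSource> = {};
  const seen = new Set<string>();
  for (const name of docTypeNames) {
    if (seen.has(name)) {
      throw new Error(`Duplicate document type: ${name}`);
    }
    seen.add(name);
    docTypes[name] = { azureBlobSource: { containerUrl, prefix: `${name}/` } };
  }
  return docTypes;
}
```

The result could then be passed as `docTypes` in the body of the `/documentClassifiers:build` request shown above.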

Request PDF output (`output: ["pdf"]`) from the `prebuilt-read` model, then download the generated PDF once the analysis has completed:

```ts
import DocumentIntelligence, {
  isUnexpected,
  getLongRunningPoller,
  parseResultIdFromResponse,
  streamToUint8Array,
} from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";
import { join } from "node:path";
import { readFile, writeFile } from "node:fs/promises";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const filePath = join("./assets", "layout-pageobject.pdf");
const base64Source = await readFile(filePath, { encoding: "base64" });

// Ask the service to also produce a PDF output for this analysis
const initialResponse = await client
  .path("/documentModels/{modelId}:analyze", "prebuilt-read")
  .post({
    contentType: "application/json",
    body: {
      base64Source,
    },
    queryParameters: { output: ["pdf"] },
  });

if (isUnexpected(initialResponse)) {
  throw initialResponse.body.error;
}

// The PDF is only available once the analysis has completed
const poller = getLongRunningPoller(client, initialResponse);
await poller.pollUntilDone();

const output = await client
  .path(
    "/documentModels/{modelId}/analyzeResults/{resultId}/pdf",
    "prebuilt-read",
    parseResultIdFromResponse(initialResponse),
  )
  .get()
  .asNodeStream(); // output.body would be NodeJS.ReadableStream

if (output.status !== "200" || !output.body) {
  throw new Error("The response was unexpected, expected NodeJS.ReadableStream in the body.");
}

const pdfData = await streamToUint8Array(output.body);
await writeFile(`./output.pdf`, pdfData);
```
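`streamToUint8Array` collects the streamed response into a single buffer. The sketch below shows the underlying idea, concatenating byte chunks into one `Uint8Array`; it is an illustration, not the library's implementation, and `concatBytes` / `collectBytes` are hypothetical names.

```typescript
// Concatenate byte chunks into one contiguous Uint8Array.
function concatBytes(chunks: readonly Uint8Array[]): Uint8Array {
  const total = chunks.reduce((sum, chunk) => sum + chunk.byteLength, 0);
  const out = new Uint8Array(total);
  let offset = 0;
  for (const chunk of chunks) {
    out.set(chunk, offset);
    offset += chunk.byteLength;
  }
  return out;
}

// Drain an async iterable of byte chunks (for example, a Node.js Readable
// in binary mode, whose Buffer chunks are Uint8Arrays) into one buffer.
async function collectBytes(source: AsyncIterable<Uint8Array>): Promise<Uint8Array> {
  const chunks: Uint8Array[] = [];
  for await (const chunk of source) {
    chunks.push(chunk);
  }
  return concatBytes(chunks);
}
```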

Request figure images (`output: ["figures"]`) from the `prebuilt-layout` model, then download the image of the first detected figure:

```ts
import DocumentIntelligence, {
  isUnexpected,
  getLongRunningPoller,
  AnalyzeOperationOutput,
  parseResultIdFromResponse,
  streamToUint8Array,
} from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";
import { join } from "node:path";
import { mkdir, readFile, writeFile } from "node:fs/promises";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const filePath = join("./assets", "layout-pageobject.pdf");
const base64Source = await readFile(filePath, { encoding: "base64" });

// Ask the service to also produce images of the detected figures
const initialResponse = await client
  .path("/documentModels/{modelId}:analyze", "prebuilt-layout")
  .post({
    contentType: "application/json",
    body: {
      base64Source,
    },
    queryParameters: { output: ["figures"] },
  });

if (isUnexpected(initialResponse)) {
  throw initialResponse.body.error;
}

const poller = getLongRunningPoller(client, initialResponse);
const result = (await poller.pollUntilDone()).body as AnalyzeOperationOutput;

// Guard against a missing or empty figures array before indexing into it
const figures = result.analyzeResult?.figures;
const figureId = figures?.[0]?.id;
if (!figureId) {
  throw new Error("No figures with an id were found in the analysis result.");
}

const output = await client
  .path(
    "/documentModels/{modelId}/analyzeResults/{resultId}/figures/{figureId}",
    "prebuilt-layout",
    parseResultIdFromResponse(initialResponse),
    figureId,
  )
  .get()
  .asNodeStream(); // output.body would be NodeJS.ReadableStream

if (output.status !== "200" || !output.body) {
  throw new Error("The response was unexpected, expected NodeJS.ReadableStream in the body.");
}

const imageData = await streamToUint8Array(output.body);
// writeFile does not create missing directories, so create ./figures first
await mkdir("./figures", { recursive: true });
await writeFile(`./figures/${figureId}.png`, imageData);
```
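The sample above downloads only the first figure. To save every figure, one option is to derive an output path per figure id up front. `figureOutputPaths` is a hypothetical helper, and it assumes only that each figure carries an optional string `id`, as the sample's `figures?.[0]` access suggests; the character filter is an illustrative choice to keep ids safe as file names.

```typescript
interface FigureLike {
  id?: string;
}

// Hypothetical helper: map each figure id to an output file path.
// Characters outside [A-Za-z0-9._-] are replaced with "_" so the id
// cannot introduce path separators into the file name.
function figureOutputPaths(
  figures: readonly FigureLike[] | undefined,
  dir: string,
): Map<string, string> {
  const paths = new Map<string, string>();
  for (const figure of figures ?? []) {
    if (!figure.id) {
      continue; // skip figures without an id
    }
    const safeName = figure.id.replace(/[^A-Za-z0-9._-]/g, "_");
    paths.set(figure.id, `${dir}/${safeName}.png`);
  }
  return paths;
}
```

Each key is the `figureId` to pass to the `/figures/{figureId}` path, and each value is where the downloaded image would be written.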

Get information about the resource, such as the limit on the number of custom document models:

```ts
import DocumentIntelligence, { isUnexpected } from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const response = await client.path("/info").get();
if (isUnexpected(response)) {
  throw response.body.error;
}

console.log(response.body.customDocumentModels.limit);
```
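Beyond `limit`, it is often useful to know how many more custom models can be created. This sketch assumes `customDocumentModels` exposes a `count` next to `limit` (verify against the response type); `remainingModelCapacity` is a hypothetical helper.

```typescript
interface CustomDocumentModelsInfo {
  count: number; // assumed: number of custom models currently in the resource
  limit: number; // maximum number of custom models, as printed in the sample above
}

// Hypothetical helper: how many more custom models fit before reaching the limit.
// Clamped at 0 in case count already meets or exceeds limit.
function remainingModelCapacity(info: CustomDocumentModelsInfo): number {
  return Math.max(0, info.limit - info.count);
}
```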

List all document models in the resource, using `paginate` to iterate across pages of results:

```ts
import DocumentIntelligence, { isUnexpected, paginate } from "@azure-rest/ai-document-intelligence";
import { DefaultAzureCredential } from "@azure/identity";

const client = DocumentIntelligence(
  process.env["DOCUMENT_INTELLIGENCE_ENDPOINT"],
  new DefaultAzureCredential(),
);

const response = await client.path("/documentModels").get();
if (isUnexpected(response)) {
  throw response.body.error;
}

for await (const model of paginate(client, response)) {
  console.log(model.modelId);
}
```
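`paginate` hides the paging protocol. As a simplified, synchronous sketch of the idea, assuming each page carries a `value` array and an optional `nextLink` (the usual Azure paging shape), a pager keeps requesting pages until `nextLink` is absent. `Page`, `iterateItems`, and the injected `getPage` function are hypothetical; the real helper performs these requests asynchronously over HTTP.

```typescript
interface Page<T> {
  value: T[];
  nextLink?: string;
}

// Yield every item across pages, following nextLink until it is absent.
// A set of visited links guards against a server that repeats a link.
function* iterateItems<T>(
  firstPage: Page<T>,
  getPage: (nextLink: string) => Page<T>,
): Generator<T> {
  let page = firstPage;
  const visited = new Set<string>();
  for (;;) {
    yield* page.value;
    const next = page.nextLink;
    if (!next || visited.has(next)) {
      return;
    }
    visited.add(next);
    page = getPage(next);
  }
}
```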

Enabling logging may help uncover useful information about failures. To see a log of HTTP requests and responses, set the `AZURE_LOG_LEVEL` environment variable to `info`. Alternatively, logging can be enabled at runtime by calling `setLogLevel` from `@azure/logger`:

```ts
import { setLogLevel } from "@azure/logger";

setLogLevel("info");
```

For more detailed instructions on how to enable logs, see the `@azure/logger` package docs.
