@contiamo/dev


Get the dev environment fast!

Get started:

make docker-auth

make pull

Get the latest versions:

git pull

make pull

Start everything in normal mode:

make start

Stop everything:

make stop

Stop everything and clean up:

make clean

Prepare for Pantheon-external mode (only do this once):

make build

sudo bash -c 'echo "127.0.0.1 metadb" >> /etc/hosts'

Start everything in Pantheon-external mode:

make pantheon-start

env METADB_URL="jdbc:postgresql://localhost:5433/pantheon?user=pantheon&password=test" DATASTORE_TYPE=external sbt run

Enable TLS verify-full mode on port 5435:

*.dev.contiamo.io:

make get-pg-key

echo "127.0.0.1 pg-localhost.dev.contiamo.io" | sudo tee -a /etc/hosts

make build

make pantheon-start

psql about the IdenTrust root we happen to be using:

curl https://letsencrypt.org/certs/trustid-x3-root.pem.txt > ~/.postgresql/root.crt

psql "user=lemon@example.com password=<token> dbname=<project UUID> sslmode=verify-full" -h pg-localhost.dev.contiamo.io -p 5435

Local development is supported via Docker Compose.

Before you start, you must install Docker and Docker Compose.

Additionally, the development environment requires access to our private Docker registry. To get access, ask the Ops team for permissions. Once permissions have been granted, you must install the gcloud CLI.

Once installed, run

make docker-auth pull

This will attempt to configure Docker authentication for the private registry and then pull the required Docker images.

Finally, to start the development environment, run

make start

Once the environment has started, you should see a message with a URL and credentials, like this

Dev ui: http://localhost:9898/contiamo/profile

Email: lemon@example.com

Password: localdev

We have a dataset available on GCR for internal testing. It is a Postgres database that contains a single table liftdata. This can be used for testing against a known external dataset.

After starting the local dev environment, run

docker run --name liftdata --rm --network dev_default eu.gcr.io/dev-and-test-env/deutschebahn-liftdata-postgres:v1.0.0

In the Data Hub, you can now add an external Postgres datasource using:

| field | value |
|---|---|
| HOST | liftdata |
| PORT | 5432 |
| DATABASE | liftdata |
| USER | pantheon |
| PASS | contiamodatahub19 |

When you are done, run

docker kill liftdata

to stop and clean up the database container.

You can always cleanly stop the environment using

make stop

Any data in the databases will be preserved between stop and start.

If you need to reclaim space or want to restart your environment from scratch use

make clean

This will stop your current environment and remove any Docker volumes related to it. This includes any data and metadata in the databases.

As time goes on, Docker will download new images, but it does not automatically garbage collect old ones. To remove them, run docker system prune.

On Mac, all Docker filesystem data is stored in a single file of a fixed size, which is 16GB or 32GB by default. You can configure the size of this file by clicking on the Docker Desktop tray icon -> Preferences -> Disk -> move the slider.

Run make or make help to see all available commands.

You can also run these commands from a different directory, with e.g. make -C /path/to/dev start.

The commands in the Makefile cover the common workflows, but more is available if you use docker-compose directly. For instance, get all logs with docker-compose logs --follow, or only datastore worker logs with docker-compose logs --follow ds-worker. Refer to docker-compose.yml for the definitions of the services.

To use docker-compose without cd'ing to this directory, use e.g. docker-compose -f /path/to/dev/docker-compose.yml logs --follow.
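If you run docker-compose from other directories often, a small shell function can save typing. The following is only a sketch: the function name dc and the variables DEV_DIR and COMPOSE_BIN are hypothetical and not defined by this project, and /path/to/dev stands in for wherever you cloned the repository.

```shell
# Hypothetical wrapper: run docker-compose against the dev checkout from any directory.
# DEV_DIR (path to the checkout) and COMPOSE_BIN (binary to invoke) are assumed names.
dc() {
    "${COMPOSE_BIN:-docker-compose}" -f "${DEV_DIR:-/path/to/dev}/docker-compose.yml" "$@"
}

# Example usage (commented out, since it needs a running environment):
#   DEV_DIR="$HOME/src/dev" dc logs --follow ds-worker
```

All arguments are passed through unchanged, so any docker-compose subcommand should work the same way as when it is run from inside the checkout.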

The Compose file supports overriding the Docker tag used for a service by setting several environment variables:

| Server | Environment Variable | Default |
|---|---|---|
| datastore | DATASTORE_TAG | dev |
| idp | IDP_TAG | dev |
| pantheon | PANTHEON_TAG | latest |
| contiamo-ui | CONTIAMOUI_TAG | latest |
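To check which tags a given shell would hand to Compose, the defaults from the table can be mirrored with shell parameter expansion. This is a sketch only: show_tags is a hypothetical helper, and it assumes that an empty variable falls back to the default, which may not match how docker-compose.yml actually resolves it.

```shell
# Print the image tag each service would use, applying the defaults from the
# table above when the corresponding environment variable is unset or empty.
# show_tags is a hypothetical helper, not part of the Makefile.
show_tags() {
    echo "datastore=${DATASTORE_TAG:-dev}"
    echo "idp=${IDP_TAG:-dev}"
    echo "pantheon=${PANTHEON_TAG:-latest}"
    echo "contiamo-ui=${CONTIAMOUI_TAG:-latest}"
}

show_tags
```

Running it as PANTHEON_TAG=local show_tags should report pantheon=local while leaving the other services on their defaults.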

In the environment variable POSTGRES_ARGS, you can pass extra arguments to the PostgreSQL daemon. By default, this is set to -c log_connections=on. To log modification statements in addition to connections, start the dev environment with

env POSTGRES_ARGS="-c log_connections=on -c log_statement=mod" make start

You can inspect these logs with docker-compose logs --follow metadb. The four acceptable values for log_statement are none, ddl, mod, and all. Further Postgres options can be found here: https://www.postgresql.org/docs/11/runtime-config.html .
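Because only four values are accepted for log_statement, it can help to validate the value before starting the environment. The sketch below assumes a hypothetical helper named postgres_args that builds the POSTGRES_ARGS string shown above; it is not part of the Makefile.

```shell
# Build a POSTGRES_ARGS value for a given log_statement level.
# postgres_args is a hypothetical helper; it rejects values Postgres would not accept.
postgres_args() {
    case "$1" in
        none|ddl|mod|all)
            echo "-c log_connections=on -c log_statement=$1" ;;
        *)
            echo "invalid log_statement value: $1 (expected none, ddl, mod, or all)" >&2
            return 1 ;;
    esac
}

# Example usage (commented out, since it starts the environment):
#   env POSTGRES_ARGS="$(postgres_args mod)" make start
```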

Local Pantheon debug development is supported by port redirection. To set this up, you first need to run two extra steps.

Run

make build

This builds the eu.gcr.io/dev-and-test-env/pantheon:redir Docker image, a "pseudo-Pantheon" that forwards everything to your local Pantheon on 127.0.0.1 port 4300. Do not push this image!

Modify your /etc/hosts file to add

127.0.0.1 metadb

You can easily do this with sudo bash -c 'echo "127.0.0.1 metadb" >> /etc/hosts'.

This ensures that Pantheon can correctly resolve the storage database service.
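The one-line append above adds a duplicate line every time it is run. A guarded variant could look like the sketch below, where ensure_host_entry is a hypothetical helper and the match on the host name is deliberately simple (dots in the name are treated as regex wildcards).

```shell
# Append "IP NAME" to a hosts file only if NAME is not already mapped there.
# ensure_host_entry is a hypothetical helper; for /etc/hosts it must run as root.
ensure_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    # Look for NAME as a whole whitespace-delimited field on a non-comment line.
    if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
}

# Example usage from a root shell (commented out, since it edits a system file):
#   ensure_host_entry /etc/hosts 127.0.0.1 metadb
```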

Make sure you first set up the prerequisites, and also set up for Pantheon local development.

To start the Pantheon dev environment use

make pantheon-start

This will replace the Pantheon image with a simple port redirection image that will enable transparent redirect of

You can then start your local Pantheon debug build, e.g. from your IDE, and have it bind to those ports on localhost. To configure the meta-DB and enable data store from Pantheon, run SBT with

env METADB_URL="jdbc:postgresql://localhost:5433/pantheon?user=pantheon&password=test" DATASTORE_TYPE=external sbt

or set the same environment variables in IntelliJ. To set them for the current terminal session instead, use export METADB_URL="jdbc:postgresql://localhost:5433/pantheon?user=pantheon&password=test" DATASTORE_TYPE=external.

The docker-compose configuration will expose the following ports for use from local Pantheon:

127.0.0.1 port 9898 <-- Use this to access Data Hub including UI, IDP, Pantheon, Datastore.

127.0.0.1 port 5433, username pantheon, password test.

127.0.0.1 port 9191

127.0.0.1 port 9000

When accessing Pantheon via Nginx on port 9898, you need to prepend /pantheon to Pantheon URLs, for instance: http://localhost:9898/pantheon/api/v1/status . Nginx will strip off the /pantheon, authenticate the request with IDP, and forward the request to Pantheon as /api/v1/status.
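The prefix rule for Nginx can be captured in a small helper when scripting requests. This is a sketch under the assumption that Nginx listens on localhost:9898 as described above; pantheon_url is a hypothetical name.

```shell
# Map a Pantheon API path to the URL to use through Nginx on port 9898.
# pantheon_url is a hypothetical helper name.
pantheon_url() {
    path=${1#/}    # drop one leading slash, if present
    echo "http://localhost:9898/pantheon/$path"
}

pantheon_url /api/v1/status
# prints: http://localhost:9898/pantheon/api/v1/status
```

The result can be passed to a client such as curl; Pantheon itself will see the request path without the /pantheon prefix.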

Using the pantheon/test credentials for Postgres, you also have access to

metadb database, for datastore,

simpleidp database.

You can also run Pantheon in prod mode locally, as follows.

sbt shell, run dist.

docker build -t eu.gcr.io/dev-and-test-env/pantheon:local . This will download dependencies if they are not cached yet, build a Docker image for Pantheon, and tag it local.

env PANTHEON_TAG=local make start.

Now datastore and metadb will still be available on the usual ports, but Nginx will proxy to a prod-mode Pantheon which runs inside Docker. Pantheon will automatically be run with appropriate environment variables (https://github.com/contiamo/dev/blob/master/docker-compose.yml#L81).

Warning! Do not push this image to GCR. It may accidentally end up being deployed on dev.contiamo.io .

The Profiler currently lives at http://localhost:8383.

FAQs

Dev environment for contiamo

The npm package @contiamo/dev receives a total of 150 weekly downloads. As such, @contiamo/dev popularity was classified as not popular.

We found that @contiamo/dev demonstrated a not healthy version release cadence and project activity because the last version was released a year ago. It has 3 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Bringing supply chain security to the next generation of JavaScript package managers

Product

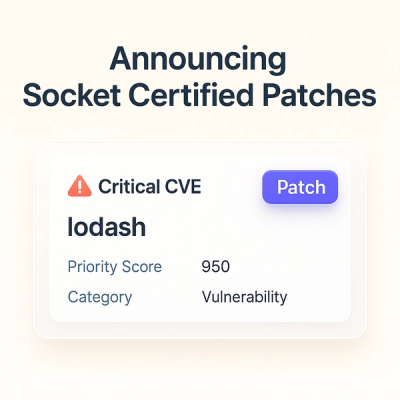

A safer, faster way to eliminate vulnerabilities without updating dependencies

Product

Reachability analysis for Ruby is now in beta, helping teams identify which vulnerabilities are truly exploitable in their applications.