@fastify/aws-lambda

Inspired by aws-serverless-express, built to work with Fastify's inject functionality.

Inspired by the AWSLABS aws-serverless-express library, tailor-made for the Fastify web framework.

It uses no internal sockets; instead, it relies on Fastify's inject function.

It seems faster (as the name implies) than aws-serverless-express and aws-serverless-fastify 😉

```bash
npm i @fastify/aws-lambda
```

@fastify/aws-lambda accepts an options object as its second argument: `awsLambdaFastify(app, options)`

| property | description | default value |
|---|---|---|
| `binaryMimeTypes` | Array of binary MIME types to handle | `[]` |
| `enforceBase64` | Function that receives the response and returns a boolean indicating whether the response content is binary and should be base64-encoded | `undefined` |
| `disableBase64Encoding` | Disable base64 encoding of responses and omit the `isBase64Encoded` property. When `undefined`, it is automatically enabled when `payloadAsStream` is `true` and the request does not come from an ALB. | `undefined` |
| `serializeLambdaArguments` | Activate the serialization of the Lambda event and context into the HTTP headers `x-apigateway-event` and `x-apigateway-context` | `false` (was `true` for <v2.0.0) |
| `decorateRequest` | Decorates the Fastify request with the Lambda event and context as `request.awsLambda.event` and `request.awsLambda.context` | `true` |
| `decorationPropertyName` | The property name used for the request decoration | `awsLambda` |
| `callbackWaitsForEmptyEventLoop` | See the official AWS Lambda documentation for `context.callbackWaitsForEmptyEventLoop` | `undefined` |
| `retainStage` | Retain the stage part of the API Gateway URL | `false` |
| `pathParameterUsedAsPath` | Use a defined path parameter as the path (e.g. `'proxy'`) | `false` |
| `parseCommaSeparatedQueryParams` | Parse query strings containing commas into an array of values. Affects the behavior of the querystring parser with commas when using Payload Format Version 2.0 | `true` |
| `payloadAsStream` | If set to `true`, the response is a stream and can be used with `awslambda.streamifyResponse` | `false` |
| `albMultiValueHeaders` | Set to `true` if using an ALB with the multi-value headers attribute enabled | `false` |
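Two of the options above are easier to picture with concrete values. The sketch below is illustrative only. `enforceBase64` shows a predicate you might pass as that option, assuming the response object exposes lower-cased header names on `response.headers` (as Fastify's inject responses do). `splitCommaQuery` is a hypothetical helper, not part of @fastify/aws-lambda, that mimics the kind of splitting `parseCommaSeparatedQueryParams` refers to: with Payload Format Version 2.0, API Gateway combines duplicate query string keys into a single comma-separated value.

```javascript
// Example predicate for the enforceBase64 option.
// Assumption: header names on `response.headers` are lower-cased.
function enforceBase64 (response) {
  const contentType = String(response.headers['content-type'] || '')
  // Treat images and PDFs as binary so they are base64-encoded.
  return contentType.startsWith('image/') || contentType.startsWith('application/pdf')
}

// Hypothetical helper (not the library's implementation): split
// comma-joined values from a Payload Format 2.0 `queryStringParameters`
// object, e.g. ?id=1&id=2 arrives as { id: '1,2' }.
function splitCommaQuery (queryStringParameters) {
  const result = {}
  for (const [key, value] of Object.entries(queryStringParameters || {})) {
    result[key] = value.includes(',') ? value.split(',') : value
  }
  return result
}

// Possible usage with the documented option:
// const proxy = awsLambdaFastify(app, { enforceBase64 })
```

Because a literal comma inside a single value (such as `?q=a,b`) looks exactly like repeated keys in this payload format, this kind of splitting is a trade-off, which is presumably why the option can be turned off.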

```javascript
// lambda.js
const awsLambdaFastify = require('@fastify/aws-lambda')
const app = require('./app')

const proxy = awsLambdaFastify(app)
// or
// const proxy = awsLambdaFastify(app, { binaryMimeTypes: ['application/octet-stream'], serializeLambdaArguments: true /* default is false since v2.0.0 */ })

exports.handler = proxy
// or
// exports.handler = (event, context, callback) => proxy(event, context, callback)
// or
// exports.handler = (event, context) => proxy(event, context)
// or
// exports.handler = async (event, context) => proxy(event, context)
```

```javascript
// app.js
const fastify = require('fastify')

const app = fastify()
app.get('/', (request, reply) => reply.send({ hello: 'world' }))

if (require.main === module) {
  // called directly, i.e. "node app"
  app.listen({ port: 3000 }, (err) => {
    if (err) {
      console.error(err)
      process.exit(1)
    }
    console.log('server listening on 3000')
  })
} else {
  // required as a module => executed on AWS Lambda
  module.exports = app
}
```

When executed in your Lambda function, we don't need to listen on a specific port, so we just export the app in this case. The lambda.js file will use this export.

When you execute your Fastify application as usual, i.e. `node app.js` (detected via `require.main === module`), you can listen on your port as normal, so you can still run your Fastify application locally.

The original Lambda event and context are passed via the Fastify request and can be used like this:

```javascript
app.get('/', (request, reply) => {
  const event = request.awsLambda.event
  const context = request.awsLambda.context
  // ...
})
```

If you don't need this, you can disable it by setting the `decorateRequest` option to `false`.

Alternatively, if you set the `serializeLambdaArguments` option to `true`, the original Lambda event and context are passed via headers and can be read like this:

```javascript
app.get('/', (request, reply) => {
  const event = JSON.parse(decodeURIComponent(request.headers['x-apigateway-event']))
  const context = JSON.parse(decodeURIComponent(request.headers['x-apigateway-context']))
  // ...
})
```
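The header values read above are URL-encoded JSON. A minimal sketch of that round trip follows; `encodeForHeader` and `decodeFromHeader` are hypothetical names, and the encoding side is assumed to be the mirror image of the decoding shown above rather than copied from the library. Percent-encoding keeps the value within the plain ASCII characters that are safe in an HTTP header, even when the event contains spaces, non-ASCII text, or newlines.

```javascript
// Hypothetical helpers mirroring the decoding shown above.
function encodeForHeader (value) {
  // JSON first, then percent-encode so the result is plain ASCII.
  return encodeURIComponent(JSON.stringify(value))
}

function decodeFromHeader (headerValue) {
  return JSON.parse(decodeURIComponent(headerValue))
}

// A trimmed-down, made-up event for illustration.
const sampleEvent = { rawPath: '/', headers: { 'user-agent': 'curl/8.0' }, body: 'héllo\nworld' }
const headerValue = encodeForHeader(sampleEvent)
const decodedEvent = decodeFromHeader(headerValue)
```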

Since AWS Lambda supports ECMAScript (ES) modules starting with the Node.js 14 runtime, you can lower cold start latency when using Provisioned Concurrency, thanks to top-level await.

To take advantage of this, call the `fastify.ready()` function at module level, outside the Lambda handler function, like this:

```javascript
import awsLambdaFastify from '@fastify/aws-lambda'
import app from './app.js'

export const handler = awsLambdaFastify(app)
await app.ready() // needs to be placed after awsLambdaFastify call because of the decoration: https://github.com/fastify/aws-lambda-fastify/blob/main/index.js#L9
```

Here you can find the appropriate issue discussing this feature.

Response streaming with `payloadAsStream`:

```javascript
import awsLambdaFastify from '@fastify/aws-lambda'
import { pipeline } from 'node:stream/promises'
import app from './app.js'

const proxy = awsLambdaFastify(app, { payloadAsStream: true })

// `awslambda` is a global provided by the AWS Lambda Node.js runtime
export const handler = awslambda.streamifyResponse(async (event, responseStream, context) => {
  const { meta, stream } = await proxy(event, context)
  // attach the status code and headers before the body is written
  responseStream = awslambda.HttpResponseStream.from(responseStream, meta)
  await pipeline(stream, responseStream)
})

await app.ready() // https://github.com/fastify/aws-lambda-fastify/issues/89
```

Here you can find the appropriate issue discussing this feature.
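Outside Lambda the `awslambda` global used in the streaming example does not exist, so for a quick local check you may want to buffer the streamed response instead. The helper below is a hypothetical sketch, not part of @fastify/aws-lambda; it assumes only what the streaming example relies on, namely that with `payloadAsStream: true` the proxy resolves to `{ meta, stream }`, where `stream` is a readable stream and `meta` holds the status code and headers (the exact `meta` fields here are an assumption).

```javascript
const { Readable } = require('node:stream')

// Hypothetical local-testing helper: buffers the { meta, stream } pair
// into a single API Gateway style response object.
async function drainStreamingResult ({ meta, stream }) {
  const chunks = []
  // Readable streams are async iterable, so chunks are collected in order.
  for await (const chunk of stream) {
    chunks.push(Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk))
  }
  return { ...meta, body: Buffer.concat(chunks).toString('utf8') }
}

// Stand-in for `await proxy(event, context)`; the meta fields are assumed.
const fakeResult = {
  meta: { statusCode: 200, headers: { 'content-type': 'application/json' } },
  stream: Readable.from(['{"hello":', '"world"}'])
}
```

Buffering defeats the purpose of streaming, so a helper like this is only meant for tests or local debugging.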

```text
@fastify/aws-lambda (decorateRequest : false) x 56,892 ops/sec ±3.73% (79 runs sampled)
@fastify/aws-lambda x 56,571 ops/sec ±3.52% (82 runs sampled)
@fastify/aws-lambda (serializeLambdaArguments : true) x 56,499 ops/sec ±3.56% (76 runs sampled)
serverless-http x 45,867 ops/sec ±4.42% (83 runs sampled)
aws-serverless-fastify x 17,937 ops/sec ±1.83% (86 runs sampled)
aws-serverless-express x 16,647 ops/sec ±2.88% (87 runs sampled)
Fastest is @fastify/aws-lambda (decorateRequest : false), @fastify/aws-lambda
```
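To put the benchmark numbers in perspective, the snippet below divides each result by the aws-serverless-express baseline. The figures are copied from the benchmark output; keep in mind that the error margins (roughly ±2% to ±4.5%) are larger than the gaps between the three @fastify/aws-lambda variants, so those differences are within measurement noise.

```javascript
// ops/sec values copied from the benchmark output above.
const opsPerSec = {
  '@fastify/aws-lambda (decorateRequest : false)': 56892,
  '@fastify/aws-lambda': 56571,
  '@fastify/aws-lambda (serializeLambdaArguments : true)': 56499,
  'serverless-http': 45867,
  'aws-serverless-fastify': 17937,
  'aws-serverless-express': 16647
}

const baseline = opsPerSec['aws-serverless-express']

// Throughput of each library relative to aws-serverless-express.
const speedups = Object.fromEntries(
  Object.entries(opsPerSec).map(([name, ops]) => [name, ops / baseline])
)

for (const [name, ratio] of Object.entries(speedups)) {
  console.log(`${name}: ${ratio.toFixed(2)}x`)
}
```

By this measure the @fastify/aws-lambda variants reach roughly 3.4 times the throughput of aws-serverless-express in this benchmark, and about 1.2 times that of serverless-http.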

The logos displayed on this page are the property of their respective organisations and are not distributed under the same license as @fastify/aws-lambda (MIT).

FAQs

The npm package @fastify/aws-lambda receives a total of 77,815 weekly downloads, so its popularity is classified as popular.

We found that @fastify/aws-lambda demonstrates a healthy version release cadence and project activity, because the last version was released less than a year ago. It has 18 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

A surge of AI-generated vulnerability reports has pushed open source maintainers to rethink bug bounties and tighten security disclosure processes.

Product

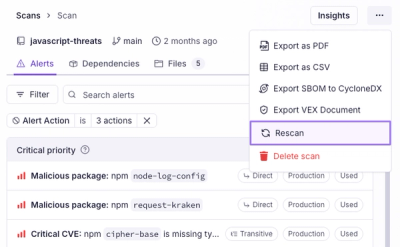

Scan results now load faster and remain consistent over time, with stable URLs and on-demand rescans for fresh security data.

Product

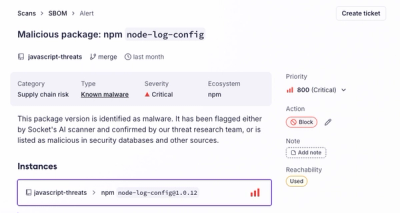

Socket's new Alert Details page is designed to surface more context, with a clearer layout, reachability dependency chains, and structured review.