
@frontapp/memcache-client


NodeJS memcached client with the most efficient ASCII protocol parser.

Primarily developed to be used at @WalmartLabs to power the http://www.walmart.com eCommerce site.

Support for string, numeric, and JSON values.

$ npm i memcache-client --save

const MemcacheClient = require("memcache-client");
const expect = require("chai").expect;
const server = "localhost:11211";

// create a normal client
const client = new MemcacheClient({ server });

// Create a client that ignores NOT_STORED response (for McRouter AllAsync mode)
const mrClient = new MemcacheClient({ server, ignoreNotStored: true });

// You can specify maxConnections by using an object for server
// Default maxConnections is 1
const mClient = new MemcacheClient({ server: { server, maxConnections: 5 } });

// with callback
client.set("key", "data", (err, r) => { expect(r).to.deep.equal(["STORED"]); });
client.get("key", (err, data) => { expect(data.value).to.equal("data"); });

// with promise
client.set("key", "data").then((r) => expect(r).to.deep.equal(["STORED"]));
client.get("key").then((data) => expect(data.value).to.equal("data"));

// concurrency using promise
Promise.all([client.set("key1", "data1"), client.set("key2", "data2")])
  .then((r) => expect(r).to.deep.equal([["STORED"], ["STORED"]]));
Promise.all([client.get("key1"), client.get("key2")])
  .then((r) => {
    expect(r[0].value).to.equal("data1");
    expect(r[1].value).to.equal("data2");
  });

// get multiple keys
client.get(["key1", "key2"]).then((results) => {
  expect(results["key1"].value).to.equal("data1");
  expect(results["key2"].value).to.equal("data2");
});

// gets and cas
client.gets("key1").then((v) => client.cas("key1", "casData", { casUniq: v.casUniq }));

// enable compression (if data size >= 100 bytes)
const data = Buffer.alloc(500);
client.set("key", data, { compress: true }).then((r) => expect(r).to.deep.equal(["STORED"]));

// fire and forget
client.set("key", data, { noreply: true });

// send any arbitrary command (\r\n will be appended automatically)
client.cmd("stats").then((r) => { console.log(r.STAT); });
client.set("foo", "10", { noreply: true });
client.cmd("incr foo 5").then((v) => expect(+v).to.equal(15));

// you can also send an arbitrary command with the noreply option (noreply will be appended automatically)
client.cmd("incr foo 5", { noreply: true });

// send any arbitrary data (remember \r\n)
client.send("set foo 0 0 5\r\nhello\r\n").then((r) => expect(r).to.deep.equal(["STORED"]));

All commands take an optional callback. If it's not provided, they return a Promise.

client.get(key, [callback]) or client.get([key1, key2], [callback])
client.gets(key, [callback]) or client.gets([key1, key2], [callback])
client.set(key, data, [options], [callback])
client.add(key, data, [options], [callback])
client.replace(key, data, [options], [callback])
client.append(key, data, [options], [callback])
client.prepend(key, data, [options], [callback])
client.cas(key, data, options, [callback])
client.delete(key, [options], [callback])
client.incr(key, value, [options], [callback])
client.decr(key, value, [options], [callback])
client.touch(key, exptime, [options], [callback])
client.version([callback])

For all store commands (set, add, replace, append, prepend, and cas), the data can be a string, a number, or a JSON object.
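To illustrate the optional-callback convention, here is a minimal sketch of the pattern in plain Node.js. The helpers callbackOrPromise and fakeSet, and the in-memory Map standing in for a memcached server, are hypothetical names for illustration only. This is not how memcache-client is implemented internally.

```javascript
// Hypothetical helper: if a callback is given, report the result through it
// (node-style err-first) and return undefined; otherwise return the Promise.
function callbackOrPromise(promise, callback) {
  if (typeof callback !== "function") {
    return promise;
  }
  promise.then(
    (result) => callback(null, result),
    (err) => callback(err)
  );
  return undefined;
}

// Hypothetical store command written in that style, backed by a Map.
const fakeStore = new Map();

function fakeSet(key, data, options, callback) {
  if (typeof options === "function") {
    // allow fakeSet(key, data, callback) with options omitted
    callback = options;
    options = {};
  }
  const p = Promise.resolve().then(() => {
    fakeStore.set(key, data);
    return ["STORED"];
  });
  return callbackOrPromise(p, callback);
}
```

Using then(onFulfilled, onRejected) rather than then().catch() keeps a callback that throws from being invoked a second time with its own error.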

The client constructor takes the following values in options.

const options = {
  server: { server: "host:port", maxConnections: 3 },
  ignoreNotStored: true, // ignore NOT_STORED response
  lifetime: 100, // TTL 100 seconds
  cmdTimeout: 3000, // command timeout in milliseconds
  connectTimeout: 8000, // connect to server timeout in ms
  compressor: require("custom-compressor"),
  logger: require("./custom-logger")
};

const client = new MemcacheClient(options);

server - required. A string in host:port format, or an object: { server: "host:port", maxConnections: 3 }. Default maxConnections is 1.

ignoreNotStored - optional. If set to true, the client will not treat a NOT_STORED reply from any store command as an error. Use this for Mcrouter AllAsyncRoute mode.
lifetime - optional. Your cache TTL in seconds to use for all entries. DEFAULT: 60 seconds.
cmdTimeout - optional. Command timeout in milliseconds. DEFAULT: 5000 ms.

connectTimeout - optional. The client's own timeout, in milliseconds, for connecting to the server. It's disabled if set to 0. DEFAULT: 0. When it triggers, the error has the field connecting set to true.
keepDangleSocket - optional. After connectTimeout triggers, do not destroy the socket but keep listening for errors on it. DEFAULT: false
dangleSocketWaitTimeout - optional. How long to wait for errors on a dangling socket before destroying it. DEFAULT: 5 minutes (300000 milliseconds)
compressor - optional. A custom compressor for compressing the data. See data compression for more details.
logger - optional. A custom logger like this:

module.exports = {
  debug: (msg) => console.log(msg),
  info: (msg) => console.log(msg),
  warn: (msg) => console.warn(msg),
  error: (msg) => console.error(msg)
};
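As a compact summary of the documented defaults, here is a minimal sketch of how constructor options could be normalized. The names DEFAULTS and resolveOptions are hypothetical, the ignoreNotStored default of false is an assumption inferred from the option's description, and this is not the library's actual code.

```javascript
// Hypothetical normalizer applying the documented defaults.
const DEFAULTS = {
  ignoreNotStored: false, // assumed: NOT_STORED is treated as an error by default
  lifetime: 60, // seconds
  cmdTimeout: 5000, // milliseconds
  connectTimeout: 0, // milliseconds; 0 disables the custom connect timeout
  keepDangleSocket: false,
  dangleSocketWaitTimeout: 5 * 60 * 1000 // 5 minutes = 300000 ms
};

function resolveOptions(options) {
  if (!options || options.server === undefined) {
    throw new Error("the server option is required");
  }
  let server = options.server;
  if (typeof server === "string") {
    // "host:port" form
    server = { server, maxConnections: 1 };
  } else if (!server.servers) {
    // { server, maxConnections } form; maxConnections defaults to 1
    server = { maxConnections: 1, ...server };
  }
  // the multi-server { servers, config } form is passed through unchanged
  return { ...DEFAULTS, ...options, server };
}
```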

connectTimeout

Note that the connectTimeout option is a custom timeout this client adds. It preempts the system's connect timeout, for which you typically get back a connect ETIMEDOUT error.

NodeJS offers no way to change the system's connect timeout, which is usually fairly long, so this option allows you to set a shorter timeout. When it triggers, the client shuts down the connection, destroys the socket, and rejects with an error. The error's message is "connect timeout", and the error has the field connecting set to true.

If you want to let the system's connect timeout take effect, set this option to 0 to disable it completely, or set it to a high value such as 10 minutes in milliseconds (600000).
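The timeout behavior described above can be sketched as a Promise race between a socket's "connect" event and a timer. The function connectWithTimeout is a hypothetical name, and the connect factory argument (anything returning a net.Socket-like EventEmitter with destroy()) is an assumption made so the logic can run without a memcached server. This is not the client's actual implementation.

```javascript
// Hypothetical sketch of a custom connect timeout. `connect` returns a
// net.Socket-like object (an EventEmitter with destroy()).
function connectWithTimeout(connect, connectTimeout) {
  return new Promise((resolve, reject) => {
    const socket = connect();
    let timer = null;

    function onConnect() {
      clearTimeout(timer);
      socket.removeListener("error", onError);
      resolve(socket);
    }

    function onError(err) {
      // e.g. the system's own ETIMEDOUT, if it arrives first
      clearTimeout(timer);
      socket.removeListener("connect", onConnect);
      reject(err);
    }

    if (connectTimeout > 0) {
      // 0 disables the custom timeout and leaves it to the system
      timer = setTimeout(() => {
        socket.removeListener("connect", onConnect);
        socket.removeListener("error", onError);
        socket.destroy();
        const err = new Error("connect timeout");
        err.connecting = true;
        reject(err);
      }, connectTimeout);
    }

    socket.once("connect", onConnect);
    socket.once("error", onError);
  });
}

// With a real socket (not run here):
// const net = require("net");
// connectWithTimeout(() => net.connect(11211, "localhost"), 3000);
```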

If you set a small custom connectTimeout and do not want to destroy the socket after it triggers, you will end up with a dangling socket.

To enable keeping the dangling socket, set the option keepDangleSocket to true.

The client will automatically add a new error handler to the socket in case the system's ETIMEDOUT eventually comes back. The client also sets a timeout to eventually destroy the socket in case the system never comes back with anything.

To control the dangling wait timeout, use the option dangleSocketWaitTimeout. It defaults to 5 minutes.

The client will emit the event dangle-wait with the following data:

{ type: "wait", socket }
{ type: "timeout" }
{ type: "error", err }

Generally it's better to just destroy the socket instead of leaving it dangling.
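A minimal sketch of the keepDangleSocket handling described above, emitting the three dangle-wait payloads. The function keepDangling is a hypothetical name, and the "client" and "socket" arguments are assumed to be EventEmitters (the socket also having destroy()). This illustrates the documented behavior rather than reproducing the library's code.

```javascript
// Hypothetical sketch: keep a dangling socket alive with an error handler and
// destroy it after dangleSocketWaitTimeout if nothing comes back.
function keepDangling(client, socket, dangleSocketWaitTimeout) {
  let done = false;

  const timer = setTimeout(() => {
    if (done) return;
    done = true;
    socket.destroy();
    client.emit("dangle-wait", { type: "timeout" });
  }, dangleSocketWaitTimeout);

  // Stays attached so late errors on the destroyed socket are swallowed.
  socket.on("error", (err) => {
    if (done) return;
    done = true;
    clearTimeout(timer);
    socket.destroy();
    client.emit("dangle-wait", { type: "error", err });
  });

  client.emit("dangle-wait", { type: "wait", socket });
}
```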

If you have multiple redundant servers, you can pass them to the client with the server option:

{
  server: {
    servers: [
      {
        server: "name1.domain.com:11211",
        maxConnections: 3
      },
      {
        server: "name2.domain.com:11211",
        maxConnections: 3
      }
    ],
    config: {
      retryFailedServerInterval: 1000, // milliseconds - how often to check failed servers
      failedServerOutTime: 30000, // (ms) how long a failed server should be out before retrying it
      keepLastServer: false
    }
  }
}
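To make the failover options concrete, here is a minimal sketch of failed-server bookkeeping driven by failedServerOutTime and keepLastServer. ServerPool, markFailed, and available are hypothetical names, and the injectable clock is an assumption for testability. Failed servers are re-checked lazily whenever available() is called, which stands in for the periodic retryFailedServerInterval check. This is not the library's implementation.

```javascript
// Hypothetical failed-server bookkeeping for a redundant server list.
class ServerPool {
  constructor(servers, config = {}, now = Date.now) {
    this.servers = servers.map((s) => s.server);
    this.failedServerOutTime = config.failedServerOutTime ?? 60000; // ms
    this.keepLastServer = config.keepLastServer ?? true;
    this.failedAt = new Map(); // server -> time it was marked failed
    this.now = now;
  }

  // Servers usable right now; failed ones come back after their out time.
  available() {
    const t = this.now();
    return this.servers.filter((s) => {
      const failedTime = this.failedAt.get(s);
      if (failedTime === undefined) return true;
      if (t - failedTime >= this.failedServerOutTime) {
        this.failedAt.delete(s); // out time elapsed: retry this server
        return true;
      }
      return false;
    });
  }

  // Returns false when the server is kept because it is the last one left.
  markFailed(server) {
    const healthy = this.available();
    if (this.keepLastServer && healthy.length === 1 && healthy[0] === server) {
      return false;
    }
    this.failedAt.set(server, this.now());
    return true;
  }
}
```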

You can also pass in server.config with the following options:

retryFailedServerInterval - (ms) how often to check failed servers. Default 10000 ms (10 secs).
failedServerOutTime - (ms) how long a failed server should be out before retrying it. Default 60000 ms (1 min).
keepLastServer - (boolean) Keep at least one server even if it failed connection. Default true.

Data Compression

The client supports automatic compression/decompression of the data you set. It's turned off by default.

To enable this, you need to:

Provide a compressor
Set the compress flag when calling the store commands

By default, the client is modeled to use node-zstd version 2's APIs. Specifically, it requires a compressor with these two methods:

compressSync(value)
decompressSync(value)

Both must take and return Buffer data.

If you just add node-zstd version 2 to your dependencies, then you can start setting the compress flag when calling the store commands to enable compression.

If you want to use another major version of node-zstd or another compressor that doesn't offer the two APIs expected above, then you need to create a wrapper compressor and pass it to the client constructor.
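A wrapper compressor only needs to expose compressSync and decompressSync with Buffer input and output. As an example, this sketch swaps in Node's built-in zlib gzip in place of node-zstd; the name gzipCompressor is hypothetical, and gzip is chosen purely because it ships with Node.

```javascript
const zlib = require("zlib");

// Wrapper exposing the two methods the client expects.
// Both take a Buffer and return a Buffer.
const gzipCompressor = {
  compressSync(value) {
    return zlib.gzipSync(value);
  },
  decompressSync(value) {
    return zlib.gunzipSync(value);
  }
};

// Passing it to the client constructor (not run here):
// const client = new MemcacheClient({ server: "localhost:11211", compressor: gzipCompressor });
```

Whichever compressor you choose, the client must be able to decompress what it reads back, so every client sharing a cache should be configured with the same compressor.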

noreply

Almost all commands take a noreply field in options. If it is set to true, the command is fire-and-forget for the memcached server.

Obviously this doesn't apply to commands like get and gets, which exist to retrieve from the server.
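At the protocol level, noreply is a trailing word on the command line, after which the server sends no response. This is why retrieval commands cannot use it. The sketch below is a minimal illustration with a hypothetical formatCommand helper, not the client's own code.

```javascript
// Hypothetical helper: append "noreply" and the \r\n terminator to a
// memcached ASCII command line.
const RETRIEVAL_COMMANDS = new Set(["get", "gets"]);

function formatCommand(line, options = {}) {
  const name = line.split(" ", 1)[0];
  if (options.noreply) {
    if (RETRIEVAL_COMMANDS.has(name)) {
      // get/gets exist to return data, so a no-reply form makes no sense
      throw new Error(`${name} cannot be sent with noreply`);
    }
    return `${line} noreply\r\n`;
  }
  return `${line}\r\n`;
}
```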

lifetime and compress

All store commands (set, add, replace, append, prepend, and cas) take:

lifetime - the TTL in seconds for the entry. If it is not set, the client uses options.lifetime from the constructor, or 60 seconds.
compress - if set to true, any data with size >= 100 bytes is compressed.
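The lifetime fallback chain and the 100-byte compression cutoff can be expressed as a small decision function. The names resolveStoreParams and byteSize are hypothetical. Measuring strings as UTF-8 and numbers and objects as JSON text is an assumption about serialization, not something the documentation states.

```javascript
// Hypothetical helper applying the per-command lifetime and compress rules.
const COMPRESS_THRESHOLD = 100; // bytes, per the documented cutoff

function byteSize(data) {
  if (Buffer.isBuffer(data)) return data.length;
  // assumed: strings are sent as UTF-8, numbers and objects as JSON text
  const text = typeof data === "string" ? data : JSON.stringify(data);
  return Buffer.byteLength(text, "utf8");
}

function resolveStoreParams(data, options = {}, clientOptions = {}) {
  // TTL: per-command lifetime, else client options.lifetime, else 60 seconds
  const exptime = options.lifetime ?? clientOptions.lifetime ?? 60;
  const size = byteSize(data);
  // compress only when requested and the value is at least 100 bytes
  const compress = options.compress === true && size >= COMPRESS_THRESHOLD;
  return { exptime, size, compress };
}
```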

casUniq

For the cas command, options must contain a casUniq value that you received from a gets command you called earlier.
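On the wire, casUniq becomes the last numeric field of the memcached ASCII cas command: cas <key> <flags> <exptime> <bytes> <cas unique> [noreply], followed by the data block. The builder below is a hypothetical sketch of that format. It always uses flags 0, which is an assumption, since the client's real flag encoding for value types and compression is not documented here.

```javascript
// Hypothetical builder for a raw memcached ASCII "cas" command.
function buildCasCommand(key, value, options) {
  if (!options || options.casUniq === undefined) {
    throw new Error("cas requires options.casUniq from a previous gets");
  }
  const text = typeof value === "string" ? value : JSON.stringify(value);
  const bytes = Buffer.byteLength(text, "utf8"); // <bytes> counts bytes, not chars
  const flags = 0; // assumed: no type/compression flags in this sketch
  const exptime = options.lifetime ?? 60;
  const parts = ["cas", key, flags, exptime, bytes, options.casUniq];
  if (options.noreply) parts.push("noreply");
  return `${parts.join(" ")}\r\n${text}\r\n`;
}
```

If another client modified the key after your gets, the server replies EXISTS instead of STORED, and the value is left unchanged.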

client.send(data, [options], [callback])
client.xsend(data, [options])
client.cmd(data, [options], [callback])
client.store(cmd, key, value, [options], [callback])
client.retrieve(cmd, key, [options], [callback])
client.xretrieve(cmd, key)

Apache-2.0 © Joel Chen


Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Socket CEO Feross Aboukhadijeh discusses the recent npm supply chain attacks on PodRocket, covering novel attack vectors and how developers can protect themselves.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

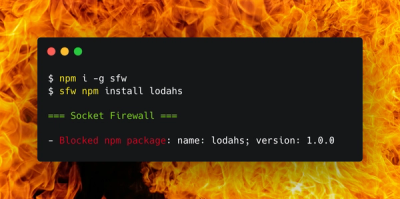

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.