
@gitlab/gitlab-ai-provider


A comprehensive TypeScript provider for integrating GitLab Duo AI capabilities with the Vercel AI SDK. This package enables seamless access to GitLab's AI-powered features including chat, agentic workflows, and tool calling through a unified interface.

```bash
npm install @gitlab/gitlab-ai-provider
npm install @ai-sdk/provider @ai-sdk/provider-utils
```

```typescript
import { createGitLab } from '@gitlab/gitlab-ai-provider';
import { generateText } from 'ai';

const gitlab = createGitLab({
  apiKey: process.env.GITLAB_TOKEN,
  instanceUrl: 'https://gitlab.com', // optional, defaults to gitlab.com
});

// All equivalent ways to create a chat model:
const model = gitlab('duo-chat'); // callable provider
const model2 = gitlab.chat('duo-chat'); // .chat() alias (recommended)
const model3 = gitlab.languageModel('duo-chat'); // explicit method

const { text } = await generateText({
  model: gitlab.chat('duo-chat'),
  prompt: 'Explain how to create a merge request in GitLab',
});

console.log(text);
```

```typescript
import { createGitLab } from '@gitlab/gitlab-ai-provider';
import { generateText } from 'ai';

const gitlab = createGitLab({
  apiKey: process.env.GITLAB_TOKEN,
});

// Use agentic model for native tool calling support
const model = gitlab.agenticChat('duo-chat', {
  providerModel: 'claude-sonnet-4-20250514', // backend model override (see GitLabAgenticOptions)
  maxTokens: 8192,
});

const { text } = await generateText({
  model,
  prompt: 'List all open merge requests in my project',
  tools: {
    // Your custom tools here
  },
});
```

The provider automatically maps specific model IDs to their corresponding provider models (Anthropic or OpenAI) and routes requests to the appropriate AI Gateway proxy:

```typescript
import { createGitLab } from '@gitlab/gitlab-ai-provider';
import { generateText } from 'ai';

const gitlab = createGitLab({
  apiKey: process.env.GITLAB_TOKEN,
});

// Anthropic Models (Claude)
const opusModel = gitlab.agenticChat('duo-chat-opus-4-5');
// Automatically uses: claude-opus-4-5-20251101
const sonnetModel = gitlab.agenticChat('duo-chat-sonnet-4-5');
// Automatically uses: claude-sonnet-4-5-20250929
const haikuModel = gitlab.agenticChat('duo-chat-haiku-4-5');
// Automatically uses: claude-haiku-4-5-20251001

// OpenAI Models (GPT-5)
const gpt5Model = gitlab.agenticChat('duo-chat-gpt-5-1');
// Automatically uses: gpt-5.1-2025-11-13
const gpt5MiniModel = gitlab.agenticChat('duo-chat-gpt-5-mini');
// Automatically uses: gpt-5-mini-2025-08-07
const codexModel = gitlab.agenticChat('duo-chat-gpt-5-codex');
// Automatically uses: gpt-5-codex

// You can still override with explicit providerModel option
const customModel = gitlab.agenticChat('duo-chat-opus-4-5', {
  providerModel: 'claude-sonnet-4-5-20250929', // Override mapping
});
```

Available Model Mappings:

| Model ID | Provider | Backend Model |
|---|---|---|
| duo-chat-opus-4-5 | Anthropic | claude-opus-4-5-20251101 |
| duo-chat-sonnet-4-5 | Anthropic | claude-sonnet-4-5-20250929 |
| duo-chat-haiku-4-5 | Anthropic | claude-haiku-4-5-20251001 |
| duo-chat-gpt-5-1 | OpenAI | gpt-5.1-2025-11-13 |
| duo-chat-gpt-5-mini | OpenAI | gpt-5-mini-2025-08-07 |
| duo-chat-gpt-5-codex | OpenAI | gpt-5-codex |
| duo-chat-gpt-5-2-codex | OpenAI | gpt-5.2-codex |

For unmapped Anthropic model IDs, the provider defaults to claude-sonnet-4-5-20250929.
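The mapping table works like a lookup with a fallback. The sketch below shows one way to express that in TypeScript. `MODEL_MAPPINGS` and `resolveBackendModel` are hypothetical names, not the package's exports. The sketch assumes that any unmapped ID is treated as an Anthropic model and that an explicit `providerModel` takes precedence over the table.

```typescript
// Hypothetical sketch of model-ID resolution; not the package's implementation.
type Provider = 'anthropic' | 'openai';

interface Mapping {
  provider: Provider;
  backendModel: string;
}

// Values copied from the "Available Model Mappings" table.
const MODEL_MAPPINGS: Record<string, Mapping> = {
  'duo-chat-opus-4-5': { provider: 'anthropic', backendModel: 'claude-opus-4-5-20251101' },
  'duo-chat-sonnet-4-5': { provider: 'anthropic', backendModel: 'claude-sonnet-4-5-20250929' },
  'duo-chat-haiku-4-5': { provider: 'anthropic', backendModel: 'claude-haiku-4-5-20251001' },
  'duo-chat-gpt-5-1': { provider: 'openai', backendModel: 'gpt-5.1-2025-11-13' },
  'duo-chat-gpt-5-mini': { provider: 'openai', backendModel: 'gpt-5-mini-2025-08-07' },
  'duo-chat-gpt-5-codex': { provider: 'openai', backendModel: 'gpt-5-codex' },
  'duo-chat-gpt-5-2-codex': { provider: 'openai', backendModel: 'gpt-5.2-codex' },
};

// Documented default for unmapped Anthropic model IDs.
const DEFAULT_ANTHROPIC_MODEL = 'claude-sonnet-4-5-20250929';

function resolveBackendModel(modelId: string, providerModel?: string): Mapping {
  // Assumption: unmapped IDs fall back to the Anthropic default.
  const mapping: Mapping = MODEL_MAPPINGS[modelId] ?? {
    provider: 'anthropic',
    backendModel: DEFAULT_ANTHROPIC_MODEL,
  };
  // An explicit providerModel replaces the mapped backend model but keeps the provider.
  return providerModel ? { ...mapping, backendModel: providerModel } : mapping;
}

console.log(resolveBackendModel('duo-chat-gpt-5-mini').backendModel); // gpt-5-mini-2025-08-07
console.log(resolveBackendModel('duo-chat').backendModel); // claude-sonnet-4-5-20250929
```

A plain object keeps the table and the code in one place. Adding a model becomes a one-line change.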

The provider supports OpenAI GPT-5 models through GitLab's AI Gateway proxy. OpenAI models are automatically detected based on the model ID and routed to the appropriate proxy endpoint.

```typescript
import { createGitLab } from '@gitlab/gitlab-ai-provider';
import { generateText } from 'ai';

const gitlab = createGitLab({
  apiKey: process.env.GITLAB_TOKEN,
});

// GPT-5.1 - Most capable model
const { text } = await generateText({
  model: gitlab.agenticChat('duo-chat-gpt-5-1'),
  prompt: 'Explain GitLab CI/CD pipelines',
});

// GPT-5 Mini - Fast and efficient
const { text: quickResponse } = await generateText({
  model: gitlab.agenticChat('duo-chat-gpt-5-mini'),
  prompt: 'Summarize this code',
});

// GPT-5 Codex - Optimized for code
const { text: codeExplanation } = await generateText({
  model: gitlab.agenticChat('duo-chat-gpt-5-codex'),
  prompt: 'Refactor this function for better performance',
});
```
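The automatic routing can be pictured as choosing a proxy base URL from the model ID. This sketch makes two assumptions:

- OpenAI models are detected by the `duo-chat-gpt-` prefix, a guess inferred from the model IDs in the mapping table.
- `detectProvider` and `proxyBaseUrl` are hypothetical helpers.

The proxy paths match the Anthropic and OpenAI proxy URLs listed in this README. The default gateway matches the `aiGatewayUrl` default.

```typescript
// Hypothetical sketch of provider detection and proxy routing.
type Provider = 'anthropic' | 'openai';

// Assumption: OpenAI-backed Duo model IDs share the "duo-chat-gpt-" prefix.
function detectProvider(modelId: string): Provider {
  return modelId.startsWith('duo-chat-gpt-') ? 'openai' : 'anthropic';
}

// Builds the proxy endpoint for a model under the given AI Gateway base URL.
function proxyBaseUrl(modelId: string, aiGatewayUrl = 'https://cloud.gitlab.com'): string {
  const base = aiGatewayUrl.replace(/\/+$/, ''); // drop trailing slashes
  return `${base}/ai/v1/proxy/${detectProvider(modelId)}/`;
}

console.log(proxyBaseUrl('duo-chat-gpt-5-mini')); // https://cloud.gitlab.com/ai/v1/proxy/openai/
console.log(proxyBaseUrl('duo-chat-opus-4-5')); // https://cloud.gitlab.com/ai/v1/proxy/anthropic/
```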

OpenAI Models with Tool Calling:

```typescript
import { createGitLab } from '@gitlab/gitlab-ai-provider';
import { generateText, tool } from 'ai';
import { z } from 'zod';

const gitlab = createGitLab({
  apiKey: process.env.GITLAB_TOKEN,
});

const { text, toolCalls } = await generateText({
  model: gitlab.agenticChat('duo-chat-gpt-5-1', {
    maxTokens: 4096,
  }),
  prompt: 'What is the weather in San Francisco?',
  tools: {
    getWeather: tool({
      description: 'Get the weather for a location',
      // AI SDK 5 (LanguageModelV2) names this field `inputSchema`; AI SDK 4 used `parameters`
      inputSchema: z.object({
        location: z.string().describe('The city name'),
      }),
      execute: async ({ location }) => {
        // Stubbed result for illustration
        return { temperature: 72, condition: 'sunny', location };
      },
    }),
  },
});
```

You can pass feature flags to enable experimental features in GitLab's AI Gateway proxy:

```typescript
import { createGitLab } from '@gitlab/gitlab-ai-provider';

// Option 1: Set feature flags globally for all agentic chat models
const gitlab = createGitLab({
  apiKey: process.env.GITLAB_TOKEN,
  featureFlags: {
    duo_agent_platform_agentic_chat: true,
    duo_agent_platform: true,
  },
});

const model = gitlab.agenticChat('duo-chat');

// Option 2: Set feature flags per model (overrides global flags)
const modelWithFlags = gitlab.agenticChat('duo-chat', {
  featureFlags: {
    duo_agent_platform_agentic_chat: true,
    duo_agent_platform: true,
    custom_feature_flag: false,
  },
});

// Option 3: Merge both (model-level flags take precedence)
const gitlab2 = createGitLab({
  featureFlags: {
    duo_agent_platform: true, // will be overridden
  },
});

const mergedModel = gitlab2.agenticChat('duo-chat', {
  featureFlags: {
    duo_agent_platform: false, // overrides provider-level
    duo_agent_platform_agentic_chat: true, // adds new flag
  },
});
```
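The precedence rule in Option 3 behaves like an object spread in which model-level flags are applied last. Below is a minimal sketch of that merge. It assumes a plain shallow merge, and `mergeFeatureFlags` is a hypothetical helper, not part of the package.

```typescript
type FeatureFlags = Record<string, boolean>;

// Shallow merge: any key present in modelFlags overwrites the same key from providerFlags.
function mergeFeatureFlags(providerFlags: FeatureFlags = {}, modelFlags: FeatureFlags = {}): FeatureFlags {
  return { ...providerFlags, ...modelFlags };
}

const merged = mergeFeatureFlags(
  { duo_agent_platform: true },
  { duo_agent_platform: false, duo_agent_platform_agentic_chat: true },
);
// merged.duo_agent_platform is false; merged.duo_agent_platform_agentic_chat is true
console.log(merged);
```

Explicitly setting a flag to `false` at the model level still overrides a provider-level `true`. This is why the per-model form is useful for switching a flag off on a single model.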

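Pass a Personal Access Token directly:

```typescript
const gitlab = createGitLab({
  apiKey: 'glpat-xxxxxxxxxxxxxxxxxxxx',
});
```

Or set the GITLAB_TOKEN environment variable:

```bash
export GITLAB_TOKEN=glpat-xxxxxxxxxxxxxxxxxxxx
```

```typescript
const gitlab = createGitLab(); // Automatically uses GITLAB_TOKEN
```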

The provider automatically detects and uses OpenCode authentication if available:

```typescript
const gitlab = createGitLab({
  instanceUrl: 'https://gitlab.com',
  // OAuth tokens are loaded from ~/.opencode/auth.json
});
```

To add custom headers to requests:

```typescript
const gitlab = createGitLab({
  apiKey: 'your-token',
  headers: {
    'X-Custom-Header': 'value',
  },
});
```

`createGitLab()` is the main provider factory. The provider it returns creates language models with different capabilities:

```typescript
interface GitLabProvider {
  (modelId: string): LanguageModelV2;
  languageModel(modelId: string): LanguageModelV2;
  agenticChat(modelId: string, options?: GitLabAgenticOptions): GitLabAgenticLanguageModel;
}
```
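The interface above describes a provider that can be called directly and also exposes methods. One common TypeScript pattern for that shape is `Object.assign` on a function. This sketch uses a stub `StubModel` type in place of `LanguageModelV2` and a hypothetical `createStubProvider`. It illustrates the pattern only and does not necessarily reflect how the package builds its provider.

```typescript
// Stand-in for LanguageModelV2 so the sketch runs without dependencies.
interface StubModel {
  modelId: string;
  kind: 'chat' | 'agentic';
}

interface StubProvider {
  (modelId: string): StubModel;
  languageModel(modelId: string): StubModel;
  agenticChat(modelId: string): StubModel;
}

function createStubProvider(): StubProvider {
  const languageModel = (modelId: string): StubModel => ({ modelId, kind: 'chat' });
  // Attach methods to a function so `provider(id)` and `provider.languageModel(id)` agree.
  return Object.assign((modelId: string) => languageModel(modelId), {
    languageModel,
    agenticChat: (modelId: string): StubModel => ({ modelId, kind: 'agentic' }),
  });
}

const provider = createStubProvider();
console.log(provider('duo-chat').kind); // chat
console.log(provider.agenticChat('duo-chat').kind); // agentic
```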

Provides native tool calling through GitLab's Anthropic proxy.

https://cloud.gitlab.com/ai/v1/proxy/anthropic/

Provides native tool calling through GitLab's OpenAI proxy.

https://cloud.gitlab.com/ai/v1/proxy/openai/

Automatically detects GitLab projects from git remotes.

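```typescript
import { GitLabProjectDetector } from '@gitlab/gitlab-ai-provider';

const token = process.env.GITLAB_TOKEN; // PAT or OAuth access token

const detector = new GitLabProjectDetector({
  instanceUrl: 'https://gitlab.com',
  getHeaders: () => ({ Authorization: `Bearer ${token}` }),
});

const project = await detector.detectProject(process.cwd());
// Returns: { id: 12345, path: 'group/project', namespaceId: 67890 }
```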
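Project detection starts from a git remote URL. As a rough illustration of that first step, the sketch below extracts a `group/project` path from common SSH and HTTPS remote formats for a given host. `parseProjectPath` is hypothetical. The real detector may handle more cases, such as SSH ports or custom users. The `id` and `namespaceId` in the result above presumably come from a GitLab API lookup, which this sketch omits.

```typescript
// Hypothetical helper: extracts "group/subgroup/project" from a git remote URL.
function parseProjectPath(remoteUrl: string, host = 'gitlab.com'): string | null {
  const url = remoteUrl.trim();
  let path = '';

  // SCP-like SSH form: git@gitlab.com:group/project.git
  const scp = url.match(/^[^@\s/]+@([^:\s/]+):(.+)$/);
  if (scp) {
    if (scp[1] !== host) return null;
    path = scp[2] ?? '';
  } else {
    // URL forms: https://gitlab.com/group/project.git or ssh://git@gitlab.com/group/project.git
    try {
      const parsed = new URL(url);
      if (parsed.hostname !== host) return null;
      path = parsed.pathname;
    } catch {
      return null; // not a remote format this sketch understands
    }
  }

  // Strip leading/trailing slashes and a trailing ".git".
  const cleaned = path.replace(/^\/+/, '').replace(/\/+$/, '').replace(/\.git$/, '');
  return cleaned.includes('/') ? cleaned : null;
}

console.log(parseProjectPath('git@gitlab.com:group/project.git')); // group/project
console.log(parseProjectPath('https://gitlab.com/group/sub/project.git')); // group/sub/project
```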

Caches project information with TTL.

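```typescript
import { GitLabProjectCache } from '@gitlab/gitlab-ai-provider';

const cache = new GitLabProjectCache(5 * 60 * 1000); // TTL: 5 minutes, in milliseconds
cache.set('key', project); // `project` as returned by detectProject() above
const cached = cache.get('key');
```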
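A TTL cache stores each value with an expiry time and treats stale entries as missing. The following is a minimal sketch of that idea, not the package's `GitLabProjectCache`:

- `TtlCache` is a hypothetical name.
- The TTL is in milliseconds, matching the `5 * 60 * 1000` example.
- The clock is injectable so expiry can be tested without waiting.

```typescript
// Minimal TTL cache sketch: entries expire ttlMs after they are set.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }

  get(key: string): V | undefined {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // evict stale entries lazily on read
      return undefined;
    }
    return entry.value;
  }
}

// Usage with a fake clock:
let fakeNow = 0;
const ttlCache = new TtlCache<string>(5 * 60 * 1000, () => fakeNow);
ttlCache.set('key', 'group/project');
console.log(ttlCache.get('key')); // group/project
fakeNow = 5 * 60 * 1000;
console.log(ttlCache.get('key')); // undefined
```

Lazy eviction on read keeps the sketch small. A long-lived process that writes many distinct keys might also want periodic cleanup.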

Manages OAuth token lifecycle.

```typescript
import { GitLabOAuthManager } from '@gitlab/gitlab-ai-provider';

const oauthManager = new GitLabOAuthManager();

// Exchange authorization code
const tokens = await oauthManager.exchangeAuthorizationCode({
  instanceUrl: 'https://gitlab.com',
  code: 'auth-code',
  codeVerifier: 'verifier',
});

// Refresh tokens
const refreshed = await oauthManager.refreshIfNeeded(tokens);
```
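The authorization-code exchange takes a `codeVerifier`, which points to the PKCE extension of OAuth 2.0 (RFC 7636). The sketch below shows how a client typically generates a verifier and its S256 challenge with Node's built-in `crypto` module. The helper names are hypothetical, and the package may generate these values differently or leave that to the caller.

```typescript
import { createHash, randomBytes } from 'node:crypto';

// RFC 7636: the verifier must be between 43 and 128 unreserved characters.
// 32 random bytes encode to exactly 43 base64url characters (no padding).
function createCodeVerifier(): string {
  return randomBytes(32).toString('base64url');
}

// S256 method: challenge = BASE64URL(SHA256(ASCII(verifier))), without padding.
function createCodeChallenge(verifier: string): string {
  return createHash('sha256').update(verifier, 'ascii').digest('base64url');
}

const verifier = createCodeVerifier();
const challenge = createCodeChallenge(verifier);
// Send `challenge` (with code_challenge_method=S256) in the authorization request,
// then pass `verifier` as codeVerifier when exchanging the authorization code.
console.log(verifier.length); // 43
```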

Manages direct access tokens for Anthropic proxy.

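```typescript
import { GitLabDirectAccessClient } from '@gitlab/gitlab-ai-provider';

const token = process.env.GITLAB_TOKEN; // PAT or OAuth access token

const client = new GitLabDirectAccessClient({
  instanceUrl: 'https://gitlab.com',
  getHeaders: () => ({ Authorization: `Bearer ${token}` }),
});

const directToken = await client.getDirectAccessToken();
// Returns: { token: 'xxx', headers: {...}, expiresAt: 123456 }
```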
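Both `refreshIfNeeded` and the direct access token's `expiresAt` field imply a freshness check before a token is reused. Below is a minimal sketch of such a check. It assumes `expiresAt` is a Unix timestamp in milliseconds, which the placeholder value above does not confirm, so convert first if the real value is in seconds. The 60-second safety margin and the `needsRefresh` helper are hypothetical.

```typescript
interface ExpiringToken {
  token: string;
  expiresAt: number; // assumed: Unix epoch milliseconds
}

// Treat a token as stale slightly before it actually expires, so a request
// started just before expiry does not fail in flight.
function needsRefresh(t: ExpiringToken, nowMs: number = Date.now(), skewMs = 60_000): boolean {
  return nowMs + skewMs >= t.expiresAt;
}

const sample: ExpiringToken = { token: 'xxx', expiresAt: 1_000_000 };
console.log(needsRefresh(sample, 900_000)); // false: expires in 100 s
console.log(needsRefresh(sample, 950_000)); // true: within the 60 s margin
```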

```typescript
interface GitLabProviderSettings {
  instanceUrl?: string; // Default: 'https://gitlab.com'
  apiKey?: string; // PAT or OAuth access token
  refreshToken?: string; // OAuth refresh token
  name?: string; // Provider name prefix
  headers?: Record<string, string>; // Custom headers
  fetch?: typeof fetch; // Custom fetch implementation
  aiGatewayUrl?: string; // AI Gateway URL (default: 'https://cloud.gitlab.com')
  featureFlags?: Record<string, boolean>; // Global feature flags for all agentic chat models
}
```

| Variable | Description | Default |
|---|---|---|
| GITLAB_TOKEN | GitLab Personal Access Token or OAuth token | - |
| GITLAB_AI_GATEWAY_URL | AI Gateway URL for Anthropic proxy | https://cloud.gitlab.com |
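The settings interface and the environment-variable table suggest a simple order for resolving configuration values. The sketch below assumes explicit settings take priority over environment variables, which in turn take priority over the documented defaults. `resolveSettings` is a hypothetical helper, and it ignores the OpenCode `auth.json` fallback described earlier.

```typescript
interface Settings {
  instanceUrl?: string;
  apiKey?: string;
  aiGatewayUrl?: string;
}

interface ResolvedSettings {
  instanceUrl: string;
  apiKey: string | undefined;
  aiGatewayUrl: string;
}

type Env = Record<string, string | undefined>;

// Assumed precedence: explicit setting, then environment variable, then documented default.
function resolveSettings(settings: Settings = {}, env: Env = {}): ResolvedSettings {
  return {
    instanceUrl: settings.instanceUrl ?? 'https://gitlab.com',
    apiKey: settings.apiKey ?? env['GITLAB_TOKEN'],
    aiGatewayUrl: settings.aiGatewayUrl ?? env['GITLAB_AI_GATEWAY_URL'] ?? 'https://cloud.gitlab.com',
  };
}

const resolved = resolveSettings({}, { GITLAB_TOKEN: 'glpat-example' });
console.log(resolved.apiKey); // glpat-example
console.log(resolved.aiGatewayUrl); // https://cloud.gitlab.com
```

In a real process you would pass `process.env` as the second argument. Taking it as a parameter keeps the function easy to test.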

```typescript
interface GitLabAgenticOptions {
  providerModel?: string; // Override the backend model (e.g., 'claude-sonnet-4-5-20250929' or 'gpt-5.1-2025-11-13')
  maxTokens?: number; // Default: 8192
  featureFlags?: Record<string, boolean>; // GitLab feature flags
}
```

Note: The providerModel option allows you to override the automatically mapped model. The provider will validate that the override is compatible with the model ID's provider (e.g., you cannot use an OpenAI model with a duo-chat-opus-* model ID).
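The compatibility check described in the note can be approximated by comparing model families. This sketch rests on two assumptions drawn from the mapping table:

- Model IDs are classified by the `duo-chat-gpt-` prefix.
- Backend models are classified by `gpt-` versus `claude-` prefixes.

The helpers are hypothetical, and the package's actual rules and error messages may differ.

```typescript
type Provider = 'anthropic' | 'openai';

// Assumption: provider of a Duo model ID, inferred from the mapping table's naming.
function providerForModelId(modelId: string): Provider {
  return modelId.startsWith('duo-chat-gpt-') ? 'openai' : 'anthropic';
}

// Assumption: provider of a backend model, inferred from its name; undefined if unknown.
function providerForBackendModel(model: string): Provider | undefined {
  if (model.startsWith('gpt-')) return 'openai';
  if (model.startsWith('claude-')) return 'anthropic';
  return undefined;
}

// Throws if the override does not belong to the same provider as the model ID.
function validateProviderModel(modelId: string, providerModel: string): void {
  const expected = providerForModelId(modelId);
  const actual = providerForBackendModel(providerModel);
  if (actual !== expected) {
    throw new Error(`providerModel "${providerModel}" is not a ${expected} model (model ID "${modelId}")`);
  }
}

validateProviderModel('duo-chat-opus-4-5', 'claude-sonnet-4-5-20250929'); // passes
// validateProviderModel('duo-chat-opus-4-5', 'gpt-5.1-2025-11-13'); // would throw
```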

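```typescript
import { createGitLab, GitLabError } from '@gitlab/gitlab-ai-provider';
import { generateText } from 'ai';

const gitlab = createGitLab();
const model = gitlab.chat('duo-chat');
const prompt = 'Explain how to create a merge request in GitLab';

try {
  const result = await generateText({ model, prompt });
  console.log(result.text);
} catch (error) {
  if (error instanceof GitLabError) {
    if (error.isAuthError()) {
      console.error('Authentication failed');
    } else if (error.isRateLimitError()) {
      console.error('Rate limit exceeded');
    } else if (error.isServerError()) {
      console.error('Server error:', error.statusCode);
    }
  }
}
```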
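The helper methods on `GitLabError` group failures by kind. The sketch below shows the conventional HTTP grouping: 401 and 403 for authentication, 429 for rate limiting, and 5xx for server errors. It adds a simple exponential backoff for the retryable cases. The status mapping is an assumption based on standard HTTP semantics, not a statement of how `GitLabError` classifies codes. `classifyStatus` and `retryDelayMs` are hypothetical.

```typescript
type ErrorKind = 'auth' | 'rate-limit' | 'server' | 'other';

// Conventional HTTP status grouping (assumed; may not match GitLabError exactly).
function classifyStatus(statusCode: number): ErrorKind {
  if (statusCode === 401 || statusCode === 403) return 'auth';
  if (statusCode === 429) return 'rate-limit';
  if (statusCode >= 500 && statusCode <= 599) return 'server';
  return 'other';
}

// Exponential backoff for retryable errors: 500 ms, 1 s, 2 s, ... capped at maxMs.
// Returns undefined when the error should not be retried.
function retryDelayMs(statusCode: number, attempt: number, baseMs = 500, maxMs = 8_000): number | undefined {
  const kind = classifyStatus(statusCode);
  if (kind !== 'rate-limit' && kind !== 'server') return undefined;
  return Math.min(maxMs, baseMs * 2 ** attempt);
}

console.log(classifyStatus(401)); // auth
console.log(retryDelayMs(429, 0)); // 500
console.log(retryDelayMs(503, 3)); // 4000
console.log(retryDelayMs(401, 0)); // undefined
```

Authentication errors are not retried, because repeating a request with the same bad token will not succeed.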

```bash
npm run build          # Build once
npm run build:watch    # Build in watch mode
npm test               # Run all tests
npm run test:watch     # Run tests in watch mode
npm run lint           # Lint code
npm run lint:fix       # Lint and auto-fix
npm run format         # Format code
npm run format:check   # Check formatting
npm run type-check     # TypeScript type checking
```

```
gitlab-ai-provider/
├── src/
│   ├── index.ts                            # Main exports
│   ├── gitlab-provider.ts                  # Provider factory
│   ├── gitlab-anthropic-language-model.ts  # Anthropic/Claude model
│   ├── gitlab-openai-language-model.ts     # OpenAI/GPT model
│   ├── model-mappings.ts                   # Model ID mappings
│   ├── gitlab-direct-access.ts             # Direct access tokens
│   ├── gitlab-oauth-manager.ts             # OAuth management
│   ├── gitlab-oauth-types.ts               # OAuth types
│   ├── gitlab-project-detector.ts          # Project detection
│   ├── gitlab-project-cache.ts             # Project caching
│   ├── gitlab-api-types.ts                 # API types
│   ├── gitlab-error.ts                     # Error handling
│   └── gitlab-workflow-debug.ts            # Debug logging
├── tests/                                  # Test files
├── dist/                                   # Build output
├── package.json
├── tsconfig.json
├── tsup.config.ts
└── vitest.config.ts
```

Contributions are welcome! Please see our Contributing Guide for detailed guidelines on:

Quick Start for Contributors:

Commit Messages: Use conventional commits format

```
feat(scope): add new feature
fix(scope): fix bug
docs(scope): update documentation
```

Code Quality: Ensure all checks pass

```bash
npm run lint
npm run type-check
npm test
```

Testing: Add tests for new features
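Commit headers in this format can be checked mechanically. Below is a minimal sketch of a header validator. The list of accepted types is an assumption: the Conventional Commits specification only requires `feat` and `fix`, and projects commonly add others such as `docs`. The project may enforce the format with its own tooling instead, and `isConventionalHeader` is hypothetical.

```typescript
// Accepted types: an assumption based on common Conventional Commits usage.
const COMMIT_TYPES = ['feat', 'fix', 'docs', 'style', 'refactor', 'perf', 'test', 'build', 'ci', 'chore', 'revert'];

// Matches "type(scope)!: description"; the scope and "!" are optional.
const HEADER_PATTERN = new RegExp(`^(${COMMIT_TYPES.join('|')})(\\([\\w.-]+\\))?!?: \\S.*$`);

function isConventionalHeader(message: string): boolean {
  const header = message.split('\n', 1)[0] ?? ''; // only the first line is the header
  return HEADER_PATTERN.test(header);
}

console.log(isConventionalHeader('feat(scope): add new feature')); // true
console.log(isConventionalHeader('Fixed a bug')); // false
```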

This project is built on top of:

Made with ❤️ for the OpenCode community

FAQs

GitLab Duo provider for Vercel AI SDK

The npm package @gitlab/gitlab-ai-provider receives a total of 22,765 weekly downloads. As such, its popularity is classified as popular.

We found that @gitlab/gitlab-ai-provider demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 6 open source maintainers collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

A surge of AI-generated vulnerability reports has pushed open source maintainers to rethink bug bounties and tighten security disclosure processes.

Product

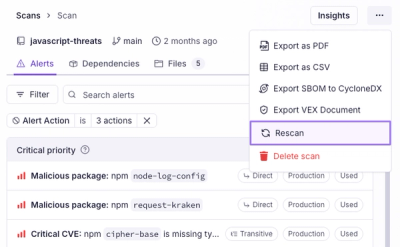

Scan results now load faster and remain consistent over time, with stable URLs and on-demand rescans for fresh security data.

Product

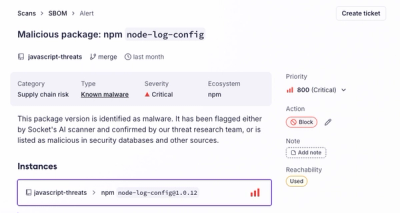

Socket's new Alert Details page is designed to surface more context, with a clearer layout, reachability dependency chains, and structured review.