Product

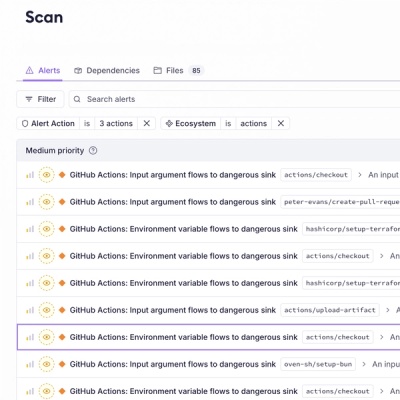

Introducing GitHub Actions Scanning Support

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

@henrygd/queue

Tiny async queue with concurrency control. Like p-limit or fastq, but smaller and faster. See comparisons and benchmarks below.

Create a queue with the `newQueue` function. Then add async functions (or promise-returning functions) to your queue with the `add` method.

You can use `queue.done()` to wait for the queue to be empty.

```js
import { newQueue } from '@henrygd/queue'

// create a new queue with a concurrency of 2
const queue = newQueue(2)

const pokemon = ['ditto', 'hitmonlee', 'pidgeot', 'poliwhirl', 'golem', 'charizard']

for (const name of pokemon) {
  queue.add(async () => {
    const res = await fetch(`https://pokeapi.co/api/v2/pokemon/${name}`)
    const json = await res.json()
    console.log(`${json.name}: ${json.height * 10}cm | ${json.weight / 10}kg`)
  })
}

console.log('running')
await queue.done()
console.log('done')
```
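To make the idea of concurrency control more concrete, here is a minimal sketch of a limiter that offers `add` and `done` with the behavior described above. It is not the source of `@henrygd/queue`; `sketchQueue`, `pending`, `idleWaiters`, and `demo` are hypothetical names chosen for illustration, and the real library is likely implemented differently.

```javascript
// Hypothetical sketch of a concurrency-limited queue; NOT the code of @henrygd/queue.
function sketchQueue(concurrency) {
  const pending = [] // tasks waiting for a free slot
  let active = 0 // tasks currently running
  let idleWaiters = [] // resolvers for callers of done()

  const next = () => {
    if (active >= concurrency || pending.length === 0) return
    const { fn, resolve, reject } = pending.shift()
    active++
    // Promise.resolve().then(fn) also turns a synchronous throw into a rejection
    Promise.resolve()
      .then(fn)
      .then(resolve, reject)
      .finally(() => {
        active--
        if (active === 0 && pending.length === 0) {
          // nothing running or waiting: wake everyone awaiting done()
          idleWaiters.forEach((wake) => wake())
          idleWaiters = []
        } else {
          next()
        }
      })
  }

  return {
    // add() settles with whatever the supplied function produces
    add: (fn) =>
      new Promise((resolve, reject) => {
        pending.push({ fn, resolve, reject })
        next()
      }),
    // done() resolves once nothing is running or waiting
    done: () =>
      active === 0 && pending.length === 0
        ? Promise.resolve()
        : new Promise((wake) => idleWaiters.push(wake)),
  }
}

// Usage: record the highest number of tasks that ever overlap.
async function demo() {
  const queue = sketchQueue(2)
  let running = 0
  let maxRunning = 0
  let finished = 0
  for (let i = 0; i < 5; i++) {
    queue.add(async () => {
      running++
      maxRunning = Math.max(maxRunning, running)
      await new Promise((r) => setTimeout(r, 10))
      running--
      finished++
    })
  }
  await queue.done()
  return { maxRunning, finished }
}

demo().then((result) => console.log(result)) // logs { maxRunning: 2, finished: 5 }
```

With a concurrency of 2, no more than two tasks overlap even though five are added at once, and `done()` resolves only after the last one finishes.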

The return value of queue.add is the same as the return value of the supplied function.

```js
const response = await queue.add(() =>
  fetch("https://pokeapi.co/api/v2/pokemon")
);

console.log(response.ok, response.status, response.headers);
```

`queue.all` is like `Promise.all` with concurrency control:

```js
import { newQueue } from "@henrygd/queue";

const queue = newQueue(2);

const tasks = ["ditto", "hitmonlee", "pidgeot", "poliwhirl"].map(
  (name) => async () => {
    const res = await fetch(`https://pokeapi.co/api/v2/pokemon/${name}`);
    return await res.json();
  }
);

// Process all tasks concurrently (limited by queue concurrency) and wait for all to complete
const results = await queue.all(tasks);

console.log(results); // [{ name: 'ditto', ... }, { name: 'hitmonlee', ... }, ...]
```

You can also mix existing promises and function wrappers.

```js
const existingPromise = fetch("https://pokeapi.co/api/v2/pokemon/ditto").then(
  (r) => r.json()
);

const results = await queue.all([
  existingPromise,
  () =>
    fetch("https://pokeapi.co/api/v2/pokemon/pidgeot").then((r) => r.json()),
]);
```

Note that only the wrapper functions are queued, since existing promises start running as soon as you create them.
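The difference between an existing promise and a wrapper can be seen without any queue at all. The sketch below uses a hypothetical `work` helper in place of a real request such as `fetch`, and records when each piece of work actually starts. The names `work`, `log`, and `finished` are illustrative only.

```javascript
const log = []

// Hypothetical helper standing in for real async work such as fetch()
const work = (label) => {
  log.push(`start ${label}`) // runs synchronously, the moment work() is called
  return new Promise((resolve) => setTimeout(() => resolve(label), 5))
}

const existingPromise = work('eager') // the work starts right here
const wrapper = () => work('deferred') // nothing runs until wrapper() is called

log.push('both created')

// Calling the wrapper is what finally starts the deferred work
const finished = Promise.all([existingPromise, wrapper()])

finished.then((results) => console.log(log, results))
// logs [ 'start eager', 'both created', 'start deferred' ] [ 'eager', 'deferred' ]
```

Because the eager work has already begun by the time it could be handed to a queue, a queue has nothing left to schedule for it; only the wrapper gives a queue control over when the work begins.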

> [!TIP]
> If you need support for Node's AsyncLocalStorage, import `@henrygd/queue/async-storage` instead.

```ts
/** Add an async function / promise wrapper to the queue */
queue.add<T>(promiseFunction: () => PromiseLike<T>): Promise<T>

/** Adds promises (or wrappers) to the queue and resolves like Promise.all */
queue.all<T>(promiseFunctions: Array<PromiseLike<T> | (() => PromiseLike<T>)>): Promise<T[]>

/** Returns a promise that resolves when the queue is empty */
queue.done(): Promise<void>

/** Empties the queue (active promises are not cancelled) */
queue.clear(): void

/** Returns the number of promises currently running */
queue.active(): number

/** Returns the total number of promises in the queue */
queue.size(): number
```

| Library | Version | Bundle size (B) | Weekly downloads |
|---|---|---|---|
| @henrygd/queue | 1.1.0 | 420 | hundreds :) |
| p-limit | 5.0.0 | 1,763 | 118,953,973 |
| async.queue | 3.2.5 | 6,873 | 53,645,627 |
| fastq | 1.17.1 | 3,050 | 39,257,355 |
| queue | 7.0.0 | 2,840 | 4,259,101 |
| promise-queue | 2.2.5 | 2,200 | 1,092,431 |

All libraries run the exact same test. Each operation measures how quickly the queue can resolve 1,000 async functions. The function just increments a counter and checks if it has reached 1,000.<sup>1</sup>

We check for completion inside the function so that promise-queue and p-limit are not penalized by having to use Promise.all (they don't provide a promise that resolves when the queue is empty).
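The shape of one benchmark operation, as described above, might look roughly like the following sketch. It is an assumption about the structure, not the actual benchmark code: `runOnce` and `unlimitedAdd` are hypothetical names, and `add` stands in for whatever enqueue function the library under test exposes.

```javascript
// `add` is a stand-in for the enqueue function of the library under test,
// for example a hypothetical (fn) => queue.add(fn).
function runOnce(add, total = 1000) {
  return new Promise((resolve) => {
    let count = 0
    const job = async () => {
      // completion is detected inside the job, so no Promise.all is needed
      if (++count === total) resolve(count)
    }
    for (let i = 0; i < total; i++) add(job)
  })
}

// Trivial stand-in scheduler with no concurrency limit, only to make the sketch runnable
const unlimitedAdd = (fn) => fn()

runOnce(unlimitedAdd).then((count) => console.log(count)) // logs 1000
```

Resolving from inside the job keeps the measurement the same for every library, whether or not it offers its own "queue is empty" promise.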

This test was run in Chromium. Chrome and Edge are the same. Firefox and Safari are slower and closer, with @henrygd/queue just edging out promise-queue. I think both are hitting the upper limit of what those browsers will allow.

You can run or tweak for yourself here: https://jsbm.dev/TKyOdie0sbpOh

Note: `p-limit` 6.1.0 now places between `async.queue` and `queue` in Node and Deno.

Ryzen 5 4500U | 8GB RAM | Node 22.3.0

Ryzen 7 6800H | 32GB RAM | Node 22.3.0

Note: `p-limit` 6.1.0 now places between `async.queue` and `queue` in Node and Deno.

Ryzen 5 4500U | 8GB RAM | Deno 1.44.4

Ryzen 7 6800H | 32GB RAM | Deno 1.44.4

Ryzen 5 4500U | 8GB RAM | Bun 1.1.17

Ryzen 7 6800H | 32GB RAM | Bun 1.1.17

Uses oha to make 1,000 requests to each worker. Each request creates a queue and resolves 5,000 functions.

This was run locally using Wrangler on a Ryzen 7 6800H laptop. Wrangler uses the same workerd runtime as workers deployed to Cloudflare, so the relative difference should be accurate. Here's the repository for this benchmark.

| Library | Requests/sec | Total (sec) | Average (sec) | Slowest (sec) |
|---|---|---|---|---|
| @henrygd/queue | 816.1074 | 1.2253 | 0.0602 | 0.0864 |
| promise-queue | 647.2809 | 1.5449 | 0.0759 | 0.1149 |
| fastq | 336.7031 | 3.0877 | 0.1459 | 0.2080 |
| async.queue | 198.9986 | 5.0252 | 0.2468 | 0.3544 |
| queue | 85.6483 | 11.6757 | 0.5732 | 0.7629 |
| p-limit | 77.7434 | 12.8628 | 0.6316 | 0.9585 |

@henrygd/semaphore - Fastest javascript inline semaphores and mutexes using async / await.

<sup>1</sup> In real applications, you may not be running so many jobs at once, and your jobs will take much longer to resolve, so performance will depend more on the jobs themselves.

FAQs

The npm package @henrygd/queue receives a total of 1,114 weekly downloads. As such, @henrygd/queue popularity was classified as popular.

We found that @henrygd/queue demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

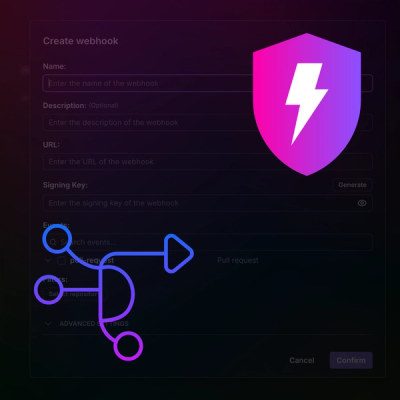

Product

Add real-time Socket webhook events to your workflows to automatically receive pull request scan results and security alerts in real time.

Research

The Socket Threat Research Team uncovered malicious NuGet packages typosquatting the popular Nethereum project to steal wallet keys.