@huggingface/gguf

A GGUF parser that works on remotely hosted files.

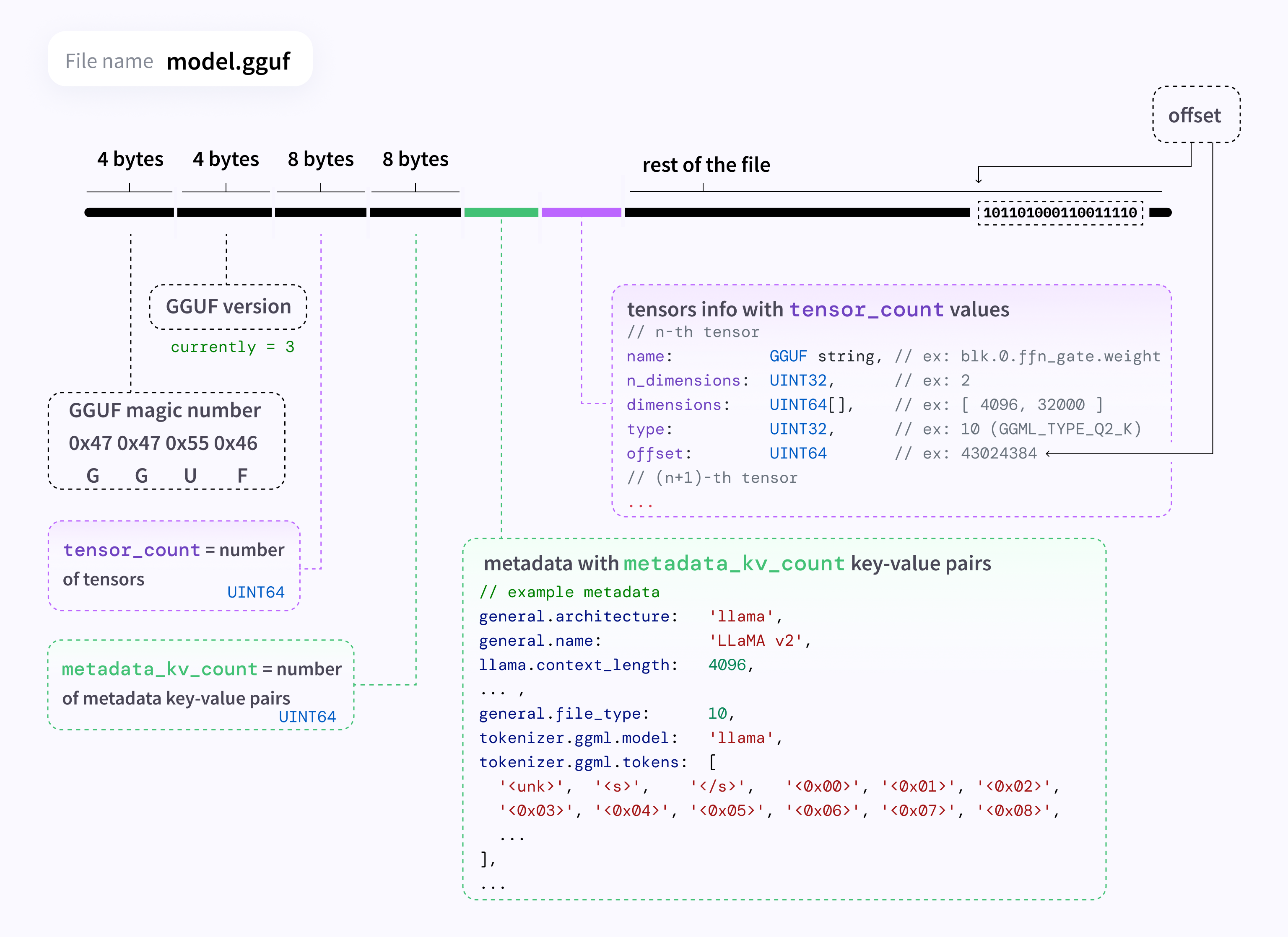

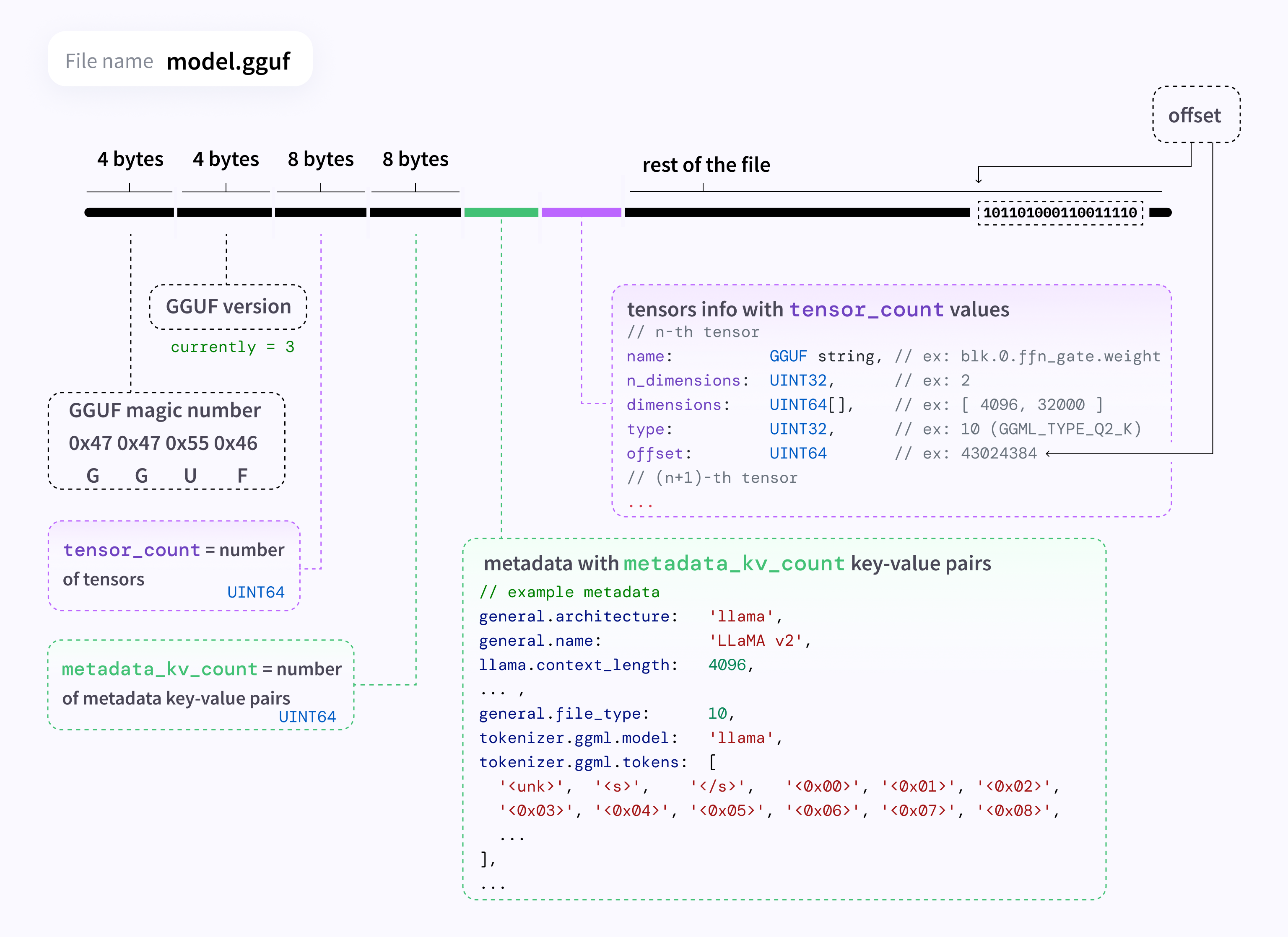

Spec

Spec: https://github.com/ggerganov/ggml/blob/master/docs/gguf.md

Reference implementation (Python): https://github.com/ggerganov/llama.cpp/blob/master/gguf-py/gguf/gguf_reader.py
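The spec defines a fixed little-endian header at the start of every GGUF file. It holds the 4-byte magic `GGUF`, a `uint32` version, and then `uint64` tensor and metadata key/value counts. This layout applies to version 2 and later; version 1 used 32-bit counts. Strings are stored as a `uint64` byte length followed by UTF-8 bytes. The sketch below reads the header and the first metadata key from an in-memory buffer. `parseHeader` and `readGGUFString` are illustrative names, not part of this package's API.

```typescript
// Minimal sketch of the GGUF (v2+) header layout, not this package's implementation.
interface GGUFHeader {
  version: number;
  tensorCount: bigint;
  kvCount: bigint;
  firstKey?: string;
}

const GGUF_MAGIC = 0x46554747; // bytes "G" "G" "U" "F" read as a little-endian uint32

// Reads a gguf_string_t: a uint64 byte length, then that many UTF-8 bytes.
function readGGUFString(view: DataView, offset: number): { value: string; next: number } {
  const len = Number(view.getBigUint64(offset, true));
  const start = view.byteOffset + offset + 8;
  const bytes = new Uint8Array(view.buffer, start, len);
  return { value: new TextDecoder().decode(bytes), next: offset + 8 + len };
}

function parseHeader(buf: ArrayBuffer): GGUFHeader {
  const view = new DataView(buf);
  if (view.getUint32(0, true) !== GGUF_MAGIC) {
    throw new Error("not a GGUF file (bad magic)");
  }
  const version = view.getUint32(4, true);
  if (version < 2) {
    throw new Error(`GGUF v${version} uses 32-bit counts; not handled in this sketch`);
  }
  const tensorCount = view.getBigUint64(8, true);
  const kvCount = view.getBigUint64(16, true);
  // Metadata key/value pairs start right after the 24-byte header, each beginning with its key string.
  const firstKey = kvCount > 0n ? readGGUFString(view, 24).value : undefined;
  return { version, tensorCount, kvCount, firstKey };
}
```

In the CLI dump further down, `version`, `tensor_count` and `kv_count` correspond to these three header fields.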

Install

npm install @huggingface/gguf

Usage

Basic usage

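import { gguf } from "@huggingface/gguf";

// Remote GGUF file from https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF, pinned to revision 191239b
const URL_LLAMA = "https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGUF/resolve/191239b/llama-2-7b-chat.Q2_K.gguf";

const { metadata, tensorInfos } = await gguf(URL_LLAMA);

console.log(metadata);    // key/value metadata, e.g. general.architecture
console.log(tensorInfos); // one entry per tensor, with its name, shape and data type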
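Parsing a remote file does not require downloading all of it. In a GGUF file, the header, metadata and tensor infos come before the tensor data. This means a client can request just a prefix of the file with an HTTP `Range` request. The sketch below assumes the server honors `Range` and answers `206 Partial Content`. `fetchPrefix`, `prefixRange` and the `FetchLike` type are hypothetical helpers, and this package's own chunking strategy may differ.

```typescript
// Minimal structural type so the sketch does not depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { headers: Record<string, string> },
) => Promise<{ status: number; arrayBuffer(): Promise<ArrayBuffer> }>;

// HTTP byte ranges are inclusive, so the first n bytes are 0..n-1.
function prefixRange(nBytes: number): string {
  if (!Number.isInteger(nBytes) || nBytes <= 0) {
    throw new RangeError("nBytes must be a positive integer");
  }
  return `bytes=0-${nBytes - 1}`;
}

// Fetches only the first nBytes of a remote file (hypothetical helper).
async function fetchPrefix(url: string, nBytes: number, fetchFn: FetchLike): Promise<ArrayBuffer> {
  const res = await fetchFn(url, { headers: { Range: prefixRange(nBytes) } });
  if (res.status !== 206 && res.status !== 200) {
    throw new Error(`unexpected HTTP status ${res.status}`);
  }
  const body = await res.arrayBuffer();
  // A server that ignores Range answers 200 with the whole file; keep only the prefix.
  return body.byteLength > nBytes ? body.slice(0, nBytes) : body;
}
```

With a real server, the built-in `fetch` can be passed directly, for example `await fetchPrefix(URL_LLAMA, 2_000_000, fetch)`. The 2 MB prefix size is an arbitrary guess. Large tokenizer vocabularies can push the metadata past it, so a robust parser would fetch further chunks on demand.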

Reading a local file

const { metadata, tensorInfos } = await gguf(
  "./my_model.gguf",
  { allowLocalFile: true }, // opt in to reading from the local filesystem
);

Strictly typed

By default, known fields in metadata are typed. This includes various fields found in llama.cpp, whisper.cpp and ggml.

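const { metadata, tensorInfos } = await gguf(URL_LLAMA);

if (metadata["general.architecture"] === "llama") {
  // OK: "llama.*" keys are typed once the architecture is narrowed to "llama"
  console.log(metadata["llama.attention.head_count"]);

  // TypeScript error: "mamba.*" keys do not exist on llama metadata
  console.log(metadata["mamba.ssm.conv_kernel"]);
}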
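The narrowing in the example above is ordinary TypeScript. When the metadata type is a union discriminated by the `general.architecture` literal, checking that key narrows the union to the matching member. After that, only that member's keys can be indexed. The types below are a hypothetical, heavily reduced model of this idea, not the package's actual type definitions.

```typescript
// Hypothetical, reduced metadata types; the real package defines many more keys.
type LlamaMetadata = {
  "general.architecture": "llama";
  "llama.attention.head_count": number;
};

type MambaMetadata = {
  "general.architecture": "mamba";
  "mamba.ssm.conv_kernel": number;
};

type ModelMetadata = LlamaMetadata | MambaMetadata;

// Each branch may only index keys of the narrowed member type.
function describeModel(metadata: ModelMetadata): string {
  if (metadata["general.architecture"] === "llama") {
    // Narrowed to LlamaMetadata here.
    return `llama with ${metadata["llama.attention.head_count"]} attention heads`;
  }
  // Only MambaMetadata remains, so this index type-checks.
  return `mamba with conv kernel ${metadata["mamba.ssm.conv_kernel"]}`;
}
```

If you write `metadata["mamba.ssm.conv_kernel"]` inside the `"llama"` branch, compilation fails. The README example above relies on this behavior.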

Disable strictly typed

Because the GGUF format can store arbitrary tensors, it can also be used for purposes other than models, for example storing control vectors or LoRA weights.

If you want to use your own GGUF metadata structure, you can disable strict typing by annotating the parse output as GGUFParseOutput<{ strict: false }>:

import { gguf, type GGUFParseOutput } from "@huggingface/gguf";

const { metadata, tensorInfos }: GGUFParseOutput<{ strict: false }> = await gguf(URL_LLAMA);
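Without strict typing, metadata values are no longer tied to known keys. Reading a custom key therefore calls for a runtime check. The helpers below are a hypothetical sketch. They assume non-strict metadata behaves like a `Record<string, unknown>` and that 64-bit integers may arrive as `bigint` rather than `number`. Key names such as `my_format.layer_count` are made up for illustration.

```typescript
// Hypothetical view of non-strict metadata: arbitrary keys, unknown value types.
type LooseMetadata = Record<string, unknown>;

// Reads an integer-valued key, accepting number or bigint, because 64-bit GGUF
// integers do not always fit in a JS number. Returns undefined otherwise.
function getInteger(metadata: LooseMetadata, key: string): bigint | undefined {
  const value = metadata[key];
  if (typeof value === "bigint") return value;
  if (typeof value === "number" && Number.isInteger(value)) return BigInt(value);
  return undefined;
}

// Reads a string-valued key, or undefined if it is absent or has another type.
function getString(metadata: LooseMetadata, key: string): string | undefined {
  const value = metadata[key];
  return typeof value === "string" ? value : undefined;
}
```

Normalizing integers to `bigint` keeps comparisons uniform, whichever representation the parser chose for a given value.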

Command line interface

This package provides a CLI equivalent to the gguf_dump.py script. You can dump the GGUF metadata and the list of tensors with this command:

npx @huggingface/gguf my_model.gguf

Example output:

* Dumping 36 key/value pair(s)
Idx | Count  | Value
----|--------|--------------------------------------------------
  1 |      1 | version = 3
  2 |      1 | tensor_count = 292
  3 |      1 | kv_count = 33
  4 |      1 | general.architecture = "llama"
  5 |      1 | general.type = "model"
  6 |      1 | general.name = "Meta Llama 3.1 8B Instruct"
  7 |      1 | general.finetune = "Instruct"
  8 |      1 | general.basename = "Meta-Llama-3.1"
[truncated]

* Dumping 292 tensor(s)
Idx | Num Elements | Shape              | Data Type | Name
----|--------------|--------------------|-----------|--------------------------
  1 |           64 | 64, 1, 1, 1        | F32       | rope_freqs.weight
  2 |    525336576 | 4096, 128256, 1, 1 | Q4_K      | token_embd.weight
  3 |         4096 | 4096, 1, 1, 1      | F32       | blk.0.attn_norm.weight
  4 |     58720256 | 14336, 4096, 1, 1  | Q6_K      | blk.0.ffn_down.weight
[truncated]
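The `Num Elements` column is the product of a tensor's dimensions. The dump pads every shape to four dimensions with trailing 1s, which leaves the product unchanged. For `token_embd.weight`, 4096 × 128256 = 525336576. The minimal sketch below assumes shapes are given as `bigint` arrays, since 64-bit dimensions could in principle exceed `Number.MAX_SAFE_INTEGER`. `numElements` is an illustrative helper, not a documented export.

```typescript
// Number of elements in a tensor: the product of its dimensions.
function numElements(shape: readonly bigint[]): bigint {
  return shape.reduce((acc, dim) => acc * dim, 1n);
}

// Padded shapes taken from the dump above; trailing 1s do not change the product.
const examples: Array<[string, bigint[]]> = [
  ["rope_freqs.weight", [64n, 1n, 1n, 1n]],
  ["token_embd.weight", [4096n, 128256n, 1n, 1n]],
  ["blk.0.ffn_down.weight", [14336n, 4096n, 1n, 1n]],
];

for (const [name, shape] of examples) {
  console.log(`${name}: ${numElements(shape)}`);
}
```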

Alternatively, you can install the package globally, which provides the gguf-view command:

npm i -g @huggingface/gguf
gguf-view my_model.gguf

Hugging Face Hub

The Hub supports all file formats and has built-in features for the GGUF format.

Find more information at http://hf.co/docs/hub/gguf

Acknowledgements & Inspirations

🔥❤️