Security News

Package Maintainers Call for Improvements to GitHub’s New npm Security Plan

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

@kwhinnery-openai/realtime-client


OpenAI Realtime API Client for JavaScript and TypeScript

This is a client library for OpenAI's Realtime API. Use it either on the server or in the browser to interact with Realtime models.

Install from npm:

npm i @openai/realtime-client

yarn add @openai/realtime-client

pnpm add @openai/realtime-client

Install from JSR:

deno add jsr:@openai/realtime-client

This client library helps initialize a connection to the OpenAI Realtime API over either WebRTC or a WebSocket. You can then send and receive typed events over the channel you selected. WebRTC is the default connection in environments that support it.
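The transport default described above can be illustrated with a small selection function. This is a hypothetical sketch, not part of the SDK: `chooseTransport` is an invented name, and it assumes that a global `RTCPeerConnection` is a reasonable signal for WebRTC support, which may not match the check the library actually performs.

```typescript
// Hypothetical sketch: NOT an export of @openai/realtime-client.
// Mirrors the documented default: WebRTC where available, WebSocket otherwise.
type Transport = "webrtc" | "websocket";

function chooseTransport(
  env: Record<string, unknown> = globalThis as unknown as Record<string, unknown>,
): Transport {
  // Browsers define RTCPeerConnection globally; Node and Deno do not by default,
  // so server-side code falls back to a WebSocket.
  return typeof env["RTCPeerConnection"] === "function" ? "webrtc" : "websocket";
}

console.log(chooseTransport({})); // websocket
console.log(chooseTransport({ RTCPeerConnection: class {} })); // webrtc
```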

Browser implementation

The following shows basic usage of the SDK in the browser. This code assumes that you have an API endpoint GET /session that returns an ephemeral OpenAI API token, which can be used to initialize the client SDK.

See below for more info on handling media in the browser.

import OpenAIRealtimeClient from "@openai/realtime-client";

// Client is a typed EventEmitter. It takes a URL where an ephemeral key
// can be fetched from; you can also pass `apiKey` directly if you need to
// fetch it another way.
const client = new OpenAIRealtimeClient({ apiKeyUrl: "/session" });

// Listen for server-sent events (typed in IDEs)
client.on("session.created", (e) => {
  // First server-sent event
  console.log(e);

  // Emit client-side events (typed in IDEs)
  client.send({
    type: "session.update",
    session: {
      /* session config */
    },
  });
});

// Start the Realtime session with default media handling
await client.start();

Check out the event reference to see all the available client and server events.
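Event typing of this kind is commonly built from a map of event names to payload types, so that handlers and payloads can be checked at compile time. The sketch below shows that general pattern with a hypothetical `TypedEmitter` class and a two-event map; it is not the SDK's implementation, and real event payloads carry more fields than shown here.

```typescript
// Hypothetical sketch of a typed event emitter; the SDK's own types are richer.
// Map each event name to its payload shape.
type ServerEvents = {
  "session.created": { type: "session.created"; event_id: string };
  "error": { type: "error"; error: { message: string } };
};

class TypedEmitter<Events extends Record<string, unknown>> {
  // Handlers are stored loosely; the public methods enforce the types.
  private handlers = new Map<keyof Events, Array<(event: any) => void>>();

  // The compiler checks that `handler` accepts the payload for `name`.
  on<K extends keyof Events>(name: K, handler: (event: Events[K]) => void): void {
    const list = this.handlers.get(name) ?? [];
    list.push(handler);
    this.handlers.set(name, list);
  }

  emit<K extends keyof Events>(name: K, event: Events[K]): void {
    for (const handler of this.handlers.get(name) ?? []) handler(event);
  }
}

const emitter = new TypedEmitter<ServerEvents>();
emitter.on("session.created", (e) => console.log(e.event_id)); // e is fully typed
emitter.emit("session.created", { type: "session.created", event_id: "evt_123" });
// Prints: evt_123
```

Misspelling an event name or reading a field that the payload type lacks becomes a compile-time error, which is what makes editor autocompletion possible.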

Token server implementation

A basic Node.js server implementing the /session endpoint might look like this (using the official OpenAI REST API client):

import OpenAI from "openai";
import Fastify from "fastify";

// Reads OPENAI_API_KEY from the environment
const client = new OpenAI();
const app = Fastify({ logger: true });

// Create the session on the developer's server with a standard API key and
// return it to the browser, which uses the ephemeral key it contains
app.get("/session", async (_req, _reply) => {
  const sessionConfig = {
    modalities: ["audio", "text"],
    /* ... realtime session config ... */
  };

  // Realtime sessions live under the `beta` namespace of the Node.js SDK
  const session = await client.beta.realtime.sessions.create(sessionConfig);

  // Fastify serializes an object returned from an async handler as JSON
  return session;
});

app.listen({ port: 3000 });
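The /session route above boils down to one authenticated REST call. As a minimal sketch without the `openai` package, assuming the beta endpoint `POST https://api.openai.com/v1/realtime/sessions` and a response that carries the ephemeral key in `client_secret.value`, it could look like this. `createEphemeralKey` and `FetchLike` are hypothetical names, and the fetch function is injected so the sketch can run without network access.

```typescript
// Hypothetical helper; the endpoint and response shape follow the beta
// Realtime sessions API and may change. Never expose `apiKey` to the browser.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface EphemeralKey {
  value: string; // short-lived token the browser hands to the client SDK
  expiresAt: number; // Unix timestamp in seconds
}

async function createEphemeralKey(
  apiKey: string,
  sessionConfig: Record<string, unknown>,
  fetchImpl: FetchLike,
): Promise<EphemeralKey> {
  const res = await fetchImpl("https://api.openai.com/v1/realtime/sessions", {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify(sessionConfig),
  });
  if (!res.ok) throw new Error(`Session creation failed: HTTP ${res.status}`);

  // The session resource nests the ephemeral key under client_secret.
  const session = (await res.json()) as {
    client_secret: { value: string; expires_at: number };
  };
  return { value: session.client_secret.value, expiresAt: session.client_secret.expires_at };
}
```

In Node.js 18 or later, the global `fetch` should satisfy `FetchLike`, so it can be passed directly. Keeping the standard API key on the server is the point of this design: the browser only ever sees the short-lived key.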

Middle tier implementation (Node.js or Deno)

For server-to-server use cases, you can also use a WebSocket interface and a regular OpenAI API key.

import OpenAIRealtimeClient from "@openai/realtime-client";

// Reads OPENAI_API_KEY from the system environment
const client = new OpenAIRealtimeClient();

client.on("session.created", (e) => {
  console.log(e);

  // Send a client event
  client.send({
    type: "session.update",
    session: {
      /* session config */
    },
  });
});

// WebSocket is the default in Node and Deno
await client.start();
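On the wire, the middle-tier connection is a WebSocket upgrade authenticated with the API key, after which events travel as JSON text frames. The hypothetical helpers below only assemble those pieces; they assume the beta endpoint `wss://api.openai.com/v1/realtime` with a `model` query parameter and an `OpenAI-Beta: realtime=v1` header, and the model name is a placeholder. The SDK may send different values.

```typescript
// Hypothetical helpers; endpoint, header, and model name are assumptions
// based on the beta Realtime API, not values taken from the SDK.
interface SocketRequest {
  url: string;
  headers: Record<string, string>;
}

function buildRealtimeSocketRequest(apiKey: string, model: string): SocketRequest {
  const url = new URL("wss://api.openai.com/v1/realtime");
  url.searchParams.set("model", model);
  return {
    url: url.toString(),
    headers: {
      // A standard API key is acceptable here because this code never runs in a browser.
      Authorization: `Bearer ${apiKey}`,
      "OpenAI-Beta": "realtime=v1",
    },
  };
}

// Client events are sent as JSON text frames with a `type` discriminator.
function encodeClientEvent(event: { type: string; [key: string]: unknown }): string {
  return JSON.stringify(event);
}

const req = buildRealtimeSocketRequest("sk-example", "gpt-4o-realtime-preview");
console.log(req.url); // wss://api.openai.com/v1/realtime?model=gpt-4o-realtime-preview
console.log(encodeClientEvent({ type: "session.update", session: { modalities: ["text"] } }));
// {"type":"session.update","session":{"modalities":["text"]}}
```

A WebSocket client that accepts custom headers, such as the `ws` package in Node.js (`new WebSocket(url, { headers })`), could consume the result.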

When using WebRTC in the browser to handle media streams (recommended), the SDK provides two modes of operation:

- Managed: the SDK captures input audio and plays back the model's audio for you, with controls exposed on the client.mediaManager object.
- Manual: you work directly with navigator.mediaDevices.* and the WebRTC peer connection.

Before jumping into how this works in the SDK, remember that using the SDK is not strictly necessary if you would rather use the WebRTC APIs in the browser directly. Check out the docs for more information on how that would work.
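For the direct route, the heart of the WebRTC setup is an SDP offer/answer exchange over HTTP. The sketch below assumes the beta flow from OpenAI's WebRTC guide: the browser POSTs its offer SDP to `https://api.openai.com/v1/realtime?model=...` with the ephemeral key as a bearer token and `Content-Type: application/sdp`, and the response body is the answer SDP. `exchangeSdp` and `SdpFetch` are hypothetical names; the browser-only steps appear as comments so the helper itself runs anywhere.

```typescript
// Hypothetical helper for the SDP offer/answer exchange. The endpoint and
// headers follow OpenAI's beta WebRTC guide and are assumptions here.
type SdpFetch = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; text(): Promise<string> }>;

async function exchangeSdp(
  offerSdp: string,
  ephemeralKey: string,
  model: string,
  fetchImpl: SdpFetch,
): Promise<string> {
  const url = `https://api.openai.com/v1/realtime?model=${encodeURIComponent(model)}`;
  const res = await fetchImpl(url, {
    method: "POST",
    headers: {
      Authorization: `Bearer ${ephemeralKey}`, // ephemeral key, never a standard API key
      "Content-Type": "application/sdp",
    },
    body: offerSdp,
  });
  if (!res.ok) throw new Error(`SDP exchange failed: HTTP ${res.status}`);
  return res.text(); // the answer SDP
}

// Browser-only steps around the helper (sketch; micTrack, key, model are placeholders):
//   const pc = new RTCPeerConnection();
//   pc.addTrack(micTrack);                              // microphone audio
//   const events = pc.createDataChannel("oai-events");  // JSON events, per OpenAI's guide
//   const offer = await pc.createOffer();
//   await pc.setLocalDescription(offer);
//   const answerSdp = await exchangeSdp(offer.sdp ?? "", key, model, fetch);
//   await pc.setRemoteDescription({ type: "answer", sdp: answerSdp });
```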

In the SDK, you can get more granular control over media streams and output devices (and opt out of the SDK's media manager entirely) by passing a function to the client.start method call. That function receives the peer connection object and can configure it:

import OpenAIRealtimeClient from "@openai/realtime-client";

// Can still use typed event emitters and init helpers
const client = new OpenAIRealtimeClient({ apiKeyUrl: "/session" });
client.on("session.created", (e) => {/* ... */});

// Request the microphone up front so the configuration callback can stay
// synchronous (`await` is not allowed inside a non-async function)
const ms = await navigator.mediaDevices.getUserMedia({ audio: true });

// Start the Realtime session with custom media stream handling
function configurePeerConnection(pc: RTCPeerConnection): void {
  // Add remote media stream: play the model's audio
  const audioElement = document.createElement("audio");
  audioElement.autoplay = true;
  pc.ontrack = (e) => {
    audioElement.srcObject = e.streams[0];
  };

  // Add local media stream: send microphone audio to the model
  pc.addTrack(ms.getTracks()[0], ms);
}

// Passing this handler opts out of the default media handling behavior
await client.start({ configurePeerConnection });
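On the output side, one way to pick a playback device in a custom setup is `HTMLMediaElement.setSinkId()`, which routes an element's audio to a device ID obtained from `navigator.mediaDevices.enumerateDevices()`. Browser support for `setSinkId` varies, so the hypothetical `routeAudioOutput` helper below feature-detects it and reports whether routing happened. It is typed structurally so it runs without DOM type definitions; you could call it with the `audioElement` from the example above.

```typescript
// Hypothetical helper; accepts any element-like object that may expose setSinkId.
interface SinkCapable {
  setSinkId?: (sinkId: string) => Promise<void>;
}

async function routeAudioOutput(element: SinkCapable, deviceId: string): Promise<boolean> {
  if (typeof element.setSinkId !== "function") {
    // This browser cannot select outputs; audio stays on the system default device.
    return false;
  }
  await element.setSinkId(deviceId);
  return true;
}
```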

The SDK provides basic media handling via client.mediaManager: it plays audio from the model through a managed HTML audio element, and uses the default audio input (if available) to stream audio to the model. If input devices change during the session, the SDK tries to keep an input device connected whenever one is available.
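The device-change behavior can be pictured as a selection policy rerun from a `devicechange` listener on `navigator.mediaDevices`. The sketch below is an assumed policy, not the media manager's actual code: keep the current microphone if it is still listed, otherwise prefer the entry whose ID is `"default"` (as Chromium-based browsers expose), otherwise take the first audio input. `pickInputDevice` and `DeviceInfo` are hypothetical names.

```typescript
// Hypothetical policy; fields mirror MediaDeviceInfo but are declared locally.
interface DeviceInfo {
  deviceId: string;
  kind: "audioinput" | "audiooutput" | "videoinput";
  label: string;
}

function pickInputDevice(devices: DeviceInfo[], currentId: string | null): string | null {
  const inputs = devices.filter((d) => d.kind === "audioinput");

  // Keep the current device if it survived the change.
  if (currentId !== null && inputs.some((d) => d.deviceId === currentId)) {
    return currentId;
  }

  // Otherwise prefer the browser's "default" entry, then any input at all.
  const preferred = inputs.find((d) => d.deviceId === "default") ?? inputs[0];
  return preferred ? preferred.deviceId : null; // null: nothing left to capture from
}
```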

The media manager provides a few basic controls:

- pauseInputAudio and resumeInputAudio: mute and unmute audio from the current input device while keeping the connection to the Realtime peer active.
- pauseOutputAudio and resumeOutputAudio: mute and unmute the audio element playing audio from the model. This only controls playback; the model continues to stream output until you stop it with other client-sent events, such as response.cancel.

Contributing

See CONTRIBUTING.md.

License

MIT

FAQs


The npm package @kwhinnery-openai/realtime-client receives a total of 0 weekly downloads. As such, its popularity was classified as not popular.

We found that @kwhinnery-openai/realtime-client demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

Maintainers back GitHub’s npm security overhaul but raise concerns about CI/CD workflows, enterprise support, and token management.

Product

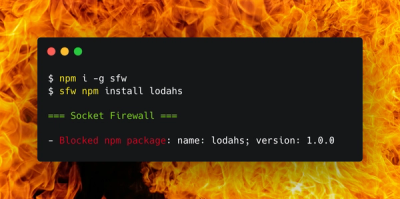

Socket Firewall is a free tool that blocks malicious packages at install time, giving developers proactive protection against rising supply chain attacks.

Research

Socket uncovers malicious Rust crates impersonating fast_log to steal Solana and Ethereum wallet keys from source code.