Security News

Another Round of TEA Protocol Spam Floods npm, But It’s Not a Worm

Recent coverage mislabels the latest TEA protocol spam as a worm. Here’s what’s actually happening.

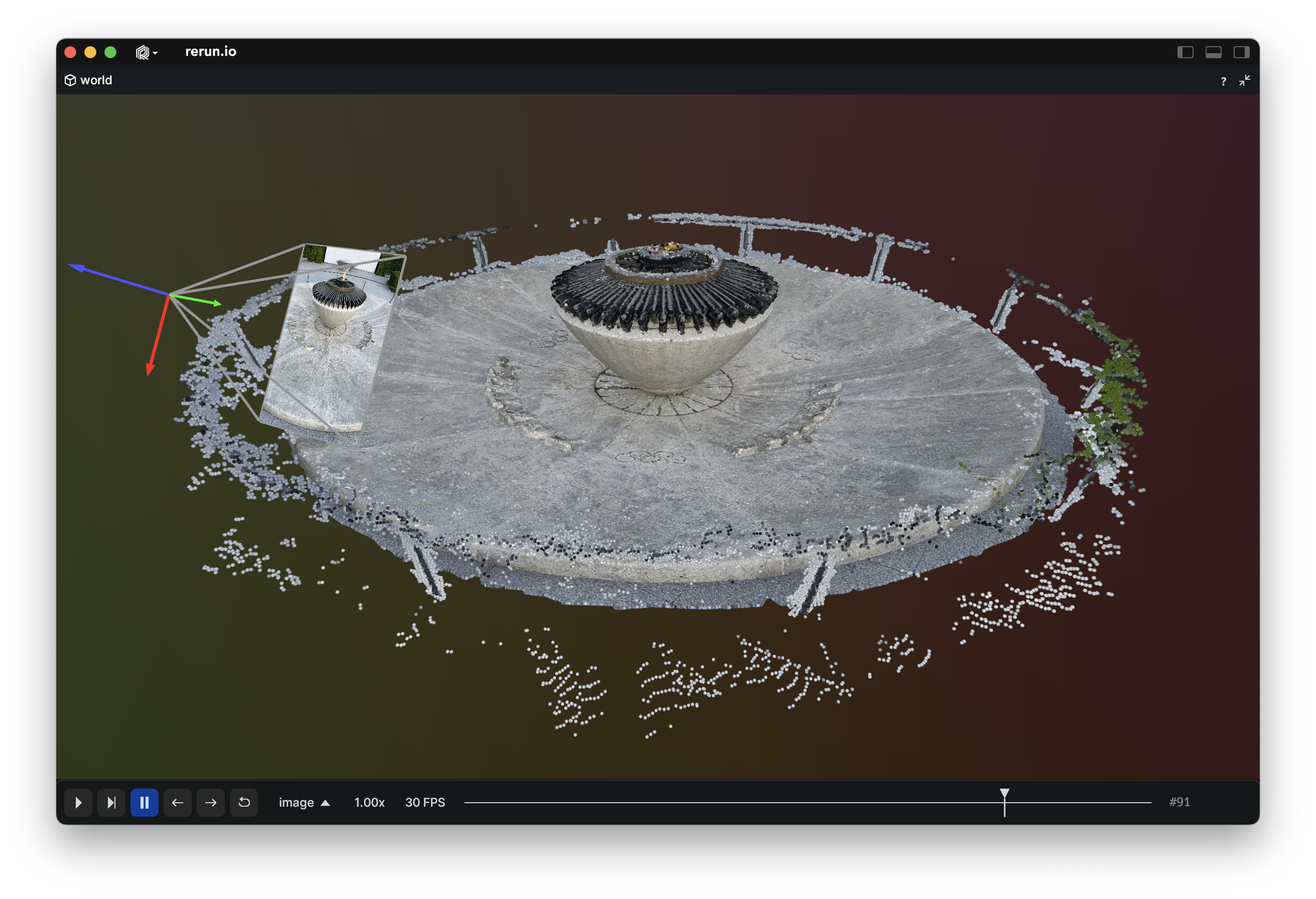

@rerun-io/web-viewer

Embed the Rerun web viewer within your app.

This package is framework-agnostic. A React wrapper is available at https://www.npmjs.com/package/@rerun-io/web-viewer-react.

$ npm i @rerun-io/web-viewer

ℹ️ Note:

The package version is equal to the supported Rerun SDK version, and RRD files are not yet stable across different versions.

This means that @rerun-io/web-viewer@0.10.0 can only connect to a data source (.rrd file, websocket connection, etc.) that originates from a Rerun SDK with version 0.10.0!
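The version rule above can be checked in application code before a data source is handed to the viewer. The following is a minimal sketch under stated assumptions: `isCompatible` and `assertCompatible` are hypothetical helpers that are not part of @rerun-io/web-viewer, and the caller is assumed to already know which SDK version produced the data.

```javascript
// Hypothetical helpers, not part of @rerun-io/web-viewer.
// The README states that the package version must equal the Rerun SDK
// version, so the check is a plain string comparison.
function isCompatible(viewerVersion, sdkVersion) {
  return viewerVersion.trim() === sdkVersion.trim();
}

// Throws a descriptive error when the two versions differ.
function assertCompatible(viewerVersion, sdkVersion) {
  if (!isCompatible(viewerVersion, sdkVersion)) {
    throw new Error(
      `@rerun-io/web-viewer@${viewerVersion} cannot read data produced by ` +
        `Rerun SDK ${sdkVersion}; install @rerun-io/web-viewer@${sdkVersion}.`
    );
  }
}

// Passes silently: both sides are 0.10.0.
assertCompatible("0.10.0", "0.10.0");
```

Failing early with a clear message is usually easier to debug than a viewer that cannot parse the stream.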

The web viewer is an object which manages a canvas element:

```javascript
import { WebViewer } from "@rerun-io/web-viewer";

const rrd = "…";
const parentElement = document.body;

const viewer = new WebViewer();
await viewer.start(rrd, parentElement, { width: "800px", height: "600px" });
// …
viewer.stop();
```

The rrd in the snippet above should be a URL pointing to either:

- An .rrd file, such as https://app.rerun.io/version/0.18.0/examples/dna.rrd
- A WebSocket connection opened through the serve API

If rrd is not set, the Viewer will display the same welcome screen as https://app.rerun.io.

This can be disabled by setting hide_welcome_screen to true in the options object of viewer.start.
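As an illustration, the options object could be assembled so that the welcome screen is hidden whenever no data source is given. This is a sketch only: `buildStartOptions` is a hypothetical helper, and treating an empty, null, or undefined `rrd` as "not set" is an assumption. The option names `hide_welcome_screen`, `width`, and `height` are the ones this README mentions.

```javascript
// Hypothetical helper, not part of @rerun-io/web-viewer.
// Returns an options object for viewer.start and hides the welcome
// screen when no rrd URL is supplied.
function buildStartOptions(rrd, size = { width: "800px", height: "600px" }) {
  const options = { ...size };
  if (!rrd) {
    // Assumption: an empty, null, or undefined rrd counts as "not set".
    options.hide_welcome_screen = true;
  }
  return options;
}

// Intended use with the viewer from the snippet above:
//   await viewer.start(rrd, parentElement, buildStartOptions(rrd));
```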

⚠ It's important to set the viewer's width and height; without them, the viewer may not display correctly. Setting the values to empty strings is valid, as long as you style the canvas through other means.

For a full example, see https://github.com/rerun-io/web-viewer-example. You can open the example via CodeSandbox: https://codesandbox.io/s/github/rerun-io/web-viewer-example

ℹ️ Note: This package only targets recent versions of browsers. If your target browser does not support Wasm imports or top-level await, you may need to install additional plugins for your bundler.
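A page can also check at runtime whether WebAssembly is available before mounting the viewer. The sketch below uses the standard `WebAssembly.validate` function on the smallest valid module. `supportsWasm` is a hypothetical helper, and it only covers runtime support: whether your bundler handles Wasm imports or top-level await still has to be solved in the bundler configuration.

```javascript
// Hypothetical helper, not part of @rerun-io/web-viewer.
// Returns true when the current runtime can validate a WebAssembly module.
function supportsWasm() {
  if (typeof WebAssembly !== "object" || typeof WebAssembly.validate !== "function") {
    return false;
  }
  // Smallest valid module: the "\0asm" magic number followed by version 1.
  const emptyModule = new Uint8Array([0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00]);
  return WebAssembly.validate(emptyModule);
}

if (!supportsWasm()) {
  console.warn("WebAssembly is unavailable; the Rerun web viewer cannot start here.");
}
```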

FAQs

Embed the Rerun web viewer in your app

The npm package @rerun-io/web-viewer receives a total of 2,169 weekly downloads and is therefore classified as popular.

We found that @rerun-io/web-viewer demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 2 open source maintainers collaborating on the project.
