@small-tech/site.js

Develop, test, and deploy your secure static or dynamic personal web site with zero configuration.

Site.js is a small personal web tool for Linux, macOS, and Windows 10.

Most tools today are built for startups and enterprises. Site.js is built for people.

Zero-configuration – It Just Works 🤞™.

Automatically provisions locally-trusted TLS for development (courtesy of mkcert seamlessly integrated via Nodecert).

Automatically provisions globally-trusted TLS for staging and production (courtesy of Let’s Encrypt seamlessly integrated via ACME TLS and systemd. Your server will score an A on the SSL Labs SSL Server Test.)

Supports static web sites, dynamic web sites written in JavaScript, and hybrid sites (via integrated Node.js and Express).

Can be used as a proxy server (via integrated http-proxy-middleware).

Supports WebSockets (via integrated express-ws, which itself wraps ws).

Supports DotJS (PHP-like simple routing for Node.js to quickly prototype and build dynamic sites).

And, for full flexibility, you can define your HTTPS and WebSocket (WSS) routes entirely in code in the traditional way for Express apps.

Live reload on static pages.

Automatic server reload when the source code of your dynamic routes changes.

Auto updates of production servers.

Note: Production use via startup daemon is only supported on Linux distributions with systemd.

Copy and paste the following commands into your terminal:

Before you pipe any script into your computer, always view the source code (Linux and macOS, Windows) and make sure you understand what it does.

wget -qO- https://sitejs.org/install | bash

curl -s https://sitejs.org/install | bash

iex(iwr -UseBasicParsing https://sitejs.org/install.txt).Content

npm i -g @small-tech/site.js

Any recent Linux distribution should work. However, Site.js is most thoroughly tested on Ubuntu 19.04/Pop!_OS 19.04 (development and staging) and Ubuntu 18.04 LTS (production) at Small Technology Foundation.

There are builds available for x64 and ARM (tested and supported on armv6l and armv7l only; tested on Raspberry Pi Zero W, 3B+, and 4B).

For production use, systemd is required.

macOS 10.14 Mojave and macOS 10.15 Catalina are supported (the latter as of Site.js 12.5.1).

Production use is not possible under macOS.

The current version of Windows 10 is supported with PowerShell running under Windows Terminal.

Windows Subsystem for Linux is not supported.

Production use is not possible under Windows.

Site.js tries to install the dependencies it needs seamlessly while running. That said, there are certain basic components it expects on a Linux-like system. These are:

sudo

libcap2-bin (we use setcap to escalate privileges on the binary as necessary)

For production use, passwordless sudo is required. On systems where the sudo configuration directory is set to /etc/sudoers.d, Site.js will automatically install this rule. On other systems, you might have to set it up yourself.

If it turns out that any of these prerequisites are a widespread reason for first-run breakage, we can look into having them installed automatically in the future. Please open an issue if any of these is an issue in your deployments or everyday usage.

Of course, you will need wget (or curl) installed to download the install script. You can install wget via your distribution’s package manager (e.g., sudo apt install wget on Ubuntu-like systems).

To seamlessly update the native binary if a newer version exists:

site update

This command will automatically restart a running Site.js daemon if one exists. If you are running Site.js as a regular process, it will continue to run and you will run the newer version the next time you launch a regular Site.js process.

Note: There is a bug in the semantic version comparison in the original release with the update feature (version 12.9.5) that will prevent upgrades between minor versions (i.e., between 12.9.5 and 12.10.x and beyond). This was fixed in version 12.9.6. If you’re still on 12.9.5 and you’re reading this after we’ve moved to 12.10.0 and beyond, please stop Site.js if it’s running and install the latest Site.js manually.
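The note above does not say what the bug was. One common way such comparisons go wrong is comparing version parts as strings, where 9 sorts after 10. As a minimal sketch of a numeric comparison (compareVersions is a hypothetical helper for illustration, not code from Site.js):

```javascript
// Hypothetical helper (not from Site.js): compares two major.minor.patch
// version strings part by part, as numbers. Returns -1, 0, or 1.
function compareVersions (a, b) {
  const partsA = a.split('.').map(Number)
  const partsB = b.split('.').map(Number)
  for (let i = 0; i < 3; i++) {
    const difference = (partsA[i] || 0) - (partsB[i] || 0)
    if (difference !== 0) return Math.sign(difference)
  }
  return 0
}

console.log(compareVersions('12.9.5', '12.10.0')) // -1: 12.10.0 is newer
console.log('12.9.5' < '12.10.0')                 // false: plain string comparison gets it wrong
```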

Production servers started with the enable command will automatically check for updates on first launch and then again at a set interval (currently every 5 hours) and update themselves as and when necessary.

This is a primary security feature given that Site.js is meant for use by individuals, not startups or enterprises with operations teams that can (in theory, at least) maintain servers with the latest updates.

To uninstall the native binary (and any created artifacts, like TLS certificates, systemd services, etc.):

site uninstall

Start serving the current directory at https://localhost as a regular process using locally-trusted certificates:

$ site

This is a shorthand for the full form of the serve command which, for the above example, is:

$ site serve . @localhost:443

To serve on a different port, just specify the port explicitly, as in the following example:

$ site @localhost:666

That, again, is shorthand for the full version of the command, which is:

$ site serve . @localhost:666

You can use Site.js as a development-time reverse proxy for HTTP and WebSocket connections. For example, if you use Hugo and you’re running hugo server on the default HTTP port 1313, you can run an HTTPS reverse proxy at https://localhost with LiveReload support using:

$ site :1313

Again, this is a convenient shortcut. The full form of this command is:

$ site serve :1313 @localhost:443

This will create and serve the following proxies: http://localhost:1313 at https://localhost and ws://localhost:1313 at wss://localhost.

Start serving the my-site directory at your hostname as a regular process using globally-trusted Let’s Encrypt certificates:

$ site my-site @hostname

Start serving http://localhost:1313 and ws://localhost:1313 at your hostname:

$ site :1313 @hostname

To set your hostname under macOS (e.g., to example.small-tech.org), run the following command:

$ sudo scutil --set HostName example.small-tech.org

On Windows 10, you must add quotation marks around @hostname and @localhost. So the first example, above, would be written in the following way on Windows 10:

$ site my-site "@hostname"

Also, Windows 10, unlike Linux and macOS, does not have the concept of a hostname. The closest thing to it is your full computer name. Setting your full computer name is a somewhat convoluted process so we’ve documented it here for you.

Say you want to set your hostname to my-windows-laptop.small-tech.org:

Set the computer name to your subdomain (my-windows-laptop) and enter your domain name (small-tech.org) in the Primary DNS suffix of this computer field.

Use a service like ngrok (Pro+) to point a custom domain name to your temporary staging server. Make sure you set your hostname file (e.g., in /etc/hostname or via hostnamectl set-hostname <hostname> or the equivalent for your platform) to match your domain name. The first time you hit your server via your hostname it will take a little longer to load as your Let’s Encrypt certificates are being automatically provisioned by ACME TLS.

When you start your server, it will run as a regular process. It will not be restarted if it crashes or if you exit the foreground process or restart the computer.

Site.js can also help you when you want to deploy your site to your live server with its sync feature. You can even have Site.js watch for changes and sync them to your server in real-time (e.g., if you want to live blog something or want to keep a page updated with local data you’re collecting from a sensor):

$ site my-demo --sync-to=my-demo.site

The above command will start a local development server at https://localhost. Additionally, it will watch the folder my-demo for changes and sync any changes to its contents via rsync over ssh to the host my-demo.site.

If you don’t want Site.js to start a server and you want to perform just a one-time sync, use the --exit-on-sync flag.

$ site my-demo --sync-to=my-demo.site --exit-on-sync

Without any customisations, the sync feature assumes that your account on your remote server has the same name as your account on your local machine and that the folder you are watching (my-demo, in the example above) is located at /home/your-account/my-demo on the remote server. Also, by default, the contents of the folder will be synced, not the folder itself. You can change these defaults by specifying a fully-qualified remote connection string as the --sync-to value.

The remote connection string has the format:

remoteAccount@host:/absolute/path/to/remoteFolder

For example:

$ site my-folder --sync-to=someOtherAccount@my-demo.site:/var/www

If you want to sync a different folder to the one you’re serving or if you’re running a proxy server (or if you just want to be as explicit as possible about your intent) you can use the --sync-from option to specify the folder to sync:

$ site :1313 --sync-from=public --sync-to=my-demo.site

(The above command will start a proxy server that forwards requests made to https://localhost on to http://localhost:1313 and returns the responses, and it will sync the folder called public to the host my-demo.site.)

If you want to sync not the folder’s contents but the folder itself, use the --sync-folder-and-contents flag. e.g.,

$ site my-local-folder --sync-to=me@my.site:my-remote-folder --sync-folder-and-contents

The above command will result in the following directory structure on the remote server: /home/me/my-remote-folder/my-local-folder. It also demonstrates that if you specify a relative folder, Site.js assumes you mean the folder exists in the home directory of the account on the remote server.
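The defaults described above (same account name, a folder under /home, relative paths resolved against the remote home directory) can be sketched as a small parser. This is an illustration only: parseSyncTo is a hypothetical helper, and the assumption that home directories live at /home/<account> comes from the example paths in this section, not from the Site.js source.

```javascript
const os = require('os')
const path = require('path')

// Hypothetical helper (not from Site.js): expands a --sync-to value of the
// form [account@]host[:path] using the defaults described above.
function parseSyncTo (syncTo, localFolder, localAccount = os.userInfo().username) {
  // Optional "account@" prefix; defaults to the local account name.
  const atIndex = syncTo.indexOf('@')
  const account = atIndex === -1 ? localAccount : syncTo.slice(0, atIndex)
  const hostAndPath = syncTo.slice(atIndex + 1)

  // Optional ":path" suffix; defaults to the name of the local folder.
  const colonIndex = hostAndPath.indexOf(':')
  const host = colonIndex === -1 ? hostAndPath : hostAndPath.slice(0, colonIndex)
  const givenPath = colonIndex === -1
    ? path.basename(path.resolve(localFolder))
    : hostAndPath.slice(colonIndex + 1)

  // Relative paths are assumed to live in the remote account's home directory.
  const remotePath = givenPath.startsWith('/')
    ? givenPath
    : path.posix.join('/home', account, givenPath)

  return { account, host, remotePath }
}

console.log(parseSyncTo('me@my.site:my-remote-folder', 'my-local-folder', 'aral'))
// { account: 'me', host: 'my.site', remotePath: '/home/me/my-remote-folder' }
```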

Available on Linux distributions with systemd (most Linux distributions, but not these ones or on macOS or Windows).

On your live, public server, you can start serving the my-site directory at your hostname as a daemon that is automatically run at system startup and restarted if it crashes with:

$ site enable my-site

The enable command sets up your server to start automatically when your server starts and restart automatically if it crashes. Requires superuser privileges on first run to set up the launch item.

For example, if you run the command on a connected server that has the ar.al domain pointing to it and ar.al set in /etc/hostname, you will be able to access the site at https://ar.al. (Yes, of course, ar.al runs on Site.js.) The first time you hit your live site, it will take a little longer to load as your Let’s Encrypt certificates are being automatically provisioned by ACME TLS.

The automatic TLS certificate provisioning will get certificates for the naked domain and the www subdomain. There is currently no option to add other subdomains. Also, please ensure that both the naked domain and the www subdomain are pointing to your server before you enable your server and hit it to ensure that the provisioning works. This is especially important if you are migrating an existing site.

When the server is enabled, you can also use the following commands:

start: Start server.

stop: Stop server.

restart: Restart server.

disable: Stop server and remove from startup.

logs: Display and tail server logs.

status: Display detailed server information (press ‘q’ to exit).

Site.js uses systemd to start and manage the daemon. Beyond the commands listed above that Site.js supports natively (and proxies to systemd), you can make use of all systemd functionality via the systemctl and journalctl commands.

Site.js is built using and supports the latest Node.js LTS (currently version 10.16.0; after October 22nd, 2019, we are scheduled to move to Node 12 LTS when it becomes the active branch).

# Clone and install.

mkdir site.js && cd site.js

git clone https://source.ind.ie/site.js/app.git

cd app

./install

# Run the app once (so that it can get your Node.js binary

# permission to bind to ports < 1024 on Linux – otherwise

# the tests will fail.)

bin/site.js test/site

# You should be able to see the site at https://localhost

# now. Press Ctrl+C to stop the server.

# Run unit tests.

npm test

Note: If you upgrade your Node.js binary, please run bin/site.js again before running the tests (or using Site.js as a module in your own app) so that it can get permissions for your Node.js binary to bind to ports < 1024. Otherwise, it will fail with Error: listen EACCES: permission denied 0.0.0.0:443.

After you install the source and run tests:

# Install the binary as a global module

npm i -g

# Serve the test site locally (visit https://localhost to view).

site test/site

Note: for commands that require root privileges (i.e., enable and disable), Site.js will automatically restart itself using sudo and Node must be available for the root account. If you’re using nvm, you can enable this via:

# Replace v10.16.3 with the version of node you want to make available globally.

sudo ln -s "$NVM_DIR/versions/node/v10.16.3/bin/node" "/usr/local/bin/node"

sudo ln -s "$NVM_DIR/versions/node/v10.16.3/bin/npm" "/usr/local/bin/npm"

After you install the source and run tests:

# Build the native binary for your platform.

# To build for all platforms, use npm run build -- --all

npm run build

# Serve the test site (visit https://localhost to view).

# e.g., to run the Linux binary:

dist/linux/12.8.0/site test/site

After you install the source and run tests:

npm run install-locally

You cannot currently cross-compile for ARM so you must build Site.js on an ARM device. The release build is built on a Raspberry Pi 3B+. Note that Aral had issues compiling it on a Pi 4. To build the Site.js binary (e.g., version 12.8.0) on an ARM device, do:

mkdir -p dist/linux-arm/12.8.0

node_modules/nexe/index.js bin/site.js --build --verbose -r package.json -r "bin/commands/*" -r "node_modules/le-store-certbot/renewal.conf.tpl" -r "node_modules/@ind.ie/nodecert/mkcert-bin/mkcert-v1.4.0-linux-arm" -o dist/linux-arm/12.8.0/site

You can then find the site binary in your dist/linux-arm/12.8.0/ folder. To install it, do:

sudo cp dist/linux-arm/12.8.0/site /usr/local/bin

# To cross-compile binaries for Linux (x64), macOS, and Windows

# and also copy them over to the Site.js web Site for deployment.

# (You will most likely not need to do this.)

npm run deploy

site [command] [folder|:port] [@host[:port]] [--options]

command: serve | enable | disable | start | stop | logs | status | update | uninstall | version | help

folder|:port: Path of folder to serve (defaults to current folder) or port on localhost to proxy.

@host[:port]: Host (and, optionally, port) to sync. Valid hosts are @localhost and @hostname.

--options: Settings that alter command behaviour.

Key: [] = optional, | = or

serve: Serve specified folder (or proxy specified :port) on specified @host (at :port, if given). The order of arguments is:

site serve my-folder @localhost

If a port (e.g., :1313) is specified instead of my-folder, start an HTTP/WebSocket proxy.

enable: Start server as daemon with globally-trusted certificates and add to startup.

disable: Stop server daemon and remove from startup.

start: Start server as daemon with globally-trusted certificates.

stop: Stop server daemon.

restart: Restart server daemon.

logs: Display and tail server logs.

status: Display detailed server information.

update: Check for Site.js updates and update if new version is found.

uninstall: Uninstall Site.js.

version: Display version and exit.

help: Display help screen and exit.

If command is omitted, behaviour defaults to serve.
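To make the shorthand rules concrete, here is a minimal sketch of how the positional arguments in the usage line could be interpreted. It is not the Site.js parser: parseSiteArguments is a hypothetical helper, the defaults shown are the ones documented for serve, and options starting with -- are simply skipped.

```javascript
// Command names taken from the list above.
const COMMANDS = [
  'serve', 'enable', 'disable', 'start', 'stop', 'restart',
  'logs', 'status', 'update', 'uninstall', 'version', 'help'
]

// Hypothetical helper (not from Site.js): interprets
// [command] [folder|:port] [@host[:port]] with serve-style defaults.
function parseSiteArguments (args) {
  const positional = args.filter(arg => !arg.startsWith('--'))
  const result = { command: 'serve', folder: '.', proxyPort: null, host: 'localhost', port: 443 }

  // The command is optional; if omitted, behaviour defaults to serve.
  if (COMMANDS.includes(positional[0])) result.command = positional.shift()

  for (const arg of positional) {
    if (arg.startsWith('@')) {
      // @host or @host:port
      const [host, port] = arg.slice(1).split(':')
      result.host = host
      if (port !== undefined) result.port = Number(port)
    } else if (arg.startsWith(':')) {
      // :port means "proxy this local port" instead of serving a folder.
      result.proxyPort = Number(arg.slice(1))
      result.folder = null
    } else {
      result.folder = arg
    }
  }
  return result
}

console.log(parseSiteArguments([':1313']))
// { command: 'serve', folder: null, proxyPort: 1313, host: 'localhost', port: 443 }
```

One limitation of this sketch is that a folder named after a command (for example, a folder called serve) would be misread as the command.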

serve and enable commands:

--aliases: Comma-separated list of additional domains to obtain TLS certificates for and respond to.

serve command:

--sync-to: The host to sync to.

--sync-from: The folder to sync from (only relevant if --sync-to is specified).

--exit-on-sync: Exit once the first sync has occurred (only relevant if --sync-to is specified). Useful in deployment scripts.

--sync-folder-and-contents: Sync folder and contents (default is to sync the folder’s contents only).

enable command:

--ensure-can-sync: Ensure server can rsync via ssh.

All command-line arguments are optional. By default, Site.js will serve your current working folder over port 443 with locally-trusted certificates.

When you serve a site at @hostname or use the enable command, globally-trusted Let’s Encrypt TLS certificates are automatically provisioned for you using ACME TLS the first time you hit your hostname. The hostname for the certificates is automatically set from the hostname of your system (and the www. subdomain is also automatically provisioned).

| Goal | Command |
|---|---|
| Serve current folder* | site |
| | site serve |
| | site serve . |
| | site serve . @localhost |
| | site serve . @localhost:443 |
| Serve folder demo (shorthand) | site demo |
| Serve folder demo on port 666 | site serve demo @localhost:666 |
| Proxy localhost:1313 to https://localhost* | site :1313 |
| | site serve :1313 @localhost:443 |
| Serve current folder, sync it to my.site* | site --sync-to=my.site |
| | site serve . @localhost:443 --sync-to=my.site |
| Serve demo folder, sync it to my.site | site serve demo --sync-to=my.site |
| Ditto, but use account me on my.site | site serve demo --sync-to=me@my.site |
| Ditto, but sync to remote folder ~/www | site serve demo --sync-to=me@my.site:www |
| Ditto, but specify absolute path | site serve demo --sync-to=me@my.site:/home/me/www |
| Sync current folder, proxy localhost:1313 | site serve :1313 --sync-from=. --sync-to=my.site |
| Sync current folder to my.site and exit | site --sync-to=my.site --exit-on-sync |
| Sync demo folder to my.site and exit* | site demo --sync-to=my.site --exit-on-sync |
| | site --sync-from=demo --sync-to=my.site --exit-on-sync |

| Goal | Command |
|---|---|
| Serve current folder | site @hostname |
| Serve current folder also at aliases | site @hostname --aliases=other.site,www.other.site |
| Serve folder demo* | site demo @hostname |
| | site serve demo @hostname |
| Proxy localhost:1313 to https://hostname | site serve :1313 @hostname |

| Goal | Command |

|---|---|

| Serve current folder as daemon | site enable |

| Ditto & also ensure it can rsync via ssh | site enable --ensure-can-sync |

| Get status of daemon | site status |

| Start server | site start |

| Stop server | site stop |

| Restart server | site restart |

| Display server logs | site logs |

| Stop current daemon | site disable |

| Goal | Command |

|---|---|

| Check for updates and update if found | site update |

* Alternative, equivalent forms listed (some commands have shorthands).

What if links never died? What if we never broke the Web? What if it didn’t involve any extra work? It’s possible. And, with Site.js, it’s effortless.

If you have a static archive of the previous version of your site, you can have Site.js automatically serve it for you. For example, if your site is being served from the my-site folder, just put the archive of your site into a folder named my-site-archive-1:

|- my-site

|- my-site-archive-1

If a path cannot be found in my-site, it will be served from my-site-archive-1.

And you’re not limited to a single archive (and hence the “cascade” bit in the name of the feature). As you have multiple older versions of your site, just add them to new folders and increment the archive index in the name. e.g., my-site-archive-2, my-site-archive-3, etc.

Paths in my-site will override those in my-site-archive-3 and those in my-site-archive-3 will, similarly, override those in my-site-archive-2 and so on.

What this means is that your old links will never die but, if you do replace them with newer content in newer versions, those will take precedence.

But what if the previous version of your site is a dynamic site and you either don’t want to lose the dynamic functionality or you simply cannot take a static backup? No worries. Just move it to a different subdomain or domain and make your 404s into 302s.

Site.js has native support for the 404 to 302 technique to ensure an evergreen web. Just serve the old version of your site (e.g., your WordPress site, etc.) from a different subdomain and tell Site.js to forward any unknown requests on your new static site to that subdomain so that all your existing links magically work.

To do so, create a simple file called 4042302 in the root directory of your web content and add the URL of the server that is hosting your older content. e.g.,

https://the-previous-version-of.my.site

You can chain the 404 → 302 method any number of times to ensure that none of your links ever break without expending any additional effort to migrate your content.
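As a rough sketch of the idea, a handler for unknown paths could read the URL from the 4042302 file and issue a 302 to the same path on the older server. The helper names below are hypothetical and the exact way Site.js builds the target URL is an assumption; response.redirect(status, url) is the standard Express call.

```javascript
// Hypothetical helper: builds the redirect target from the contents of the
// 4042302 file and the path (plus query string) that was not found.
function fallbackUrl (fileContents, requestUrl) {
  // Tolerate a trailing newline or trailing slashes in the file.
  const base = fileContents.trim().replace(/\/+$/, '')
  return base + requestUrl
}

// Hypothetical Express-style handler for requests that matched nothing.
function redirectUnknownRequest (request, response, fileContents) {
  response.redirect(302, fallbackUrl(fileContents, request.url))
}

console.log(fallbackUrl('https://the-previous-version-of.my.site\n', '/2012/hello?lang=en'))
// https://the-previous-version-of.my.site/2012/hello?lang=en
```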

For more information and examples, see 4042302.org.

You can specify a custom error page for 404 (not found) and 500 (internal server error) errors. To do so, create a folder with the status code you want off of the root of your web content (i.e., /404 and/or /500) and place at least an index.html file in the folder. You can also, optionally, put any assets you want to display on your error pages into those folders and load them in via relative URLs. Your custom error pages will be served with the proper error code and at the URL that was being accessed.

If you do not create custom error pages, the built-in default error pages will be displayed for 404 and 500 errors.

When creating your own servers (see API), you can generate the default error pages programmatically using the static methods Site.default404ErrorPage() and Site.default500ErrorPage(), passing in the missing path and the error message as the argument, respectively to get the HTML string of the error page returned.

You can specify routes with dynamic functionality by specifying HTTPS and WebSocket (WSS) routes in two ways: either using DotJS – a simple file system routing convention ala PHP, but for JavaScript – or through code in a routes.js file.

In either case, your dynamic routes go into a directory named .dynamic in the root of your site.

DotJS maps JavaScript modules in a file system hierarchy to routes on your web site in a manner that will be familiar to anyone who has ever used PHP.

The easiest way to get started with dynamic routes is to simply create a JavaScript file in a folder called .dynamic in the root folder of your site. Any routes added in this manner will be served via HTTPS GET.

For example, to have a dynamic route at https://localhost, create the following file:

.dynamic/

└ index.js

Inside index.js, all you need to do is to export your route handler:

let counter = 0

module.exports = (request, response) => {

response

.type('html')

.end(`

<h1>Hello, world!</h1>

<p>I’ve been called ${++counter} time${counter > 1 ? 's': ''} since the server started.</p>

`)

}

To test it, run a local server (site) and go to https://localhost. Refresh the page a couple of times to see the counter increase.

Congratulations, you’ve just made your first dynamic route using DotJS.

In the above example, index.js is special in that the file name is ignored and the directory that the file is in becomes the name of the route. In this case, since we put it in the root of our site, the route becomes /.

Usually, you will have more than just the index route (or your index route might be a static one). In those cases, you can either use directories with index.js files in them to name and organise your routes or you can use the names of .js files themselves as the route names. Either method is fine but you should choose one and stick to it in order not to confuse yourself later on (see Precedence, below).

So, for example, if you wanted to have a dynamic route that showed the server CPU load and free memory, you could create a file called .dynamic/server-stats.js in your web folder with the following content:

const os = require('os')

function serverStats (request, response) {

// os.loadavg() returns the 1, 5, and 15 minute load averages (not per-CPU values).
const loadAverages = `<ul>${os.loadavg().map((load, i) => `<li><strong>${['1', '5', '15'][i]}-minute average:</strong> ${load}</li>`).join('\n')}</ul>`

const freeMemory = `<p>${os.freemem()} bytes</p>`

const page = `<html><head><title>Server statistics</title><style>body {font-family: sans-serif;}</style></head><body><h1>Server statistics</h1><h2>Load averages</h2>${loadAverages}<h2>Free memory</h2>${freeMemory}</body></html>`

response

.type('html')

.end(page)

}

module.exports = serverStats

Site.js will load your dynamic route at startup and you can test it by hitting https://localhost/server-stats using a local web server. Each time you refresh, you should get the latest dynamic content.

Note: You could also have named your route .dynamic/server-stats/index.js and still hit it from https://localhost/server-stats. It’s best to keep to one or other convention (either using file names as route names or directory names as route names). Using both in the same app will probably confuse you (see Precedence, below).
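The two naming conventions can be summarised in a few lines of code. This is only a sketch of the mapping as described in this section (routeForFile is a hypothetical helper, and file paths are given relative to the .dynamic folder); it does not reproduce how Site.js actually loads routes.

```javascript
const path = require('path')

// Hypothetical helper: maps a route file (relative to .dynamic) to the URL
// path it would be served at under the DotJS naming conventions.
function routeForFile (relativeFilePath) {
  const { dir, name } = path.posix.parse(relativeFilePath)
  const segments = dir === '' ? [] : dir.split('/')
  // index.js takes the name of its directory; any other file adds its own name.
  if (name !== 'index') segments.push(name)
  return '/' + segments.join('/')
}

console.log(routeForFile('index.js'))              // /
console.log(routeForFile('server-stats.js'))       // /server-stats
console.log(routeForFile('server-stats/index.js')) // /server-stats
```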

Since Site.js contains Node.js, anything you can do with Node.js, you can do with Site.js, including using node modules and npm. To use custom node modules, initialise your .dynamic folder using npm init and use npm install. Once you’ve done that, any modules you require() from your DotJS routes will be properly loaded and used.

Say, for example, that you want to display a random ASCII Cow using the Cows module (because why not?) To do so, create a package.json file in your .dynamic folder (e.g., use npm init to create this interactively). Here’s a basic example:

{

"name": "random-cow",

"version": "1.0.0",

"description": "Displays a random cow.",

"main": "index.js",

"author": "Aral Balkan <mail@ar.al> (https://ar.al)",

"license": "AGPL-3.0-or-later"

}

Then, install the cows node module using npm:

npm i cows

This will create a directory called node_modules in your .dynamic folder and install the cows module (and any dependencies it may have) inside it. Now is also a good time to create a .gitignore file in the root of your web project and add the node_modules directory to it if you’re using Git for source control so that you do not end up accidentally checking in your node modules. Here’s how you would do this using the command-line on Linux-like systems:

echo 'node_modules' >> .gitignore

Now, let’s create the route. We want it reachable at https://localhost/cows (of course), so let’s put it in:

.dynamic/

└ cows

└ index.js

And, finally, here’s the code for the route itself:

const cows = require('cows')()

module.exports = function (request, response) {

// Math.floor keeps the index within 0 … cows.length - 1.
const randomCowIndex = Math.floor(Math.random() * cows.length)

const randomCow = cows[randomCowIndex]

function randomColor () {

const c = () => (Math.round(Math.random() * 63) + 191).toString(16)

return `#${c()}${c()}${c()}`

}

response

.type('html')

.end(`

<!doctype html>

<html lang='en'>

<head>

<meta charset='utf-8'>

<meta name='viewport' content='width=device-width, initial-scale=1.0'>

<title>Cows!</title>

<style>

html { font-family: sans-serif; color: darkgrey; background-color: ${randomColor()}; }

body {

display: grid; align-items: center; justify-content: center;

height: 100vh; vertical-align: top; margin: 0;

}

pre { font-size: 24px; color: ${randomColor()}; mix-blend-mode: difference;}

</style>

</head>

<body>

<pre>${randomCow}</pre>

</body>

</html>

`)

}

Now if you run site on the root of your web folder (the one that contains the .dynamic folder) and hit https://localhost/cows, you should get a random cow in a random colour every time you refresh.

If including HTML and CSS directly in your dynamic route makes you cringe, feel free to require your templating library of choice and move them to external files. As hidden folders (directories that begin with a dot) are ignored in the .dynamic folder and its subfolders, you can place any assets (HTML, CSS, images, etc.) into a directory that starts with a dot and load them in from there.

For example, if I wanted to move the HTML and CSS into their own files in the example above, I could create the following directory structure:

.dynamic/

└ cows

├ .assets

│ ├ index.html

│ └ index.css

└ index.js

For this example, I’m not going to use an external templating engine but will instead rely on the built-in template string functionality in JavaScript along with eval() (which is perfectly safe to use here as we are not processing external input).

So I move the HTML to the index.html file (and add a template placeholder for the CSS in addition to the existing random cow placeholder):

<!doctype html>

<html lang='en'>

<head>

<meta charset='utf-8'>

<meta name='viewport' content='width=device-width, initial-scale=1.0'>

<title>Cows!</title>

<style>${css}</style>

</head>

<body>

<pre>${randomCow}</pre>

</body>

</html>

And, similarly, I move the CSS to its own file, index.css:

html {

font-family: sans-serif;

color: darkgrey;

background-color: ${randomColor()};

}

body {

display: grid;

align-items: center;

justify-content: center;

height: 100vh;

vertical-align: top;

margin: 0;

}

pre {

font-size: 24px;

mix-blend-mode: difference;

color: ${randomColor()};

}

Then, finally, I modify my cows route to read in these two template files and to dynamically render them in response to requests. My index.js now looks like this:

// These are run when the server starts so sync calls are fine.

const fs = require('fs')

const cssTemplate = fs.readFileSync('cows/.assets/index.css')

const htmlTemplate = fs.readFileSync('cows/.assets/index.html')

const cows = require('cows')()

module.exports = function (request, response) {

// Math.floor keeps the index within 0 … cows.length - 1.
const randomCowIndex = Math.floor(Math.random() * cows.length)

const randomCow = cows[randomCowIndex]

function randomColor () {

const c = () => (Math.round(Math.random() * 63) + 191).toString(16)

return `#${c()}${c()}${c()}`

}

function render (template) {

return eval('`' + template + '`')

}

// We render the CSS template first…

const css = render(cssTemplate)

// … because the HTML template references the rendered CSS template.

const html = render(htmlTemplate)

response.type('html').end(html)

}

When you save this update, Site.js will automatically reload the server with your new code (version 12.9.7 onwards). When you refresh in your browser, you should see exactly the same behaviour as before.

As you can see, you can create quite a bit of dynamic functionality just by using DotJS with its most basic file-based routing mode. However, with this convention you are limited to GET routes. To use both GET and POST routes, you have to do a tiny bit more work, as explained in the next section.

If you need POST routes (e.g., you want to post form content back to the server) in addition to GET routes, the directory structure works a little differently. In this case, you have to create a .get directory for your GET routes and a .post directory for your post routes.

Otherwise, the naming and directory structure conventions work exactly as before.

So, for example, if you have the following directory structure:

site/

└ .dynamic/

├ .get/

│ └ index.js

└ .post/

└ index.js

Then a GET request for https://localhost will be routed to site/.dynamic/.get/index.js and a POST request for https://localhost will be routed to site/.dynamic/.post/index.js.

These two routes are enough to cover your needs for dynamic routes and form handling.

Site.js is not limited to HTTPS; it also supports secure WebSockets.

To define WebSocket (WSS) routes alongside HTTPS routes, modify your directory structure so it resembles the one below:

site/

└ .dynamic/

├ .https/

│ ├ .get/

│ │ └ index.js

│ └ .post/

│ └ index.js

└ .wss/

└ index.js

Note that all we’ve done is to move our HTTPS .get and .post directories under a .https directory and we’ve created a separate .wss directory for our WebSocket routes.
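The directory conventions introduced so far (.get and .post, optionally nested under .https, plus .wss) can be summarised as a small classifier. This is a sketch of the rules as described here, not the Site.js loader; classifyRoute is a hypothetical helper and paths are relative to the .dynamic folder.

```javascript
// Hypothetical helper: works out the protocol and HTTP method for a route
// file from the special directory it sits in.
function classifyRoute (relativeFilePath) {
  const segments = relativeFilePath.split('/')
  if (segments[0] === '.wss') return { protocol: 'wss', method: null }
  // The .https directory is only needed when .wss routes exist alongside it.
  if (segments[0] === '.https') segments.shift()
  if (segments[0] === '.post') return { protocol: 'https', method: 'POST' }
  // Files in .get, or placed directly in .dynamic, are GET routes.
  return { protocol: 'https', method: 'GET' }
}

console.log(classifyRoute('.https/.post/index.js')) // { protocol: 'https', method: 'POST' }
console.log(classifyRoute('.wss/index.js'))         // { protocol: 'wss', method: null }
```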

Here’s how you would implement a simple echo server that sends a copy of the message it receives from a client to that client:

module.exports = (client, request) => {

client.on('message', (data) => {

client.send(data)

})

}

You can also broadcast messages to all or a subset of connected clients. Here, for example, is a naïve single-room chat server implementation that broadcasts messages to all connected WebSocket clients (including the client that originally sent the message and any other clients that might be connected to different WebSocket routes on the same server):

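// A regular function (not an arrow function) is used so that `this` is available.

module.exports = function (currentClient, request) {

currentClient.on('message', message => {

this.getWss().clients.forEach(client => {

client.send(message)

})

})

}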

To test it out, run Site.js and then open up the JavaScript console in a couple of browser windows and enter the following code into them:

const socket = new WebSocket('wss://localhost/chat')

socket.onmessage = message => console.log(message.data)

socket.send('Hello!')

For a slightly more sophisticated example that doesn’t broadcast a client’s own messages to itself and selectively broadcasts to only the clients in the same “rooms”, see the Simple Chat example. And here’s a step-by-step tutorial that takes you through how to build it.

Here’s a simplified listing of the code for the server component of this example:

module.exports = function (client, request) {

// A new client connection has been made.

// Persist the client’s room based on the path in the request.

client.room = this.setRoom(request)

console.log(`New client connected to ${client.room}`)

client.on('message', message => {

// A new message has been received from a client.

// Broadcast it to every other client in the same room.

const numberOfRecipients = this.broadcast(client, message)

console.log(`${client.room} message broadcast to ${numberOfRecipients} recipient${numberOfRecipients === 1 ? '' : 's'}.`)

})

}
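
The listing above relies on two helpers, setRoom and broadcast, that it reaches through this, and their implementation is not shown here. The following is only a guess at the behaviour the comments describe, written as stand-alone functions over a collection of clients. The names roomFor and OPEN, and the idea of deriving the room from request.url, are assumptions for illustration rather than the Site.js source.

```javascript
// Hypothetical stand-ins for the helpers used above; not the Site.js source.

// Derive a room name from the request path, ignoring any query string
// (e.g. '/chat/lobby?x=1' becomes '/chat/lobby').
const roomFor = request => request.url.split('?')[0]

// readyState value for an open connection (WebSocket.OPEN).
const OPEN = 1

// Send message to every open client in the sender's room except the sender.
// Accepts an Array or a Set of clients and returns the number of recipients.
const broadcast = (clients, sender, message) => {
  let recipients = 0
  clients.forEach(client => {
    const sameRoom = client.room === sender.room
    if (client !== sender && sameRoom && client.readyState === OPEN) {
      client.send(message)
      recipients++
    }
  })
  return recipients
}
```

Checking readyState before sending avoids errors from clients that are in the middle of closing.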

DotJS should get you pretty far for simpler use cases, but if you need full flexibility in routing (to use regular expressions in defining route paths, for example, or for initialising global objects that need to survive for the lifetime of the server), simply define a routes.js in your .dynamic folder:

site/

└ .dynamic/

└ routes.js

The routes.js file should export a function that accepts a reference to the Express app created by Site.js and defines its routes on it. For example:

module.exports = app => {

// HTTPS route with a parameter called thing.

app.get('/hello/:thing', (request, response) => {

response

.type('html')

.end(`<h1>Hello, ${request.params.thing}!</h1>`)

})

// WebSocket route: echos messages back to the client that sent them.

app.ws('/echo', (client, request) => {

client.on('message', (data) => {

client.send(data)

})

})

}

When using the routes.js file, you can use all of the features in Express and our fork of express-ws (which itself wraps ws).
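
To illustrate the two use cases mentioned earlier (regular expressions in route paths and objects that outlive a single request), here is a small sketch of a routes.js. The /page-N path and the hits counter are hypothetical, and the sketch assumes Express 4 behaviour, where unnamed capture groups are exposed as request.params[0], request.params[1], and so on.

```javascript
// Hypothetical site/.dynamic/routes.js.
const defineRoutes = app => {
  // Created once when the routes are defined, so it survives across
  // requests until the server restarts.
  const hits = new Map()

  // Regular-expression path: matches /page-1, /page-42, etc.
  app.get(/^\/page-(\d+)$/, (request, response) => {
    const page = request.params[0]
    hits.set(page, (hits.get(page) || 0) + 1)
    response
      .type('text')
      .end(`Page ${page} has been requested ${hits.get(page)} time(s).`)
  })
}

module.exports = defineRoutes
```

Because Site.js reloads the server when your dynamic routes change, anything held only in memory like this is reset on each reload.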

If a dynamic route and a static route have the same name, the dynamic route will take precedence. So, for example, if you’re serving the following site:

site/

├ index.html

└ .dynamic/

└ index.js

When you hit https://localhost, you will get the dynamic route defined in index.js.

In the following scenario:

site/

└ .dynamic/

├ fun.html

└ fun/

└ index.js

The behaviour observed under Linux at the time of writing is that fun/index.js will have precedence and mask fun.html. Do not rely on this behaviour. The order of dynamic routes is based on a directory crawl and is not guaranteed to be the same in all future versions. For your peace of mind, please do not mix file-name-based and directory-name-based routing.

The routing conventions are mutually exclusive and are applied according to the following precedence rules:

1. Advanced routing based on routes.js.

2. DotJS with separate .https and .wss folders (precedence rules 3 and 4 are applied internally within the .https folder).

3. DotJS with separate .get and .post folders (HTTPS-only routing).

4. DotJS with GET-only routing.

So, if Site.js finds a routes.js file in the root of your site’s .dynamic folder, it will only use the routes from that file (it will not apply file-based routing).

If Site.js cannot find a routes.js file, it will look to see if separate .https and .wss folders have been defined (the existence of just one of these is enough) and attempt to load DotJS routes from those folders. (If it finds separate .get or .post folders within the .https folder, it will add the relevant routes from those folders; if it can’t, it will load GET-only routes from the .https folder and its subfolders.)

If separate .https and .wss folders do not exist, Site.js will expect all defined DotJS routes to be HTTPS and will initially look for separate .get and .post folders (the existence of either is enough to trigger this mode). If they exist, it will add the relevant routes from those folders and their subfolders.

Finally, if Site.js cannot find separate .get and .post folders either, it will assume that any DotJS routes it finds in the .dynamic folder are HTTPS GET routes and attempt to add them from there (and any subfolders).
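
The decision process described above can be summarised as a small function. This is a sketch of the rules as stated in this section, not the actual Site.js implementation; the function name routingMode and the returned labels are made up for illustration.

```javascript
// entries:      names found at the top level of the .dynamic folder.
// httpsEntries: names found at the top level of .dynamic/.https (if it exists).
const routingMode = (entries, httpsEntries = []) => {
  const hasGetOrPost = list => list.includes('.get') || list.includes('.post')

  // Rule 1: routes.js wins outright; file-based routing is not applied.
  if (entries.includes('routes.js')) return 'routes.js'

  // Rule 2: either .https or .wss is enough to trigger this mode.
  // Rules 3 and 4 are then applied inside the .https folder.
  if (entries.includes('.https') || entries.includes('.wss')) {
    return hasGetOrPost(httpsEntries) ? 'https+wss (get/post)' : 'https+wss (get-only)'
  }

  // Rule 3: either .get or .post is enough to trigger this mode.
  if (hasGetOrPost(entries)) return 'get/post'

  // Rule 4: everything in .dynamic is treated as an HTTPS GET route.
  return 'get-only'
}
```

For example, routingMode(['routes.js', '.get']) returns 'routes.js', because a routes.js file masks every file-based convention.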

Your dynamic web routes are running within Site.js, which is a Node application compiled into a native binary. Here is how the various common directories for Node.js apps will behave:

os.homedir(): (writable) This is the home folder of the account running Site.js. You can write to it to store persistent objects (e.g., save data).

os.tmpdir(): (writable) Path to the system temporary folder. Use for content you can afford to lose and can recreate (e.g., cache API calls).

.: (writable) Path to the root of your web content. Since you can write here, you can, if you want to, create content dynamically that will then automatically be served by the static web server.

__dirname: (writable) Path to the .dynamic folder.

/: (read-only) Path to the /usr folder (Site.js is installed in /usr/local/site). You should not have any reason to use this.
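
As an example of using one of the writable locations above, the following sketch keeps a small JSON cache in the system temporary folder. The file name my-site-cache.json and the helper names are hypothetical; only Node’s built-in fs, os, and path modules are used.

```javascript
const fs = require('fs')
const os = require('os')
const path = require('path')

// Hypothetical cache file in the system temporary folder.
const cacheFile = path.join(os.tmpdir(), 'my-site-cache.json')

// Returns the cached data, or null if the cache is missing or unreadable
// (in which case the caller should recreate the data).
const readCache = () => {
  try {
    return JSON.parse(fs.readFileSync(cacheFile, 'utf8'))
  } catch (error) {
    return null
  }
}

// Overwrites the cache with the given JSON-serialisable data.
const writeCache = data => fs.writeFileSync(cacheFile, JSON.stringify(data))
```

Treating a missing cache as a normal case matters here, since the operating system is free to clear its temporary folder.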

If you want to access the directory of Site.js itself (e.g., to load in the package.json to read the app’s version), you can use the following code:

const appPath = require.main.filename.replace('bin/site.js', '')

The code within your JavaScript routes is executed on the server. Exercise the same caution as you would when creating any Node.js app (sanitise input, etc.).
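
One concrete case of sanitising input: any value that comes from the request (such as the thing parameter in the /hello/:thing route shown earlier) should be escaped before it is placed into HTML. The helper below is a minimal sketch; escapeHtml and greeting are illustrative names, not part of Site.js or Express.

```javascript
// Replace the characters that have special meaning in HTML with entities.
// The ampersand must be handled first so that later entities are not double-escaped.
const escapeHtml = text => String(text)
  .replace(/&/g, '&amp;')
  .replace(/</g, '&lt;')
  .replace(/>/g, '&gt;')
  .replace(/"/g, '&quot;')
  .replace(/'/g, '&#39;')

// Example: building the greeting from an untrusted value.
const greeting = thing => `<h1>Hello, ${escapeHtml(thing)}!</h1>`
```

In a route, you would pass request.params.thing to greeting and send the result with response.type('html').end(...).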

You can also include Site.js as a Node module in your Node project. This section details the API you can use if you do that.

Site.js’s createServer method behaves like the built-in https module’s createServer function. Anywhere you use require('https').createServer, you can simply replace it with:

const Site = require('@small-tech/site.js')

new Site().createServer

options (object): see https.createServer. Populates the cert and key properties from the automatically-created nodecert or Let’s Encrypt certificates and will overwrite them if they exist in the options object you pass in. If your options has options.global = true set, globally-trusted TLS certificates are obtained from Let’s Encrypt using ACME TLS.

requestListener (function): see https.createServer. If you don’t pass a request listener, Site.js will use its default one.

Returns: https.Server instance, configured with either locally-trusted certificates via nodecert or globally-trusted ones from Let’s Encrypt.

const Site = require('@small-tech/site.js')

const express = require('express')

const app = express()

app.use(express.static('.'))

const options = {} // to use globally-trusted certificates instead, set this to {global: true}

const server = new Site().createServer(options, app).listen(443, () => {

console.log(` 🎉 Serving on https://localhost\n`)

})

Options is an optional parameter object that may contain the following properties, all optional:

path (string): the directory to serve using Express.static.

port (number): the port to serve on. Defaults to 443. (On Linux, privileges to bind to the port are automatically obtained for you.)

global (boolean): if true, globally-trusted Let’s Encrypt certificates will be provisioned (if necessary) and used via ACME TLS. If false (default), locally-trusted certificates will be provisioned (if necessary) and used via nodecert.

proxyPort (number): if provided, a proxy server will be created for the port (and path will be ignored).

Returns: Site instance.

Note: if you want to run the site on a port < 1024 on Linux, ensure your process has the necessary privileges to bind to such ports. E.g., use:

require('lib/ensure').weCanBindToPort(port, () => {

// You can safely bind to a ‘privileged’ port on Linux now.

})

callback (function): a function to be called when the server is ready. This parameter is optional. Default callbacks are provided for both regular and proxy servers.

Returns: https.Server instance, configured with either locally or globally-trusted certificates.

Serve the current directory at https://localhost using locally-trusted TLS certificates:

const Site = require('@small-tech/site.js')

const server = new Site().serve()

Serve the current directory at your hostname using globally-trusted Let’s Encrypt TLS certificates:

const Site = require('@small-tech/site.js')

const server = new Site().serve({global: true})

Start a proxy server to proxy local port 1313 at your hostname:

const Site = require('@small-tech/site.js')

const server = new Site().serve({proxyPort: 1313, global: true})

Site.js is Small Technology. The emphasis is on small. It is, by design, a zero-configuration tool for creating and hosting single-tenant web applications. It is for humans, by humans. It is non-commercial. (It is not for enterprises, it is not for “startups”, and it is definitely not for unicorns.) As such, any new feature requests will have to be both fit for purpose and survive a trial by fire to be considered.

Please file issues and submit pull requests on the Site.js GitHub Mirror.

For locally-trusted certificates, any missing dependencies are installed automatically if you have apt, pacman, or yum (untested) on Linux, or Homebrew or MacPorts (untested) on macOS.

I can use your help to test Site.js on the following platform/package manager combinations:

Please let me know how/if it works. Thank you!

thagoat for confirming that installation works on Arch Linux with Pacman.

Tim Knip for confirming that the module works with 64-bit Windows with the following behaviour: “Install pops up a windows dialog to allow adding the cert.”

Run Rabbit Run for the following information on 64-bit Windows: “Win64: works with the windows cert install popup on server launch. Chrome and ie are ok with the site then. FF 65 still throws the cert warning even after restarting.”


Did you know?

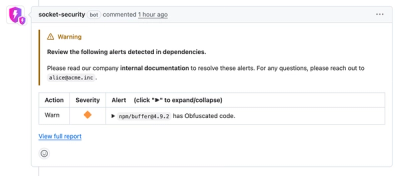

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Security News

The Rust Security Response WG is warning of phishing emails from rustfoundation.dev targeting crates.io users.

Product

Socket now lets you customize pull request alert headers, helping security teams share clear guidance right in PRs to speed reviews and reduce back-and-forth.

Product

Socket's Rust support is moving to Beta: all users can scan Cargo projects and generate SBOMs, including Cargo.toml-only crates, with Rust-aware supply chain checks.