Product

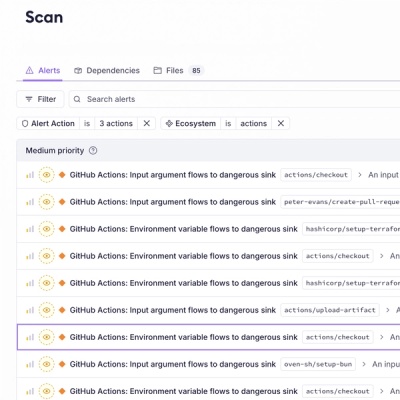

Introducing GitHub Actions Scanning Support

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

@vladmandic/human

Human: AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition, Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis, Age & Gender & Emotion Prediction, Gesture Recognition

AI-powered 3D Face Detection & Rotation Tracking, Face Description & Recognition,

Body Pose Tracking, 3D Hand & Finger Tracking, Iris Analysis,

Age & Gender & Emotion Prediction, Gaze Tracking, Gesture Recognition, Body Segmentation

Browser:

NodeJS:

@tensorflow/tfjs

Check out Simple Live Demo fully annotated app as a good starting point (html)(code)

Check out Main Live Demo app for advanced processing of webcam, video stream or static images with all possible tunable options

All browser demos are self-contained without any external dependencies

NodeJS demos may require extra dependencies which are used to decode inputs

See header of each demo to see its dependencies as they are not automatically installed with Human

node-canvas

ffmpeg

fswebcam

Human eventing to get notifications on processing

human by dispatching them to pool of pre-created worker processes

See issues and discussions for list of known limitations and planned enhancements

Suggestions are welcome!

Visit Examples gallery for more examples

All options as presented in the demo application...

demo/index.html

Results Browser:

[ Demo -> Display -> Show Results ]

468-Point Face Mesh Details:

(view in full resolution to see keypoints)

Simply load Human (IIFE version) directly from a cloud CDN in your HTML file:

(pick one: jsdelivr, unpkg or cdnjs)

<!DOCTYPE HTML>

<script src="https://cdn.jsdelivr.net/npm/@vladmandic/human/dist/human.js"></script>

<script src="https://unpkg.dev/@vladmandic/human/dist/human.js"></script>

<script src="https://cdnjs.cloudflare.com/ajax/libs/human/3.0.0/human.js"></script>

For details, including how to use Browser ESM version or NodeJS version of Human, see Installation

Simple app that uses Human to process video input and

draw output on screen using internal draw helper functions

// create instance of human with simple configuration using default values

const config = { backend: 'webgl' };

const human = new Human.Human(config);

// select input HTMLVideoElement and output HTMLCanvasElement from page

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

function detectVideo() {

// perform processing using default configuration

human.detect(inputVideo).then((result) => {

// result object will contain detected details

// as well as the processed canvas itself

// so lets first draw processed frame on canvas

human.draw.canvas(result.canvas, outputCanvas);

// then draw results on the same canvas

human.draw.face(outputCanvas, result.face);

human.draw.body(outputCanvas, result.body);

human.draw.hand(outputCanvas, result.hand);

human.draw.gesture(outputCanvas, result.gesture);

// and loop immediately to the next frame

requestAnimationFrame(detectVideo);

return result;

});

}

detectVideo();

or using async/await:

// create instance of human with simple configuration using default values

const config = { backend: 'webgl' };

const human = new Human(config); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

async function detectVideo() {

const result = await human.detect(inputVideo); // run detection

human.draw.all(outputCanvas, result); // draw all results

requestAnimationFrame(detectVideo); // run loop

}

detectVideo(); // start loop

or using Events:

// create instance of human with simple configuration using default values

const config = { backend: 'webgl' };

const human = new Human(config); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

human.events.addEventListener('detect', () => { // event gets triggered when detect is complete

human.draw.all(outputCanvas, human.result); // draw all results

});

function detectVideo() {

human.detect(inputVideo) // run detection

.then(() => requestAnimationFrame(detectVideo)); // upon detect complete start processing of the next frame

}

detectVideo(); // start loop

or using interpolated results for smooth video processing by separating detection and drawing loops:

const human = new Human(); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

let result;

async function detectVideo() {

result = await human.detect(inputVideo); // run detection

requestAnimationFrame(detectVideo); // run detect loop

}

async function drawVideo() {

if (result) { // check if result is available

const interpolated = human.next(result); // get smoothened result using last-known results

human.draw.all(outputCanvas, interpolated); // draw the frame

}

requestAnimationFrame(drawVideo); // run draw loop

}

detectVideo(); // start detection loop

drawVideo(); // start draw loop

or same, but using built-in full video processing instead of running manual frame-by-frame loop:

const human = new Human(); // create instance of Human

const inputVideo = document.getElementById('video-id');

const outputCanvas = document.getElementById('canvas-id');

async function drawResults() {

const interpolated = human.next(); // get smoothened result using last-known results

human.draw.all(outputCanvas, interpolated); // draw the frame

requestAnimationFrame(drawResults); // run draw loop

}

human.video(inputVideo); // start detection loop which continuously updates results

drawResults(); // start draw loop

or using built-in webcam helper methods that take care of video handling completely:

const human = new Human(); // create instance of Human

const outputCanvas = document.getElementById('canvas-id');

async function drawResults() {

const interpolated = human.next(); // get smoothened result using last-known results

human.draw.canvas(human.webcam.element, outputCanvas); // draw current webcam frame (input first, output canvas second)

human.draw.all(outputCanvas, interpolated); // draw the frame detection results

requestAnimationFrame(drawResults); // run draw loop

}

await human.webcam.start({ crop: true });

human.video(human.webcam.element); // start detection loop which continuously updates results

drawResults(); // start draw loop
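The interpolated-result examples above draw more often than they detect, so each drawn frame has to blend the last known detection toward the newest one. As a rough illustration of that idea only, here is a minimal sketch of exponential smoothing over keypoints. The function name `smoothKeypoints`, the `[x, y]` keypoint layout, and the `factor` parameter are assumptions made for this example; this is not how `human.next()` is implemented internally.

```javascript
// Illustrative sketch only: generic keypoint smoothing, NOT Human's internal algorithm.
// `smoothKeypoints`, the [[x, y], ...] layout and `factor` are hypothetical names.

/**
 * Move previously smoothed keypoints part of the way toward the latest detection.
 * @param {number[][]|null} previous last smoothed keypoints, or null on the first frame
 * @param {number[][]} detected latest detected keypoints
 * @param {number} factor value in 0..1; 1 jumps straight to the detection
 * @returns {number[][]} new smoothed keypoints (inputs are not mutated)
 */
function smoothKeypoints(previous, detected, factor = 0.5) {
  // without a comparable previous frame there is nothing to blend, so copy the detection
  if (!previous || previous.length !== detected.length) {
    return detected.map((point) => [...point]);
  }
  return detected.map((point, i) =>
    point.map((value, j) => previous[i][j] + (value - previous[i][j]) * factor));
}

// usage: call once per drawn frame with the most recent detection
let smoothed = null;
smoothed = smoothKeypoints(smoothed, [[0, 0]]);   // first frame: copied as-is
smoothed = smoothKeypoints(smoothed, [[10, 20]]); // second frame: halfway to the new detection
```

A smaller `factor` gives steadier output at the cost of more lag behind the real motion, which is the usual trade-off when detection runs slower than drawing.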

And for even better results, you can run detection in a separate web worker thread

Human library can process all known input types:

Image, ImageData, ImageBitmap, Canvas, OffscreenCanvas, Tensor, HTMLImageElement, HTMLCanvasElement, HTMLVideoElement, HTMLMediaElement

Additionally, HTMLVideoElement, HTMLMediaElement can be a standard <video> tag that links to:

.mp4, .avi, etc.

hls.js or DASH (Dynamic Adaptive Streaming over HTTP) using dash.js

Human is written using TypeScript strong typing and ships with full TypeDefs for all classes defined by the library bundled in types/human.d.ts and enabled by default

Note: This does not include embedded tfjs

If you want to use embedded tfjs inside Human (human.tf namespace) and still get full typedefs, add this code:

import type * as tfjs from '@vladmandic/human/dist/tfjs.esm';

const tf = human.tf as typeof tfjs;

This is not enabled by default as Human does not ship with full TFJS TypeDefs due to size considerations

Enabling tfjs TypeDefs as above creates additional project (dev-only as only types are required) dependencies as defined in @vladmandic/human/dist/tfjs.esm.d.ts:

@tensorflow/tfjs-core, @tensorflow/tfjs-converter, @tensorflow/tfjs-backend-wasm, @tensorflow/tfjs-backend-webgl

Default models in Human library are:

Note that alternative models are provided and can be enabled via configuration

For example, body pose detection by default uses MoveNet Lightning, but can be switched to MoveNet Thunder for higher precision or MoveNet MultiPose for multi-person detection or even PoseNet, BlazePose or EfficientPose depending on the use case

For more info, see Configuration Details and List of Models
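To make the model switch described above concrete, here is a minimal sketch of building a configuration that selects a different body model. The `body.modelPath` option and the model file names are assumptions based on how the project's configuration is commonly documented; confirm the exact option and file names against Configuration Details and List of Models before relying on them. The helper `withBodyModel` is a hypothetical name introduced only for this example.

```javascript
// Assumption: Human reads the body model from `config.body.modelPath`
// and the file names below match the published models; verify before use.
const BODY_MODELS = {
  lightning: 'movenet-lightning.json', // default per the text above
  thunder: 'movenet-thunder.json',     // higher precision
  multipose: 'movenet-multipose.json', // multi-person detection
};

// Hypothetical helper: returns a new config with the chosen body model,
// leaving the base config object untouched.
function withBodyModel(baseConfig, variant) {
  const modelPath = BODY_MODELS[variant];
  if (!modelPath) throw new Error(`Unknown body model variant: ${variant}`);
  return {
    ...baseConfig,
    body: { ...(baseConfig.body || {}), enabled: true, modelPath },
  };
}

const config = withBodyModel({ backend: 'webgl' }, 'thunder');
// pass `config` to the Human constructor as in the earlier examples
```

Keeping the variant names in one lookup table means a typo fails loudly at configuration time instead of surfacing later as a model load error.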

Human library is written in TypeScript 5.1 using TensorFlow/JS 4.10 and conforming to latest JavaScript ECMAScript version 2022 standard

Build target for distributables is JavaScript ECMAScript version 2018

For details see Wiki Pages

and API Specification

FAQs

The npm package @vladmandic/human receives a total of 9,662 weekly downloads. As such, @vladmandic/human popularity was classified as popular.

We found that @vladmandic/human demonstrated a healthy version release cadence and project activity because the last version was released less than a year ago. It has 1 open source maintainer collaborating on the project.

Did you know?

Socket for GitHub automatically highlights issues in each pull request and monitors the health of all your open source dependencies. Discover the contents of your packages and block harmful activity before you install or update your dependencies.

Product

Detect malware, unsafe data flows, and license issues in GitHub Actions with Socket’s new workflow scanning support.

Product

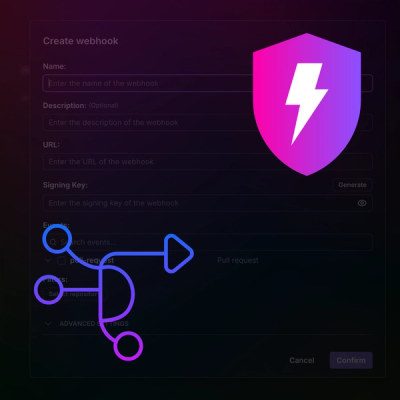

Add real-time Socket webhook events to your workflows to automatically receive pull request scan results and security alerts in real time.

Research

The Socket Threat Research Team uncovered malicious NuGet packages typosquatting the popular Nethereum project to steal wallet keys.